Nicholas Gisolfi

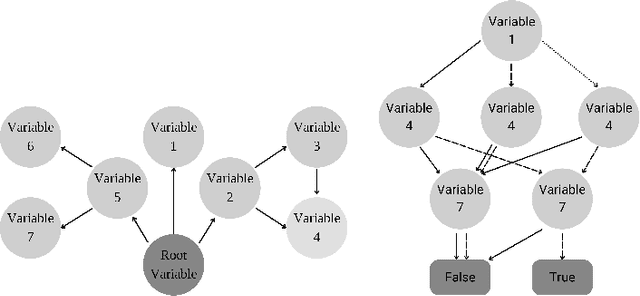

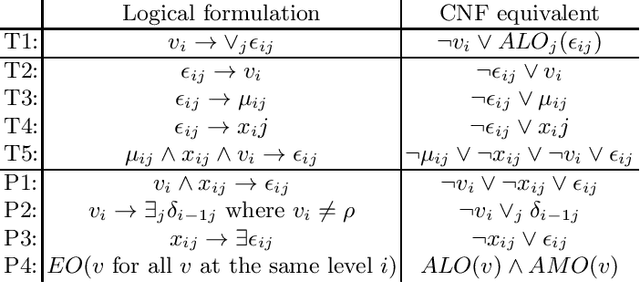

A SAT-based approach to rigorous verification of Bayesian networks

Aug 02, 2024

Abstract:Recent advancements in machine learning have accelerated its widespread adoption across various real-world applications. However, in safety-critical domains, the deployment of machine learning models is riddled with challenges due to their complexity, lack of interpretability, and absence of formal guarantees regarding their behavior. In this paper, we introduce a verification framework tailored for Bayesian networks, designed to address these drawbacks. Our framework comprises two key components: (1) a two-step compilation and encoding scheme that translates Bayesian networks into Boolean logic literals, and (2) formal verification queries that leverage these literals to verify various properties encoded as constraints. Specifically, we introduce two verification queries: if-then rules (ITR) and feature monotonicity (FMO). We benchmark the efficiency of our verification scheme and demonstrate its practical utility in real-world scenarios.

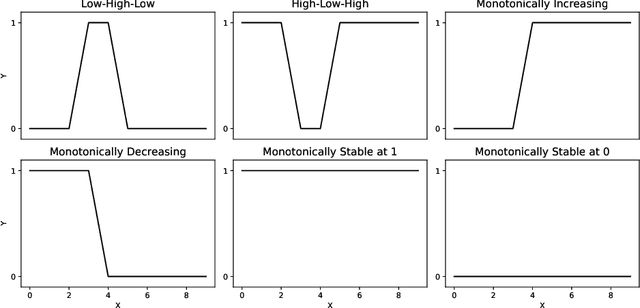

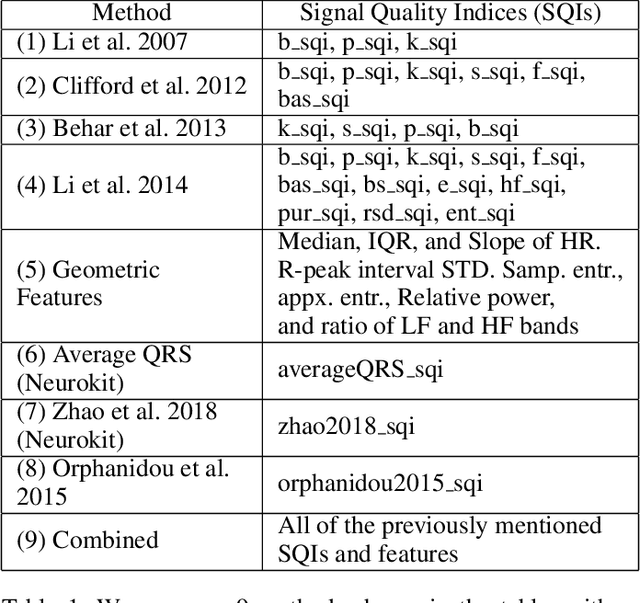

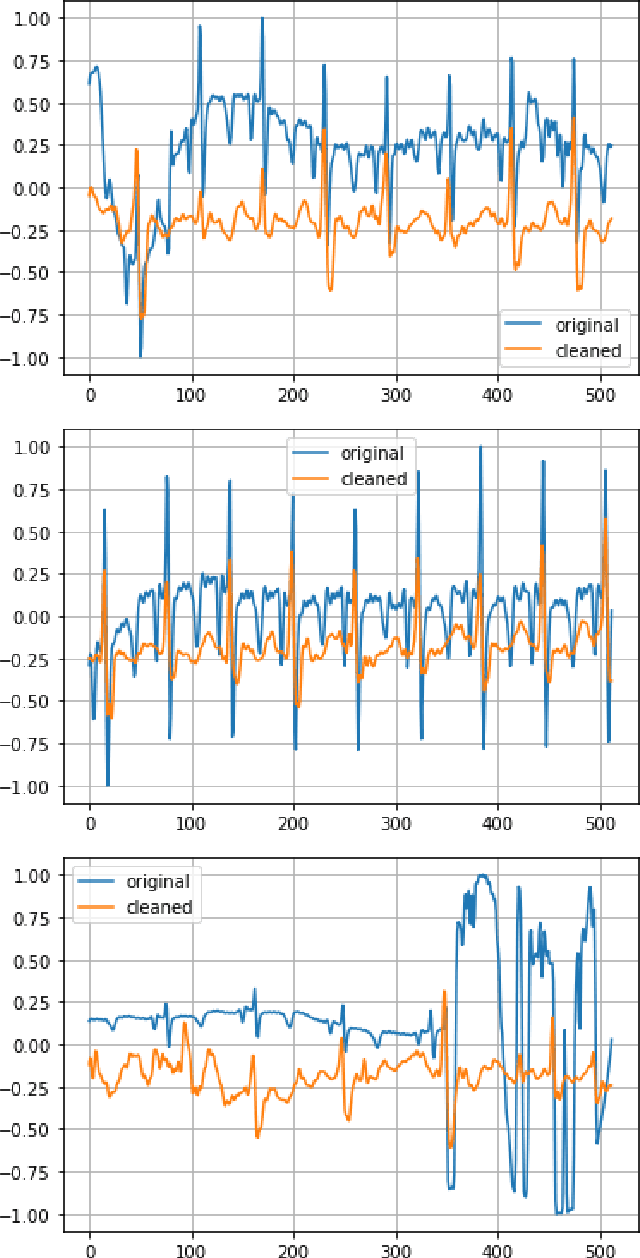

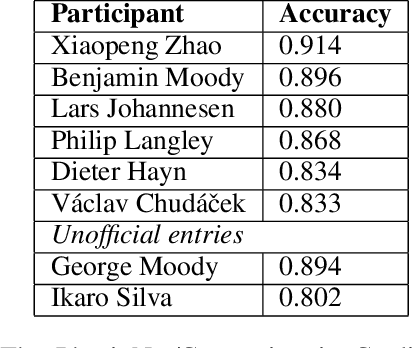

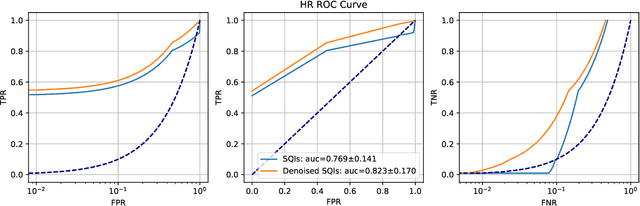

Signal Quality Auditing for Time-series Data

Feb 01, 2024

Abstract:Signal quality assessment (SQA) is required for monitoring the reliability of data acquisition systems, especially in AI-driven Predictive Maintenance (PMx) application contexts. SQA is vital for addressing "silent failures" of data acquisition hardware and software, which when unnoticed, misinform the users of data, creating the risk for incorrect decisions with unintended or even catastrophic consequences. We have developed an open-source software implementation of signal quality indices (SQIs) for the analysis of time-series data. We codify a range of SQIs, demonstrate them using established benchmark data, and show that they can be effective for signal quality assessment. We also study alternative approaches to denoising time-series data in an attempt to improve the quality of the already degraded signal, and evaluate them empirically on relevant real-world data. To our knowledge, our software toolkit is the first to provide an open source implementation of a broad range of signal quality assessment and improvement techniques validated on publicly available benchmark data for ease of reproducibility. The generality of our framework can be easily extended to assessing reliability of arbitrary time-series measurements in complex systems, especially when morphological patterns of the waveform shapes and signal periodicity are of key interest in downstream analyses.

Provably Robust Model-Centric Explanations for Critical Decision-Making

Oct 26, 2021

Abstract:We recommend using a model-centric, Boolean Satisfiability (SAT) formalism to obtain useful explanations of trained model behavior, different and complementary to what can be gleaned from LIME and SHAP, popular data-centric explanation tools in Artificial Intelligence (AI). We compare and contrast these methods, and show that data-centric methods may yield brittle explanations of limited practical utility. The model-centric framework, however, can offer actionable insights into risks of using AI models in practice. For critical applications of AI, split-second decision making is best informed by robust explanations that are invariant to properties of data, the capability offered by model-centric frameworks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge