Armin Alaghi

Analysis and Mitigations of Reverse Engineering Attacks on Local Feature Descriptors

May 09, 2021

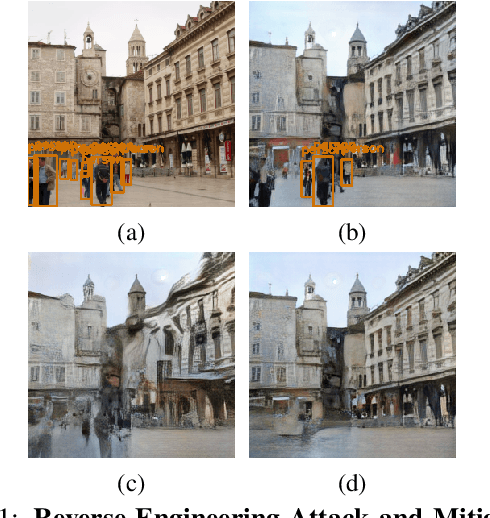

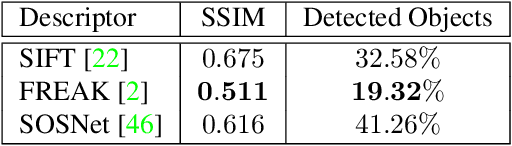

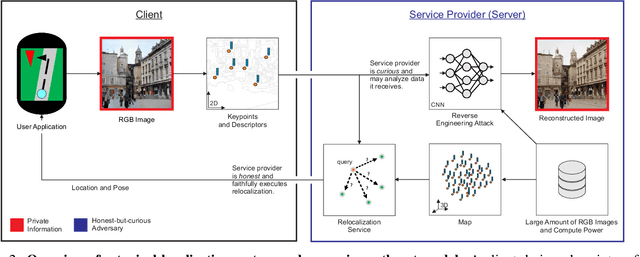

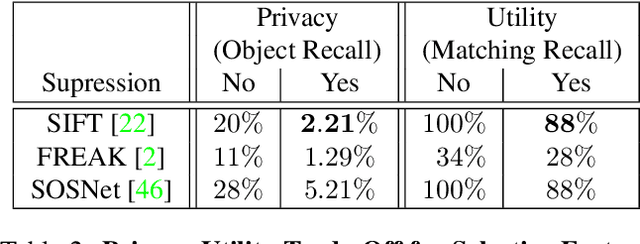

Abstract: As autonomous driving and augmented reality evolve, data privacy becomes a practical concern. In particular, these applications rely on localization based on user images. The widely adopted technology uses local feature descriptors derived from the images, and it was long thought that these descriptors could not be reverted to the original image. However, recent work has demonstrated that under certain conditions reverse engineering attacks are possible and allow an adversary to reconstruct RGB images. This poses a potential risk to user privacy. We take this a step further and model potential adversaries using a privacy threat model. Subsequently, we show under controlled conditions a reverse engineering attack on sparse feature maps and analyze the vulnerability of popular descriptors including FREAK, SIFT and SOSNet. Finally, we evaluate potential mitigation techniques that select a subset of descriptors to carefully balance privacy reconstruction risk while preserving image matching accuracy; our results show that similar accuracy can be obtained when revealing less information.
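The mitigation idea of revealing only a subset of descriptors can be illustrated with a minimal sketch. The abstract does not state the selection criterion, so ranking by detector response is an assumption made here, and `Feature`, `select_subset` and `keep_fraction` are hypothetical names chosen for illustration only.

```python
from typing import List, NamedTuple, Sequence


class Feature(NamedTuple):
    """One local feature: keypoint location, detector response, descriptor."""
    x: float
    y: float
    response: float
    descriptor: Sequence[float]


def select_subset(features: List[Feature], keep_fraction: float) -> List[Feature]:
    """Keep only the strongest fraction of features (assumed criterion).

    Fewer revealed descriptors give an attacker less to reconstruct from,
    at the possible cost of matching accuracy.
    """
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    k = max(1, round(len(features) * keep_fraction))
    ranked = sorted(features, key=lambda f: f.response, reverse=True)
    return ranked[:k]


features = [
    Feature(10.0, 12.0, 0.9, [0.1, 0.2]),
    Feature(40.0, 18.0, 0.2, [0.3, 0.1]),
    Feature(25.0, 30.0, 0.7, [0.5, 0.4]),
    Feature(5.0, 44.0, 0.4, [0.2, 0.6]),
]
kept = select_subset(features, keep_fraction=0.5)
```

In practice the kept fraction would be tuned against both a matching benchmark and a reconstruction-quality measure; neither is modeled in this sketch.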

Neural Network Compression for Noisy Storage Devices

Feb 15, 2021

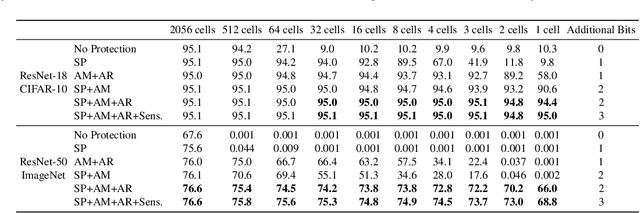

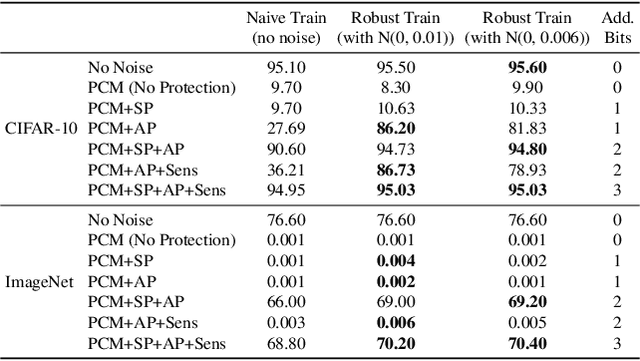

Abstract: Compression and efficient storage of neural network (NN) parameters are critical for applications that run on resource-constrained devices. Although NN model compression has made significant progress, there has been considerably less investigation of the actual physical storage of NN parameters. Conventionally, model compression and physical storage are decoupled, as digital storage media with error correcting codes (ECCs) provide robust error-free storage. This decoupled approach is inefficient, as it forces the storage to treat each bit of the compressed model equally, and to dedicate the same amount of resources to each bit. We propose a radically different approach that: (i) employs analog memories to maximize the capacity of each memory cell, and (ii) jointly optimizes model compression and physical storage to maximize memory utility. We investigate the challenges of analog storage by studying model storage on phase change memory (PCM) arrays and develop a variety of robust coding strategies for NN model storage. We demonstrate the efficacy of our approach on MNIST, CIFAR-10 and ImageNet datasets for both existing and novel compression methods. Compared to conventional error-free digital storage, our method has the potential to reduce the memory size by one order of magnitude, without significantly compromising the stored model's accuracy.
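The remark that treating every bit equally is inefficient can be made concrete with a small sketch. It is not the paper's coding scheme: it assumes a plain 8-bit uniform quantizer (an illustrative choice) and shows that an error in the most significant bit perturbs a stored weight far more than an error in the least significant bit. All function names are hypothetical.

```python
def quantize(w: float, bits: int = 8, w_max: float = 1.0) -> int:
    """Map a weight in [-w_max, w_max] to an unsigned integer code."""
    levels = (1 << bits) - 1
    clipped = max(-w_max, min(w_max, w))
    return round((clipped + w_max) / (2 * w_max) * levels)


def dequantize(code: int, bits: int = 8, w_max: float = 1.0) -> float:
    """Inverse of quantize: integer code back to a real-valued weight."""
    levels = (1 << bits) - 1
    return code / levels * 2 * w_max - w_max


def bit_flip_errors(w: float, bits: int = 8, w_max: float = 1.0) -> list:
    """Absolute weight error caused by flipping each bit position in turn."""
    code = quantize(w, bits, w_max)
    clean = dequantize(code, bits, w_max)
    return [
        abs(dequantize(code ^ (1 << p), bits, w_max) - clean)
        for p in range(bits)
    ]


# Index 0 is the least significant bit, index 7 the most significant.
errors = bit_flip_errors(0.3)
```

Because the error doubles with each bit position, a storage scheme that spends the same protection on every bit over-protects the low-order bits relative to their impact on the weight.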

MATIC: Learning Around Errors for Efficient Low-Voltage Neural Network Accelerators

Mar 23, 2018

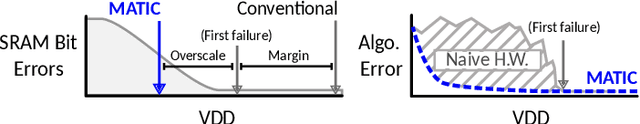

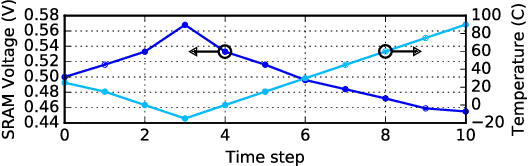

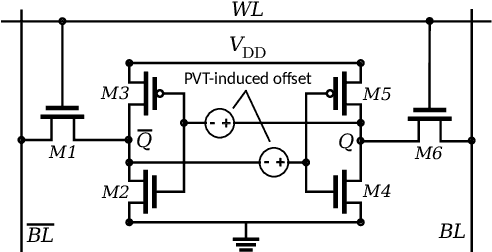

Abstract: As a result of the increasing demand for deep neural network (DNN)-based services, efforts to develop dedicated hardware accelerators for DNNs are growing rapidly. However, while accelerators with high performance and efficiency on convolutional deep neural networks (Conv-DNNs) have been developed, less progress has been made with regard to fully-connected DNNs (FC-DNNs). In this paper, we propose MATIC (Memory Adaptive Training with In-situ Canaries), a methodology that enables aggressive voltage scaling of accelerator weight memories to improve the energy-efficiency of DNN accelerators. To enable accurate operation with voltage overscaling, MATIC combines the characteristics of destructive SRAM reads with the error resilience of neural networks in a memory-adaptive training process. Furthermore, PVT-related voltage margins are eliminated using bit-cells from synaptic weights as in-situ canaries to track runtime environmental variation. Demonstrated on a low-power DNN accelerator that we fabricate in 65 nm CMOS, MATIC enables up to 60-80 mV of voltage overscaling (3.3x total energy reduction versus the nominal voltage), or 18.6x application error reduction.
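To give a feel for what training around memory errors might involve, the sketch below applies a fixed per-cell fault map to stored 8-bit weight codes. The abstract does not describe the fault model, so the stuck-at-0/stuck-at-1 masks are an assumption, and `apply_fault_map` is a hypothetical helper, not part of MATIC.

```python
def apply_fault_map(code: int, stuck_at_0: int, stuck_at_1: int, bits: int = 8) -> int:
    """Return the value read back from a memory word with faulty bit-cells.

    stuck_at_0 and stuck_at_1 are bit masks (assumed fault model): a set bit
    marks a cell that always reads 0 or always reads 1, respectively.
    """
    full = (1 << bits) - 1
    return ((code & ~stuck_at_0) | stuck_at_1) & full


# Two stored weight codes and their (hypothetical) profiled fault masks.
weights = [0b10110010, 0b01001101]
stuck_at_0 = [0b10000000, 0b00000000]  # first word: MSB always reads 0
stuck_at_1 = [0b00000000, 0b00000010]  # second word: bit 1 always reads 1

faulty = [
    apply_fault_map(c, s0, s1)
    for c, s0, s1 in zip(weights, stuck_at_0, stuck_at_1)
]
```

One could use such corrupted codes in the forward pass during training, so that the learned weights compensate for the specific cells that fail at the reduced voltage; whether this matches the paper's procedure is not stated in the abstract.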
