Archontis Politis

Beyond Omnidirectional: Neural Ambisonics Encoding for Arbitrary Microphone Directivity Patterns using Cross-Attention

Jan 30, 2026

Abstract: We present a deep neural network approach for encoding microphone array signals into Ambisonics that generalizes to arbitrary microphone array configurations with fixed microphone count but varying locations and frequency-dependent directional characteristics. Unlike previous methods that rely only on array geometry as metadata, our approach uses directional array transfer functions, enabling accurate characterization of real-world arrays. The proposed architecture employs separate encoders for audio and directional responses, combining them through cross-attention mechanisms to generate array-independent spatial audio representations. We evaluate the method on simulated data in two settings: a mobile phone with complex body scattering, and a free-field condition, both with varying numbers of sound sources in reverberant environments. Evaluations demonstrate that our approach outperforms both conventional digital signal processing-based methods and existing deep neural network solutions. Furthermore, using array transfer functions instead of geometry as metadata input improves accuracy on realistic arrays.
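The cross-attention step mentioned in the abstract can be illustrated with a minimal sketch. It assumes plain scaled dot-product attention with no learned projections, where audio features act as queries and directional-response features act as keys and values. The function names and the toy feature vectors are hypothetical and are not taken from the paper.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    peak = max(row)
    exps = [math.exp(x - peak) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def cross_attention(queries, keys, values):
    """Scaled dot-product cross-attention (no learned projections; an assumption).

    queries: one feature vector per audio frame.
    keys, values: one feature vector per directional-response token.
    Returns one attended vector per audio frame.
    """
    dim = len(queries[0])
    attended = []
    for q in queries:
        # Similarity of this audio frame to every directional-response token.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        attended.append(
            [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        )
    return attended


# Toy data: 2 audio frames, 3 directional-response tokens (hypothetical values).
audio = [[1.0, 0.0], [0.0, 1.0]]
resp_keys = [[10.0, 0.0], [0.0, 10.0], [0.0, 0.0]]
resp_vals = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
out = cross_attention(audio, resp_keys, resp_vals)
```

The output has one row per audio frame whatever the number of response tokens, which is one reason attention is a natural way to combine signals with variable metadata.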

Multi-Utterance Speech Separation and Association Trained on Short Segments

Jul 03, 2025

Abstract: Current deep neural network (DNN) based speech separation faces a fundamental challenge -- while the models need to be trained on short segments due to computational constraints, real-world applications typically require processing recordings that are significantly longer than those seen during training and contain multiple utterances per speaker. In this paper, we investigate how existing approaches perform in this challenging scenario and propose a frequency-temporal recurrent neural network (FTRNN) that effectively bridges this gap. Our FTRNN employs a full-band module to model frequency dependencies within each time frame and a sub-band module that models temporal patterns in each frequency band. Despite being trained on short fixed-length segments of 10 s, our model demonstrates robust separation when processing signals significantly longer than training segments (21-121 s) and preserves speaker association across utterance gaps exceeding those seen during training. Unlike the conventional segment-separation-stitch paradigm, our lightweight approach (0.9 M parameters) performs inference on long audio without segmentation, eliminating segment boundary distortions while simplifying deployment. Experimental results demonstrate the generalization ability of FTRNN for multi-utterance speech separation and speaker association.
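The full-band and sub-band split described in the abstract comes down to which axis of a time-frequency representation the recurrence runs along. The sketch below shows only that axis handling. A toy leaky integrator stands in for the learned recurrent layers, and the names `leaky_scan`, `full_band`, `sub_band`, and `ft_block` are hypothetical, not the paper's.

```python
def leaky_scan(seq, alpha=0.5):
    """Toy recurrence h = alpha * h + (1 - alpha) * x, a stand-in for a learned RNN."""
    state, out = 0.0, []
    for x in seq:
        state = alpha * state + (1.0 - alpha) * x
        out.append(state)
    return out


def full_band(spec):
    """Run the recurrence along frequency, independently within each time frame."""
    return [leaky_scan(frame) for frame in spec]


def sub_band(spec):
    """Run the recurrence along time, independently within each frequency band."""
    n_freq = len(spec[0])
    bands = [leaky_scan([frame[f] for frame in spec]) for f in range(n_freq)]
    # Transpose back to the [time][frequency] layout.
    return [[bands[f][t] for f in range(n_freq)] for t in range(len(spec))]


def ft_block(spec):
    """One frequency-then-time pass over spec[time][frequency]."""
    return sub_band(full_band(spec))


short = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]   # 3 frames, 2 bands
long_input = [[1.0] * 4 for _ in range(200)]    # 200 frames, 4 bands
short_out = ft_block(short)
long_out = ft_block(long_input)
```

Because the time-axis recurrence is applied frame by frame, the same code handles 3 frames or 200 frames without segmentation, which is the property the abstract relies on for long recordings.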

Attractor-Based Speech Separation of Multiple Utterances by Unknown Number of Speakers

May 22, 2025

Abstract: This paper addresses the problem of single-channel speech separation, where the number of speakers is unknown, and each speaker may speak multiple utterances. We propose a speech separation model that simultaneously performs separation, dynamically estimates the number of speakers, and detects individual speaker activities by integrating an attractor module. The proposed system outperforms existing methods by introducing an attractor-based architecture that effectively combines local and global temporal modeling for multi-utterance scenarios. To evaluate the method in reverberant and noisy conditions, a multi-speaker multi-utterance dataset was synthesized by combining Librispeech speech signals with WHAM! noise signals. The results demonstrate that the proposed system accurately estimates the number of sources. The system effectively detects source activities and separates the corresponding utterances into correct outputs in both known and unknown source count scenarios.

Score-informed Music Source Separation: Improving Synthetic-to-real Generalization in Classical Music

Mar 10, 2025

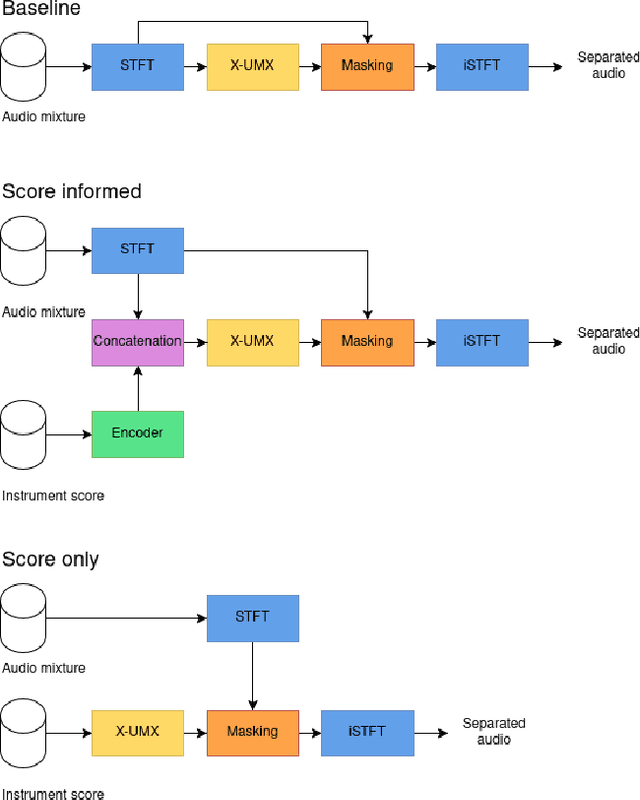

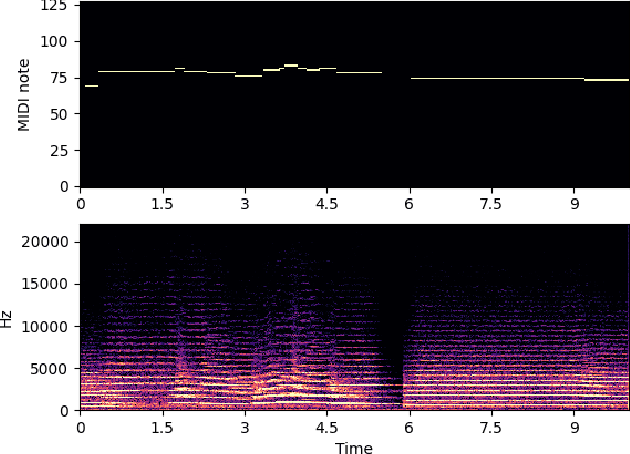

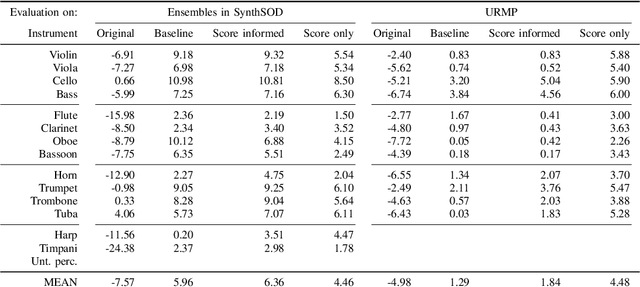

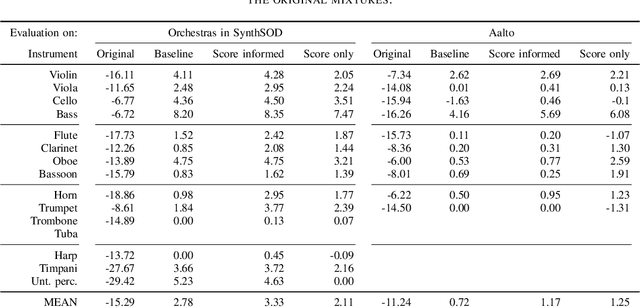

Abstract: Music source separation is the task of separating a mixture of instruments into its constituent tracks. Music source separation models are typically trained using only audio data, although additional information can be used to improve the model's separation capability. In this paper, we propose two ways of using musical scores to aid music source separation: a score-informed model where the score is concatenated with the magnitude spectrogram of the audio mixture as the input of the model, and a model where we use only the score to calculate the separation mask. We train our models on synthetic data in the SynthSOD dataset and evaluate our methods on the URMP and Aalto anechoic orchestra datasets, which consist of real recordings. The score-informed model improves separation results compared to a baseline approach, but struggles to generalize from synthetic to real data, whereas the score-only model shows a clear improvement in synthetic-to-real generalization.

Gen-A: Generalizing Ambisonics Neural Encoding to Unseen Microphone Arrays

Jan 14, 2025

Abstract: Using deep neural networks (DNNs) for encoding of microphone array (MA) signals to the Ambisonics spatial audio format can surpass certain limitations of established conventional methods, but existing DNN-based methods need to be trained separately for each MA. This paper proposes a DNN-based method for Ambisonics encoding that can generalize to arbitrary MA geometries unseen during training. The method takes as inputs the MA geometry and MA signals and uses a multi-level encoder consisting of separate paths for geometry and signal data, where geometry features inform the signal encoder at each level. The method is validated in simulated anechoic and reverberant conditions with one and two sources. The results indicate improvement over conventional encoding across the whole frequency range for dry scenes, while for reverberant scenes the improvement is frequency-dependent.

Class-Incremental Learning for Sound Event Localization and Detection

Nov 19, 2024

Abstract: This paper investigates the feasibility of class-incremental learning (CIL) for Sound Event Localization and Detection (SELD) tasks. The method features an incremental learner that can learn new sound classes independently while preserving knowledge of old classes. Continual learning is achieved through a mean square error-based distillation loss that minimizes output discrepancies between subsequent learners. The experiments are conducted on the TAU-NIGENS Spatial Sound Events 2021 dataset, which includes 12 different sound classes, and demonstrate the efficacy of the proposed method. We begin by learning 8 classes and introduce the 4 new classes at the next stage. After the incremental phase, the system is evaluated on the full set of learned classes. Results show that, for this realistic dataset, our proposed method successfully maintains baseline performance across all metrics.
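A minimal sketch of a mean square error distillation term follows. It assumes that the frozen previous learner's outputs for the old classes serve as targets for the matching outputs of the new learner. The function name, the output layout (old classes first), and the example numbers are hypothetical, and the paper's exact formulation may differ.

```python
def distillation_loss(new_outputs, old_outputs):
    """MSE between the new learner's old-class outputs and the previous learner's outputs.

    new_outputs: outputs of the new learner, old classes first (assumed layout).
    old_outputs: outputs of the frozen previous learner for the same input.
    """
    n_old = len(old_outputs)
    # Only the first n_old outputs of the new learner have a counterpart to match.
    diffs = [(new_outputs[i] - old_outputs[i]) ** 2 for i in range(n_old)]
    return sum(diffs) / n_old


# Previous learner knows 2 classes; the new learner adds a third (toy numbers).
previous = [0.2, 0.6]
unchanged = distillation_loss([0.2, 0.6, 0.9], previous)
drifted = distillation_loss([0.2, 0.8, 0.5], previous)
```

The term is zero when the new learner reproduces the previous outputs on old classes and grows as they drift, whatever it predicts for the newly added class.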

SynthSOD: Developing an Heterogeneous Dataset for Orchestra Music Source Separation

Sep 17, 2024

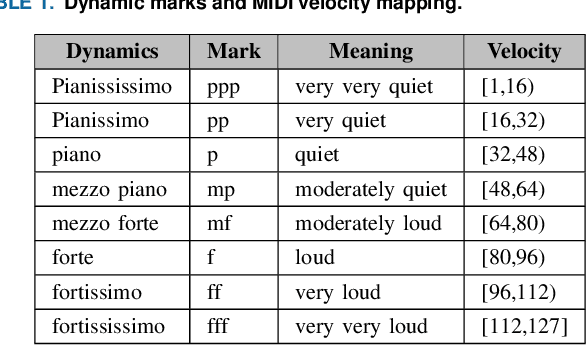

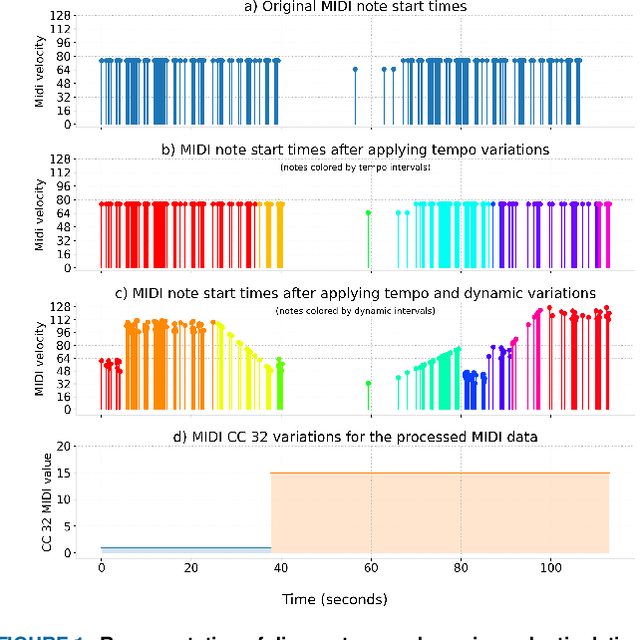

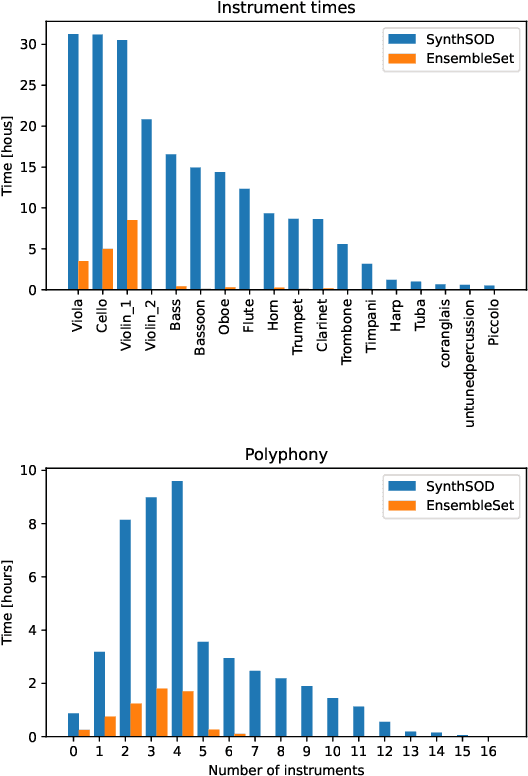

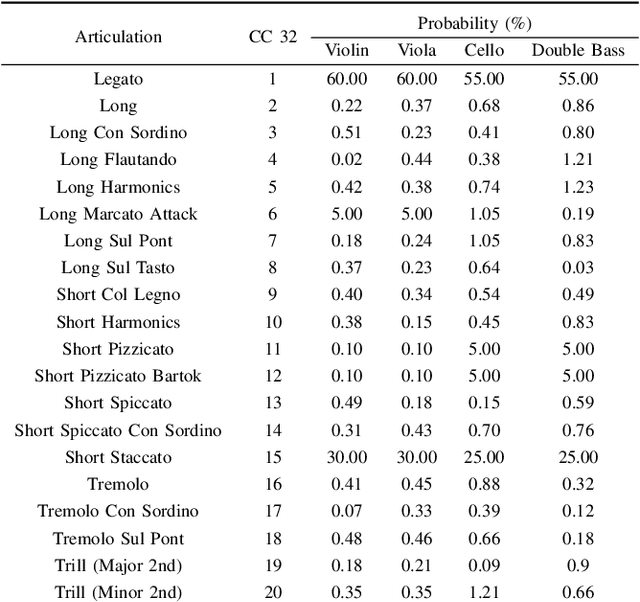

Abstract: Music source separation has progressed significantly in recent years, particularly in isolating vocals, drums, and bass elements from mixed tracks. These developments owe much to the creation and use of large-scale, multitrack datasets dedicated to these specific components. However, the challenge of extracting similarly sounding sources from orchestra recordings has not been extensively explored, largely due to a scarcity of comprehensive and clean (i.e., bleed-free) multitrack datasets. In this paper, we introduce a novel multitrack dataset called SynthSOD, developed using a set of simulation techniques to create a realistic (i.e., using high-quality soundfonts), musically motivated, and heterogeneous training set comprising different dynamics, natural tempo changes, styles, and conditions. Moreover, we demonstrate the application of a widely used baseline music separation model trained on our synthesized dataset with respect to the well-known EnsembleSet, and evaluate its performance under both synthetic and real-world conditions.

Gaunt coefficients for complex and real spherical harmonics with applications to spherical array processing and Ambisonics

Jul 09, 2024

Abstract: Acoustical signal processing of directional representations of sound fields, including source, receiver, and scatterer transfer functions, is often expressed and modeled in the spherical harmonic domain (SHD). Certain such modeling operations, or applications of those models, involve multiplications of those directional quantities, which can also be expressed conveniently in the SHD through coupling coefficients known as Gaunt coefficients. Since the definition and notation of Gaunt coefficients vary across acoustical publications, this work defines them based on established conventions of complex and real spherical harmonics (SHs) along with a convenient matrix form for spherical multiplication of directionally band-limited spherical functions. Additionally, the report provides a derivation of the Gaunt coefficients for real SHs, which has been missing from the literature and can be used directly in spatial audio frameworks such as Ambisonics. Matlab code is provided that can compute all coefficients up to user-specified SH orders. Finally, a number of relevant acoustical processing examples from the literature are presented, following the matrix formalism of coefficients introduced in the report.
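As a worked illustration of the coupling the abstract describes, the following assumes orthonormal complex SHs $Y_{nm}$. The index placement on $G$ and the symbols $a$, $b$, $c$, $\mathbf{G}_a$ are notation chosen here and may differ from the report's.

```latex
% Product of two complex SHs expanded back onto the SH basis
Y_{n_1 m_1}(\Omega)\, Y_{n_2 m_2}(\Omega)
  = \sum_{n=0}^{n_1+n_2} \sum_{m=-n}^{n}
    G_{n_1 m_1,\, n_2 m_2}^{\,n m}\; Y_{nm}(\Omega),
\qquad
G_{n_1 m_1,\, n_2 m_2}^{\,n m}
  = \int_{S^2} Y_{n_1 m_1}(\Omega)\, Y_{n_2 m_2}(\Omega)\, Y_{nm}^{*}(\Omega)\, \mathrm{d}\Omega .

% The coefficient is nonzero only if
m = m_1 + m_2, \qquad |n_1 - n_2| \le n \le n_1 + n_2, \qquad n_1 + n_2 + n \ \text{even}.

% Multiplication of two band-limited functions c(\Omega) = a(\Omega)\, b(\Omega)
c_{nm} = \sum_{n_1, m_1} \sum_{n_2, m_2}
         a_{n_1 m_1}\, b_{n_2 m_2}\, G_{n_1 m_1,\, n_2 m_2}^{\,n m},
\qquad\text{or in matrix form}\qquad
\mathbf{c} = \mathbf{G}_a\, \mathbf{b},
\quad
[\mathbf{G}_a]_{(nm),(n_2 m_2)} = \sum_{n_1, m_1} a_{n_1 m_1}\, G_{n_1 m_1,\, n_2 m_2}^{\,n m}.
```

If $a$ is band-limited to order $N_a$ and $b$ to order $N_b$, the product is band-limited to order $N_a + N_b$, which fixes the size of $\mathbf{G}_a$.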

Reference Channel Selection by Multi-Channel Masking for End-to-End Multi-Channel Speech Enhancement

Jun 05, 2024

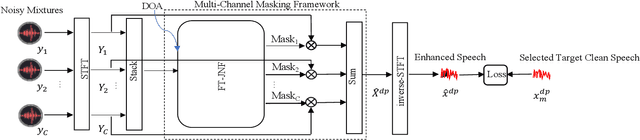

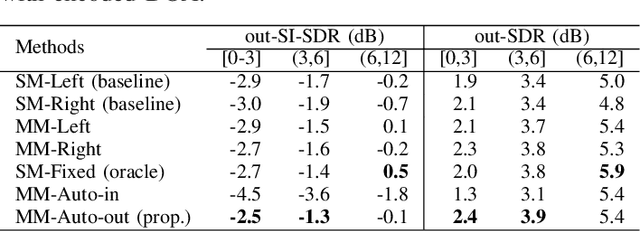

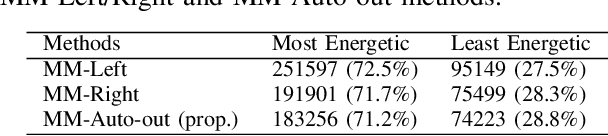

Abstract: In end-to-end multi-channel speech enhancement, the traditional approach of designating one microphone signal as the reference for processing may not always yield optimal results. This limitation is particularly apparent in scenarios with large distributed microphone arrays with varying speaker-to-microphone distances, or with compact, highly directional microphone arrays where speaker or microphone positions change over time. Current mask-based methods often fix the reference channel during training, which makes it impossible to adaptively select the reference channel for optimal performance. To address this problem, we introduce an adaptive approach for selecting the optimal reference channel. Our method leverages a multi-channel masking-based scheme, where multiple masked signals are combined to generate a single-channel output signal. This enhanced signal is then used for loss calculation, while the reference clean speech is adjusted based on the highest scale-invariant signal-to-distortion ratio (SI-SDR). The experimental results on the Spear challenge simulated dataset D4 demonstrate the superiority of our proposed method over the conventional approach of using a fixed reference channel with single-channel masking.
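The selection criterion can be sketched as follows, using the standard SI-SDR definition and assuming that clean speech is available at every microphone channel, so the channel with the highest SI-SDR against the enhanced output becomes the training target. The function names and toy signals are hypothetical, and the paper's training loop is not reproduced.

```python
import math


def si_sdr(estimate, reference, eps=1e-12):
    """Scale-invariant signal-to-distortion ratio in dB."""
    dot = sum(e * r for e, r in zip(estimate, reference))
    ref_energy = sum(r * r for r in reference)
    # Project the estimate onto the reference to remove any gain mismatch.
    scale = dot / (ref_energy + eps)
    target = [scale * r for r in reference]
    target_energy = sum(t * t for t in target)
    residual_energy = sum((e - t) ** 2 for e, t in zip(estimate, target))
    return 10.0 * math.log10((target_energy + eps) / (residual_energy + eps))


def select_reference(estimate, clean_channels):
    """Return (index, score) of the clean channel with the highest SI-SDR."""
    scores = [si_sdr(estimate, channel) for channel in clean_channels]
    best = max(range(len(scores)), key=scores.__getitem__)
    return best, scores[best]


enhanced = [1.0, -1.0, 1.0, -1.0, 0.5]
clean = [
    [0.1, 0.3, -0.2, 0.4, 0.0],    # poorly matched channel
    [2.0, -2.0, 2.0, -2.0, 1.0],   # scaled copy of the enhanced signal
]
best_index, best_score = select_reference(enhanced, clean)
```

Because SI-SDR ignores gain, a channel that differs from the enhanced signal only by level still scores highest, which suits arrays where level varies strongly across microphones.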

Speaker Distance Estimation in Enclosures from Single-Channel Audio

Mar 26, 2024

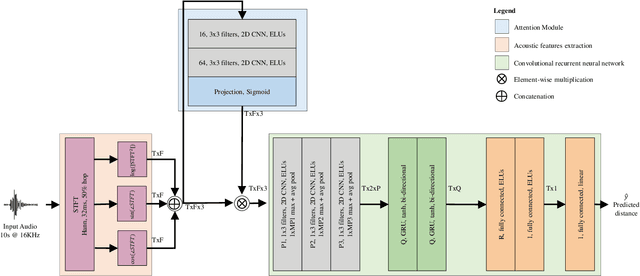

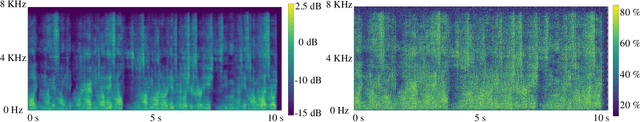

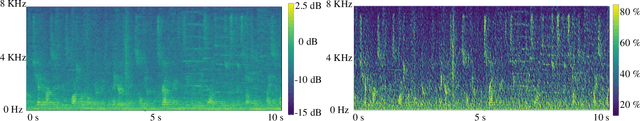

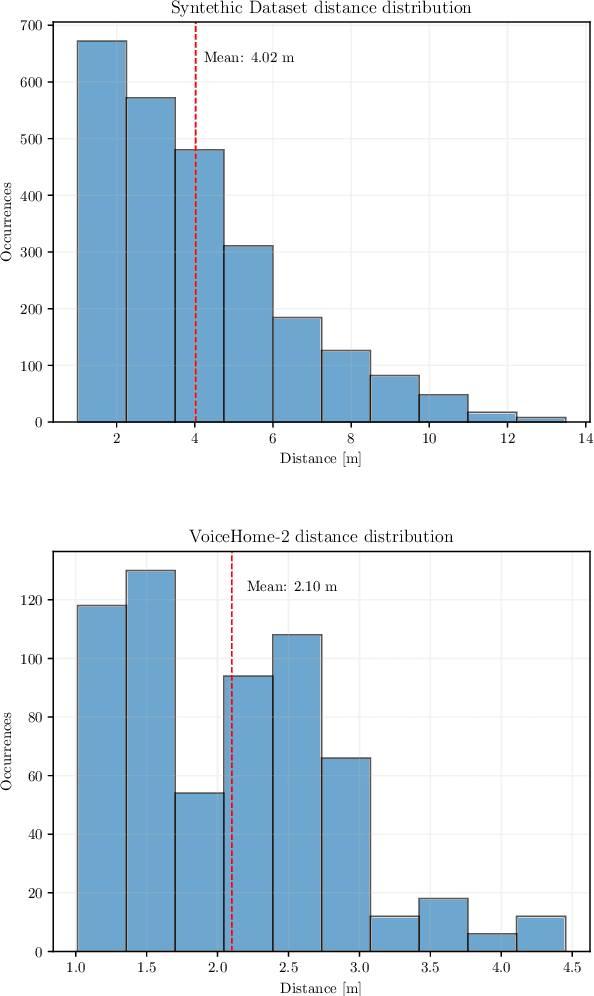

Abstract: Distance estimation from audio plays a crucial role in various applications, such as acoustic scene analysis, sound source localization, and room modeling. Most studies employ a classification approach, where distances are discretized into distinct categories, enabling smoother model training and achieving higher accuracy but imposing restrictions on the precision of the obtained sound source position. In this paper, we propose a novel approach for continuous distance estimation from audio signals using a convolutional recurrent neural network with an attention module. The attention mechanism enables the model to focus on relevant temporal and spectral features, enhancing its ability to capture fine-grained distance-related information. To evaluate the effectiveness of our proposed method, we conduct extensive experiments using audio recordings in controlled environments with three levels of realism (synthetic room impulse response, measured response with convolved speech, and real recordings) on four datasets (our synthetic dataset, QMULTIMIT, VoiceHome-2, and STARSS23). Experimental results show that the model achieves an absolute error of 0.11 meters in a noiseless synthetic scenario. Moreover, the results show an absolute error of about 1.30 meters in the hybrid scenario. The algorithm's performance in the real scenario, where unpredictable environmental factors and noise are prevalent, yields an absolute error of approximately 0.50 meters. For reproducible research purposes, we make the model, code, and synthetic datasets available at https://github.com/michaelneri/audio-distance-estimation.
