Aniket Gujarathi

Design and Development of Autonomous Delivery Robot

Mar 16, 2021

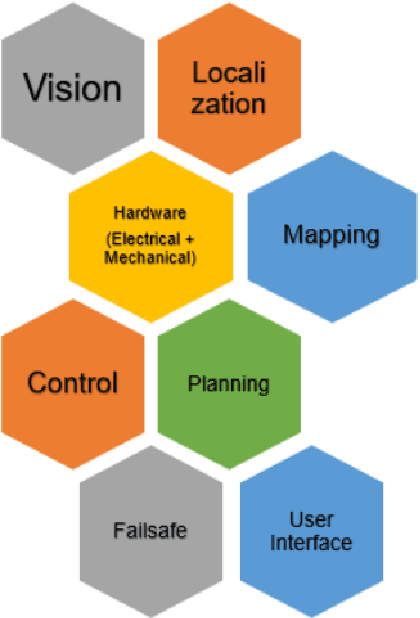

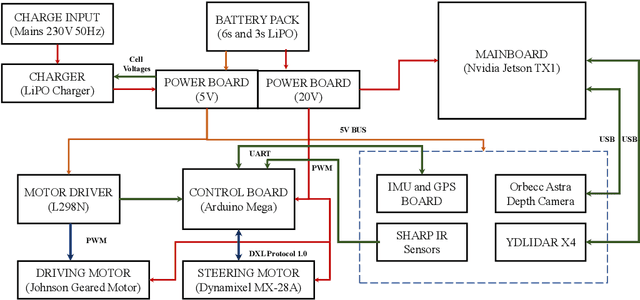

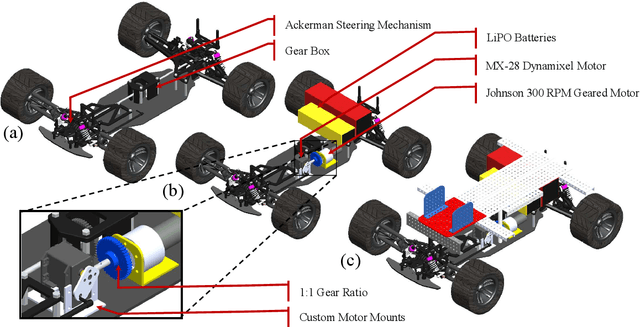

Abstract: The field of autonomous robotics is growing rapidly. The trend toward using more sensors in vehicles is driven both by legislation and by consumer demand for greater safety and more reliable service. Robots are now found everywhere, from homes and hospitals to industry and military operations. Autonomous robots are developed to be robust enough to work beside humans and to carry out jobs efficiently. Humans have a natural understanding of the physical forces acting around them, such as gravity and motion, which is not taught explicitly but develops naturally. This is not the case with robots. To be fully autonomous and competent to work with humans, a robot must be able to perceive the situation and devise a plan for smooth operation, accounting for the adverse conditions that may arise while carrying out its tasks. In this thesis, we present an autonomous mobile robot platform that delivers packages within the VNIT campus without any human intervention. Given a user-supplied geographic target location, the system plans an optimized path and autonomously navigates along it. The thesis explains in detail the entire pipeline of an autonomous robot working in outdoor environments.

RoRD: Rotation-Robust Descriptors and Orthographic Views for Local Feature Matching

Mar 15, 2021

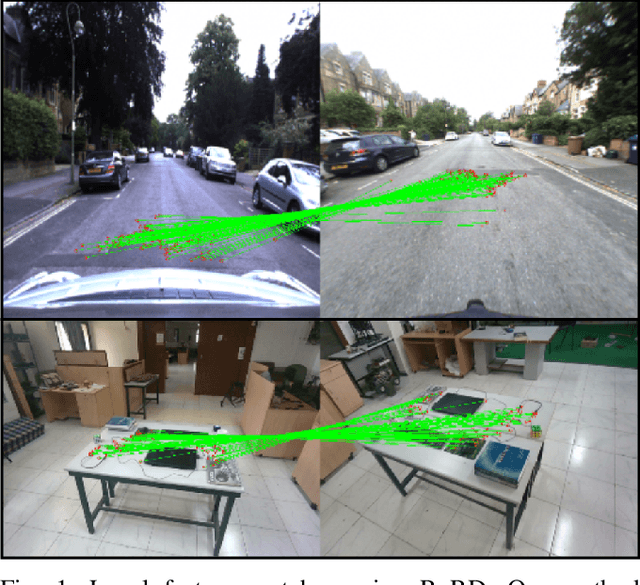

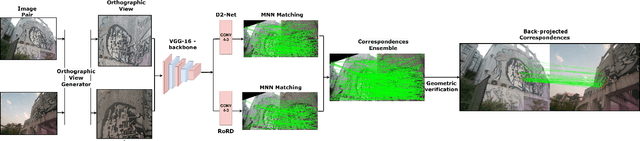

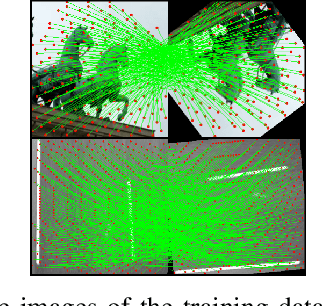

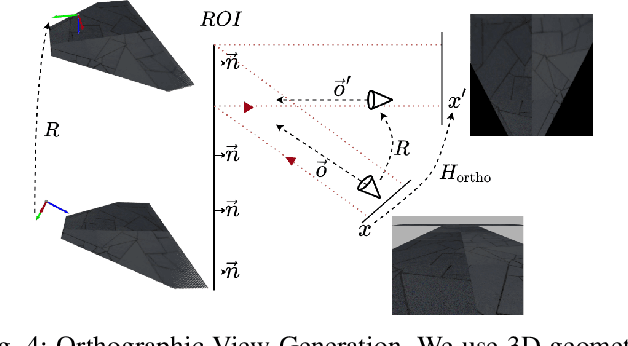

Abstract: The use of local detectors and descriptors in typical computer vision pipelines works well until variations in viewpoint and appearance become extreme. Past research in this area has typically focused on one of two approaches to this challenge: projecting into spaces more suitable for feature matching under extreme viewpoint changes, or attempting to learn features that are inherently more robust to viewpoint change. In this paper, we present a novel framework that combines learning of invariant descriptors through data augmentation with orthographic viewpoint projection. We propose rotation-robust local descriptors, learnt through training data augmentation based on rotation homographies, and a correspondence ensemble technique that combines vanilla feature correspondences with those obtained through rotation-robust features. Using a range of benchmark datasets, and contributing a new bespoke dataset for this research domain, we evaluate the effectiveness of the proposed approach on key tasks including pose estimation and visual place recognition. Our system outperforms a range of baseline and state-of-the-art techniques: it enables higher levels of place recognition precision across opposing place viewpoints and achieves practically useful performance levels even under extreme viewpoint changes.
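
The abstract does not spell out how the rotation homographies are built, so the following is only a minimal sketch of one common construction: an in-plane rotation about the image centre, written as a 3x3 homography. The function names `rotation_homography` and `warp_point`, the choice of the image centre as pivot, and the pixel-coordinate convention are assumptions made for illustration, not details taken from the paper. Mapping a keypoint through the same matrix used to rotate the image gives its location in the rotated view, which is the kind of known correspondence such an augmentation could supply during training.

```python
import math


def rotation_homography(theta_deg, width, height):
    """Return a 3x3 homography (nested lists) that rotates pixel coordinates
    by theta_deg about the image centre. Hypothetical helper for illustration."""
    t = math.radians(theta_deg)
    c, s = math.cos(t), math.sin(t)
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    # H = T(centre) * R(theta) * T(-centre), expanded in closed form.
    return [
        [c, -s, cx - c * cx + s * cy],
        [s, c, cy - s * cx - c * cy],
        [0.0, 0.0, 1.0],
    ]


def warp_point(H, x, y):
    """Apply homography H to the point (x, y) using homogeneous coordinates."""
    xh = H[0][0] * x + H[0][1] * y + H[0][2]
    yh = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    return xh / w, yh / w


if __name__ == "__main__":
    # Assumed example: a 101x101 image, so the centre pixel is (50, 50).
    H = rotation_homography(90.0, 101, 101)
    # A keypoint in the original image and its location after the rotation.
    print(warp_point(H, 60.0, 50.0))
```

Because the matrix is an ordinary homography, the same values could be passed to an image-warping routine to produce the rotated training image, while `warp_point` tracks where each keypoint lands.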
