Aneta Neumann

Bi-Objective Evolutionary Optimization for Large-Scale Open Pit Mine Scheduling Problem under Uncertainty with Chance Constraints

Nov 11, 2025

Abstract: The open-pit mine scheduling problem (OPMSP) is a complex, computationally expensive process in long-term mine planning, constrained by operational and geological dependencies. Traditional deterministic approaches often ignore geological uncertainty, leading to suboptimal and potentially infeasible production schedules. Chance constraints allow modeling of stochastic components by ensuring probabilistic constraints are satisfied with high probability. This paper presents a bi-objective formulation of the OPMSP that simultaneously maximizes expected net present value and minimizes scheduling risk, independent of the confidence level required for the constraint. Solutions are represented using integer encoding, inherently satisfying reserve constraints. We introduce a domain-specific greedy randomized initialization and a precedence-aware period-swap mutation operator. We integrate these operators into three multi-objective evolutionary algorithms: the global simple evolutionary multi-objective optimizer (GSEMO), a mutation-only variant of multi-objective evolutionary algorithm based on decomposition (MOEA/D), and non-dominated sorting genetic algorithm II (NSGA-II). We compare our bi-objective formulation against the single-objective approach, which depends on a specific confidence level, by analyzing mine deposits consisting of up to 112 687 blocks. Results demonstrate that the proposed bi-objective formulation yields more robust and balanced trade-offs between economic value and risk compared to the single-objective, confidence-dependent approach.

Quality Diversity Genetic Programming for Learning Scheduling Heuristics

Jul 03, 2025

Abstract: Real-world optimization often demands diverse, high-quality solutions. Quality-Diversity (QD) optimization is a multifaceted approach in evolutionary algorithms that aims to generate a set of solutions that are both high-performing and diverse. QD algorithms have been successfully applied across various domains, providing robust solutions by exploring diverse behavioral niches. However, their application has primarily focused on static problems, with limited exploration in the context of dynamic combinatorial optimization problems. Furthermore, the theoretical understanding of QD algorithms remains underdeveloped, particularly when applied to learning heuristics instead of directly learning solutions in complex and dynamic combinatorial optimization domains, which introduces additional challenges. This paper introduces a novel QD framework for dynamic scheduling problems. We propose a map-building strategy that visualizes the solution space by linking heuristic genotypes to their behaviors, enabling their representation on a QD map. This map facilitates the discovery and maintenance of diverse scheduling heuristics. Additionally, we conduct experiments on both fixed and dynamically changing training instances to demonstrate how the map evolves and how the distribution of solutions unfolds over time. We also discuss potential future research directions that could enhance the learning process and broaden the applicability of QD algorithms to dynamic combinatorial optimization challenges.

Weighted-Scenario Optimisation for the Chance Constrained Travelling Thief Problem

May 01, 2025

Abstract: The chance constrained travelling thief problem (chance constrained TTP) has been introduced as a stochastic variation of the classical travelling thief problem (TTP) in an attempt to embody the effect of uncertainty in the problem definition. In this work, we characterise the chance constrained TTP using a limited number of weighted scenarios. Each scenario represents a similar TTP instance, differing slightly in the weight profile of the items and associated with a certain probability of occurrence. Collectively, the weighted scenarios represent a relaxed form of a stochastic TTP instance where the objective is to maximise the expected benefit while satisfying the knapsack constraint with a larger probability. We incorporate a set of evolutionary algorithms and heuristic procedures developed for the classical TTP, and formulate adaptations that apply to the weighted scenario-based representation of the problem. The analysis focuses on the performance of the algorithms on different settings and examines the impact of uncertainty on the quality of the solutions.
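
The weighted-scenario view of the knapsack chance constraint can be illustrated with a minimal sketch. It assumes that each scenario carries its own item weights and a probability of occurrence, and that a packing plan satisfies the constraint when the total probability of the scenarios in which it fits reaches a threshold `alpha`. The names `Scenario`, `feasibility_probability`, and `alpha`, as well as the numbers, are hypothetical and not taken from the paper; the travel-time part of the TTP objective is left out.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class Scenario:
    probability: float        # chance that this scenario occurs (hypothetical field)
    weights: Sequence[float]  # item weights under this scenario


def feasibility_probability(packed, scenarios, capacity):
    """Sum the probabilities of all scenarios in which the packed items fit."""
    total = 0.0
    for scenario in scenarios:
        load = sum(w for w, take in zip(scenario.weights, packed) if take)
        if load <= capacity:
            total += scenario.probability
    return total


def satisfies_chance_constraint(packed, scenarios, capacity, alpha):
    """True if the knapsack constraint holds with probability at least alpha."""
    return feasibility_probability(packed, scenarios, capacity) >= alpha


# Three illustrative scenarios with slightly different weight profiles.
scenarios = [
    Scenario(0.5, [4.0, 3.0, 2.0]),
    Scenario(0.25, [5.0, 3.0, 2.0]),
    Scenario(0.25, [6.0, 4.0, 2.0]),
]
packed = [True, True, False]  # take items 0 and 1
p = feasibility_probability(packed, scenarios, capacity=8.0)
```

Here the plan fits in the first two scenarios (loads 7 and 8) but not in the third (load 10), so `p` is the sum of the first two scenario probabilities.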

Feature-based Evolutionary Diversity Optimization of Discriminating Instances for Chance-constrained Optimization Problems

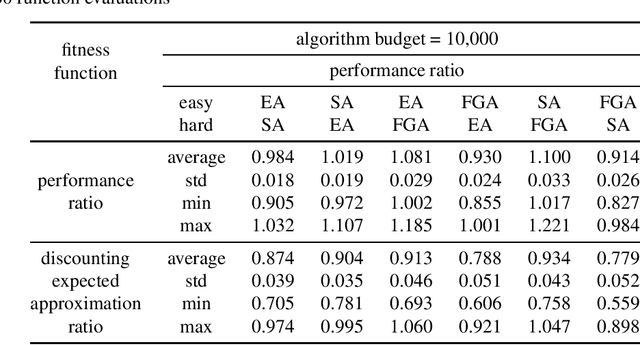

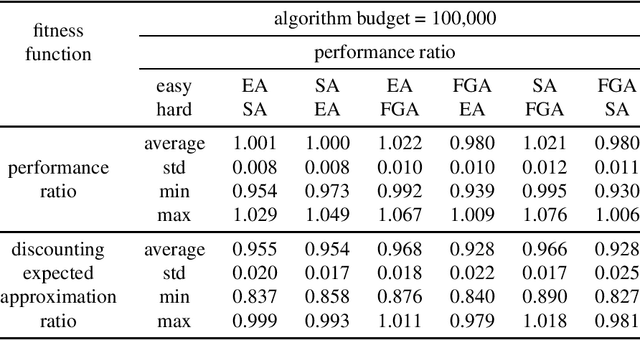

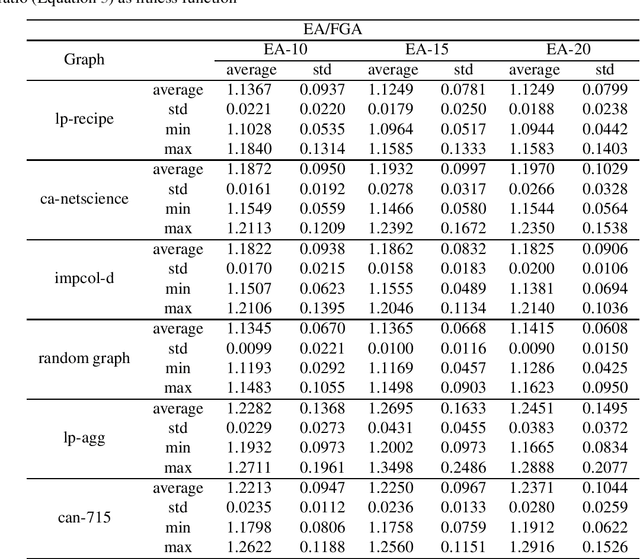

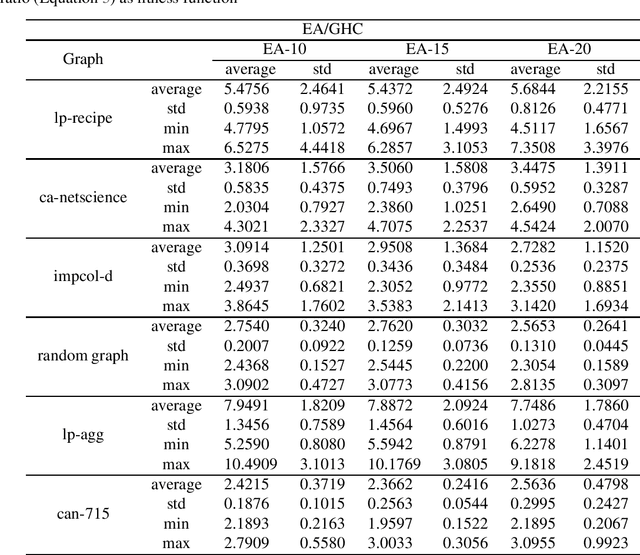

Jan 24, 2025

Abstract: Algorithm selection is crucial in the field of optimization, as no single algorithm performs perfectly across all types of optimization problems. Finding the best algorithm among a given set of algorithms for a given problem requires a detailed analysis of the problem's features. To do so, it is important to have a diverse set of benchmarking instances highlighting the difference in algorithms' performance. In this paper, we evolve diverse benchmarking instances for chance-constrained optimization problems that contain stochastic components characterized by their expected values and variances. These instances clearly differentiate the performance of two given algorithms, meaning they are easy to solve by one algorithm and hard to solve by the other. We introduce a $(\mu+1)$ EA for feature-based diversity optimization to evolve such differentiating instances. We study the chance-constrained maximum coverage problem with stochastic weights on the vertices as an example of chance-constrained optimization problems. The experimental results demonstrate that our method successfully generates diverse instances based on different features while effectively distinguishing the performance between a pair of algorithms.

Theoretical Analysis of Quality Diversity Algorithms for a Classical Path Planning Problem

Dec 16, 2024

Abstract: Quality diversity (QD) algorithms have been shown to provide sets of high-quality solutions for challenging problems in robotics, games, and combinatorial optimisation. So far, the theoretical foundations explaining their good behaviour in practice lag far behind their practical success. We contribute to the theoretical understanding of these algorithms and study the behaviour of QD algorithms for a classical planning problem seeking several solutions. We study the all-pairs-shortest-paths (APSP) problem, which gives a natural formulation of the behavioural space based on all pairs of nodes of the given input graph that can be used by Map-Elites QD algorithms. Our results show that Map-Elites QD algorithms are able to compute a shortest path for each pair of nodes efficiently in parallel. Furthermore, we examine parent selection techniques for crossover that exhibit significant speed-ups compared to the standard QD approach.
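
The behavioural space described above, with one cell per ordered pair of nodes, can be sketched as a small Map-Elites-style loop. This is a minimal illustration under stated assumptions and not the algorithm analysed in the paper: the mutation used here (extend a stored path by one random outgoing edge), the uniform parent selection, and the function name `map_elites_apsp` are all hypothetical choices, and crossover is omitted entirely.

```python
import random


def map_elites_apsp(graph, iterations, seed=0):
    """graph: dict mapping node -> dict of neighbour -> non-negative edge weight.

    Returns an archive mapping (start, end) -> (cost, path).
    """
    rng = random.Random(seed)
    # One cell per ordered node pair; seed the archive with the empty paths.
    archive = {(v, v): (0.0, [v]) for v in graph}
    for _ in range(iterations):
        # Pick a filled cell uniformly at random as the parent.
        (start, end), (cost, path) = rng.choice(list(archive.items()))
        if not graph[end]:
            continue  # no outgoing edge to extend with
        # Assumed mutation: append one random outgoing edge to the path.
        nxt = rng.choice(list(graph[end]))
        cand_cost = cost + graph[end][nxt]
        cell = (start, nxt)
        # Keep the candidate only if its cell is empty or it is strictly shorter.
        if cell not in archive or cand_cost < archive[cell][0]:
            archive[cell] = (cand_cost, path + [nxt])
    return archive


# Small illustrative directed graph (hypothetical data).
graph = {
    "a": {"b": 1.0, "c": 5.0},
    "b": {"c": 1.0, "d": 4.0},
    "c": {"d": 1.0},
    "d": {"a": 2.0},
}
archive = map_elites_apsp(graph, iterations=5000)
```

Each cell only ever stores a real path in the graph, so a stored cost can never fall below the true shortest distance. On a graph this small, a few thousand iterations are typically far more than enough for every cell to reach it.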

Illuminating the Diversity-Fitness Trade-Off in Black-Box Optimization

Aug 29, 2024

Abstract: In real-world applications, users often favor structurally diverse design choices over one high-quality solution. It is hence important to consider more solutions that decision-makers can compare and further explore based on additional criteria. Alongside the existing approaches of evolutionary diversity optimization, quality diversity, and multimodal optimization, this paper presents a fresh perspective on this challenge by considering the problem of identifying a fixed number of solutions with a pairwise distance above a specified threshold while maximizing their average quality. We obtain first insights into these objectives by performing a subset selection on the search trajectories of different well-established search heuristics, whether specifically designed with diversity in mind or not. We emphasize that the main goal of our work is not to present a new algorithm but to look at the problem in a more fundamental and theoretically tractable way by asking the question: What trade-off exists between the minimum distance within batches of solutions and the average quality of their fitness? These insights also provide us with a way of making general claims concerning the properties of optimization problems that shall be useful in turn for benchmarking algorithms of the approaches enumerated above. A possibly surprising outcome of our empirical study is the observation that naive uniform random sampling establishes a very strong baseline for our problem, hardly ever outperformed by the search trajectories of the considered heuristics. We interpret these results as a motivation to develop algorithms tailored to produce diverse solutions of high average quality.
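
To make the subset-selection problem concrete, here is a minimal sketch that picks a batch of `k` solutions from a search trajectory so that all pairwise distances respect a threshold. The greedy rule (scan by decreasing quality and accept a solution if it is far enough from everything already chosen) is an assumed heuristic for illustration only and is not claimed to be the selection method of the paper. Hamming distance, the use of "at least `min_dist`" as the distance test, and the toy trajectory are likewise assumptions.

```python
def hamming(a, b):
    """Number of positions in which two equal-length strings differ."""
    return sum(x != y for x, y in zip(a, b))


def greedy_diverse_subset(candidates, k, min_dist, distance=hamming):
    """candidates: list of (solution, quality) pairs; higher quality is better.

    Returns up to k pairs whose solutions are pairwise at least min_dist apart.
    Greedy, so the result is not guaranteed to maximise average quality.
    """
    chosen = []
    for sol, quality in sorted(candidates, key=lambda c: c[1], reverse=True):
        if all(distance(sol, other) >= min_dist for other, _ in chosen):
            chosen.append((sol, quality))
            if len(chosen) == k:
                break
    return chosen


# Hypothetical search trajectory of bit strings with their fitness values.
trajectory = [
    ("11110", 9.0),
    ("11111", 8.5),
    ("00111", 7.0),
    ("00011", 6.5),
    ("10000", 5.0),
]
batch = greedy_diverse_subset(trajectory, k=3, min_dist=2)
avg_quality = sum(q for _, q in batch) / len(batch)
```

Raising `min_dist` forces the batch to skip high-quality neighbours such as `"11111"`, which is the trade-off between minimum distance and average quality that the abstract asks about.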

Local Optima in Diversity Optimization: Non-trivial Offspring Population is Essential

Jul 12, 2024

Abstract: The main goal of diversity optimization is to find a diverse set of solutions which satisfy some lower bound on their fitness. Evolutionary algorithms (EAs) are often used for such tasks, since they are naturally designed to optimize populations of solutions. This approach to diversity optimization, called EDO, has been previously studied from a theoretical perspective, but most studies considered only EAs with a trivial offspring population such as the $(\mu + 1)$ EA. In this paper we give an example instance of a $k$-vertex cover problem, which highlights a critical difference between diversity optimization and regular single-objective optimization, namely that there might be a locally optimal population from which we can escape only by replacing at least two individuals at once, which the $(\mu + 1)$ algorithms cannot do. We also show that the $(\mu + \lambda)$ EA with $\lambda \ge \mu$ can effectively find a diverse population on $k$-vertex cover, if using a mutation operator inspired by Branson and Sutton (TCS 2023). To avoid the problem of subset selection which arises in the $(\mu + \lambda)$ EA when it optimizes diversity, we also propose the $(1_\mu + 1_\mu)$ EA$_D$, which is an analogue of the $(1 + 1)$ EA for populations, and which is also efficient at optimizing diversity on the $k$-vertex cover problem.

Evolving Reliable Differentiating Constraints for the Chance-constrained Maximum Coverage Problem

May 29, 2024

Abstract: Chance-constrained problems involve stochastic components in the constraints which can be violated with a small probability. We investigate the impact of different types of chance constraints on the performance of iterative search algorithms and study the classical maximum coverage problem in graphs with chance constraints. Our goal is to evolve reliable chance constraint settings for a given graph where the performance of algorithms differs significantly not just in expectation but with high confidence. This allows us to better learn and understand how different types of algorithms can deal with different types of constraint settings and supports automatic algorithm selection. We develop an evolutionary algorithm that provides sets of chance constraints that differentiate the performance of two stochastic search algorithms with high confidence. We initially use the traditional approximation ratio as the fitness function of a (1+1) EA to evolve instances, which proves inadequate for generating reliable instances. To address this issue, we introduce a new measure to calculate the performance difference for two algorithms, which considers variances of performance ratios. Our experiments show that our approach is highly successful in solving the instability issue of the performance ratios and leads to evolving reliable sets of chance constraints with significantly different performance for various types of algorithms.

Runtime Analysis of Evolutionary Diversity Optimization on the Multi-objective (LeadingOnes, TrailingZeros) Problem

Apr 19, 2024

Abstract: Diversity optimization is the class of optimization problems in which we aim to find a diverse set of good solutions. One of the frequently used approaches to solve such problems is to use evolutionary algorithms which evolve a desired diverse population. This approach is called evolutionary diversity optimization (EDO). In this paper, we analyse EDO on a 3-objective function LOTZ$_k$, which is a modification of the 2-objective benchmark function (LeadingOnes, TrailingZeros). We prove that the GSEMO computes a set of all Pareto-optimal solutions in $O(kn^3)$ expected iterations. We also analyze the runtime of the GSEMO$_D$ (a modification of the GSEMO for diversity optimization) until it finds a population with the best possible diversity for two different diversity measures, the total imbalance and the sorted imbalances vector. For the first measure we show that the GSEMO$_D$ optimizes it asymptotically faster than it finds a Pareto-optimal population, in $O(kn^2\log(n))$ expected iterations, and for the second measure we show an upper bound of $O(k^2n^3\log(n))$ expected iterations. We complement our theoretical analysis with an empirical study, which shows a very similar behavior for both diversity measures that is close to the theory predictions.
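
For readers unfamiliar with the baseline, the following minimal sketch runs a plain GSEMO on the classical 2-objective (LeadingOnes, TrailingZeros) function. It does not implement the 3-objective LOTZ$_k$ or the GSEMO$_D$ variant studied in the paper, whose definitions are not given in the abstract. The function names, the seed, and the iteration budget are illustrative assumptions.

```python
import random


def lotz(x):
    """Return (number of leading ones, number of trailing zeros) of a bit tuple."""
    n = len(x)
    leading_ones = next((i for i, b in enumerate(x) if b == 0), n)
    trailing_zeros = next((i for i, b in enumerate(reversed(x)) if b == 1), n)
    return (leading_ones, trailing_zeros)


def weakly_dominates(f, g):
    """f is at least as good as g in every (maximised) objective."""
    return all(a >= b for a, b in zip(f, g))


def gsemo(n, iterations, seed=0):
    """Plain GSEMO with standard bit mutation; returns {solution: objectives}."""
    rng = random.Random(seed)
    x = tuple(rng.randint(0, 1) for _ in range(n))
    pop = {x: lotz(x)}
    for _ in range(iterations):
        parent = rng.choice(list(pop))
        # Flip each bit independently with probability 1/n.
        child = tuple(b ^ (rng.random() < 1.0 / n) for b in parent)
        fc = lotz(child)
        # Reject the child if some member strictly dominates it.
        if any(weakly_dominates(f, fc) and f != fc for f in pop.values()):
            continue
        # Otherwise drop every member the child weakly dominates, then add it.
        pop = {y: f for y, f in pop.items() if not weakly_dominates(fc, f)}
        pop[child] = fc
    return pop


population = gsemo(n=5, iterations=20000)
front = sorted(population.values())
```

For this function the Pareto-optimal solutions are the strings of the form $1^i0^{n-i}$, so a converged population holds $n+1$ mutually non-dominated objective vectors whose two entries sum to $n$.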

Sampling-based Pareto Optimization for Chance-constrained Monotone Submodular Problems

Apr 18, 2024

Abstract: Recently, surrogate functions based on tail inequalities were developed to evaluate chance constraints in the context of evolutionary computation, and several Pareto optimization algorithms using these surrogates were successfully applied in optimizing chance-constrained monotone submodular problems. However, the difference in performance between algorithms using the surrogates and those employing the direct sampling-based evaluation remains unclear. In this paper, a sampling-based method is proposed to directly evaluate the chance constraint. Furthermore, to address the problems with more challenging settings, an enhanced GSEMO algorithm integrated with an adaptive sliding window, called ASW-GSEMO, is introduced. In the experiments, the ASW-GSEMO employing the sampling-based approach is tested on the chance-constrained version of the maximum coverage problem with different settings. Its results are compared with those from other algorithms using different surrogate functions. The experimental findings indicate that the ASW-GSEMO with the sampling-based evaluation approach outperforms other algorithms, highlighting that the performances of algorithms using different evaluation methods are comparable. Additionally, the behaviors of ASW-GSEMO are visualized to explain the distinctions between it and the algorithms utilizing the surrogate functions.
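
The contrast between a tail-inequality surrogate and direct sampling can be sketched as follows. This is a minimal illustration, not the paper's evaluation procedure: it assumes independent, normally distributed element weights and uses the one-sided Chebyshev (Cantelli) inequality as the surrogate. The function names, sample count, and numbers are hypothetical.

```python
import random


def sampled_violation_probability(means, stds, selection, bound,
                                  samples=10_000, seed=0):
    """Monte Carlo estimate of Pr[total weight of selected elements > bound].

    Assumes independent normal weights (an assumption of this sketch).
    """
    rng = random.Random(seed)
    chosen = [(m, s) for m, s, take in zip(means, stds, selection) if take]
    violations = 0
    for _ in range(samples):
        total = sum(rng.gauss(m, s) for m, s in chosen)
        if total > bound:
            violations += 1
    return violations / samples


def cantelli_violation_bound(means, stds, selection, bound):
    """One-sided Chebyshev upper bound on Pr[total weight > bound]."""
    mu = sum(m for m, take in zip(means, selection) if take)
    var = sum(s * s for s, take in zip(stds, selection) if take)
    slack = bound - mu
    if slack <= 0:
        return 1.0  # the inequality gives no information in this case
    return var / (var + slack * slack)


means = [1.0, 2.0, 4.0]
stds = [1.0, 1.0, 0.5]
selection = [True, True, False]  # select the first two elements
estimate = sampled_violation_probability(means, stds, selection, bound=5.0)
surrogate = cantelli_violation_bound(means, stds, selection, bound=5.0)
```

A solution would be accepted when the chosen quantity (`estimate` or `surrogate`) does not exceed the allowed violation probability. Because the surrogate is a worst-case bound over all distributions with the given mean and variance, it is usually more conservative than the sampled estimate.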
