Andreas Hauptmann

Learned iterative networks: An operator learning perspective

Dec 09, 2025

Abstract:Learned image reconstruction has become a pillar in computational imaging and inverse problems. Among the most successful approaches are learned iterative networks, which are formulated by unrolling classical iterative optimisation algorithms for solving variational problems. While the underlying algorithm is usually formulated in the functional analytic setting, learned approaches are often viewed as purely discrete. In this chapter we present a unified operator view for learned iterative networks. Specifically, we formulate a learned reconstruction operator, defining how to compute, and separately the learning problem, which defines what to compute. In this setting we present common approaches and show that many approaches are closely related in their core. We review linear as well as nonlinear inverse problems in this framework and present a short numerical study to conclude.
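As a rough illustration of the separation described in this abstract, one common way to write a learned iterative network is shown below. The notation is an assumption made for this sketch and is not taken from the chapter: $A$ is the forward operator, $A^{*}$ its adjoint, $y$ the measured data, $\Lambda_{\theta_k}$ a learned update with parameters $\theta_k$, and $N$ the number of unrolled iterations. The first line is a reconstruction operator ("how to compute"), here an unrolled gradient-type scheme; the second is one possible supervised learning problem ("what to compute").

```latex
% Assumed notation, illustrative only: unrolled gradient-type scheme with N learned updates
x_{k+1} = \Lambda_{\theta_k}\bigl(x_k,\; A^{*}(A x_k - y)\bigr), \qquad k = 0, \dots, N-1,
\qquad \mathcal{R}_{\theta}(y) := x_N

% One possible learning problem: supervised training on pairs (x, y)
\min_{\theta} \; \mathbb{E}_{(x, y)} \bigl\| \mathcal{R}_{\theta}(y) - x \bigr\|^{2}
```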

Towards robust quantitative photoacoustic tomography via learned iterative methods

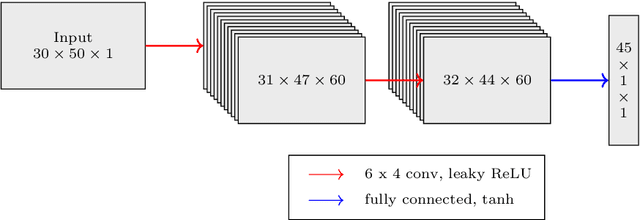

Oct 31, 2025

Abstract:Photoacoustic tomography (PAT) is a medical imaging modality that can provide high-resolution tissue images based on the optical absorption. Classical reconstruction methods for quantifying the absorption coefficients rely on sufficient prior information to overcome noisy and imperfect measurements. As these methods utilize computationally expensive forward models, the computation becomes slow, limiting their potential for time-critical applications. As an alternative approach, deep learning-based reconstruction methods have been established for faster and more accurate reconstructions. However, most of these methods rely on a large amount of training data, which is rarely available in practice. In this work, we adopt the model-based learned iterative approach for use in Quantitative PAT (QPAT), in which additional information from the model is iteratively provided to the updating networks, allowing better generalizability with scarce training data. We compare the performance of different learned updates based on gradient descent, Gauss-Newton, and Quasi-Newton methods. The learning tasks are formulated as greedy, requiring iterate-wise optimality, as well as end-to-end, where all networks are trained jointly. The implemented methods are tested with ideal simulated data as well as against a digital twin dataset that emulates scarce training data and high modeling error.

Deep Learning Based Reconstruction Methods for Electrical Impedance Tomography

Aug 08, 2025

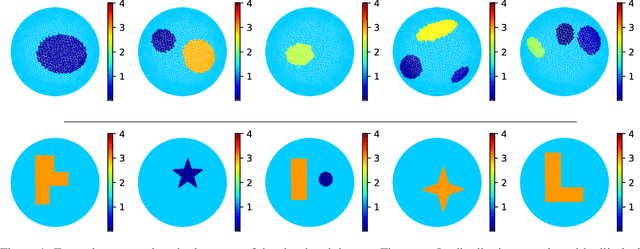

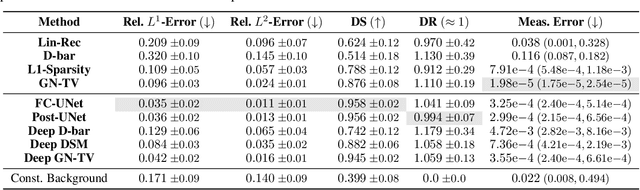

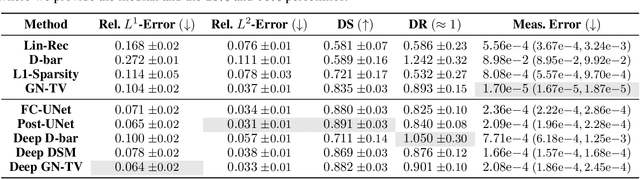

Abstract:Electrical Impedance Tomography (EIT) is a powerful imaging modality widely used in medical diagnostics, industrial monitoring, and environmental studies. The EIT inverse problem is about inferring the internal conductivity distribution of the concerned object from the voltage measurements taken on its boundary. This problem is severely ill-posed, and requires advanced computational approaches for accurate and reliable image reconstruction. Recent innovations in both model-based reconstruction and deep learning have driven significant progress in the field. In this review, we explore learned reconstruction methods that employ deep neural networks for solving the EIT inverse problem. The discussion focuses on the complete electrode model, one popular mathematical model for real-world applications of EIT. We compare a wide variety of learned approaches, including fully-learned, post-processing and learned iterative methods, with several conventional model-based reconstruction techniques, e.g., sparsity regularization, regularized Gauss-Newton iteration and level set method. The evaluation is based on three datasets: a simulated dataset of ellipses, an out-of-distribution simulated dataset, and the KIT4 dataset, including real-world measurements. Our results demonstrate that learned methods outperform model-based methods for in-distribution data but face challenges in generalization, where hybrid methods exhibit a good balance of accuracy and adaptability.

Digital twins enable full-reference quality assessment of photoacoustic image reconstructions

May 30, 2025

Abstract:Quantitative comparison of the quality of photoacoustic image reconstruction algorithms remains a major challenge. No-reference image quality measures are often inadequate, but full-reference measures require access to an ideal reference image. While the ground truth is known in simulations, it is unknown in vivo, or in phantom studies, as the reference depends on both the phantom properties and the imaging system. We tackle this problem by using numerical digital twins of tissue-mimicking phantoms and the imaging system to perform a quantitative calibration to reduce the simulation gap. The contributions of this paper are two-fold: First, we use this digital-twin framework to compare multiple state-of-the-art reconstruction algorithms. Second, among these is a Fourier transform-based reconstruction algorithm for circular detection geometries, which we test on experimental data for the first time. Our results demonstrate the usefulness of digital phantom twins by enabling assessment of the accuracy of the numerical forward model and enabling comparison of image reconstruction schemes with full-reference image quality assessment. We show that the Fourier transform-based algorithm yields results comparable to those of iterative time reversal, but at a lower computational cost. All data and code are publicly available on Zenodo: https://doi.org/10.5281/zenodo.15388429.

Learned enclosure method for experimental EIT data

Apr 15, 2025

Abstract:Electrical impedance tomography (EIT) is a non-invasive imaging method with diverse applications, including medical imaging and non-destructive testing. The inverse problem of reconstructing internal electrical conductivity from boundary measurements is nonlinear and highly ill-posed, making it difficult to solve accurately. In recent years, there has been growing interest in combining analytical methods with machine learning to solve inverse problems. In this paper, we propose a method for estimating the convex hull of inclusions from boundary measurements by combining the enclosure method proposed by Ikehata with neural networks. We demonstrate its performance using experimental data. Compared to the classical enclosure method with least squares fitting, the learned convex hull achieves superior performance on both simulated and experimental data.

Self-Supervised Denoiser Framework

Nov 29, 2024

Abstract:Reconstructing images using Computed Tomography (CT) in an industrial context leads to specific challenges that differ from those encountered in other areas, such as clinical CT. Indeed, non-destructive testing with industrial CT will often involve scanning multiple similar objects while maintaining high throughput, requiring short scanning times, which is not a relevant concern in clinical CT. Under-sampling the tomographic data (sinograms) is a natural way to reduce the scanning time at the cost of image quality since the latter depends on the number of measurements. In such a scenario, post-processing techniques are required to compensate for the image artifacts induced by the sinogram sparsity. We introduce the Self-supervised Denoiser Framework (SDF), a self-supervised training method that leverages pre-training on highly sampled sinogram data to enhance the quality of images reconstructed from undersampled sinogram data. The main contribution of SDF is that it proposes to train an image denoiser in the sinogram space by setting the learning task as the prediction of one sinogram subset from another. As such, it does not require ground-truth image data, leverages the abundant data modality in CT, the sinogram, and can drastically enhance the quality of images reconstructed from a fraction of the measurements. We demonstrate that SDF produces better image quality, in terms of peak signal-to-noise ratio, than other analytical and self-supervised frameworks in both 2D fan-beam and 3D cone-beam CT settings. Moreover, we show that the enhancement provided by SDF carries over when fine-tuning the image denoiser on a few examples, making it a suitable pre-training technique in a context where there is little high-quality image data. Our results are established on experimental datasets, making SDF a strong candidate for being the building block of foundational image-enhancement models in CT.

Model-based reconstructions for quantitative imaging in photoacoustic tomography

Nov 27, 2023

Abstract:The reconstruction task in photoacoustic tomography can vary considerably depending on measured targets, geometry, and especially the quantity we want to recover. Specifically, as the signal is generated due to the coupling of light and sound by the photoacoustic effect, we have the possibility to recover acoustic as well as optical tissue parameters. This is referred to as quantitative imaging, i.e., correct recovery of physical parameters and not just a qualitative image. In this chapter, we aim to give an overview of established reconstruction techniques in photoacoustic tomography. We start with modelling of the optical and acoustic phenomena, necessary for a reliable recovery of quantitative values. Furthermore, we give an overview of approaches for the tomographic reconstruction problem with an emphasis on the recovery of quantitative values, from direct and fast analytic approaches to computationally involved optimisation-based techniques and recent data-driven approaches.

Inverse Problems with Learned Forward Operators

Nov 21, 2023

Abstract:Solving inverse problems requires knowledge of the forward operator, but accurate models can be computationally expensive and hence cheaper variants are desired that do not compromise reconstruction quality. This chapter reviews reconstruction methods in inverse problems with learned forward operators that follow two different paradigms. The first one is completely agnostic to the forward operator and learns its restriction to the subspace spanned by the training data. The framework of regularisation by projection is then used to find a reconstruction. The second one uses a simplified model of the physics of the measurement process and only relies on the training data to learn a model correction. We present the theory of these two approaches and compare them numerically. A common theme emerges: both methods require, or at least benefit from, training data not only for the forward operator, but also for its adjoint.

Electrical Impedance Tomography: A Fair Comparative Study on Deep Learning and Analytic-based Approaches

Oct 28, 2023

Abstract:Electrical Impedance Tomography (EIT) is a powerful imaging technique with diverse applications, e.g., medical diagnosis, industrial monitoring, and environmental studies. The EIT inverse problem is about inferring the internal conductivity distribution of an object from measurements taken on its boundary. It is severely ill-posed, necessitating advanced computational methods for accurate image reconstructions. Recent years have witnessed significant progress, driven by innovations in analytic-based approaches and deep learning. This review explores techniques for solving the EIT inverse problem, focusing on the interplay between contemporary deep learning-based strategies and classical analytic-based methods. Four state-of-the-art deep learning algorithms are rigorously examined, harnessing the representational capabilities of deep neural networks to reconstruct intricate conductivity distributions. In parallel, two analytic-based methods, rooted in mathematical formulations and regularisation techniques, are dissected for their strengths and limitations. These methodologies are evaluated through various numerical experiments, encompassing diverse scenarios that reflect real-world complexities. A suite of performance metrics is employed to assess the efficacy of these methods. These metrics collectively provide a nuanced understanding of the methods' ability to capture essential features and delineate complex conductivity patterns. One novel feature of the study is the incorporation of variable conductivity scenarios, introducing a level of heterogeneity that mimics textured inclusions. This departure from uniform conductivity assumptions mimics realistic scenarios where tissues or materials exhibit spatially varying electrical properties. Exploring how each method responds to such variable conductivity scenarios opens avenues for understanding their robustness and adaptability.

Convergent regularization in inverse problems and linear plug-and-play denoisers

Jul 18, 2023

Abstract:Plug-and-play (PnP) denoising is a popular iterative framework for solving imaging inverse problems using off-the-shelf image denoisers. Its empirical success has motivated a line of research that seeks to understand the convergence of PnP iterates under various assumptions on the denoiser. While a significant amount of research has gone into establishing the convergence of the PnP iteration for different regularity conditions on the denoisers, not much is known about the asymptotic properties of the converged solution as the noise level in the measurement tends to zero, i.e., whether PnP methods are provably convergent regularization schemes under reasonable assumptions on the denoiser. This paper serves two purposes: first, we provide an overview of the classical regularization theory in inverse problems and survey a few notable recent data-driven methods that are provably convergent regularization schemes. We then continue to discuss PnP algorithms and their established convergence guarantees. Subsequently, we consider PnP algorithms with linear denoisers and propose a novel spectral filtering technique to control the strength of regularization arising from the denoiser. Further, by relating the implicit regularization of the denoiser to an explicit regularization functional, we rigorously show that PnP with linear denoisers leads to a convergent regularization scheme. More specifically, we prove that in the limit as the noise vanishes, the PnP reconstruction converges to the minimizer of a regularization potential subject to the solution satisfying the noiseless operator equation. The theoretical analysis is corroborated by numerical experiments for the classical inverse problem of tomographic image reconstruction.
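To make the PnP idea in this abstract concrete, the following is a minimal sketch of a gradient-type PnP iteration in which the denoiser is a fixed linear map. Everything here is an assumption for illustration: the function name `pnp_linear`, the matrix denoiser `W` (a simple smoothing matrix), the random forward matrix `A`, and the step-size choice are hypothetical, and the sketch does not reproduce the paper's spectral filtering technique or its convergence analysis.

```python
import numpy as np

def pnp_linear(A, y, W, step, n_iter=200):
    """Illustrative PnP iteration: a gradient step on the data fit, then a linear denoiser W."""
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)   # gradient of 0.5 * ||A x - y||^2
        x = W @ (x - step * grad)  # the denoiser takes the place of a proximal step
    return x

# Hypothetical toy problem (not from the paper): underdetermined linear measurements
rng = np.random.default_rng(0)
n, m = 32, 24
A = rng.standard_normal((m, n)) / np.sqrt(n)
x_true = np.sin(np.linspace(0.0, np.pi, n))
y = A @ x_true + 0.01 * rng.standard_normal(m)

# Assumed linear denoiser: inverse of (I + 0.5 * 1D Laplacian), a symmetric smoothing matrix
lap = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
W = np.linalg.inv(np.eye(n) + 0.5 * lap)

step = 1.0 / np.linalg.norm(A, 2) ** 2  # step size from the spectral norm of A
x_rec = pnp_linear(A, y, W, step)
print("data residual:", np.linalg.norm(A @ x_rec - y))
```

With `A` and `W` both set to the identity and `step = 1.0`, the iteration returns `y` after one step, which is a quick sanity check of the update rule.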
