Emilien Valat

Improving the Generalisation of Learned Reconstruction Frameworks

Nov 16, 2025

Abstract: Ensuring proper generalization is a critical challenge in applying data-driven methods for solving inverse problems in imaging, as neural networks reconstructing an image must perform well across varied datasets and acquisition geometries. In X-ray Computed Tomography (CT), convolutional neural networks (CNNs) are widely used to filter the projection data but are ill-suited for this task as they apply grid-based convolutions to the sinogram, which inherently lies on a line manifold, not a regular grid. The CNNs, unaware of the geometry, are implicitly tied to it and require an excessive number of parameters as they must infer the relations between measurements from the data rather than from prior information. The contribution of this paper is twofold. First, we introduce a graph data structure to represent CT acquisition geometries and tomographic data, providing a detailed explanation of the graph's structure for circular, cone-beam geometries. Second, we propose GLM, a hybrid neural network architecture that leverages both graph and grid convolutions to process tomographic data. We demonstrate that GLM outperforms CNNs when performance is quantified in terms of structural similarity and peak signal-to-noise ratio, despite the fact that GLM uses only a fraction of the trainable parameters. Compared to CNNs, GLM also requires significantly less training time and memory, and its memory requirements scale better. Crucially, GLM demonstrates robust generalization to unseen variations in the acquisition geometry, such as when training only on fully sampled CT data and then testing on sparse-view CT data.
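
To make the idea of a graph over tomographic measurements concrete, here is a minimal sketch. It is not the graph structure defined in the paper: the node indexing, the function name `build_sinogram_graph`, and the choice of edges (adjacent detector pixels plus the same pixel at the next angle, wrapping around a full circular scan) are all assumptions made for illustration.

```python
def build_sinogram_graph(n_angles, n_detectors):
    """Return an edge list for a toy measurement graph (illustrative assumption).

    Each measurement (angle a, detector pixel d) is a node with index
    a * n_detectors + d. Edges link neighbouring detector pixels within a
    view, and the same pixel in consecutive views of a circular trajectory.
    """
    if n_angles < 3 or n_detectors < 1:
        raise ValueError("need at least 3 angles and 1 detector pixel")
    edges = []
    for a in range(n_angles):
        for d in range(n_detectors):
            node = a * n_detectors + d
            # Edge to the next pixel on the same detector row.
            if d + 1 < n_detectors:
                edges.append((node, node + 1))
            # Edge to the same pixel at the next angle; the modulo closes
            # the circle so the last view connects back to the first.
            next_angle = (a + 1) % n_angles
            edges.append((node, next_angle * n_detectors + d))
    return edges


edges = build_sinogram_graph(n_angles=4, n_detectors=3)
```

Because the edges are derived from the geometry rather than from a pixel grid, changing the number of angles changes the graph but not the rule that builds it, which is the intuition behind geometry-aware processing.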

Self-Supervised Denoiser Framework

Nov 29, 2024

Abstract: Reconstructing images using Computed Tomography (CT) in an industrial context leads to specific challenges that differ from those encountered in other areas, such as clinical CT. Indeed, non-destructive testing with industrial CT will often involve scanning multiple similar objects while maintaining high throughput, requiring short scanning times, which is not a relevant concern in clinical CT. Under-sampling the tomographic data (sinograms) is a natural way to reduce the scanning time at the cost of image quality since the latter depends on the number of measurements. In such a scenario, post-processing techniques are required to compensate for the image artifacts induced by the sinogram sparsity. We introduce the Self-supervised Denoiser Framework (SDF), a self-supervised training method that leverages pre-training on highly sampled sinogram data to enhance the quality of images reconstructed from undersampled sinogram data. The main contribution of SDF is that it proposes to train an image denoiser in the sinogram space by setting the learning task as the prediction of one sinogram subset from another. As such, it does not require ground-truth image data, leverages the abundant data modality in CT, the sinogram, and can drastically enhance the quality of images reconstructed from a fraction of the measurements. We demonstrate that SDF produces better image quality, in terms of peak signal-to-noise ratio, than other analytical and self-supervised frameworks in both 2D fan-beam and 3D cone-beam CT settings. Moreover, we show that the enhancement provided by SDF carries over when fine-tuning the image denoiser on a few examples, making it a suitable pre-training technique in a context where there is little high-quality image data. Our results are established on experimental datasets, making SDF a strong candidate for being the building block of foundational image-enhancement models in CT.
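
The learning task described above, predicting one sinogram subset from another, needs the measured views to be partitioned first. The sketch below shows one plausible partition, an even/odd interleaving of projection angles. The function name `split_views` and the interleaving rule are assumptions for illustration; the abstract does not state how SDF chooses its subsets.

```python
def split_views(sinogram):
    """Split a sinogram into two interleaved angular subsets.

    `sinogram` is a list of projections, one per acquisition angle.
    Even-indexed views act as the network input and odd-indexed views as
    the prediction target (an illustrative choice, not the paper's rule).
    """
    if len(sinogram) < 2:
        raise ValueError("need at least two projections to form two subsets")
    inputs = sinogram[0::2]   # views 0, 2, 4, ...
    targets = sinogram[1::2]  # views 1, 3, 5, ...
    return inputs, targets


# Five single-pixel projections, for demonstration only.
inputs, targets = split_views([[0.0], [1.0], [2.0], [3.0], [4.0]])
```

Both subsets come from the same scan, so no ground-truth image is involved at any point, which is what makes the setup self-supervised.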

HandCT: hands-on computational dataset for X-Ray Computed Tomography and Machine-Learning

Apr 17, 2023

Abstract: Machine-learning methods rely on sufficiently large datasets to learn data distributions. They are widely used in research in X-Ray Computed Tomography, from low-dose scan denoising to optimisation of the reconstruction process. The lack of datasets prevents the scalability of these methods to realistic 3D problems. We develop a 3D procedural dataset in order to produce samples for data-driven algorithms. It is made of a meshed model of a left hand and a script to randomly change its anatomic properties and pose whilst conserving realistic features. This open-source solution relies on the freeware Blender and its Python core. Blender handles the modelling, the mesh and the generation of the hand's pose, whilst Python processes file format conversion from obj files to matrices and provides functions to scale and center the volume for further processing. Dataset availability and quality drive research in machine-learning. We design a dataset that weighs a few megabytes, provides truthful samples and proposes continuous enhancements using version control. We anticipate this work to be a starting point for anatomically accurate procedural datasets, for instance by adding more internal features and fine-tuning their X-Ray attenuation properties.
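
The scale-and-center step mentioned above can be sketched as follows. This is not the HandCT code: the function name `center_and_scale`, the `target_extent` parameter, and the choice to operate on mesh vertices rather than on a voxel matrix are assumptions made for illustration.

```python
def center_and_scale(vertices, target_extent=1.0):
    """Center a 3D point set on the origin and scale it uniformly.

    `vertices` is a list of (x, y, z) tuples. The bounding box is moved so
    its midpoint sits at the origin, then scaled so its longest side
    equals `target_extent`. Hypothetical helper, not part of HandCT.
    """
    if not vertices:
        raise ValueError("vertices must not be empty")
    mins = [min(v[i] for v in vertices) for i in range(3)]
    maxs = [max(v[i] for v in vertices) for i in range(3)]
    centre = [(lo + hi) / 2 for lo, hi in zip(mins, maxs)]
    largest_side = max(hi - lo for lo, hi in zip(mins, maxs))
    if largest_side == 0:
        raise ValueError("all vertices coincide; cannot scale")
    scale = target_extent / largest_side
    return [
        tuple((v[i] - centre[i]) * scale for i in range(3))
        for v in vertices
    ]


normalised = center_and_scale([(0.0, 0.0, 0.0), (2.0, 4.0, 2.0)])
```

Using a single uniform scale factor preserves the proportions of the hand, which matters if the samples are meant to stay anatomically realistic.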

Data-Driven Interpolation for Super-Scarce X-Ray Computed Tomography

May 16, 2022

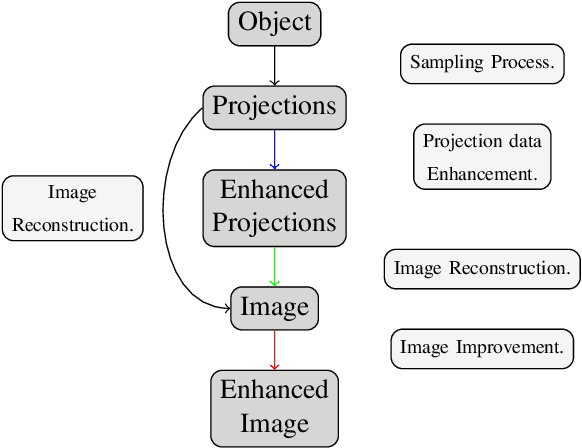

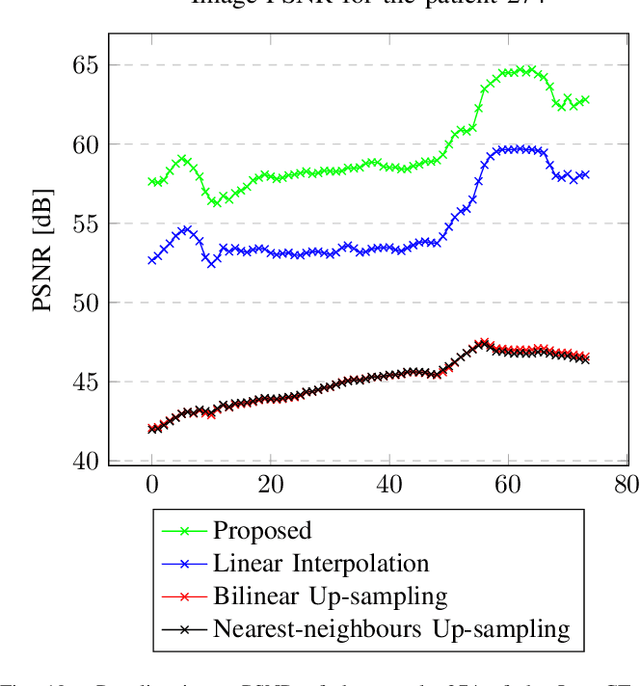

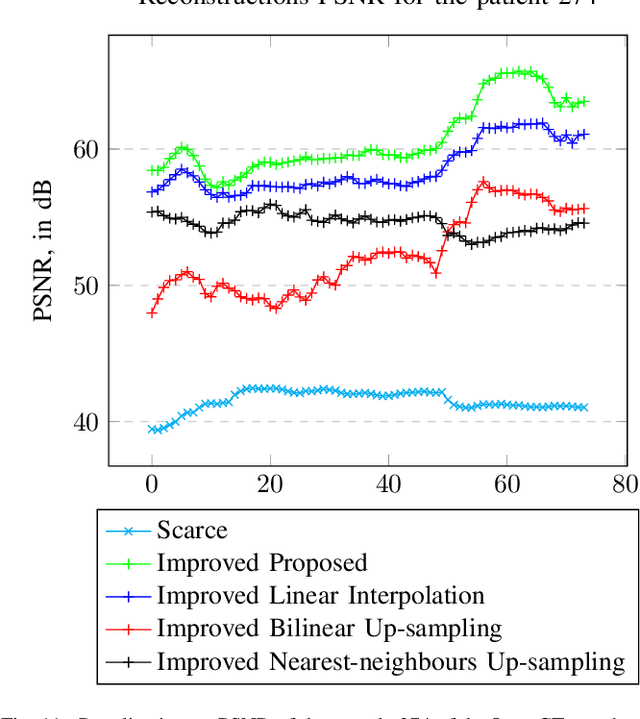

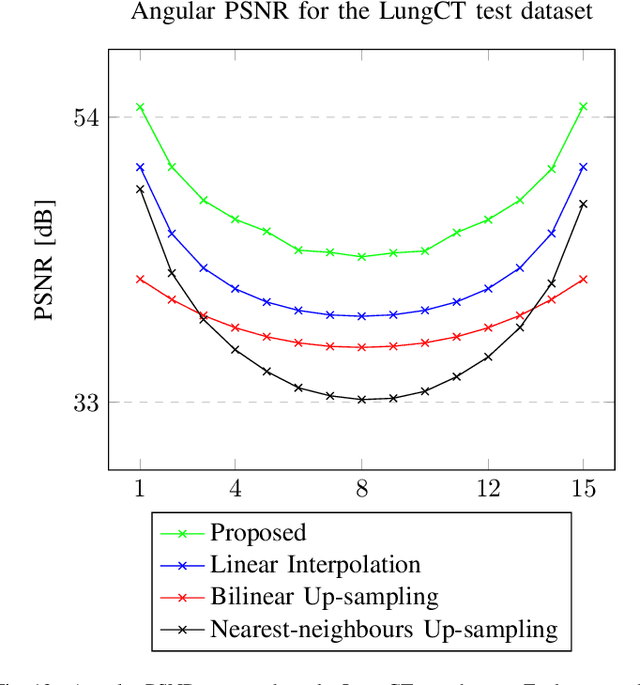

Abstract: We address the problem of reconstructing X-Ray tomographic images from scarce measurements by interpolating missing acquisitions using a self-supervised approach. To do so, we train shallow neural networks to combine two neighbouring acquisitions into an estimated measurement at an intermediate angle. This procedure yields an enhanced sequence of measurements that can be reconstructed using standard methods, or further enhanced using regularisation approaches. Unlike methods that improve the sequence of acquisitions using an initial deterministic interpolation followed by machine-learning enhancement, we focus on inferring one measurement at a time. This allows the method to scale to 3D, the computation to be faster and, crucially, the interpolation to be significantly better than current methods, when they exist. We also establish that a sequence of measurements must be processed as such, rather than as an image or a volume. We do so by comparing interpolation and up-sampling methods, and find that the latter significantly underperform. We compare the performance of the proposed method against deterministic interpolation and up-sampling procedures and find that it outperforms them, even when used jointly with a state-of-the-art projection-data enhancement approach using machine-learning. These results are obtained for 2D and 3D imaging, on large biomedical datasets, in both projection space and image space.
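
For reference, the kind of deterministic interpolation that the learned method is compared against can be sketched in a few lines. This is a plain linear midpoint baseline, not the proposed neural network; the function name `interpolate_midpoints` and the list-of-lists data layout are assumptions for illustration.

```python
def interpolate_midpoints(projections):
    """Insert a linearly interpolated view between each pair of neighbours.

    `projections` is an angularly ordered list of equal-length lists of
    detector readings. The estimate at the intermediate angle is the
    element-wise mean of the two neighbours (deterministic baseline only).
    """
    if not projections:
        raise ValueError("projections must not be empty")
    enhanced = []
    for left, right in zip(projections, projections[1:]):
        enhanced.append(list(left))
        # Estimated measurement halfway between the two acquired angles.
        enhanced.append([(l + r) / 2 for l, r in zip(left, right)])
    enhanced.append(list(projections[-1]))
    return enhanced


dense = interpolate_midpoints([[0.0, 2.0], [2.0, 4.0]])
```

A learned interpolator would replace the element-wise mean with a small network that takes the same two neighbouring views as input, while keeping the one-estimate-per-pair structure.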

Sinogram Enhancement with Generative Adversarial Networks using Shape Priors

Feb 01, 2022

Abstract: Compensating for scarce measurements by inferring them from computational models is a way to address ill-posed inverse problems. We tackle Limited Angle Tomography by completing the set of acquisitions using a generative model and prior knowledge about the scanned object. Using a Generative Adversarial Network as the model and Computer-Assisted Design data as the shape prior, we demonstrate a quantitative and qualitative advantage of our technique over other state-of-the-art methods. Inferring a substantial number of consecutive missing measurements, we offer an alternative to other image inpainting techniques that fall short of providing a satisfying answer to our research question: can X-Ray exposure be reduced by using generative models to infer lacking measurements?
