Thomas Blumensath

Stereo X-ray tomography on deformed object tracking

Apr 30, 2025

Abstract: X-ray computed tomography is a powerful tool for volumetric imaging, but requires the collection of a large number of low-noise projection images, which is often too time consuming, limiting its applicability. In our previous work \cite{shang2023stereo}, we proposed a stereo X-ray tomography system to map the 3D position of fiducial markers using only two projections of a static volume. In dynamic imaging settings, where objects undergo deformations during imaging, this static method can be extended by utilizing additional temporal information. We thus extend the method to track the deformation of fiducial markers in 3D space, where we use knowledge of the initial object shape as prior information, improving the prediction of the evolution of its deformed state over time. In particular, knowledge of the initial object's stereo projections is shown to improve the method's robustness to noise when detecting fiducial marker locations in the projections of the deformed objects. Furthermore, after feature detection, by using the features' initial 3D position information in the undeformed object, we can also demonstrate improvements in the 3D mapping of the deformed features. Using a range of deformed 3D objects, this new approach is shown to be able to track fiducial markers in noisy stereo tomography images with subpixel accuracy.

Imaging on the Edge: Mapping Object Corners and Edges with Stereo X-ray Tomography

Apr 29, 2025

Abstract: X-ray computed tomography is a powerful tool for volumetric imaging, where three-dimensional (3D) images are generated from a large number of individual X-ray projection images. Collecting the required number of low-noise projection images is, however, time-consuming, and so the technique is not currently applicable when spatial information needs to be collected with high temporal resolution, such as in the study of dynamic processes. In our previous work, inspired by stereo vision, we developed stereo X-ray imaging methods that operate with only two X-ray projection images. Previously we have shown how this allowed us to map point and line fiducial markers into 3D space at significantly faster temporal resolutions. In this paper, we make two further contributions. Firstly, instead of utilising internal fiducial markers, we demonstrate the applicability of the method to the 3D mapping of sharp object corners, a problem of interest in measuring the deformation of manufactured components under different loads. Furthermore, we demonstrate how the approach can be applied to real stereo X-ray data, even in settings where we do not have the annotated real training data that was required for the training of our previous Machine Learning approach. This is achieved by substituting the real data with a relatively simple synthetic training dataset designed to mimic key aspects of the real data.

Invertible Low-Dimensional Modelling of X-ray Absorption Spectra for Potential Applications in Spectral X-ray Imaging

Jul 10, 2023

Abstract: X-ray interaction with matter is an energy-dependent process that is contingent on the atomic structure of the constituent material elements. The most advanced models to capture this relationship currently rely on Monte Carlo (MC) simulations. Whilst these models are very accurate, they are of limited use in many problems in spectral X-ray imaging, such as data compression, noise removal, spectral estimation, and the quantitative measurement of material compositions, as these applications typically require the efficient inversion of the model, that is, they require the estimation of the best model parameters for a given spectral measurement. Current models that can be easily inverted, however, typically only work when modelling spectra in regions away from their K-edges, so they have limited utility when modelling a wider range of materials. In this paper, we thus propose a novel, non-linear model that combines a deep neural network autoencoder with an optimal linear model based on the Singular Value Decomposition (SVD). We compare our new method to other alternative linear and non-linear approaches, a sparse model and an alternative deep learning model. We demonstrate the advantages of our method over traditional models, especially when modelling X-ray absorption spectra that contain K-edges in the energy range of interest.
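As a rough illustration of the linear part of such a model only (not the autoencoder, and not the authors' implementation), the sketch below fits a rank-`k` SVD basis to a set of spectra and uses it as an easily invertible encoder and decoder. The data are synthetic random stand-ins, and the names `encode`, `decode`, `basis`, and `k` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in data (an assumption, not real absorption spectra):
# 200 "spectra" over 64 energy bins, built from 3 hidden components plus noise.
components = rng.normal(size=(3, 64))
weights = rng.normal(size=(200, 3))
spectra = weights @ components + 0.01 * rng.normal(size=(200, 64))

# Fit the linear model: centre the data and keep the k leading right singular vectors.
mean = spectra.mean(axis=0)
U, S, Vt = np.linalg.svd(spectra - mean, full_matrices=False)
k = 3
basis = Vt[:k]  # shape (k, 64), orthonormal rows


def encode(x):
    """Model inversion: least-squares parameters for the given spectra."""
    return (x - mean) @ basis.T


def decode(z):
    """Forward model: spectra generated from low-dimensional parameters."""
    return z @ basis + mean


reconstruction = decode(encode(spectra))
relative_error = np.linalg.norm(reconstruction - spectra) / np.linalg.norm(spectra)
print(f"relative reconstruction error: {relative_error:.4f}")
```

Because the basis rows are orthonormal, inversion is a single matrix product, which is what makes a linear model of this kind cheap to invert. A linear basis fitted this way would not be expected to capture sharp, material-dependent features such as K-edges well, which is the gap the non-linear component is meant to address.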

Stereo X-ray Tomography

Feb 26, 2023

Abstract: X-ray tomography is a powerful volumetric imaging technique, but detailed three-dimensional (3D) imaging requires the acquisition of a large number of individual X-ray images, which is time consuming. For applications where spatial information needs to be collected quickly, for example, when studying dynamic processes, standard X-ray tomography is therefore not applicable. Inspired by stereo vision, in this paper, we develop X-ray imaging methods that work with two X-ray projection images. In this setting, without the use of additional strong prior information, we no longer have enough information to fully recover the 3D tomographic images. However, up to a point, we are nevertheless able to extract spatial locations of point and line features. From stereo vision, it is well known that, for a known imaging geometry, once the same point is identified in two images taken from different directions, then the point's location in 3D space is exactly specified. The challenge is the matching of points between images. As X-ray transmission images are fundamentally different from the surface reflection images used in standard computer vision, we here develop a different feature identification and matching approach. In fact, once point-like features are identified, if there are limited points in the image, then they can often be matched exactly. Moreover, by utilising a third observation from an appropriate direction, matching becomes unique. Once matched, point locations in 3D space are easily computed using geometric considerations. Linear features, with clear end points, can be located using a similar approach.
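The geometric step described above can be sketched as follows, assuming a point-source geometry in which each matched feature defines a ray from the X-ray source to its detected position on the detector. This is a generic least-squares ray intersection, not necessarily the authors' formulation; the function name `triangulate` and the source and detector coordinates are hypothetical.

```python
import numpy as np


def triangulate(sources, detector_points):
    """Return the 3D point closest (in least squares) to all source-to-detector rays."""
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for s, d in zip(sources, detector_points):
        s = np.asarray(s, dtype=float)
        u = np.asarray(d, dtype=float) - s
        u /= np.linalg.norm(u)           # unit direction of the ray
        P = np.eye(3) - np.outer(u, u)   # projector onto the plane orthogonal to the ray
        A += P
        b += P @ s
    # Solvable whenever at least two rays are not parallel.
    return np.linalg.solve(A, b)


# Hypothetical set-up: one feature seen from two source positions.
point = np.array([1.0, 2.0, 3.0])
sources = [np.array([-100.0, 0.0, 0.0]), np.array([0.0, -100.0, 0.0])]
# Simulate noise-free detections by extending each source-to-point ray beyond the point.
detections = [s + 2.0 * (point - s) for s in sources]

estimate = triangulate(sources, detections)
print(estimate)
```

With noise-free detections the two rays intersect and the estimate coincides with the true point. With noisy detections the rays generally miss each other, and the same solve returns the point that minimises the summed squared distance to both rays.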

Data-Driven Interpolation for Super-Scarce X-Ray Computed Tomography

May 16, 2022

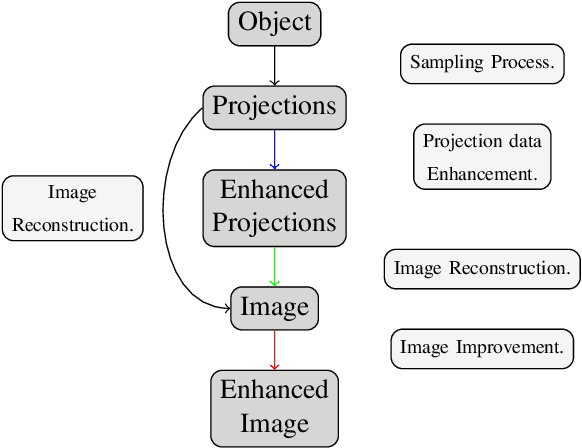

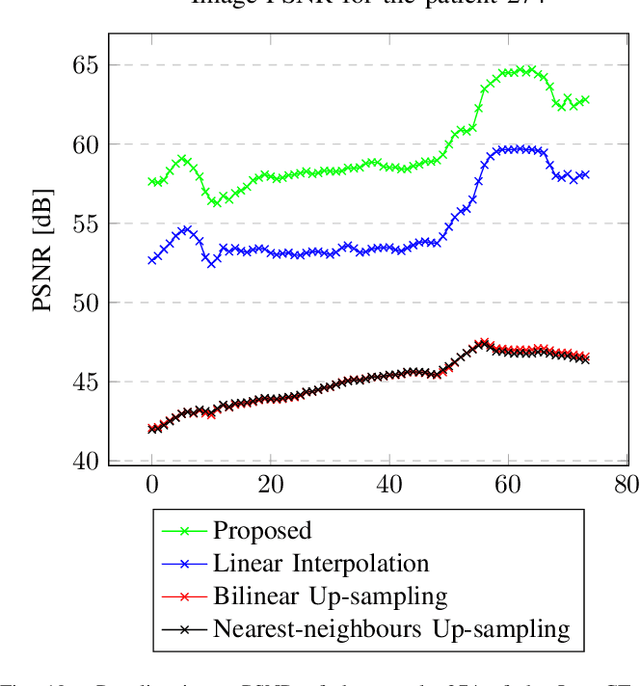

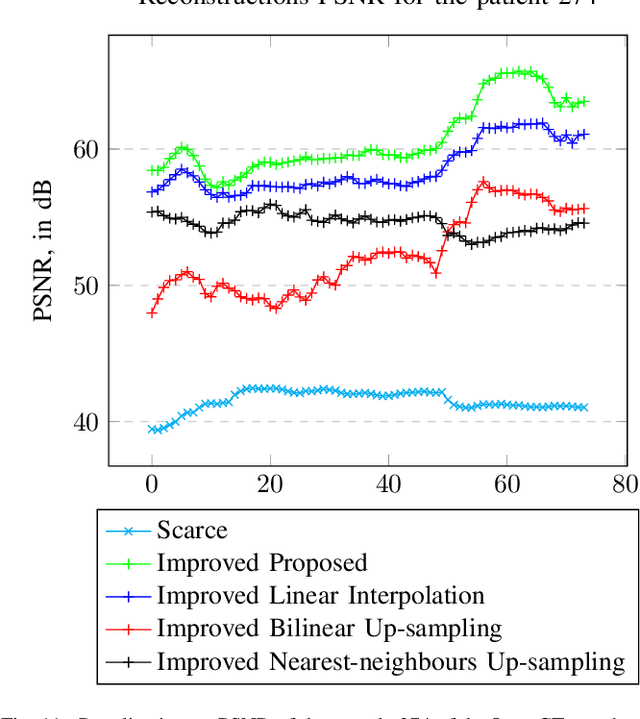

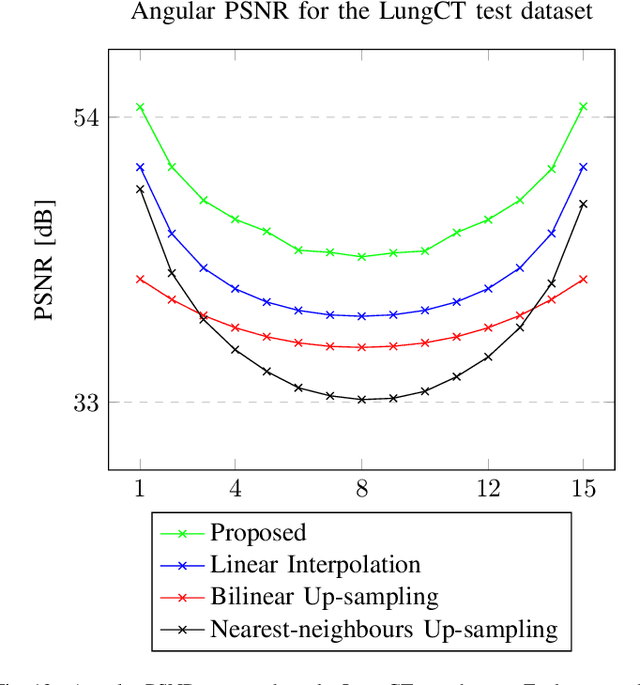

Abstract: We address the problem of reconstructing X-Ray tomographic images from scarce measurements by interpolating missing acquisitions using a self-supervised approach. To do so, we train shallow neural networks to combine two neighbouring acquisitions into an estimated measurement at an intermediate angle. This procedure yields an enhanced sequence of measurements that can be reconstructed using standard methods, or further enhanced using regularisation approaches. Unlike methods that improve the sequence of acquisitions using an initial deterministic interpolation followed by machine-learning enhancement, we focus on inferring one measurement at a time. This allows the method to scale to 3D, the computation to be faster and, crucially, the interpolation to be significantly better than the current methods, where they exist. We also establish that a sequence of measurements must be processed as such, rather than as an image or a volume. We do so by comparing interpolation and up-sampling methods, and find that the latter significantly under-perform. We compare the performance of the proposed method against deterministic interpolation and up-sampling procedures and find that it outperforms them, even when used jointly with a state-of-the-art projection-data enhancement approach using machine-learning. These results are obtained for 2D and 3D imaging, on large biomedical datasets, in both projection space and image space.
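For orientation, the sketch below shows the kind of deterministic interpolation the abstract uses as a point of comparison, not the proposed learned method: each missing intermediate projection is estimated as the average of its two neighbours. The function name `interpolate_midpoints` and the toy sinogram values are hypothetical.

```python
import numpy as np


def interpolate_midpoints(sinogram):
    """Insert the average of each pair of neighbouring projections between them.

    sinogram: array of shape (n_angles, n_detector_pixels), ordered by angle.
    Returns an array of shape (2 * n_angles - 1, n_detector_pixels).
    """
    n_angles, n_det = sinogram.shape
    out = np.empty((2 * n_angles - 1, n_det), dtype=float)
    out[0::2] = sinogram                                # keep measured projections
    out[1::2] = 0.5 * (sinogram[:-1] + sinogram[1:])    # estimated intermediate angles
    return out


# Toy example: 3 measured angles, 2 detector pixels.
sparse = np.array([[0.0, 2.0],
                   [4.0, 6.0],
                   [8.0, 2.0]])
dense = interpolate_midpoints(sparse)
print(dense)
```

In the approach the abstract describes, the fixed averaging step would be replaced by a shallow neural network that takes the same two neighbouring acquisitions as input and predicts the intermediate measurement.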

Sinogram Enhancement with Generative Adversarial Networks using Shape Priors

Feb 01, 2022

Abstract: Compensating scarce measurements by inferring them from computational models is a way to address ill-posed inverse problems. We tackle Limited Angle Tomography by completing the set of acquisitions using a generative model and prior knowledge about the scanned object. Using a Generative Adversarial Network as model and Computer-Assisted Design data as shape prior, we demonstrate a quantitative and qualitative advantage of our technique over other state-of-the-art methods. Inferring a substantial number of consecutive missing measurements, we offer an alternative to other image inpainting techniques that fall short of providing a satisfying answer to our research question: can X-Ray exposure be reduced by using generative models to infer lacking measurements?
