Andre Kaup

Overview of Variable Rate Coding in JPEG AI

Mar 20, 2025

Abstract: Empirical evidence has demonstrated that learning-based image compression can outperform classical compression frameworks. This has led to the ongoing standardization of learning-based image codecs, namely Joint Photographic Experts Group (JPEG) AI. The objective of JPEG AI is to enhance compression efficiency and provide a software- and hardware-friendly solution. Based on our research, JPEG AI represents the first standardization that can facilitate the implementation of a learned image codec on a mobile device. This article presents an overview of the variable rate coding functionality in JPEG AI, which includes three variable rate adaptations: a three-dimensional quality map, a fast bit rate matching algorithm, and a training strategy. The variable rate adaptations offer a continuous rate function up to 2.0 bpp, exhibiting a high level of performance, a flexible bit allocation between different color components, and a region of interest function for the specified use case. The evaluation of performance encompasses both objective and subjective results. With regard to the objective bit rate matching, the main profile with low complexity yielded a 13.1% BD-rate gain over VVC intra, while the high profile with high complexity achieved a 19.2% BD-rate gain over VVC intra. The BD-rate result is calculated as the mean of the seven perceptual metrics defined in the JPEG AI common test conditions. With respect to subjective results, the example of improving the quality of the region of interest is illustrated.
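BD-rate figures such as those quoted in this abstract are commonly obtained with the Bjontegaard method: fit log-rate as a cubic polynomial of quality for each codec, then average the gap between the two fits over the shared quality range. The following is a minimal sketch of that general idea, assuming NumPy is available. The function name `bd_rate` and the sample numbers are hypothetical, and this is not the reference implementation from the JPEG AI common test conditions.

```python
import numpy as np


def bd_rate(rate_anchor, quality_anchor, rate_test, quality_test):
    """Average rate difference (in percent) of the test codec versus the
    anchor at equal quality. Negative values mean the test codec needs
    fewer bits. Each curve needs at least four rate/quality points."""
    log_ra = np.log(np.asarray(rate_anchor, dtype=float))
    log_rt = np.log(np.asarray(rate_test, dtype=float))
    qa = np.asarray(quality_anchor, dtype=float)
    qt = np.asarray(quality_test, dtype=float)

    # Fit log-rate as a cubic polynomial of the quality metric.
    poly_a = np.polyfit(qa, log_ra, 3)
    poly_t = np.polyfit(qt, log_rt, 3)

    # Integrate both fits over the overlapping quality interval.
    lo = max(qa.min(), qt.min())
    hi = min(qa.max(), qt.max())
    int_a = np.polyval(np.polyint(poly_a), hi) - np.polyval(np.polyint(poly_a), lo)
    int_t = np.polyval(np.polyint(poly_t), hi) - np.polyval(np.polyint(poly_t), lo)

    # Mean log-rate difference, converted back to a percentage.
    avg_log_diff = (int_t - int_a) / (hi - lo)
    return (np.exp(avg_log_diff) - 1.0) * 100.0


# Made-up example: the test codec uses 10% fewer bits at every quality point.
anchor_rates = [0.10, 0.25, 0.50, 1.00]   # bpp
qualities = [30.0, 33.0, 36.0, 39.0]      # e.g. PSNR in dB
test_rates = [0.9 * r for r in anchor_rates]
print(bd_rate(anchor_rates, qualities, test_rates, qualities))
```

To report a single number across several metrics, as the abstract describes, one would call such a function once per metric and take the mean of the results. Note that sign conventions differ: a "gain" in the abstract corresponds to a negative value in this sketch.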

End-to-end learned Lossy Dynamic Point Cloud Attribute Compression

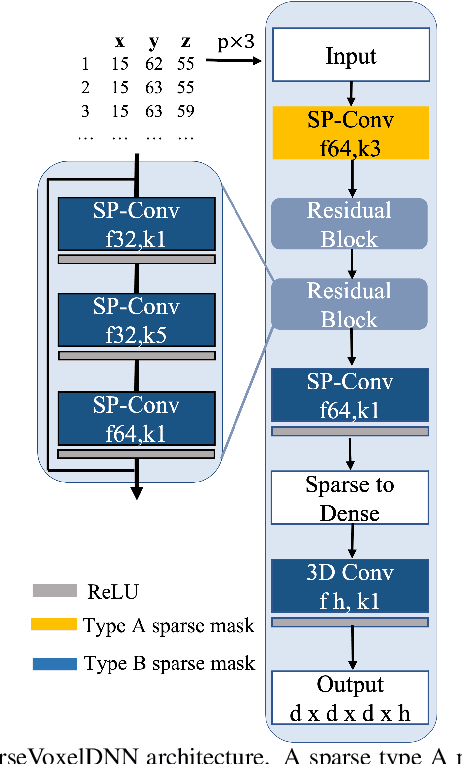

Aug 20, 2024

Abstract: Recent advancements in point cloud compression have primarily emphasized geometry compression, while comparatively fewer efforts have been dedicated to attribute compression. This study introduces an end-to-end learned dynamic lossy attribute coding approach, utilizing an efficient high-dimensional convolution to capture extensive inter-point dependencies. This enables the efficient projection of attribute features into latent variables. Subsequently, we employ a context model that leverages the previous latent space in conjunction with an auto-regressive context model for encoding the latent tensor into a bitstream. Evaluation of our method on widely utilized point cloud datasets from MPEG and Microsoft demonstrates its superior performance compared to the Region-Adaptive Hierarchical Transform method, the core attribute compression module of MPEG Geometry Point Cloud Compression, with an average Bjontegaard Delta-rate saving of 38.1% while ensuring low-complexity encoding and decoding.

Bit Rate Matching Algorithm Optimization in JPEG-AI Verification Model

Feb 27, 2024

Abstract: Research on neural network (NN) based image compression has shown superior performance compared to classical compression frameworks. Unlike the hand-engineered transforms in the classical frameworks, NN-based models learn non-linear transforms that provide more compact bit representations, and they achieve faster coding speed on parallel devices than their classical counterparts. Those properties attracted the attention of both scientific and industrial communities, resulting in the standardization activity JPEG-AI. The verification model for the standardization process of JPEG-AI is already in development and has surpassed the advanced VVC intra codec. To generate reconstructed images with the desired bits per pixel and assess the BD-rate performance of both the JPEG-AI verification model and VVC intra, bit rate matching is employed. However, the current state of the JPEG-AI verification model experiences significant slowdowns during bit rate matching, resulting in suboptimal performance due to an unsuitable model. The proposed methodology offers a gradual algorithmic optimization for matching bit rates, resulting in a fourfold acceleration and over 1% improvement in BD-rate at the base operation point. At the high operation point, the acceleration increases up to sixfold.
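Bit rate matching, as described in this abstract, means finding an encoder setting whose output lands on a desired bits-per-pixel value. A generic way to do this is bisection over a quality parameter. The sketch below illustrates that generic idea only and is not the optimized algorithm the paper proposes. It assumes the rate grows monotonically with the parameter; `encode_bpp`, `match_bit_rate`, and the toy rate model are hypothetical names introduced for illustration.

```python
from typing import Callable, Tuple


def match_bit_rate(
    encode_bpp: Callable[[float], float],
    target_bpp: float,
    q_low: float = 0.0,
    q_high: float = 1.0,
    tolerance: float = 0.01,
    max_iters: int = 20,
) -> Tuple[float, float]:
    """Bisection search for a quality parameter q whose rate is within
    a relative `tolerance` of `target_bpp`.

    `encode_bpp(q)` is a hypothetical callable that runs one encode and
    returns the resulting bits per pixel; it is assumed to be
    monotonically increasing in q on [q_low, q_high].
    Returns the best (q, bpp) pair seen.
    """
    if max_iters < 1:
        raise ValueError("max_iters must be at least 1")

    best_q, best_bpp = q_low, float("inf")
    for _ in range(max_iters):
        q = 0.5 * (q_low + q_high)
        bpp = encode_bpp(q)  # each call stands for one full encode

        # Remember the candidate closest to the target so far.
        if abs(bpp - target_bpp) < abs(best_bpp - target_bpp):
            best_q, best_bpp = q, bpp

        if abs(bpp - target_bpp) <= tolerance * target_bpp:
            break
        if bpp < target_bpp:
            q_low = q   # rate too low: raise the quality parameter
        else:
            q_high = q  # rate too high: lower the quality parameter
    return best_q, best_bpp


# Toy stand-in for a codec: rate rises smoothly with q (made-up model).
def toy_rate_model(q: float) -> float:
    return 2.0 * q * q


q, bpp = match_bit_rate(toy_rate_model, target_bpp=0.75)
print(q, bpp)
```

Every loop iteration costs one encode, which is why the number of search steps dominates the run time of bit rate matching and why reducing it yields the kind of speed-up the abstract reports.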

Bit Distribution Study and Implementation of Spatial Quality Map in the JPEG-AI Standardization

Feb 27, 2024

Abstract: Currently, there is a high demand for neural network-based image compression codecs. These codecs employ non-linear transforms to create compact bit representations and facilitate faster coding speeds on devices compared to the hand-crafted transforms used in classical frameworks. The scientific and industrial communities are highly interested in these properties, leading to the standardization effort of JPEG-AI. The JPEG-AI verification model has been released and is currently under development for standardization. Utilizing neural networks, it can outperform the classic codec VVC intra by over 10% BD-rate at the base operation point. Researchers attribute this success to the flexible bit distribution in the spatial domain, in contrast to VVC intra's anchor, which is generated with a constant quality point. However, our study reveals that VVC intra displays a more adaptable bit distribution structure through the implementation of various block sizes. As a result of our observations, we have proposed a spatial bit allocation method to optimize the JPEG-AI verification model's bit distribution and enhance the visual quality. Furthermore, by applying the VVC bit distribution strategy, the objective performance of the JPEG-AI verification model can be further improved, resulting in a maximum gain of 0.45 dB in PSNR-Y.

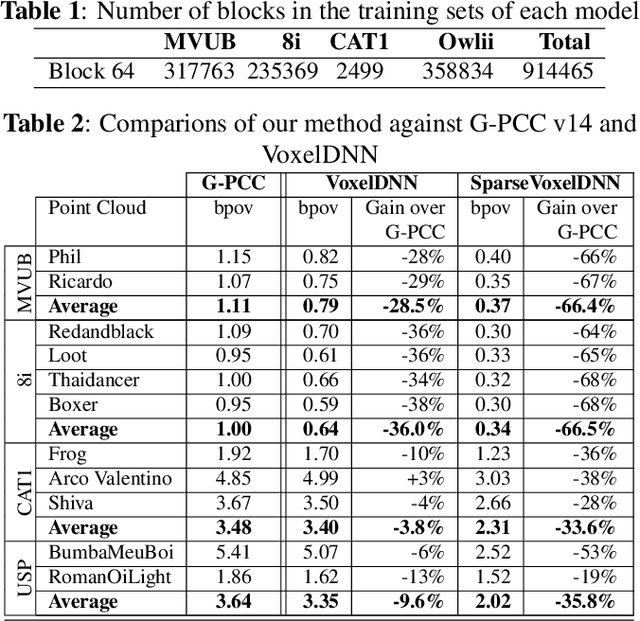

Deep probabilistic model for lossless scalable point cloud attribute compression

Mar 11, 2023

Abstract: In recent years, several point cloud geometry compression methods that utilize advanced deep learning techniques have been proposed, but there is limited work on attribute compression, especially lossless compression. In this work, we build an end-to-end multiscale point cloud attribute coding method (MNeT) that progressively projects the attributes onto multiscale latent spaces. The multiscale architecture provides an accurate context for the attribute probability modeling and thus minimizes the coding bitrate with a single network prediction. In addition, our method allows scalable coding, in which lower-quality versions can be easily extracted from the losslessly compressed bitstream. We validate our method on a set of point clouds from MVUB and MPEG and show that our method outperforms recently proposed methods and is on par with the latest G-PCC version 14. Moreover, our coding time is substantially faster than that of G-PCC.

Learning-Based Conditional Image Coder Using Color Separation

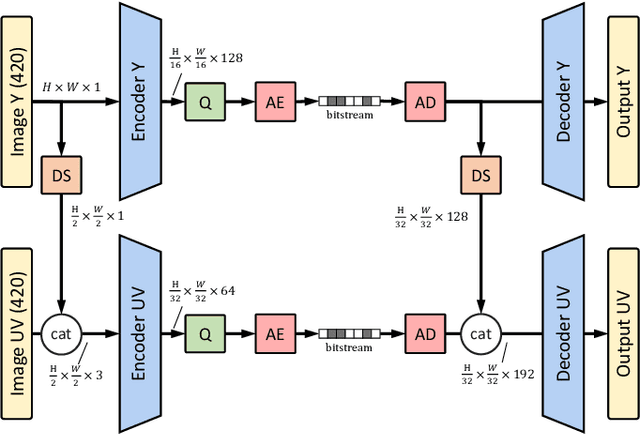

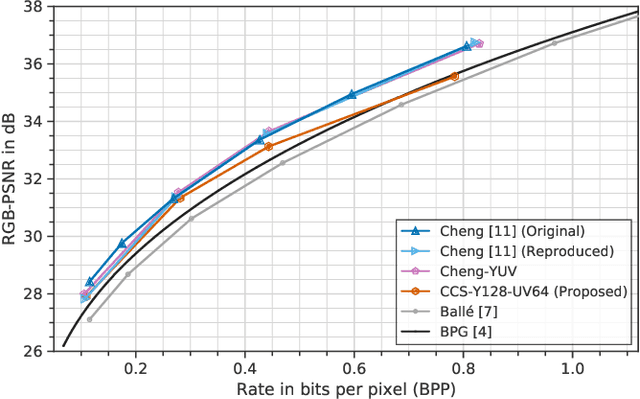

Dec 12, 2022

Abstract: Recently, image compression codecs based on neural networks (NN) have outperformed state-of-the-art classic ones such as BPG, an image format based on HEVC intra. However, the typical NN codec has high complexity, and it has limited options for parallel data processing. In this work, we propose a conditional separation principle that aims to improve parallelization and lower the computational requirements of an NN codec. We present a Conditional Color Separation (CCS) codec which follows this principle. The color components of an image are split into primary and non-primary ones. Each component is processed separately by jointly trained networks. Our approach allows parallel processing of each component, flexibility to select different channel numbers, and an overall complexity reduction. The CCS codec uses over 40% less memory, has 2x faster encoding and 22% faster decoding speed, with only 4% BD-rate loss in RGB PSNR compared to our baseline model over BPG.

Learning-based Lossless Point Cloud Geometry Coding using Sparse Representations

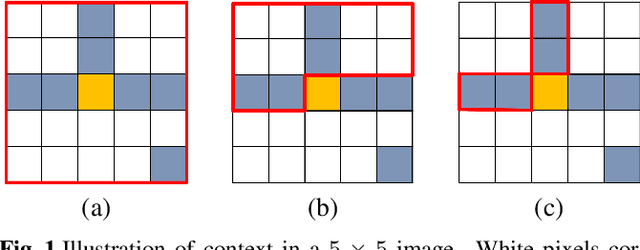

Apr 11, 2022

Abstract: Most point cloud compression methods operate in the voxel or octree domain, which is not the original representation of point clouds. Those representations either remove the geometric information or require high computational power for processing. In this paper, we propose a context-based lossless point cloud geometry compression that directly processes the point representation. Operating on a point representation allows us to preserve geometry correlation between points and thus to obtain an accurate context model while significantly reducing the computational cost. Specifically, our method uses a sparse convolution neural network to estimate the voxel occupancy sequentially from the x,y,z input data. Experimental results show that our method outperforms the state-of-the-art geometry compression standard from MPEG with average rate savings of 52% on a diverse set of point clouds from four different datasets.

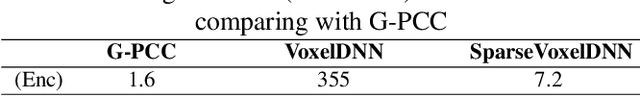

Compressive Online Robust Principal Component Analysis with Optical Flow for Video Foreground-Background Separation

Oct 25, 2017

Abstract: In the context of online Robust Principal Component Analysis (RPCA) for video foreground-background separation, we propose a compressive online RPCA with optical flow that recursively separates a sequence of frames into sparse (foreground) and low-rank (background) components. Our method considers a small set of measurements taken per data vector (frame), which is different from conventional batch RPCA, which processes all the data directly. The proposed method also incorporates multiple prior information, namely previous foreground and background frames, to improve the separation and then updates the prior information for the next frame. Moreover, the foreground prior frames are improved by estimating motion between the previous foreground frames using optical flow and compensating for that motion to achieve a higher-quality foreground prior. The proposed method is applied to online video foreground and background separation from compressive measurements. The visual and quantitative results show that our method outperforms the existing methods.

Incorporating Prior Information in Compressive Online Robust Principal Component Analysis

May 27, 2017

Abstract: We consider an online version of robust Principal Component Analysis (PCA), which arises naturally in time-varying source separations such as video foreground-background separation. This paper proposes a compressive online robust PCA with prior information for recursively separating a sequence of frames into sparse and low-rank components from a small set of measurements. In contrast to conventional batch-based PCA, which processes all the frames directly, the proposed method processes measurements taken from each frame. Moreover, this method can efficiently incorporate multiple prior information, namely previous reconstructed frames, to improve the separation and thereafter update the prior information for the next frame. We utilize multiple prior information by solving $n\text{-}\ell_{1}$ minimization for incorporating the previous sparse components and using incremental singular value decomposition ($\mathrm{SVD}$) for exploiting the previous low-rank components. We also establish theoretical bounds on the number of measurements required to guarantee successful separation under assumptions of static or slowly-changing low-rank components. Using numerical experiments, we evaluate our bounds and the performance of the proposed algorithm. In addition, we apply the proposed algorithm to online video foreground and background separation from compressive measurements. Experimental results show that the proposed method outperforms the existing methods.

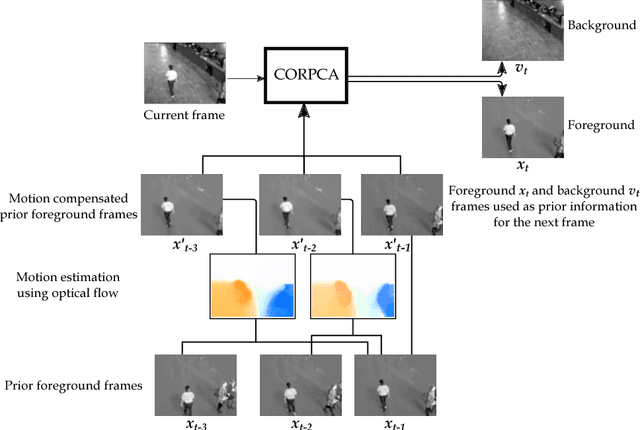

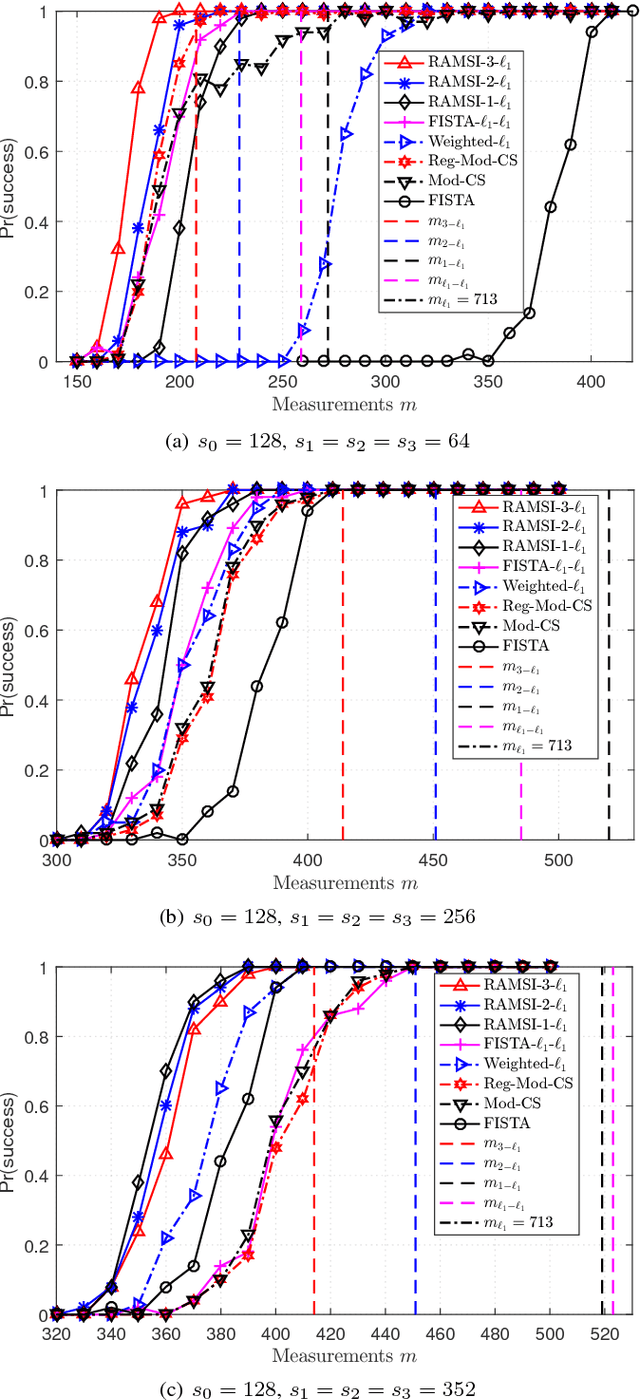

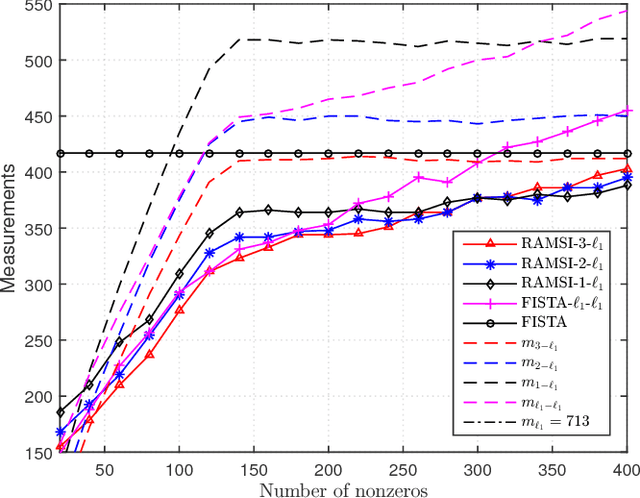

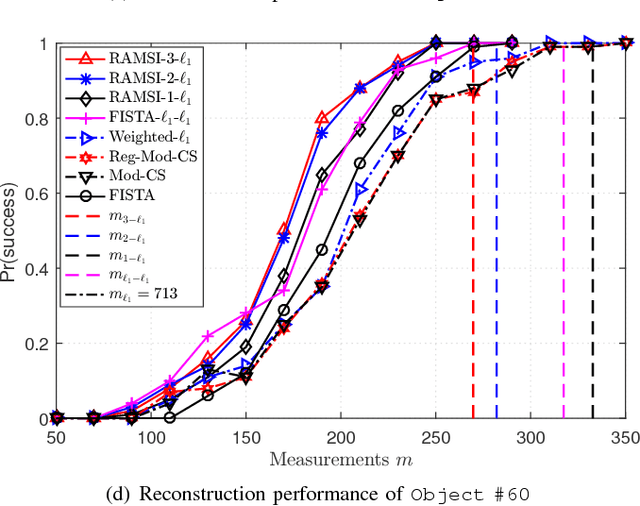

Measurement Bounds for Sparse Signal Reconstruction with Multiple Side Information

Jan 18, 2017

Abstract: In the context of compressed sensing (CS), this paper considers the problem of reconstructing sparse signals with the aid of other given correlated sources as multiple side information. To address this problem, we theoretically study a generic weighted $n$-$\ell_{1}$ minimization framework and propose a reconstruction algorithm that leverages multiple side information signals (RAMSI). The proposed RAMSI algorithm adaptively computes optimal weights among the side information signals at every reconstruction iteration. In addition, we establish theoretical bounds on the number of measurements that are required to successfully reconstruct the sparse source by using weighted $n$-$\ell_{1}$ minimization. The analysis of the established bounds reveals that weighted $n$-$\ell_{1}$ minimization can achieve sharper bounds and significant performance improvements compared to classical CS. We experimentally evaluate the proposed RAMSI algorithm and the established bounds using synthetic sparse signals as well as correlated feature histograms extracted from a multiview image database for object recognition. The obtained results show clearly that the proposed algorithm outperforms state-of-the-art algorithms (including classical CS, $\ell_1\text{-}\ell_1$ minimization, Modified-CS, regularized Modified-CS, and weighted $\ell_1$ minimization) in terms of both the theoretical bounds and the practical performance.
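For orientation, a weighted $n$-$\ell_{1}$ objective of the kind this abstract refers to can be written roughly as follows. This is a sketch under assumed notation that does not appear in the abstract: $x$ is the sparse signal, $y = \Phi x$ the compressive measurements, $z_1, \dots, z_J$ the side information signals with $z_0 = 0$, $W_j$ diagonal weight matrices, $\beta_j \ge 0$ the weights across side information signals, and $\lambda > 0$ a regularization parameter.

$$
\min_{x}\; \frac{1}{2}\,\lVert \Phi x - y \rVert_2^2 \;+\; \lambda \sum_{j=0}^{J} \beta_j \,\lVert W_j (x - z_j) \rVert_1, \qquad z_0 = 0
$$

With $J = 0$ and $W_0$ set to the identity, this reduces to the classical $\ell_1$-regularized CS problem, and with $J = 1$ and identity weights it becomes an $\ell_1\text{-}\ell_1$ form. The adaptive step described in the abstract corresponds to updating the weights at each iteration.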
