Learning-based Lossless Point Cloud Geometry Coding using Sparse Representations

Apr 11, 2022

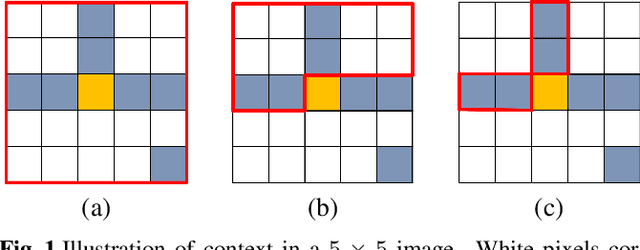

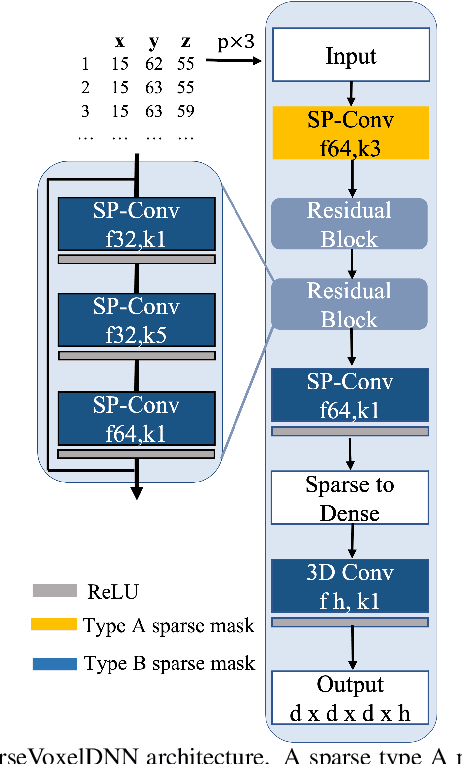

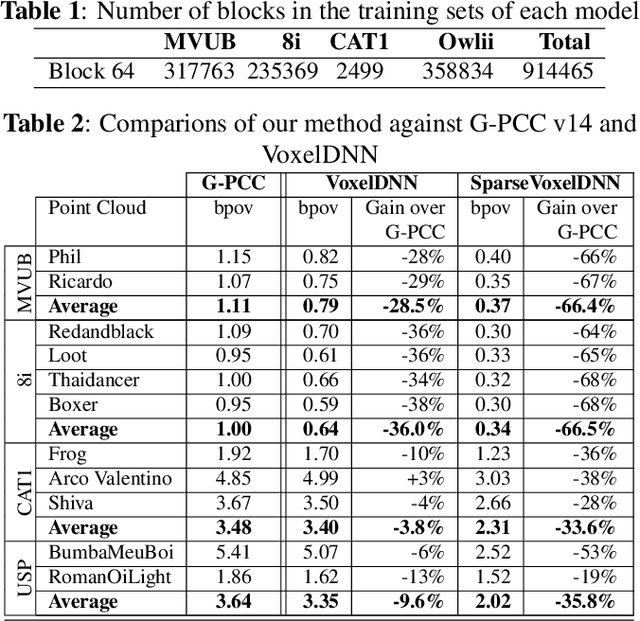

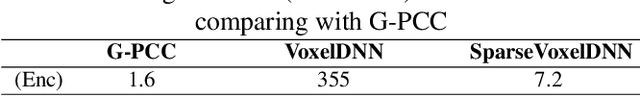

Most point cloud compression methods operate in the voxel or octree domain, which is not the original representation of point clouds. Those representations either remove geometric information or require high computational power for processing. In this paper, we propose a context-based lossless point cloud geometry compression method that directly processes the point representation. Operating on a point representation allows us to preserve the geometric correlation between points and thus to obtain an accurate context model while significantly reducing the computational cost. Specifically, our method uses a sparse convolutional neural network to estimate the voxel occupancy sequentially from the x, y, z input data. Experimental results show that our method outperforms the state-of-the-art geometry compression standard from MPEG, with average rate savings of 52% on a diverse set of point clouds from four different datasets.
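
Why a more accurate context model lowers the rate can be illustrated with a minimal sketch. It is not the paper's network: the function name `ideal_code_length_bits` and the probability values are hypothetical, chosen only for illustration. The sketch assumes an ideal entropy coder that spends about -log2(p) bits on a symbol predicted with probability p.

```python
import math

def ideal_code_length_bits(occupancy, probabilities, eps=1e-12):
    """Ideal entropy-coder cost, in bits, of a binary occupancy sequence.

    occupancy:     list of 0/1 symbols (1 = voxel occupied)
    probabilities: predicted P(occupied) for each symbol, from any context model
    """
    total = 0.0
    for bit, p_occupied in zip(occupancy, probabilities):
        # Probability the model assigned to the symbol that actually occurred.
        p = p_occupied if bit == 1 else 1.0 - p_occupied
        # Clamp to avoid log(0) when a model is overconfident and wrong.
        p = min(max(p, eps), 1.0 - eps)
        total += -math.log2(p)
    return total

occupancy = [1, 0, 0, 1]

# No context information: every voxel is equally likely to be occupied or empty.
uniform = [0.5, 0.5, 0.5, 0.5]

# Hypothetical outputs of a context model that leans toward the true symbols.
informed = [0.9, 0.1, 0.2, 0.8]

bits_uniform = ideal_code_length_bits(occupancy, uniform)
bits_informed = ideal_code_length_bits(occupancy, informed)

print(f"uniform model:  {bits_uniform:.3f} bits")
print(f"informed model: {bits_informed:.3f} bits")
```

The uniform model costs one bit per voxel, while the informed model costs less because it assigns higher probability to the symbols that actually occur. In the method described above, a sparse convolutional neural network plays the role of the predictor that supplies these probabilities.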
