Amirreza Razmjoo

CCDP: Composition of Conditional Diffusion Policies with Guided Sampling

Mar 19, 2025

Abstract:Imitation Learning offers a promising approach to learn directly from data without requiring explicit models, simulations, or detailed task definitions. During inference, actions are sampled from the learned distribution and executed on the robot. However, sampled actions may fail for various reasons, and simply repeating the sampling step until a successful action is obtained can be inefficient. In this work, we propose an enhanced sampling strategy that refines the sampling distribution to avoid previously unsuccessful actions. We demonstrate that by solely utilizing data from successful demonstrations, our method can infer recovery actions without the need for additional exploratory behavior or a high-level controller. Furthermore, we leverage the concept of diffusion model decomposition to break down the primary problem (which may require long-horizon history to manage failures) into multiple smaller, more manageable sub-problems in learning, data collection, and inference, thereby enabling the system to adapt to variable failure counts. Our approach yields a low-level controller that dynamically adjusts its sampling space to improve efficiency when prior samples fall short. We validate our method across several tasks, including door opening with unknown directions, object manipulation, and button-searching scenarios, demonstrating that our approach outperforms traditional baselines.

A Smooth Analytical Formulation of Collision Detection and Rigid Body Dynamics With Contact

Mar 14, 2025

Abstract:Generating intelligent robot behavior in contact-rich settings is a research problem where zeroth-order methods currently prevail. A major contributor to the success of such methods is their robustness in the face of non-smooth and discontinuous optimization landscapes that are characteristic of contact interactions, yet zeroth-order methods remain computationally inefficient. It is therefore desirable to develop methods for perception, planning and control in contact-rich settings that can achieve further efficiency by making use of first- and second-order information (i.e., gradients and Hessians). To facilitate this, we present a joint formulation of collision detection and contact modelling which, compared to existing differentiable simulation approaches, provides the following benefits: i) it results in forward and inverse dynamics that are entirely analytical (i.e., do not require solving optimization or root-finding problems with iterative methods) and smooth (i.e., twice differentiable), ii) it supports arbitrary collision geometries without needing a convex decomposition, and iii) its runtime is independent of the number of contacts. Through simulation experiments, we demonstrate the validity of the proposed formulation as a "physics for inference" that can facilitate future development of efficient methods to generate intelligent contact-rich behavior.

Sampling-Based Constrained Motion Planning with Products of Experts

Dec 23, 2024

Abstract:We present a novel approach to enhance the performance of sampling-based Model Predictive Control (MPC) in constrained optimization by leveraging products of experts. Our methodology divides the main problem into two components: one focused on optimality and the other on feasibility. By combining the solutions from each component, represented as distributions, we apply products of experts to implement a project-then-sample strategy. In this strategy, the optimality distribution is projected into the feasible area, allowing for more efficient sampling. This approach contrasts with the traditional sample-then-project method, leading to more diverse exploration and reducing the accumulation of samples on the boundaries. We demonstrate an effective implementation of this principle using a tensor train-based distribution model, which is characterized by its non-parametric nature, ease of combination with other distributions at the task level, and straightforward sampling technique. We adapt existing tensor train models to suit this purpose and validate the efficacy of our approach through experiments in various tasks, including obstacle avoidance, non-prehensile manipulation, and tasks involving staying on manifolds. Our experimental results demonstrate that the proposed method consistently outperforms known baselines, providing strong empirical support for its effectiveness.
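The project-then-sample idea in this abstract can be illustrated with a toy sketch. It is not the paper's tensor train model: it assumes a hypothetical 1D action grid, a Gaussian-shaped optimality expert, and a uniform feasibility expert over `x <= 1.0`, and simply multiplies the two discretized distributions before sampling. All names and numbers are made up for illustration.

```python
import numpy as np

def product_of_experts(p_opt, p_feas):
    """Multiply two discrete distributions elementwise and renormalize."""
    unnorm = p_opt * p_feas
    total = unnorm.sum()
    if total <= 0.0:
        raise ValueError("experts share no common support")
    return unnorm / total

# Hypothetical 1D action grid (assumption, not from the paper).
grid = np.linspace(-2.0, 2.0, 401)

# Optimality expert: Gaussian-shaped mass around a hypothetical optimum at 1.2.
p_opt = np.exp(-0.5 * ((grid - 1.2) / 0.5) ** 2)
p_opt /= p_opt.sum()

# Feasibility expert: uniform over the hypothetical feasible set x <= 1.0.
feasible = grid <= 1.0
p_feas = feasible.astype(float)
p_feas /= p_feas.sum()

rng = np.random.default_rng(0)

# Project-then-sample: combine the experts first, then draw samples.
p_joint = product_of_experts(p_opt, p_feas)
samples = rng.choice(grid, size=1000, p=p_joint)

# Sample-then-project: draw from the optimality expert, then clip to the bound.
clipped = np.minimum(rng.choice(grid, size=1000, p=p_opt), 1.0)

# Fraction of samples sitting exactly on the feasibility boundary.
boundary = grid[feasible].max()
frac_poe = np.isclose(samples, boundary).mean()
frac_clip = np.isclose(clipped, 1.0).mean()
```

In this toy setting every product-of-experts sample is feasible by construction, while clipping moves every infeasible draw onto the bound at 1.0, which is the boundary accumulation the abstract refers to.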

Robust Contact-rich Manipulation through Implicit Motor Adaptation

Dec 16, 2024

Abstract:Contact-rich manipulation plays a vital role in daily human activities, yet uncertain physical parameters pose significant challenges for both model-based and model-free planning and control. A promising approach to address this challenge is to develop policies robust to a wide range of parameters. Domain adaptation and domain randomization are commonly used to achieve such policies but often compromise generalization to new instances or perform conservatively due to neglecting instance-specific information. *Explicit motor adaptation* addresses these issues by estimating system parameters online and then retrieving the parameter-conditioned policy from a parameter-augmented base policy. However, it typically relies on precise system identification or additional high-quality policy retraining, presenting substantial challenges for contact-rich tasks with diverse physical parameters. In this work, we propose *implicit motor adaptation*, which leverages tensor factorization as an implicit representation of the base policy. Given a roughly estimated parameter distribution, the parameter-conditioned policy can be efficiently derived by exploiting the separable structure of tensor cores from the base policy. This framework eliminates the need for precise system estimation and policy retraining while preserving optimal behavior and strong generalization. We provide a theoretical analysis validating this method, supported by numerical evaluations on three contact-rich manipulation primitives. Both simulation and real-world experiments demonstrate its ability to generate robust policies for diverse instances.

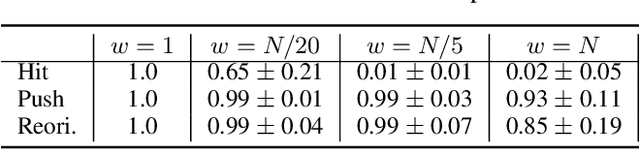

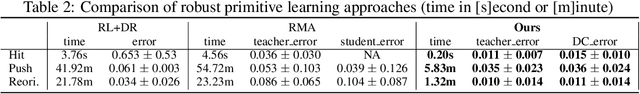

Robust Manipulation Primitive Learning via Domain Contraction

Oct 15, 2024

Abstract:Contact-rich manipulation plays an important role in human daily activities, but uncertain parameters pose significant challenges for robots to achieve comparable performance through planning and control. To address this issue, domain adaptation and domain randomization have been proposed for robust policy learning. However, they either lose the generalization ability across diverse instances or perform conservatively due to neglecting instance-specific information. In this paper, we propose a bi-level approach to learn robust manipulation primitives, including parameter-augmented policy learning using multiple models, and parameter-conditioned policy retrieval through domain contraction. This approach unifies domain randomization and domain adaptation, providing optimal behaviors while keeping generalization ability. We validate the proposed method on three contact-rich manipulation primitives: hitting, pushing, and reorientation. The experimental results showcase the superior performance of our approach in generating robust policies for instances with diverse physical parameters.

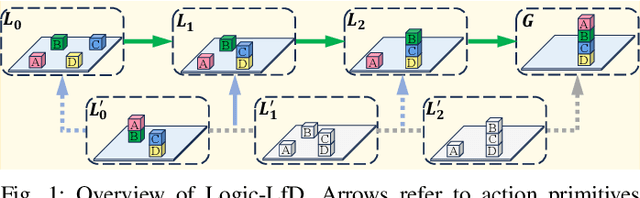

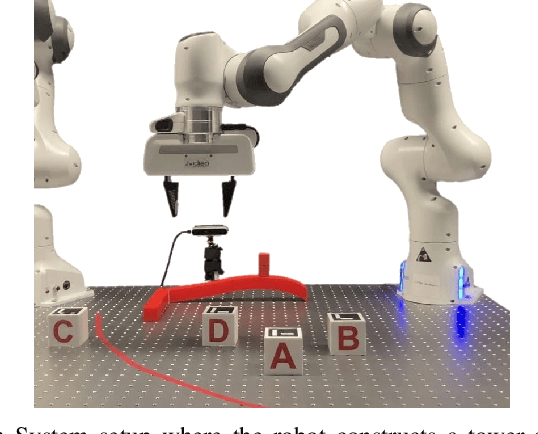

Learn2Decompose: Learning Problem Decomposition for Efficient Task and Motion Planning

Aug 13, 2024

Abstract:We focus on designing an efficient Task and Motion Planning (TAMP) approach for long-horizon manipulation tasks involving multi-step manipulation of multiple objects. TAMP solvers typically require exponentially longer planning time as the planning horizon and the number of environmental objects increase. To address this challenge, we first propose Learn2Decompose, a Learning from Demonstrations (LfD) approach that learns embedding task rules from demonstrations and decomposes the long-horizon problem into several subproblems. These subproblems require planning over shorter horizons with fewer objects and can be solved in parallel. We then design a parallelized hierarchical TAMP framework that concurrently solves the subproblems and concatenates the resulting subplans for the target task, significantly improving the planning efficiency of classical TAMP solvers. The effectiveness of our proposed methods is validated in both simulation and real-world experiments.

Configuration Space Distance Fields for Manipulation Planning

Jun 03, 2024

Abstract:The signed distance field (SDF) is a popular implicit shape representation in robotics, providing geometric information about objects and obstacles in a form that can easily be combined with control, optimization and learning techniques. Most often, SDFs are used to represent distances in task space, which corresponds to the familiar notion of distances that we perceive in our 3D world. However, SDFs can mathematically be used in other spaces, including robot configuration spaces. For a robot manipulator, this configuration space typically corresponds to the joint angles for each articulation of the robot. While it is customary in robot planning to express which portions of the configuration space are free from collision with obstacles, it is less common to think of this information as a distance field in the configuration space. In this paper, we demonstrate the potential of considering SDFs in the robot configuration space for optimization, which we call the configuration space distance field (CDF). Similarly to the use of SDF in task space, CDF provides an efficient joint angle distance query and direct access to the derivatives. Most approaches split the overall computation with one part in task space followed by one part in configuration space. Instead, CDF allows the implicit structure to be leveraged by control, optimization, and learning problems in a unified manner. In particular, we propose an efficient algorithm to compute and fuse CDFs that can be generalized to arbitrary scenes. A corresponding neural CDF representation using multilayer perceptrons is also presented to obtain a compact and continuous representation while improving computation efficiency. We demonstrate the effectiveness of CDF with planar obstacle avoidance examples and with a 7-axis Franka robot in inverse kinematics and manipulation planning tasks.
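As background for the abstract above, the sketch below shows what a distance query with direct access to derivatives looks like for an ordinary task-space SDF. It is not the CDF algorithm from the paper: it assumes a single hypothetical circular obstacle in the plane, and the function name `circle_sdf` is made up for illustration.

```python
import numpy as np

def circle_sdf(points, center, radius):
    """Signed distance and gradient for a circular obstacle in the plane.

    The distance is negative inside the circle, zero on its boundary and
    positive outside. The gradient is the unit vector pointing away from
    the center (set to zero at the center itself, where it is undefined).
    """
    diff = np.atleast_2d(points) - np.asarray(center, dtype=float)
    dist = np.linalg.norm(diff, axis=1)
    sdf = dist - radius
    # Avoid dividing by zero for a query exactly at the center.
    safe = np.where(dist > 0.0, dist, 1.0)
    grad = diff / safe[:, None]
    return sdf, grad

# Hypothetical queries against a unit circle at the origin.
queries = np.array([[2.0, 0.0], [0.0, 0.0], [0.0, 0.5]])
sdf, grad = circle_sdf(queries, center=[0.0, 0.0], radius=1.0)
```

A configuration-space field would answer the same kind of query with joint angles as input instead of Cartesian points, which is the shift in viewpoint the abstract describes.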

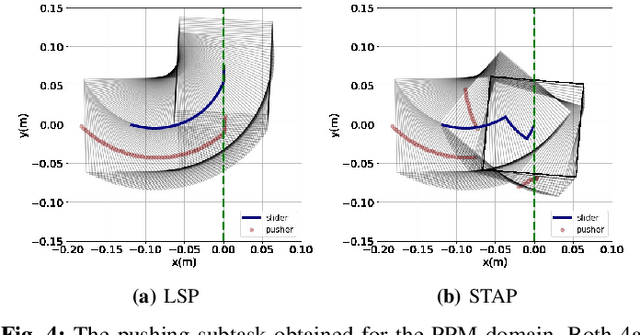

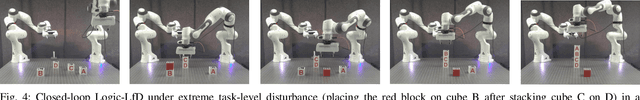

Logic-Skill Programming: An Optimization-based Approach to Sequential Skill Planning

May 07, 2024

Abstract:Recent advances in robot skill learning have unlocked the potential to construct task-agnostic skill libraries, facilitating the seamless sequencing of multiple simple manipulation primitives (a.k.a. skills) to tackle significantly more complex tasks. Nevertheless, determining the optimal sequence for independently learned skills remains an open problem, particularly when the objective is given solely in terms of the final geometric configuration rather than a symbolic goal. To address this challenge, we propose Logic-Skill Programming (LSP), an optimization-based approach that sequences independently learned skills to solve long-horizon tasks. We formulate a first-order extension of a mathematical program to optimize the overall cumulative reward of all skills within a plan, abstracted by the sum of value functions. To solve such programs, we use Tensor Train to construct the value function space, and rely on alternations between symbolic search and skill value optimization to find the appropriate skill skeleton and optimal subgoal sequence. Experimental results indicate that the obtained value functions provide a superior approximation of cumulative rewards compared to state-of-the-art Reinforcement Learning methods. Furthermore, we validate LSP in three manipulation domains, encompassing both prehensile and non-prehensile primitives. The results demonstrate its capability to identify the optimal solution over the full logic and geometric path. The real-robot experiments showcase the effectiveness of our approach to cope with contact uncertainty and external disturbances in the real world.

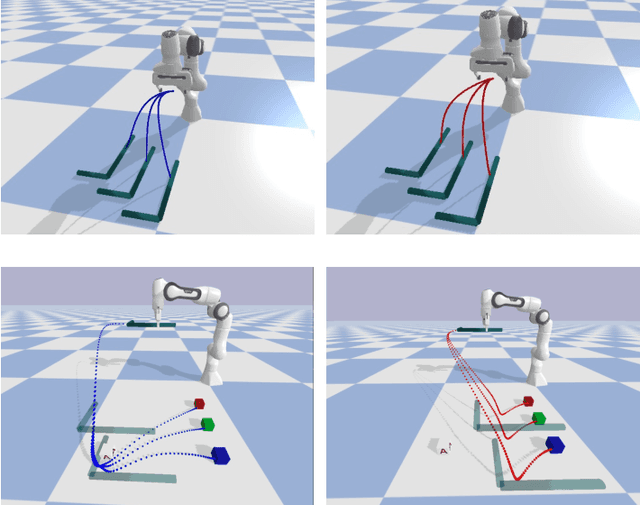

Logic Dynamic Movement Primitives for Long-horizon Manipulation Tasks in Dynamic Environments

Apr 24, 2024

Abstract:Learning from Demonstration (LfD) stands as an efficient framework for imparting human-like skills to robots. Nevertheless, designing an LfD framework capable of seamlessly imitating, generalizing, and reacting to disturbances for long-horizon manipulation tasks in dynamic environments remains a challenge. To tackle this challenge, we present Logic Dynamic Movement Primitives (Logic-DMP), which combines Task and Motion Planning (TAMP) with an optimal control formulation of DMP, allowing us to incorporate motion-level via-point specifications and to handle task-level variations or disturbances in dynamic environments. We conduct a comparative analysis of our proposed approach against several baselines, evaluating its generalization ability and reactivity across three long-horizon manipulation tasks. Our experiment demonstrates the fast generalization and reactivity of Logic-DMP for handling task-level variants and disturbances in long-horizon manipulation tasks.

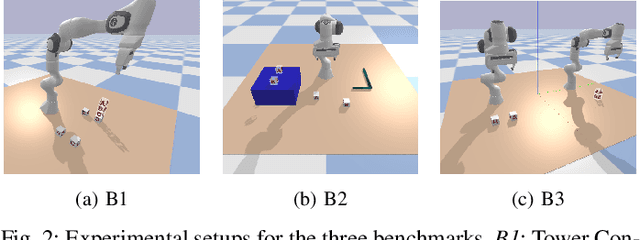

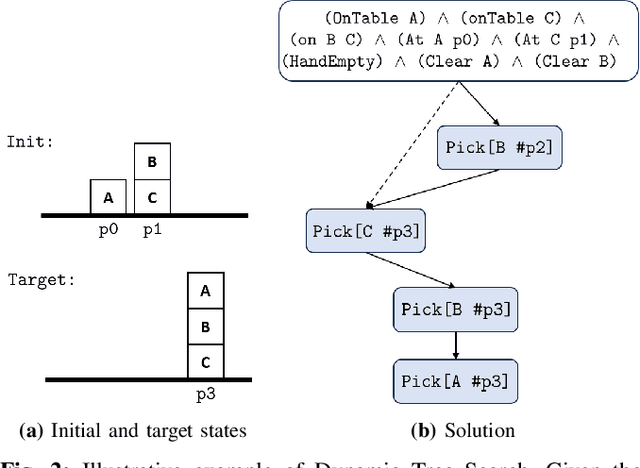

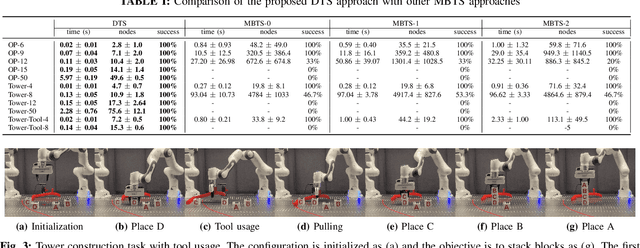

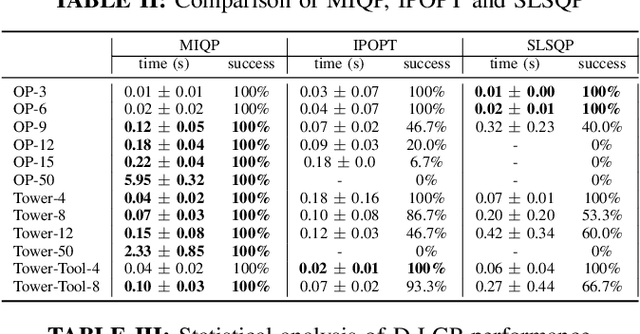

D-LGP: Dynamic Logic-Geometric Program for Combined Task and Motion Planning

Dec 05, 2023

Abstract:Many real-world sequential manipulation tasks involve a combination of discrete symbolic search and continuous motion planning, collectively known as combined task and motion planning (TAMP). However, prevailing methods often struggle with the computational burden and intricate combinatorial challenges stemming from the multitude of action skeletons. To address this, we propose Dynamic Logic-Geometric Program (D-LGP), a novel approach integrating Dynamic Tree Search and global optimization for efficient hybrid planning. Through empirical evaluation on three benchmarks, we demonstrate the efficacy of our approach, showcasing superior performance in comparison to state-of-the-art techniques. We validate our approach through simulation and demonstrate its capability for online replanning under uncertainty and external disturbances in the real world.
