Alexei Efros

Vision Transformers Don't Need Trained Registers

Jun 09, 2025

Abstract: We investigate the mechanism underlying a previously identified phenomenon in Vision Transformers -- the emergence of high-norm tokens that lead to noisy attention maps. We observe that in multiple models (e.g., CLIP, DINOv2), a sparse set of neurons is responsible for concentrating high-norm activations on outlier tokens, leading to irregular attention patterns and degrading downstream visual processing. While the existing solution for removing these outliers involves retraining models from scratch with additional learned register tokens, we use our findings to create a training-free approach to mitigate these artifacts. By shifting the high-norm activations from our discovered register neurons into an additional untrained token, we can mimic the effect of register tokens on a model already trained without registers. We demonstrate that our method produces cleaner attention and feature maps, enhances performance over base models across multiple downstream visual tasks, and achieves results comparable to models explicitly trained with register tokens. We then extend test-time registers to off-the-shelf vision-language models to improve their interpretability. Our results suggest that test-time registers effectively take on the role of register tokens at test time, offering a training-free solution for any pre-trained model released without them.
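To make the "shifting" idea concrete, here is a toy sketch in plain Python. It is not the authors' implementation: the function name `shift_to_register`, the list-of-lists activation layout, and the choice to move the per-neuron maximum are all assumptions made for illustration, and the sketch says nothing about how register neurons are discovered or at which layer the intervention is applied.

```python
def shift_to_register(acts, register_neurons):
    """Toy illustration (hypothetical helper, not from the paper's code).

    acts: list of token rows, each a list of neuron activations.
    register_neurons: indices of neurons assumed to carry the outlier activations.
    Returns a new activation table with one extra token appended at the end.
    """
    n_neurons = len(acts[0])
    out = [row[:] for row in acts]      # copy so the input is left untouched
    register = [0.0] * n_neurons        # the extra, untrained token starts at zero
    for j in register_neurons:
        # Move the largest activation of neuron j into the extra token...
        register[j] = max(row[j] for row in acts)
        # ...and silence that neuron on every original token.
        for row in out:
            row[j] = 0.0
    out.append(register)
    return out


# Two image tokens, three neurons; neuron 1 fires strongly on the first token.
acts = [[0.1, 50.0, 0.2],
        [0.3, 0.4, 0.1]]
print(shift_to_register(acts, register_neurons=[1]))
```

In this toy setup the outlier value ends up only on the appended token, which is the behavior the abstract attributes to test-time registers.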

GAN-Supervised Dense Visual Alignment

Dec 09, 2021

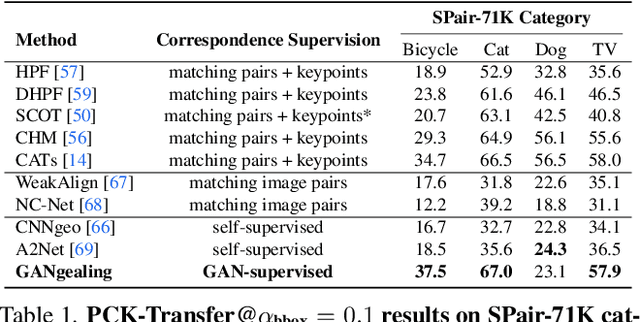

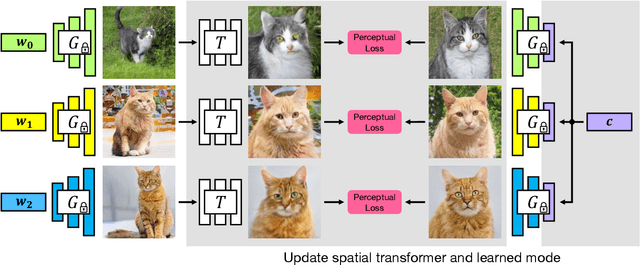

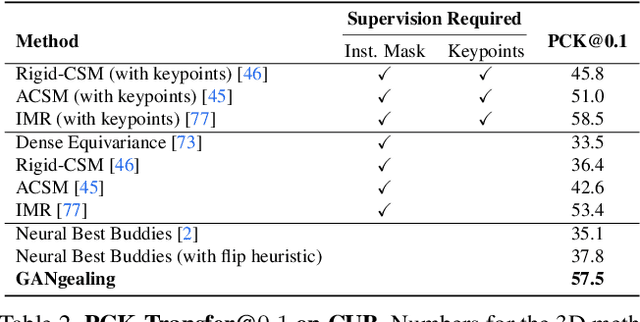

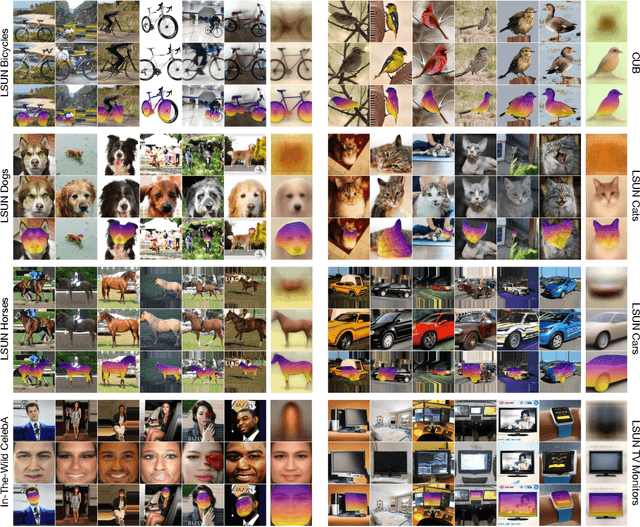

Abstract: We propose GAN-Supervised Learning, a framework for learning discriminative models and their GAN-generated training data jointly end-to-end. We apply our framework to the dense visual alignment problem. Inspired by the classic Congealing method, our GANgealing algorithm trains a Spatial Transformer to map random samples from a GAN trained on unaligned data to a common, jointly-learned target mode. We show results on eight datasets, all of which demonstrate our method successfully aligns complex data and discovers dense correspondences. GANgealing significantly outperforms past self-supervised correspondence algorithms and performs on par with (and sometimes exceeds) state-of-the-art supervised correspondence algorithms on several datasets -- without making use of any correspondence supervision or data augmentation and despite being trained exclusively on GAN-generated data. For precise correspondence, we improve upon state-of-the-art supervised methods by as much as $3\times$. We show applications of our method for augmented reality, image editing and automated pre-processing of image datasets for downstream GAN training.

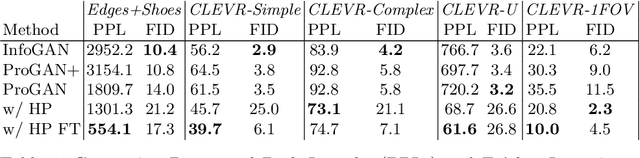

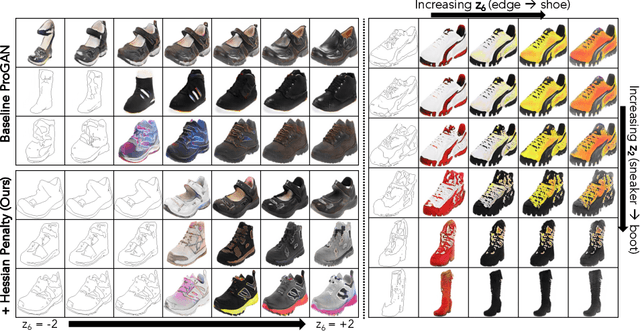

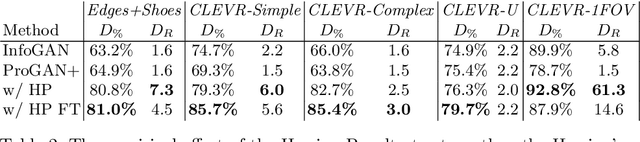

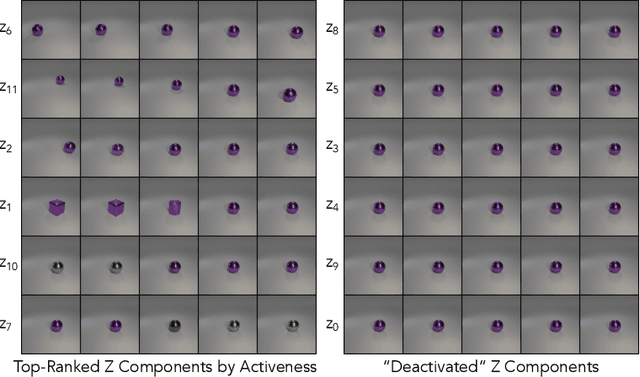

The Hessian Penalty: A Weak Prior for Unsupervised Disentanglement

Aug 24, 2020

Abstract: Existing disentanglement methods for deep generative models rely on hand-picked priors and complex encoder-based architectures. In this paper, we propose the Hessian Penalty, a simple regularization term that encourages the Hessian of a generative model with respect to its input to be diagonal. We introduce a model-agnostic, unbiased stochastic approximation of this term based on Hutchinson's estimator to compute it efficiently during training. Our method can be applied to a wide range of deep generators with just a few lines of code. We show that training with the Hessian Penalty often causes axis-aligned disentanglement to emerge in latent space when applied to ProGAN on several datasets. Additionally, we use our regularization term to identify interpretable directions in BigGAN's latent space in an unsupervised fashion. Finally, we provide empirical evidence that the Hessian Penalty encourages substantial shrinkage when applied to over-parameterized latent spaces.
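A minimal sketch of how such a stochastic estimate can work, in plain Python. It relies on a standard fact: for a symmetric Hessian $H$ and a random sign (Rademacher) vector $v$, the variance of $v^\top H v$ equals twice the sum of squared off-diagonal entries of $H$, so it vanishes exactly when $H$ is diagonal. The sketch assumes a scalar-valued function `G`, approximates $v^\top H v$ with a central finite difference, and uses the hypothetical names `hessian_penalty` and `quadratic`; the step size `eps` and sample count `k` are illustrative choices, not values taken from the paper.

```python
import random
import statistics


def hessian_penalty(G, z, k=64, eps=0.1, rng=None):
    """Estimate Var_v[v^T H v] for a scalar function G at point z (a list of floats)."""
    rng = rng or random.Random(0)
    g0 = G(z)
    second_derivs = []
    for _ in range(k):
        # Rademacher direction: each coordinate is -1 or +1 with equal probability.
        v = [rng.choice((-1.0, 1.0)) for _ in z]
        z_plus = [zi + eps * vi for zi, vi in zip(z, v)]
        z_minus = [zi - eps * vi for zi, vi in zip(z, v)]
        # Central finite difference for the second directional derivative v^T H v.
        second_derivs.append((G(z_plus) - 2.0 * g0 + G(z_minus)) / eps ** 2)
    # Unbiased sample variance over the k random directions.
    return statistics.variance(second_derivs)


def quadratic(A):
    """Return G(z) = 0.5 * z^T A z, whose Hessian is A when A is symmetric."""
    def G(z):
        n = len(z)
        return 0.5 * sum(A[i][j] * z[i] * z[j] for i in range(n) for j in range(n))
    return G


diag = quadratic([[1.0, 0.0], [0.0, 2.0]])    # diagonal Hessian
mixed = quadratic([[1.0, 0.5], [0.5, 2.0]])   # off-diagonal entries of 0.5
z = [0.3, -0.7]
print(hessian_penalty(diag, z))            # close to zero
print(hessian_penalty(mixed, z, k=2000))   # close to 2 * (0.5**2 + 0.5**2) = 1
```

Because only function evaluations are needed, a regularizer of this shape does not depend on the generator's architecture, which is consistent with the abstract's "model-agnostic" description.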

Morphology-Agnostic Visual Robotic Control

Dec 31, 2019

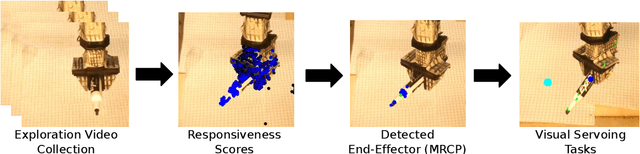

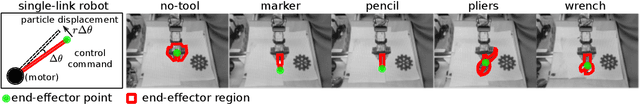

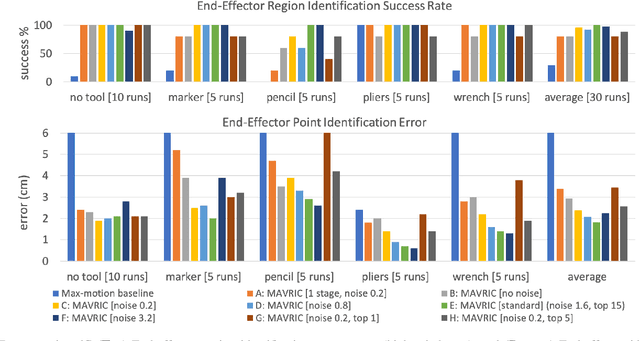

Abstract: Existing approaches for visuomotor robotic control typically require characterizing the robot in advance by calibrating the camera or performing system identification. We propose MAVRIC, an approach that works with minimal prior knowledge of the robot's morphology, and requires only a camera view containing the robot and its environment and an unknown control interface. MAVRIC revolves around a mutual information-based method for self-recognition, which discovers visual "control points" on the robot body within a few seconds of exploratory interaction; these control points are in turn used for visual servoing. MAVRIC can control robots with imprecise actuation, no proprioceptive feedback, unknown morphologies including novel tools, unknown camera poses, and even unsteady handheld cameras. We demonstrate our method on visually guided 3D point reaching, trajectory following, and robot-to-robot imitation.

A Computer Vision Pipeline for Automated Determination of Cardiac Structure and Function and Detection of Disease by Two-Dimensional Echocardiography

Jan 12, 2018

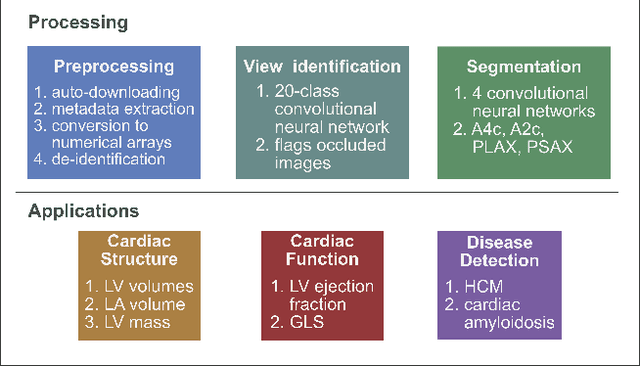

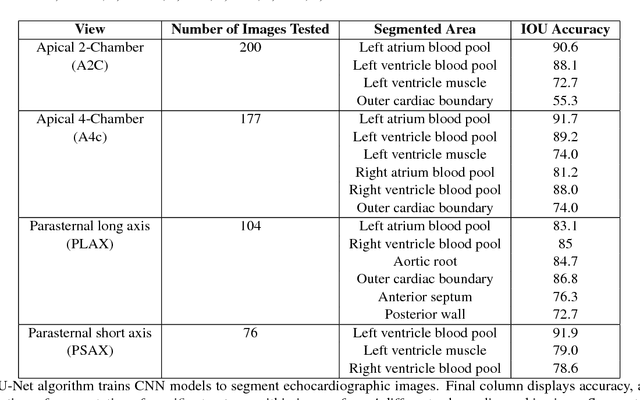

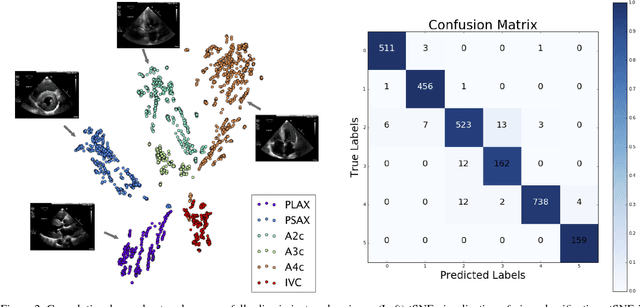

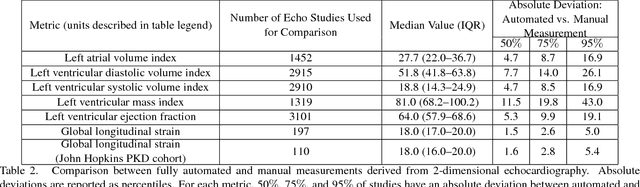

Abstract: Automated cardiac image interpretation has the potential to transform clinical practice in multiple ways including enabling low-cost serial assessment of cardiac function in the primary care and rural setting. We hypothesized that advances in computer vision could enable building a fully automated, scalable analysis pipeline for echocardiogram (echo) interpretation. Our approach entailed: 1) preprocessing; 2) convolutional neural networks (CNN) for view identification, image segmentation, and phasing of the cardiac cycle; 3) quantification of chamber volumes and left ventricular mass; 4) particle tracking to compute longitudinal strain; and 5) targeted disease detection. CNNs accurately identified views (e.g. 99% for apical 4-chamber) and segmented individual cardiac chambers. Cardiac structure measurements agreed with study report values (e.g. mean absolute deviations (MAD) of 7.7 mL/kg/m2 for left ventricular diastolic volume index, 2918 studies). We computed automated ejection fraction and longitudinal strain measurements (within 2 cohorts), which agreed with commercial software-derived values [for ejection fraction, MAD=5.3%, N=3101 studies; for strain, MAD=1.5% (n=197) and 1.6% (n=110)], and demonstrated applicability to serial monitoring of breast cancer patients for trastuzumab cardiotoxicity. Overall, we found that, compared to manual measurements, automated measurements had superior performance across seven internal consistency metrics with an average increase in the Spearman correlation coefficient of 0.05 (p=0.02). Finally, we developed disease detection algorithms for hypertrophic cardiomyopathy and cardiac amyloidosis, with C-statistics of 0.93 and 0.84, respectively. Our pipeline lays the groundwork for using automated interpretation to support point-of-care handheld cardiac ultrasound and large-scale analysis of the millions of echos archived within healthcare systems.
