Alessandro Antonucci

Automatic Prompt Optimization for Dataset-Level Feature Discovery

Jan 20, 2026

Abstract:Feature extraction from unstructured text is a critical step in many downstream classification pipelines, yet current approaches largely rely on hand-crafted prompts or fixed feature schemas. We formulate feature discovery as a dataset-level prompt optimization problem: given a labelled text corpus, the goal is to induce a global set of interpretable and discriminative feature definitions whose realizations optimize a downstream supervised learning objective. To this end, we propose a multi-agent prompt optimization framework in which language-model agents jointly propose feature definitions, extract feature values, and evaluate feature quality using dataset-level performance and interpretability feedback. Instruction prompts are iteratively refined based on this structured feedback, enabling optimization over prompts that induce shared feature sets rather than per-example predictions. This formulation departs from prior prompt optimization methods that rely on per-sample supervision and provides a principled mechanism for automatic feature discovery from unstructured text.

On the Correlation between Individual Fairness and Predictive Accuracy in Probabilistic Models

Sep 16, 2025

Abstract:We investigate individual fairness in generative probabilistic classifiers by analysing the robustness of posterior inferences to perturbations in private features. Building on established results in robustness analysis, we hypothesise a correlation between robustness and predictive accuracy: specifically, instances exhibiting greater robustness are more likely to be classified accurately. We empirically assess this hypothesis using a benchmark of fourteen datasets with fairness concerns, employing Bayesian networks as the underlying generative models. To address the computational complexity associated with robustness analysis over multiple private features with Bayesian networks, we reformulate the problem as a most probable explanation task in an auxiliary Markov random field. Our experiments confirm the hypothesis about the correlation, suggesting novel directions to mitigate the traditional trade-off between fairness and accuracy.

Explaining Strategic Decisions in Multi-Agent Reinforcement Learning for Aerial Combat Tactics

May 16, 2025

Abstract:Artificial intelligence (AI) is reshaping strategic planning, with Multi-Agent Reinforcement Learning (MARL) enabling coordination among autonomous agents in complex scenarios. However, its practical deployment in sensitive military contexts is constrained by the lack of explainability, which is an essential factor for trust, safety, and alignment with human strategies. This work reviews and assesses current advances in explainability methods for MARL with a focus on simulated air combat scenarios. We proceed by adapting various explainability techniques to different aerial combat scenarios to gain explanatory insights about the model behavior. By linking AI-generated tactics with human-understandable reasoning, we emphasize the need for transparency to ensure reliable deployment and meaningful human-machine interaction. By illuminating the crucial importance of explainability in advancing MARL for operational defense, our work supports not only strategic planning but also the training of military personnel with insightful and comprehensible analyses.

Enhancing Aerial Combat Tactics through Hierarchical Multi-Agent Reinforcement Learning

May 13, 2025

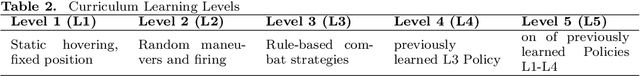

Abstract:This work presents a Hierarchical Multi-Agent Reinforcement Learning framework for analyzing simulated air combat scenarios involving heterogeneous agents. The objective is to identify effective Courses of Action that lead to mission success within preset simulations, thereby enabling the exploration of real-world defense scenarios at low cost and in a safe-to-fail setting. Applying deep Reinforcement Learning in this context poses specific challenges, such as complex flight dynamics, the exponential size of the state and action spaces in multi-agent systems, and the capability to integrate real-time control of individual units with look-ahead planning. To address these challenges, the decision-making process is split into two levels of abstraction: low-level policies control individual units, while a high-level commander policy issues macro commands aligned with the overall mission targets. This hierarchical structure facilitates the training process by exploiting policy symmetries of individual agents and by separating control from command tasks. The low-level policies are trained for individual combat control in a curriculum of increasing complexity. The high-level commander is then trained on mission targets given pre-trained control policies. The empirical validation confirms the advantages of the proposed framework.

Intelligent tutoring systems by Bayesian nets with noisy gates

Sep 09, 2024

Abstract:Directed graphical models such as Bayesian nets are often used to implement intelligent tutoring systems able to interact in real-time with learners in a purely automatic way. When coping with such models, keeping a bound on the number of parameters might be important for multiple reasons. First, as these models are typically based on expert knowledge, a huge number of parameters to elicit might discourage practitioners from adopting them. Moreover, the number of model parameters affects the complexity of the inferences, while a fast computation of the queries is needed for real-time feedback. We advocate logical gates with uncertainty for a compact parametrization of the conditional probability tables in the underlying Bayesian net used by tutoring systems. We discuss the semantics of the model parameters to elicit and the assumptions required to apply such an approach in this domain. We also derive a dedicated inference scheme to speed up computations.
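
To make the idea of a compact parametrization concrete, the following is a minimal sketch of the textbook noisy-OR gate, one common example of a logical gate with uncertainty. It is not taken from the paper: the function names, the `strengths` list and the `leak` parameter are illustrative assumptions. The point is only that a node with n binary parents needs n numbers (plus an optional leak) rather than a table with 2^n rows.

```python
from itertools import product


def noisy_or(strengths, active, leak=0.0):
    """P(Y=1 | parents) under the standard noisy-OR model.

    strengths[i] is the (assumed) probability that parent i alone
    switches the child on; active[i] is 1 if parent i is on.
    leak is the probability the child is on with no active parent.
    """
    p_all_fail = 1.0 - leak
    for s, on in zip(strengths, active):
        if on:
            p_all_fail *= 1.0 - s  # each active cause fails independently
    return 1.0 - p_all_fail


def expand_cpt(strengths, leak=0.0):
    """Expand the compact parameters into the full conditional table."""
    n = len(strengths)
    return {cfg: noisy_or(strengths, cfg, leak)
            for cfg in product((0, 1), repeat=n)}


if __name__ == "__main__":
    strengths = [0.8, 0.5, 0.3]  # hypothetical elicited values
    cpt = expand_cpt(strengths)
    print(len(strengths), "parameters expand to", len(cpt), "table rows")
```

Eliciting three numbers from an expert is far more practical than eliciting eight rows, and the gap grows exponentially with the number of parents.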

Rubric-based Learner Modelling via Noisy Gates Bayesian Networks for Computational Thinking Skills Assessment

Aug 02, 2024

Abstract:In modern and personalised education, there is a growing interest in developing learners' competencies and accurately assessing them. In a previous work, we proposed a procedure for deriving a learner model for automatic skill assessment from a task-specific competence rubric, thus simplifying the implementation of automated assessment tools. The previous approach, however, suffered from two main limitations: (i) the ordering between competencies defined by the assessment rubric was only indirectly modelled; (ii) supplementary skills, not under assessment but necessary for accomplishing the task, were not included in the model. In this work, we address issue (i) by introducing dummy observed nodes, strictly enforcing the skills ordering without changing the network's structure. In contrast, for point (ii), we design a network with two layers of gates, one performing disjunctive operations by noisy-OR gates and the other conjunctive operations through logical ANDs. Such changes improve the model outcomes' coherence and the modelling tool's flexibility without compromising the model's compact parametrisation, interpretability and simple experts' elicitation. We used this approach to develop a learner model for Computational Thinking (CT) skills assessment. The CT-cube skills assessment framework and the Cross Array Task (CAT) are used to exemplify it and demonstrate its feasibility.

Safe Road-Crossing by Autonomous Wheelchairs: a Novel Dataset and its Experimental Evaluation

Mar 13, 2024

Abstract:Safe road-crossing by self-driving vehicles is a crucial problem to address in smart cities. In this paper, we introduce a multi-sensor fusion approach to support road-crossing decisions in a system composed of an autonomous wheelchair and a flying drone featuring a robust sensory system made of diverse and redundant components. To that aim, we designed an analytical danger function based on explainable physical conditions evaluated by single sensors, including those using machine learning and artificial vision. As a proof-of-concept, we provide an experimental evaluation in a laboratory environment, showing the advantages of using multiple sensors, which can improve decision accuracy and effectively support safety assessment. We made the dataset available to the scientific community for further experimentation. The work has been developed in the context of a European project named REXASI-PRO, which aims to develop trustworthy artificial intelligence for social navigation of people with reduced mobility.

A Note on Bayesian Networks with Latent Root Variables

Feb 26, 2024

Abstract:We characterise the likelihood function computed from a Bayesian network with latent variables as root nodes. We show that the marginal distribution over the remaining, manifest, variables also factorises as a Bayesian network, which we call empirical. A dataset of observations of the manifest variables allows us to quantify the parameters of the empirical Bayesian net. We prove that (i) the likelihood of such a dataset from the original Bayesian network is dominated by the global maximum of the likelihood from the empirical one; and that (ii) such a maximum is attained if and only if the parameters of the Bayesian network are consistent with those of the empirical model.
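
The two statements can be written compactly as follows. The notation is an assumption introduced here, not the paper's: U_k denotes the latent roots, X_i the manifest variables, pa(X_i) the parents of X_i (latent or manifest), θ the parameters of the original network, θ' those of the empirical network, and D the dataset of manifest observations. The first line is the usual marginalisation over independent roots; the second restates claim (i).

```latex
\[
P_\theta(x) \;=\; \sum_{u} \,\prod_{k} P_\theta(u_k)\, \prod_{i} P_\theta\bigl(x_i \mid \mathrm{pa}(X_i)\bigr)
\]
\[
L_{\mathcal{D}}(\theta) \;\le\; \max_{\theta'} L'_{\mathcal{D}}(\theta')
\]
```

By claim (ii), the bound is attained exactly when θ is consistent with the parameters of the empirical model.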

Zero-shot Causal Graph Extrapolation from Text via LLMs

Dec 22, 2023

Abstract:We evaluate the ability of large language models (LLMs) to infer causal relations from natural language. Compared to traditional natural language processing and deep learning techniques, LLMs show competitive performance in a benchmark of pairwise relations without needing (explicit) training samples. This motivates us to extend our approach to extrapolating causal graphs through iterated pairwise queries. We perform a preliminary analysis on a benchmark of biomedical abstracts with ground-truth causal graphs validated by experts. The results are promising and support the adoption of LLMs for such a crucial step in causal inference, especially in medical domains, where the amount of scientific text to analyse might be huge, and the causal statements are often implicit.
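
The step from pairwise relations to a graph can be sketched as a loop over all variable pairs. This is only an illustration of iterated pairwise querying in general, not the authors' pipeline: `query_pair` is a hypothetical callable standing in for an LLM call, the answer labels `"a->b"` and `"b->a"` are invented for this sketch, and the toy oracle simply looks answers up in a hard-coded set.

```python
from itertools import combinations


def extract_causal_graph(variables, query_pair):
    """Build a set of directed edges from one query per unordered pair.

    query_pair(a, b) is assumed to return "a->b", "b->a", or None
    (no causal relation found) for the pair it is given.
    """
    edges = set()
    for a, b in combinations(variables, 2):
        answer = query_pair(a, b)
        if answer == "a->b":
            edges.add((a, b))
        elif answer == "b->a":
            edges.add((b, a))
    return edges


# Toy stand-in for the language model: a fixed set of "true" edges.
_TOY_TRUTH = {("smoking", "tar"), ("tar", "cancer")}


def toy_oracle(a, b):
    if (a, b) in _TOY_TRUTH:
        return "a->b"
    if (b, a) in _TOY_TRUTH:
        return "b->a"
    return None


if __name__ == "__main__":
    graph = extract_causal_graph(["smoking", "tar", "cancer"], toy_oracle)
    print(sorted(graph))
```

The number of queries grows quadratically with the number of variables, so in practice the cost of each model call matters.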

Humanising robot-assisted navigation

Oct 17, 2023

Abstract:Robot-assisted navigation is a perfect example of a class of applications requiring flexible control approaches. When the human is reliable, the robot should concede space to their initiative. When the human makes inappropriate choices, the robot controller should kick in, guiding them towards safer paths. Shared authority control is a way to achieve this behaviour by deciding online how much of the authority should be given to the human and how much should be retained by the robot. An open problem is how to evaluate the appropriateness of the human's choices. One possible way is to consider the deviation from an ideal path computed by the robot. This choice is certainly safe and efficient, but it emphasises the importance of the robot's decision and relegates the human to a secondary role. In this paper, we propose a different paradigm: a human's behaviour is correct if, at every time, it bears a close resemblance to what other humans do in similar situations. This idea is implemented through the combination of machine learning and adaptive control. The map of the environment is decomposed into a grid. In each cell, we classify the possible motions that the human executes. We use a neural network classifier to classify the current motion, and the probability score is used as a hyperparameter in the control to vary the amount of intervention. The experiments collected for the paper show the feasibility of the idea. A qualitative evaluation, done by surveying the users after they have tested the robot, shows that the participants preferred our control method over a state-of-the-art visco-elastic control.
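
One simple way to picture a probability score modulating the amount of intervention is a linear blend of the human and robot commands. This is a generic shared-authority sketch under that assumption, not the controller used in the paper: `blend_command`, the tuple command format and the name `p_human_ok` are all hypothetical.

```python
def blend_command(human_cmd, robot_cmd, p_human_ok):
    """Linearly blend two velocity commands.

    p_human_ok is an assumed classifier score in [0, 1] saying how
    closely the current human motion matches typical human behaviour.
    A score of 1 gives full authority to the human, 0 to the robot.
    """
    alpha = min(1.0, max(0.0, p_human_ok))  # clamp out-of-range scores
    return tuple(alpha * h + (1.0 - alpha) * r
                 for h, r in zip(human_cmd, robot_cmd))


if __name__ == "__main__":
    human = (1.0, 0.0)   # e.g. (forward speed, turn rate) from the user
    robot = (0.0, 1.0)   # e.g. the planner's suggestion
    print(blend_command(human, robot, 0.75))
```

With a high score the user's command passes through almost unchanged; as the score drops, the robot's suggestion dominates.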
