Aijun An

Adapt-As-You-Walk Through the Clouds: Training-Free Online Test-Time Adaptation of 3D Vision-Language Foundation Models

Nov 19, 2025

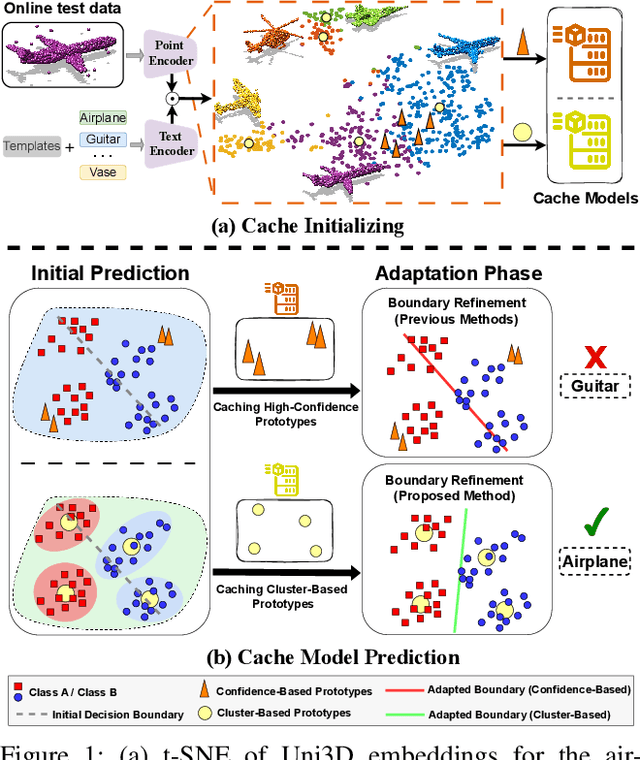

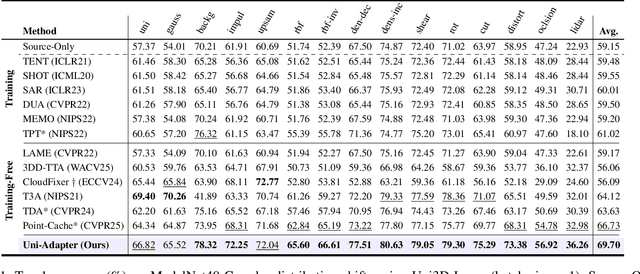

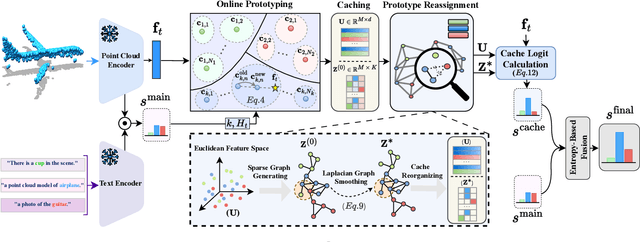

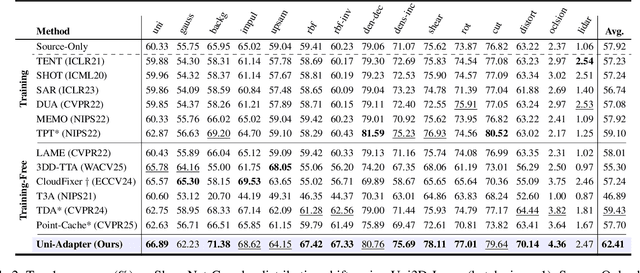

Abstract: 3D Vision-Language Foundation Models (VLFMs) have shown strong generalization and zero-shot recognition capabilities in open-world point cloud processing tasks. However, these models often underperform in practical scenarios where data are noisy, incomplete, or drawn from a different distribution than the training data. To address this, we propose Uni-Adapter, a novel training-free online test-time adaptation (TTA) strategy for 3D VLFMs based on dynamic prototype learning. We define a 3D cache to store class-specific cluster centers as prototypes, which are continuously updated to capture intra-class variability in heterogeneous data distributions. These dynamic prototypes serve as anchors for cache-based logit computation via similarity scoring. Simultaneously, a graph-based label smoothing module captures inter-prototype similarities to enforce label consistency among similar prototypes. Finally, we unify predictions from the original 3D VLFM and the refined 3D cache using entropy-weighted aggregation for reliable adaptation. Without retraining, Uni-Adapter effectively mitigates distribution shifts, achieving state-of-the-art performance on diverse 3D benchmarks over different 3D VLFMs, improving ModelNet-40C by 10.55%, ScanObjectNN-C by 8.26%, and ShapeNet-C by 4.49% over the source 3D VLFMs.
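The entropy-weighted aggregation step mentioned in the abstract can be illustrated with a minimal sketch. The abstract does not give the exact weighting formula, so the `exp(-entropy)` weights, the function names, and the toy logits below are all assumptions chosen for illustration; they are not taken from Uni-Adapter itself.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (in nats) of a probability vector."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def entropy_weighted_fusion(model_logits, cache_logits):
    """Fuse two logit vectors, trusting the lower-entropy source more.

    Assumption: each source is weighted by exp(-entropy), normalized so
    the two weights sum to 1. The paper may use a different weighting.
    """
    w_model = math.exp(-entropy(softmax(model_logits)))
    w_cache = math.exp(-entropy(softmax(cache_logits)))
    z = w_model + w_cache
    w_model, w_cache = w_model / z, w_cache / z
    fused = [w_model * a + w_cache * b
             for a, b in zip(model_logits, cache_logits)]
    return fused, (w_model, w_cache)


# Hypothetical logits: the model is unsure, the cache is confident.
model_logits = [1.0, 1.1, 0.9]
cache_logits = [0.2, 4.0, 0.1]
fused, weights = entropy_weighted_fusion(model_logits, cache_logits)
predicted_class = max(range(len(fused)), key=fused.__getitem__)
```

In this toy case the cache prediction has lower entropy, so it receives the larger weight and dominates the fused logits.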

Evolution of ReID: From Early Methods to LLM Integration

Jun 16, 2025

Abstract: Person re-identification (ReID) has evolved from handcrafted feature-based methods to deep learning approaches and, more recently, to models incorporating large language models (LLMs). Early methods struggled with variations in lighting, pose, and viewpoint, but deep learning addressed these issues by learning robust visual features. Building on this, LLMs now enable ReID systems to integrate semantic and contextual information through natural language. This survey traces that full evolution and offers one of the first comprehensive reviews of ReID approaches that leverage LLMs, where textual descriptions are used as privileged information to improve visual matching. A key contribution is the use of dynamic, identity-specific prompts generated by GPT-4o, which enhance the alignment between images and text in vision-language ReID systems. Experimental results show that these descriptions improve accuracy, especially in complex or ambiguous cases. To support further research, we release a large set of GPT-4o-generated descriptions for standard ReID datasets. By bridging computer vision and natural language processing, this survey offers a unified perspective on the field's development and outlines key future directions such as better prompt design, cross-modal transfer learning, and real-world adaptability.

Test-Time Adaptation of 3D Point Clouds via Denoising Diffusion Models

Nov 21, 2024

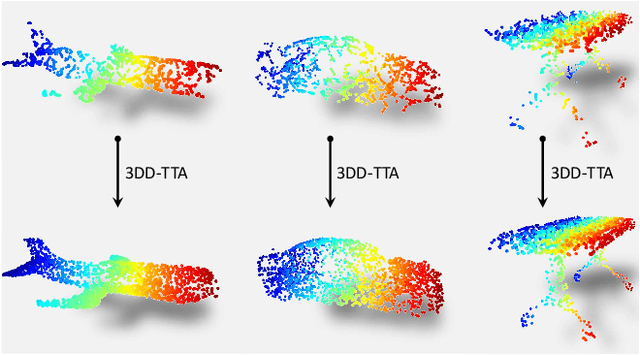

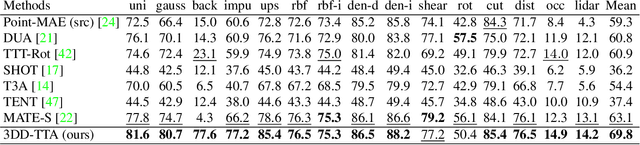

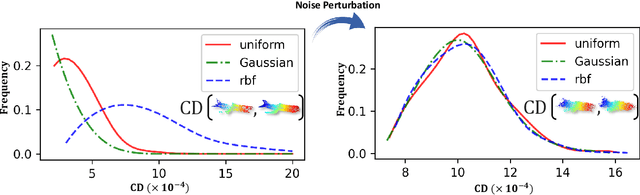

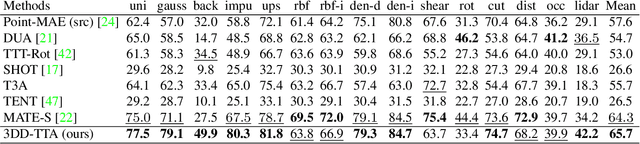

Abstract: Test-time adaptation (TTA) of 3D point clouds is crucial for mitigating discrepancies between training and testing samples in real-world scenarios, particularly when handling corrupted point clouds. LiDAR data, for instance, can be affected by sensor failures or environmental factors, causing domain gaps. Adapting models to these distribution shifts online is crucial, as training for every possible variation is impractical. Existing methods often focus on fine-tuning pre-trained models based on self-supervised learning or pseudo-labeling, which can lead to forgetting valuable source domain knowledge over time and reduce generalization on future tests. In this paper, we introduce a novel 3D test-time adaptation method, termed 3DD-TTA, which stands for 3D Denoising Diffusion Test-Time Adaptation. This method uses a diffusion strategy that adapts input point cloud samples to the source domain while keeping the source model parameters intact. The approach uses a Variational Autoencoder (VAE) to encode the corrupted point cloud into a shape latent and latent points. These latent points are corrupted with Gaussian noise and subjected to a denoising diffusion process. During this process, both the shape latent and latent points are updated to preserve fidelity, guiding the denoising toward generating consistent samples that align more closely with the source domain. We conduct extensive experiments on the ShapeNet dataset and investigate its generalizability on ModelNet40 and ScanObjectNN, achieving state-of-the-art results. The code has been released at https://github.com/hamidreza-dastmalchi/3DD-TTA.

Disease Outbreak Detection and Forecasting: A Review of Methods and Data Sources

Oct 21, 2024

Abstract: Infectious diseases occur when pathogens from other individuals or animals infect a person, resulting in harm to both individuals and society as a whole. The outbreak of such diseases can pose a significant threat to human health. However, early detection and tracking of these outbreaks have the potential to reduce the mortality impact. To address these threats, public health authorities have endeavored to establish comprehensive mechanisms for collecting disease data. Many countries have implemented infectious disease surveillance systems, with the detection of epidemics being a primary objective. The clinical healthcare system, local/state health agencies, federal agencies, academic/professional groups, and collaborating governmental entities all play pivotal roles within this system. Moreover, nowadays, search engines and social media platforms can serve as valuable tools for monitoring disease trends. The Internet and social media have become significant platforms where users share information about their preferences and relationships. This real-time information can be harnessed to gauge the influence of ideas and societal opinions, making it highly useful across various domains and research areas, such as marketing campaigns, financial predictions, and public health, among others. This article provides a review of the existing standard methods developed by researchers for detecting outbreaks using time series data. These methods leverage various data sources, including conventional data sources and social media data or Internet data sources. The review particularly concentrates on works published within the timeframe of 2015 to 2022.

A Survey on Graph Representation Learning Methods

Apr 04, 2022

Abstract: Graph representation learning has been a very active research area in recent years. The goal of graph representation learning is to generate graph representation vectors that capture the structure and features of large graphs accurately. This is especially important because the quality of the graph representation vectors will affect the performance of these vectors in downstream tasks such as node classification, link prediction and anomaly detection. Many techniques have been proposed for generating effective graph representation vectors. Two of the most prevalent categories of graph representation learning are graph embedding methods without using graph neural nets (GNN), which we denote as non-GNN based graph embedding methods, and graph neural nets (GNN) based methods. Non-GNN graph embedding methods are based on techniques such as random walks, temporal point processes and neural network learning methods. GNN-based methods, on the other hand, are the application of deep learning on graph data. In this survey, we provide an overview of these two categories and cover the current state-of-the-art methods for both static and dynamic graphs. Finally, we explore some open and ongoing research directions for future work.

Extending Isolation Forest for Anomaly Detection in Big Data via K-Means

Apr 27, 2021

Abstract: Industrial Information Technology (IT) infrastructures are often vulnerable to cyberattacks. To secure the computer systems in an industrial environment, it is necessary to build effective intrusion detection systems that monitor the cyber-physical systems (e.g., computer networks) in the industry for malicious activities. This paper aims to build such intrusion detection systems to protect computer networks from cyberattacks. More specifically, we propose a novel unsupervised machine learning approach that combines the K-Means algorithm with the Isolation Forest for anomaly detection in industrial big data scenarios. Since our objective is to build the intrusion detection system for the big data scenario in the industrial domain, we utilize the Apache Spark framework to implement our proposed model, which was trained on large network traffic data (about 123 million instances of network traffic) stored in Elasticsearch. Moreover, we evaluate our proposed model on live streaming data and find that our proposed system can be used for real-time anomaly detection in the industrial setup. In addition, we address different challenges that we faced while training our model on large datasets and explicitly describe how these issues were resolved. Based on our empirical evaluation in different use-cases for anomaly detection in real-world network traffic data, we observe that our proposed system is effective at detecting anomalies in big data scenarios. Finally, we evaluate our proposed model on several academic datasets to compare with other models and find that it provides performance comparable to other state-of-the-art approaches.

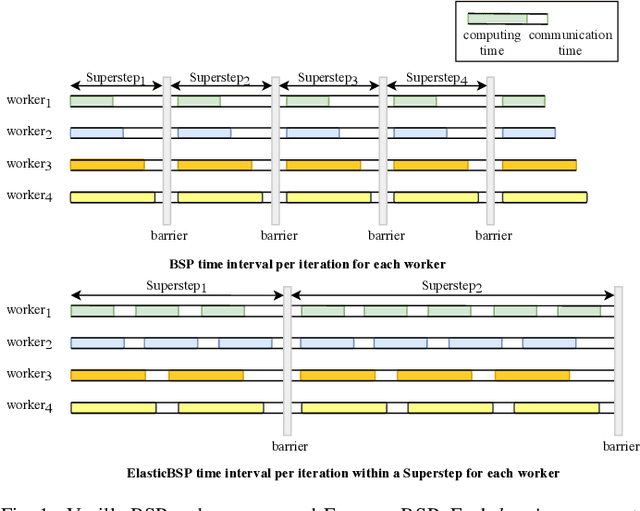

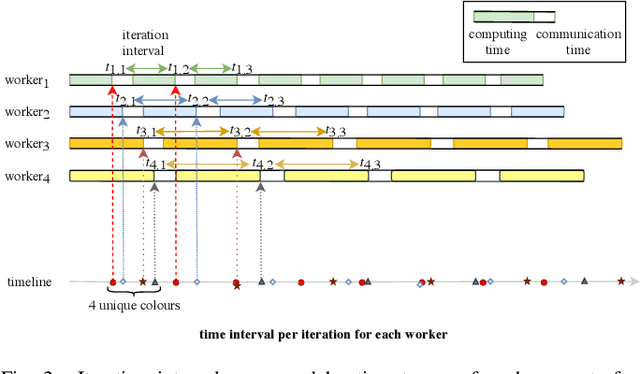

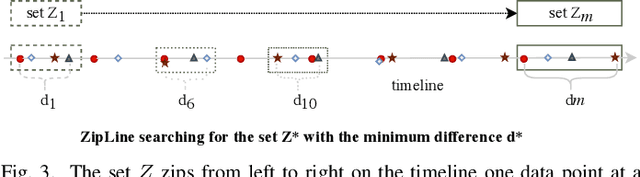

Elastic Bulk Synchronous Parallel Model for Distributed Deep Learning

Jan 06, 2020

Abstract: The bulk synchronous parallel (BSP) is a celebrated synchronization model for general-purpose parallel computing that has successfully been employed for distributed training of machine learning models. A prevalent shortcoming of the BSP is that it requires workers to wait for the straggler at every iteration. To ameliorate this shortcoming of classic BSP, we propose ELASTICBSP, a model that aims to relax its strict synchronization requirement. The proposed model offers more flexibility and adaptability during the training phase, without sacrificing the accuracy of the trained model. We also propose an efficient method that materializes the model, named ZIPLINE. The algorithm is tunable and can effectively balance the trade-off between quality of convergence and iteration throughput, in order to accommodate different environments or applications. A thorough experimental evaluation demonstrates that our proposed ELASTICBSP model converges faster and to a higher accuracy than the classic BSP. It also achieves accuracy comparable to (if not higher than) that of the other sensible synchronization models.

* The paper was accepted in the proceedings of the IEEE International Conference on Data Mining 2019 (ICDM'19), 1504-1509
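The straggler cost described in the abstract above can be made concrete with a small sketch. This is not ELASTICBSP or ZIPLINE: the abstract does not say how synchronization points are chosen, so the fixed `sync_every` interval, the function names, and the timing table are hypothetical, picked only to show why a barrier at every iteration is expensive.

```python
def bsp_time(step_times):
    """Wall-clock time when all workers hit a barrier after every iteration.

    step_times[i][w] is the compute time of worker w at iteration i;
    each iteration costs as much as its slowest worker (the straggler).
    """
    return sum(max(row) for row in step_times)


def relaxed_time(step_times, sync_every):
    """Wall-clock time with a barrier only every `sync_every` iterations.

    Assumption for illustration: workers run freely between barriers,
    so each window costs the largest per-worker total within it.
    """
    total = 0.0
    for start in range(0, len(step_times), sync_every):
        window = step_times[start:start + sync_every]
        n_workers = len(window[0])
        per_worker = [sum(row[w] for row in window) for w in range(n_workers)]
        total += max(per_worker)
    return total


# Hypothetical per-iteration times for 3 workers; the straggler rotates.
step_times = [
    [1.0, 1.0, 3.0],
    [3.0, 1.0, 1.0],
    [1.0, 3.0, 1.0],
    [1.0, 1.0, 1.0],
]

strict = bsp_time(step_times)
relaxed_2 = relaxed_time(step_times, sync_every=2)
relaxed_4 = relaxed_time(step_times, sync_every=4)
```

Because the slow worker differs from iteration to iteration, fewer barriers let the delays overlap, which shortens total time; the trade-off, as the abstract notes, is against quality of convergence.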

Learning to Determine the Quality of News Headlines

Nov 26, 2019

Abstract: Today, most newsreaders read the online version of news articles rather than traditional paper-based newspapers. Also, news media publishers rely heavily on the income generated from subscriptions and website visits made by newsreaders. Thus, online user engagement is a very important issue for online newspapers. Much effort has been spent on writing interesting headlines to catch the attention of online users. On the other hand, headlines should not be misleading (e.g., clickbaits); otherwise, readers would be disappointed when reading the content. In this paper, we propose four indicators to determine the quality of published news headlines based on their click count and dwell time, which are obtained by website log analysis. Then, we use the soft target distribution of the calculated quality indicators to train our proposed deep learning model, which can predict the quality of unpublished news headlines. The proposed model not only processes the latent features of both the headline and the body of the article to predict its headline quality but also considers the semantic relation between headline and body. To evaluate our model, we use a real dataset from a major Canadian newspaper. Results show our proposed model outperforms other state-of-the-art NLP models.

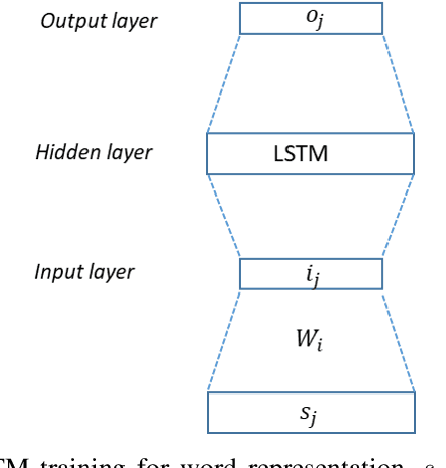

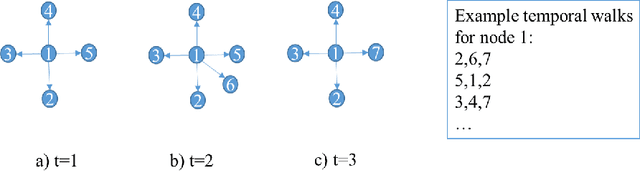

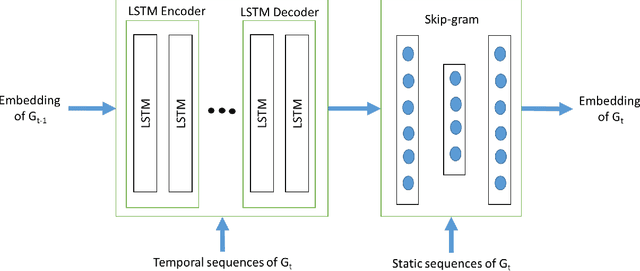

Dynamic Graph Embedding via LSTM History Tracking

Nov 05, 2019

Abstract: Many real-world networks are very large and constantly change over time. These dynamic networks exist in various domains such as social networks, traffic networks and biological interactions. To handle large dynamic networks in downstream applications such as link prediction and anomaly detection, it is essential for such networks to be transformed into a low-dimensional space. Recently, network embedding, a technique that converts a large graph into a low-dimensional representation, has become increasingly popular due to its strength in preserving the structure of a network. Efficient dynamic network embedding, however, has not yet been fully explored. In this paper, we present a dynamic network embedding method that integrates the history of nodes over time into the current state of nodes. The key contributions of our work are: 1) generating dynamic network embeddings by combining both dynamic and static node information; 2) tracking the history of neighbors of nodes using LSTM; and 3) significantly decreasing time and memory usage by training an autoencoder LSTM model using temporal walks rather than adjacency matrices of graphs, which are the common practice. We evaluate our method in multiple applications such as anomaly detection, link prediction and node classification on datasets from various domains.
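As a rough illustration of what a temporal walk is, the sketch below samples a walk over timestamped edges in which edge times never decrease. The abstract does not specify the sampling rule, so the uniform choice among valid edges, the non-decreasing time constraint, and all names here are assumptions rather than the paper's procedure.

```python
import random
from collections import defaultdict


def build_temporal_adjacency(edges):
    """Map each node to its outgoing (neighbor, timestamp) pairs."""
    adj = defaultdict(list)
    for u, v, t in edges:
        adj[u].append((v, t))
    return adj


def temporal_walk(adj, start, length, rng):
    """Sample a walk of up to `length` nodes that respects edge times.

    Assumption: the next edge is drawn uniformly from outgoing edges
    whose timestamp is not earlier than the previous edge's timestamp.
    The walk stops early when no such edge exists.
    """
    walk = [start]
    node, now = start, float("-inf")
    while len(walk) < length:
        candidates = [(v, t) for v, t in adj.get(node, []) if t >= now]
        if not candidates:
            break
        node, now = rng.choice(candidates)
        walk.append(node)
    return walk


# Hypothetical directed temporal edges: (source, target, timestamp).
edges = [("a", "b", 1), ("b", "c", 2), ("c", "d", 3), ("b", "a", 0)]
adj = build_temporal_adjacency(edges)
walk = temporal_walk(adj, start="a", length=5, rng=random.Random(0))
```

Here the edge from `b` back to `a` is never taken because its timestamp precedes the edge that reached `b`. Walks like this touch only the edges they visit, which is the intuition behind using them instead of full adjacency matrices.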

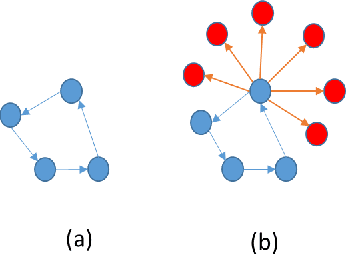

Dynamic Joint Variational Graph Autoencoders

Oct 04, 2019

Abstract: Learning network representations is a fundamental task for many graph applications such as link prediction, node classification, graph clustering, and graph visualization. Many real-world networks are interpreted as dynamic networks and evolve over time. Most existing graph embedding algorithms were developed mainly for static graphs and cannot capture the evolution of a large dynamic network. In this paper, we propose Dynamic joint Variational Graph Autoencoders (Dyn-VGAE) that can learn both local structures and temporal evolutionary patterns in a dynamic network. Dyn-VGAE provides a joint learning framework for computing temporal representations of all graph snapshots simultaneously. Each autoencoder embeds a graph snapshot based on its local structure and can also learn temporal dependencies by collaborating with other autoencoders. We conduct experimental studies on dynamic real-world graph datasets and the results demonstrate the effectiveness of the proposed method.
