Yonggang Hu

Elastic Bulk Synchronous Parallel Model for Distributed Deep Learning

Jan 06, 2020

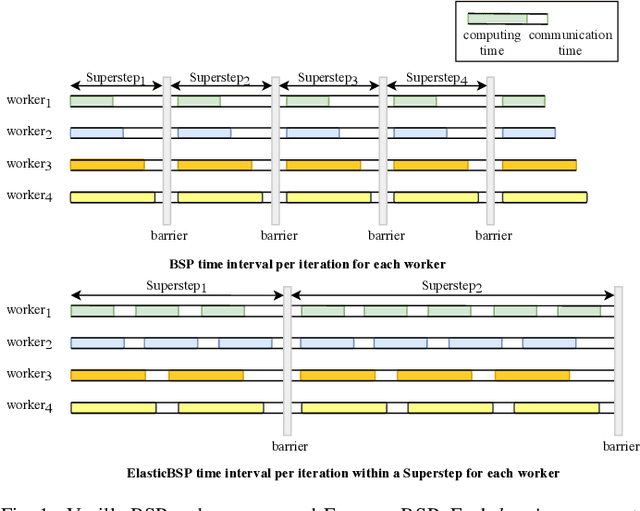

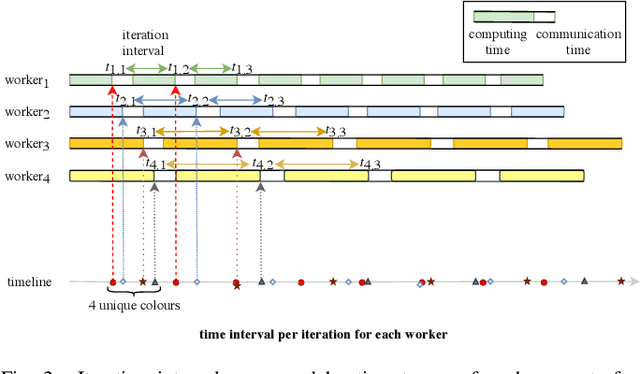

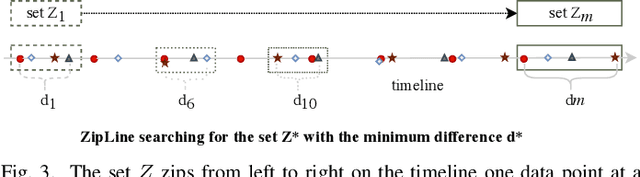

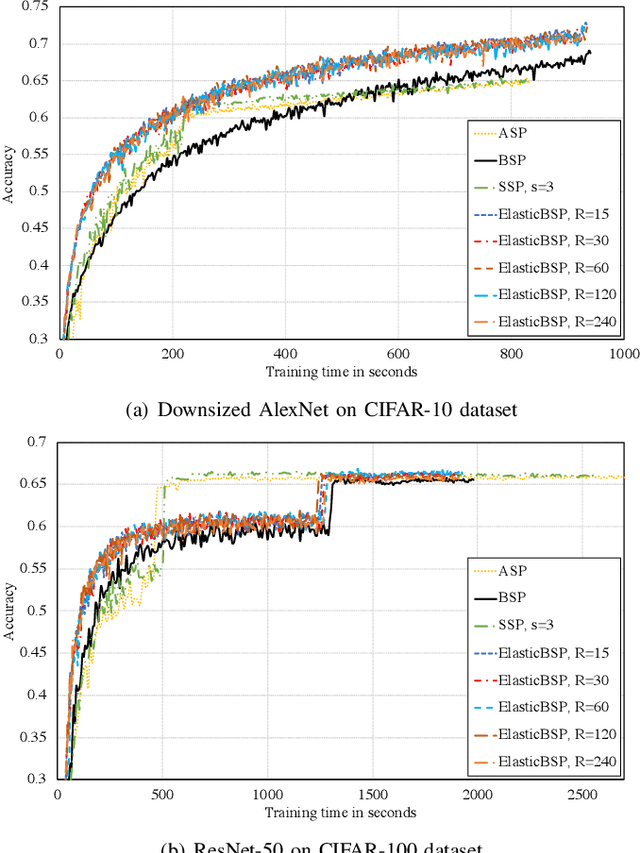

Abstract: The bulk synchronous parallel (BSP) is a celebrated synchronization model for general-purpose parallel computing that has been successfully employed for distributed training of machine learning models. A prevalent shortcoming of BSP is that it requires workers to wait for the straggler at every iteration. To ameliorate this shortcoming of classic BSP, we propose ELASTICBSP, a model that aims to relax its strict synchronization requirement. The proposed model offers more flexibility and adaptability during the training phase, without sacrificing the accuracy of the trained model. We also propose an efficient method that materializes the model, named ZIPLINE. The algorithm is tunable and can effectively balance the trade-off between quality of convergence and iteration throughput, in order to accommodate different environments or applications. A thorough experimental evaluation demonstrates that our proposed ELASTICBSP model converges faster and to a higher accuracy than the classic BSP. It also achieves accuracy comparable to (if not higher than) that of the other sensible synchronization models.

* The paper was accepted in the proceedings of the IEEE International Conference on Data Mining 2019 (ICDM'19), pp. 1504-1509.
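
The straggler cost of classic BSP mentioned in the abstract above can be illustrated with a small sketch. It covers only the classic barrier behaviour: every iteration ends when the slowest worker finishes, so faster workers sit idle. It does not implement ELASTICBSP or ZIPLINE, whose details are not given in this listing. The function names and the timing values are hypothetical, chosen purely for illustration.

```python
def bsp_total_time(step_times):
    """Wall-clock time of a classic BSP run.

    step_times[i][w] is the (hypothetical) compute time of worker w in
    iteration i. A barrier closes every iteration, so each iteration
    lasts as long as its slowest worker (the straggler).
    """
    return sum(max(row) for row in step_times)


def bsp_idle_time(step_times):
    """Total time workers spend waiting at barriers, summed over workers."""
    return sum(max(row) - t for row in step_times for t in row)


# Illustrative timings for 3 iterations on 4 workers (made-up numbers).
step_times = [
    [1.0, 1.0, 1.0, 3.0],  # worker 3 is the straggler
    [1.0, 1.2, 0.9, 1.1],  # nearly balanced iteration
    [2.5, 1.0, 1.0, 1.0],  # worker 0 is the straggler
]

print(f"total wall-clock time: {bsp_total_time(step_times):.1f}")
print(f"total idle time:       {bsp_idle_time(step_times):.1f}")
```

In this toy run, a single slow worker per iteration is enough to make the idle time exceed the wall-clock time, which is the waiting overhead that a relaxed synchronization model aims to reduce.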

Dynamic Graph Embedding via LSTM History Tracking

Nov 05, 2019

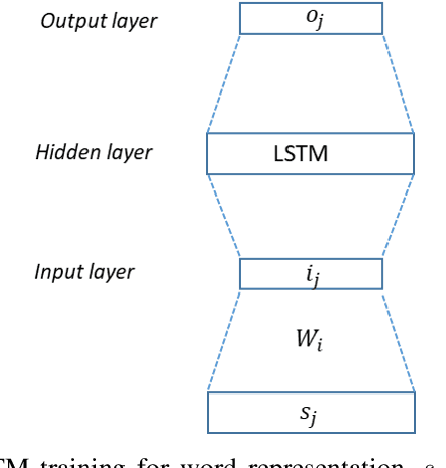

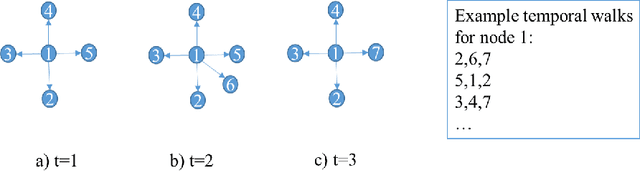

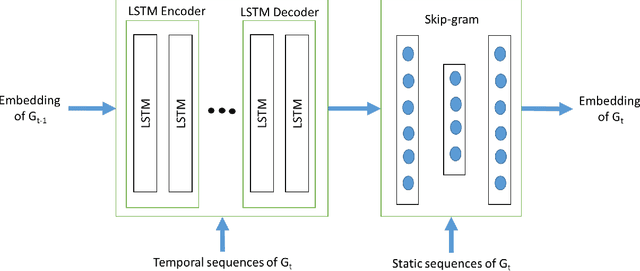

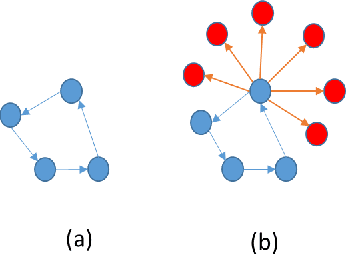

Abstract: Many real-world networks are very large and constantly change over time. These dynamic networks exist in various domains such as social networks, traffic networks and biological interactions. To handle large dynamic networks in downstream applications such as link prediction and anomaly detection, it is essential for such networks to be transferred into a low-dimensional space. Recently, network embedding, a technique that converts a large graph into a low-dimensional representation, has become increasingly popular due to its strength in preserving the structure of a network. Efficient dynamic network embedding, however, has not yet been fully explored. In this paper, we present a dynamic network embedding method that integrates the history of nodes over time into the current state of nodes. The key contributions of our work are: 1) generating dynamic network embeddings by combining both dynamic and static node information; 2) tracking the history of the neighbors of nodes using an LSTM; and 3) significantly decreasing time and memory usage by training an autoencoder LSTM model on temporal walks rather than on adjacency matrices of graphs, which is the common practice. We evaluate our method in multiple applications such as anomaly detection, link prediction and node classification on datasets from various domains.
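
As a rough illustration of the temporal walks mentioned in the abstract above, the sketch below samples a walk over timestamped edges in which timestamps never decrease along the path. This is a common way to define a temporal walk and is an assumption here; the paper's exact definition and sampling strategy may differ. Treating edges as directed, and the names `build_temporal_adjacency` and `temporal_walk`, are likewise hypothetical choices for this example.

```python
import random
from collections import defaultdict


def build_temporal_adjacency(edges):
    """Map each node to its outgoing (neighbor, timestamp) pairs.

    Edges are treated as directed (u -> v at time t); this is an
    assumption made for the sketch.
    """
    adj = defaultdict(list)
    for u, v, t in edges:
        adj[u].append((v, t))
    return adj


def temporal_walk(adj, start, length, rng):
    """Sample a walk of at most `length` nodes with non-decreasing timestamps."""
    walk = [start]
    node, now = start, float("-inf")
    while len(walk) < length:
        # Only edges that occur at or after the current time are valid.
        candidates = [(v, t) for v, t in adj.get(node, []) if t >= now]
        if not candidates:
            break  # no time-respecting edge left; the walk ends early
        node, now = rng.choice(candidates)
        walk.append(node)
    return walk


# Tiny made-up graph: the edge c -> a happens before b -> c,
# so a walk that arrives at c at time 2 cannot take it.
edges = [("a", "b", 1), ("b", "c", 2), ("c", "a", 1)]
adj = build_temporal_adjacency(edges)
print(temporal_walk(adj, "a", 5, random.Random(0)))
```

A sequence of such walks is a list of node sequences rather than an n-by-n matrix, which suggests why walk-based training can use less memory than adjacency-matrix input on large graphs.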
