Ahmad Chaddad

Federated Vision Transformer with Adaptive Focal Loss for Medical Image Classification

Feb 02, 2026

Abstract: While deep learning models such as the Vision Transformer (ViT) have achieved significant advances, they typically require large datasets. Data privacy regulations restrict access to many original datasets, especially medical images. Federated learning (FL) addresses this challenge by enabling global model aggregation without data exchange. However, data heterogeneity and class imbalance across local clients hinder model generalization. This study proposes an FL framework that combines a dynamic adaptive focal loss (DAFL) for local training with a client-aware aggregation strategy. Specifically, we design a dynamic class imbalance coefficient that adjusts based on each client's sample distribution and class data distribution, ensuring that minority classes receive sufficient attention and that sparse data are not ignored. To address client heterogeneity, a weighted aggregation strategy is adopted, which adapts to data size and characteristics to better capture inter-client variations. The classification results on three public datasets (ISIC, Ocular Disease and RSNA-ICH) show that the proposed framework outperforms DenseNet121, ResNet50, ViT-S/16, ViT-L/32, FedCLIP, Swin Transformer, CoAtNet, and MixNet in most cases, with accuracy improvements ranging from 0.98% to 41.69%. Ablation studies on the imbalanced ISIC dataset validate the effectiveness of the proposed loss function and aggregation strategy compared to traditional loss functions and other FL approaches. The code is available at: https://github.com/AIPMLab/ViT-FLDAF.
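
As a rough illustration of how a class-imbalance coefficient can enter a focal loss, the sketch below derives per-class weights from one client's label counts and uses them in the standard focal loss. The inverse-frequency rule and the names `class_weights` and `focal_loss` are assumptions made for illustration; the abstract does not state the DAFL formula, so this is not the paper's loss.

```python
import math

def class_weights(counts):
    # Inverse-frequency weights: rarer classes get larger weights.
    # Assumed rule for illustration only, not the DAFL coefficient.
    total = sum(counts)
    k = len(counts)
    return [total / (k * c) if c > 0 else 0.0 for c in counts]

def focal_loss(probs, label, alpha, gamma=2.0):
    # Standard focal loss for one sample:
    #   -alpha_t * (1 - p_t)^gamma * log(p_t)
    p_t = max(probs[label], 1e-12)  # guard against log(0)
    return -alpha[label] * (1.0 - p_t) ** gamma * math.log(p_t)

# A hypothetical client with 90 samples of class 0 and 10 of class 1.
alpha = class_weights([90, 10])
easy_majority = focal_loss([0.9, 0.1], 0, alpha)  # confident and correct
hard_minority = focal_loss([0.9, 0.1], 1, alpha)  # minority class, misclassified
```

With these inputs the misclassified minority sample contributes a much larger loss than the confidently classified majority sample, which is the behaviour the abstract describes qualitatively.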

GazeFormer-MoE: Context-Aware Gaze Estimation via CLIP and MoE Transformer

Jan 18, 2026

Abstract: We present a semantics-modulated, multi-scale Transformer for 3D gaze estimation. Our model conditions CLIP global features with learnable prototype banks (illumination, head pose, background, direction), fuses these prototype-enriched global vectors with CLIP patch tokens and high-resolution CNN tokens in a unified attention space, and replaces several FFN blocks with routed/shared Mixture of Experts (MoE) to increase conditional capacity. Evaluated on MPIIFaceGaze, EYEDIAP, Gaze360 and ETH-XGaze, our model achieves new state-of-the-art angular errors of 2.49°, 3.22°, 10.16°, and 1.44°, respectively, demonstrating up to a 64% relative improvement over previously reported results. Ablations attribute the gains to prototype conditioning, cross-scale fusion, MoE, and hyperparameter choices. Our code is publicly available at https://github.com/AIPMLab/Gazeformer.
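
To make the "routed/shared" wording concrete, here is a minimal sketch of a Mixture-of-Experts layer in which one shared expert always runs and a gate selects the top-k routed experts. The function `moe_forward`, the toy experts, and the choice to pass gate logits in directly (rather than computing them from the token with a learned layer) are assumptions for illustration, not the GazeFormer-MoE implementation.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def moe_forward(x, gate_logits, routed_experts, shared_expert, top_k=1):
    # Gate probabilities over the routed experts.
    probs = softmax(gate_logits)
    # Indices of the top-k experts by gate probability.
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    norm = sum(probs[i] for i in top)  # renormalise over the selected experts
    # The shared expert processes every token.
    out = shared_expert(x)
    # Only the selected routed experts are evaluated and mixed in.
    for i in top:
        w = probs[i] / norm
        out = [o + w * y for o, y in zip(out, routed_experts[i](x))]
    return out, top

# Toy experts acting on a 2-dimensional token (hypothetical).
experts = [lambda v: [2.0 * a for a in v], lambda v: [-a for a in v]]
shared = lambda v: list(v)
output, chosen = moe_forward([1.0, 2.0], [0.1, 3.0], experts, shared)
```

Because only `top_k` routed experts run per token, capacity grows with the number of experts while per-token compute stays roughly constant.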

Federated CLIP for Resource-Efficient Heterogeneous Medical Image Classification

Nov 11, 2025

Abstract: Despite the remarkable performance of deep models in medical imaging, they still require source data for training, which limits their potential in light of privacy concerns. Federated learning (FL), a decentralized learning framework that trains a shared model across multiple hospitals (a.k.a. FL clients), provides a feasible solution. However, data heterogeneity and resource costs hinder the deployment of FL models, especially when using vision language models (VLMs). To address these challenges, we propose a novel contrastive language-image pre-training (CLIP) based FL approach for medical image classification (FedMedCLIP). Specifically, we introduce a masked feature adaptation module (FAM) as a communication module to reduce the communication load, while freezing the CLIP encoders to reduce the computational overhead. Furthermore, we propose a masked multi-layer perceptron (MLP) as a private local classifier to adapt to the client tasks. Moreover, we design an adaptive Kullback-Leibler (KL) divergence-based distillation regularization method to enable mutual learning between FAM and MLP. Finally, we incorporate model compression to transmit the FAM parameters while using ensemble predictions for classification. Extensive experiments on four publicly available medical datasets demonstrate that our model achieves competitive performance (e.g., 8% higher than the second-best baseline on ISIC2019) at a reasonable resource cost (e.g., 120× faster than FedAVG).
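
The mutual-learning idea can be sketched with a symmetric KL term between the class distributions of two predictors. The function names below are hypothetical, and the "adaptive" weighting mentioned in the abstract is not specified there, so it is omitted; this shows only the plain, unweighted form.

```python
import math

def kl_divergence(p, q, eps=1e-12):
    # KL(p || q) = sum_i p_i * log(p_i / q_i); eps avoids division by zero.
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)

def mutual_distillation(p_shared, p_local):
    # Symmetric regulariser: each predictor is pulled toward the other.
    return kl_divergence(p_shared, p_local) + kl_divergence(p_local, p_shared)

# Hypothetical class probabilities from the shared module and the local classifier.
agree = mutual_distillation([0.7, 0.2, 0.1], [0.7, 0.2, 0.1])
disagree = mutual_distillation([0.7, 0.2, 0.1], [0.1, 0.2, 0.7])
```

The term is zero when both predictors output the same distribution and grows as they diverge.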

Enhancing Dual Network Based Semi-Supervised Medical Image Segmentation with Uncertainty-Guided Pseudo-Labeling

Sep 16, 2025

Abstract: Despite the remarkable performance of supervised medical image segmentation models, relying on a large amount of labeled data is impractical in real-world situations. Semi-supervised learning approaches aim to alleviate this challenge by exploiting unlabeled data through pseudo-label generation. Yet, existing semi-supervised segmentation methods still suffer from noisy pseudo-labels and insufficient supervision within the feature space. To address these challenges, this paper proposes a novel semi-supervised 3D medical image segmentation framework based on a dual-network architecture. Specifically, we investigate a Cross Consistency Enhancement module that uses both cross pseudo supervision and entropy-filtered supervision to reduce noisy pseudo-labels, and we design a dynamic weighting strategy that adjusts the contributions of pseudo-labels using an uncertainty-aware mechanism (i.e., Kullback-Leibler divergence). In addition, we use a self-supervised contrastive learning mechanism to align uncertain voxel features with reliable class prototypes by effectively differentiating between trustworthy and uncertain predictions, thus reducing prediction uncertainty. Extensive experiments are conducted on three 3D segmentation datasets: Left Atrial, NIH Pancreas and BraTS-2019. The proposed approach consistently exhibits superior performance across various settings (e.g., 89.95% Dice score on Left Atrial with 10% labeled data) compared to state-of-the-art methods. The usefulness of the proposed modules is further validated via ablation experiments.
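
One common way to realise entropy-filtered pseudo-labels is to keep a voxel's predicted class only when the entropy of its class distribution is low. The sketch below assumes a fixed threshold on normalised entropy; the threshold value and the function names are illustrative choices, not details taken from the paper.

```python
import math

def normalized_entropy(probs):
    # Shannon entropy divided by log(K), so the result lies in [0, 1].
    h = -sum(p * math.log(p) for p in probs if p > 0)
    return h / math.log(len(probs))

def filter_pseudo_labels(voxel_probs, max_entropy=0.5):
    # Keep the argmax class for confident voxels; mark the rest as None
    # so they can be excluded from the pseudo-label loss.
    labels = []
    for probs in voxel_probs:
        if normalized_entropy(probs) <= max_entropy:
            labels.append(max(range(len(probs)), key=lambda c: probs[c]))
        else:
            labels.append(None)
    return labels

# Two hypothetical voxels: one confident, one maximally uncertain.
pseudo = filter_pseudo_labels([[0.95, 0.05], [0.5, 0.5]])
```

Here the confident voxel keeps its label and the ambiguous one is masked out, which is how noisy pseudo-labels are kept away from the supervision signal.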

Classification based deep learning models for lung cancer and disease using medical images

Jul 02, 2025

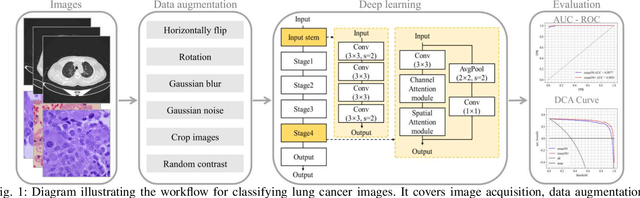

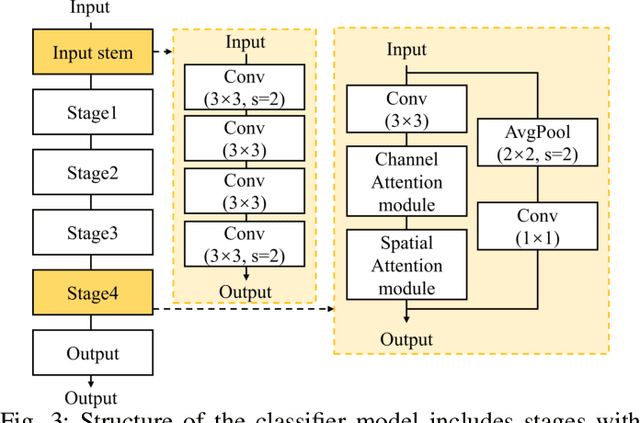

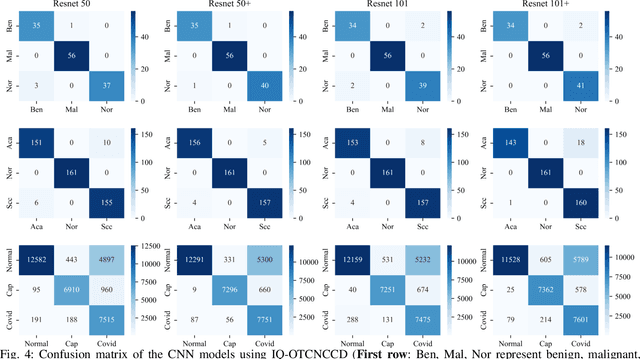

Abstract: The use of deep learning (DL) in medical image analysis has significantly improved the ability to predict lung cancer. In this study, we introduce a novel deep convolutional neural network (CNN) model, named ResNet+, which is based on the established ResNet framework. This model is specifically designed to improve the prediction of lung cancer and lung diseases from medical images. To address the loss of feature information that occurs during downsampling in CNNs, we integrate the ResNet-D module, a variant that enhances feature extraction by modifying the downsampling layers, into the traditional ResNet model. Furthermore, a convolutional attention module is incorporated into the bottleneck layers to enhance model generalization by allowing the network to focus on relevant regions of the input images. We evaluated the proposed model using five public datasets, comprising lung cancer (LC2500, n = 3183; IQ-OTH/NCCD, n = 1336; and LCC, n = 25000 images) and lung disease (ChestXray, n = 5856; and COVIDx-CT, n = 425024 images). To address class imbalance, we used data augmentation techniques to artificially increase the representation of underrepresented classes in the training dataset. The experimental results show that the ResNet+ model achieved an accuracy/F1 of 98.14%/98.14% on the LC25000 dataset and 99.25%/99.13% on the IQ-OTH/NCCD dataset. Furthermore, the ResNet+ model reduced computational cost compared to the original ResNet series in predicting lung cancer images. The proposed model outperformed the baseline models on publicly available datasets. Our code is publicly available at https://github.com/AIPMLab/Graduation-2024/tree/main/Peng.

Deep Modeling and Optimization of Medical Image Classification

May 29, 2025

Abstract: Deep models, such as convolutional neural networks (CNNs) and vision transformers (ViTs), demonstrate remarkable performance in image classification. However, these models require large amounts of data for fine-tuning, which is impractical in the medical domain due to data privacy issues. Furthermore, despite the solid performance of contrastive language-image pre-training (CLIP) in the natural image domain, its potential has not been fully investigated in the medical field. To address these challenges, we consider three scenarios: 1) we introduce a novel CLIP variant using four CNNs and eight ViTs as image encoders for the classification of brain cancer and skin cancer, 2) we combine 12 deep models with two federated learning (FL) techniques to protect data privacy, and 3) we use traditional machine learning (ML) methods to improve the generalization ability of these deep models on unseen domain data. The experimental results indicate that maxvit achieves the highest averaged (AVG) test metrics (AVG = 87.03%) on the HAM10000 dataset with multimodal learning, while convnext_l achieves a test F1-score of 83.98%, compared with 81.33% for swin_b, in the FL setting. Furthermore, a support vector machine (SVM) improves the AVG test metrics of the Swin Transformer series by approximately 2% on ISIC2018. Our code is available at https://github.com/AIPMLab/SkinCancerSimulation.

Generalizable and Explainable Deep Learning for Medical Image Computing: An Overview

Mar 11, 2025

Abstract: Objective. This paper presents an overview of generalizable and explainable artificial intelligence (XAI) in deep learning (DL) for medical imaging, aimed at addressing the urgent need for transparency and explainability in clinical applications. Methodology. We evaluate four CNNs on three medical datasets (brain tumor, skin cancer, and chest x-ray) for medical image classification tasks. In addition, we perform paired t-tests to assess the significance of the differences observed between methods. Furthermore, we combine ResNet50 with five common XAI techniques to obtain explainable results for model prediction, aiming at improving model transparency. We also use a quantitative metric (confidence increase) to evaluate the usefulness of XAI techniques. Key findings. The experimental results indicate that ResNet50 achieves reasonable accuracy and F1 score on all datasets (e.g., 86.31% accuracy in skin cancer). Furthermore, the findings show that while certain XAI methods, such as XgradCAM, effectively highlight relevant abnormal regions in medical images, others, like EigenGradCAM, may perform less effectively in specific scenarios. In addition, XgradCAM yields a higher confidence increase (e.g., 0.12 in glioma tumor) than GradCAM++ (0.09) and LayerCAM (0.08). Implications. Based on the experimental results and recent advancements, we outline future research directions to enhance the robustness and generalizability of DL models in the field of biomedical imaging.
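
For readers unfamiliar with the paired t-test mentioned in the methodology, the sketch below computes its test statistic from two lists of matched scores (for example, two methods evaluated on the same folds). The function name and the pairing scheme are assumptions; the abstract does not say which quantities were paired.

```python
import math
import statistics

def paired_t_statistic(scores_a, scores_b):
    # Work on the per-pair differences d_i = a_i - b_i.
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean_d = statistics.mean(diffs)
    sd_d = statistics.stdev(diffs)  # sample standard deviation (divides by n - 1)
    # t = mean(d) / (sd(d) / sqrt(n)), with n - 1 degrees of freedom.
    # Undefined (division by zero) when all differences are identical.
    t = mean_d / (sd_d / math.sqrt(n))
    return t, n - 1
```

The p-value is then read from a t distribution with the returned degrees of freedom; in practice `scipy.stats.ttest_rel` performs the whole test in one call.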

A Knowledge Distillation-Based Approach to Enhance Transparency of Classifier Models

Feb 21, 2025

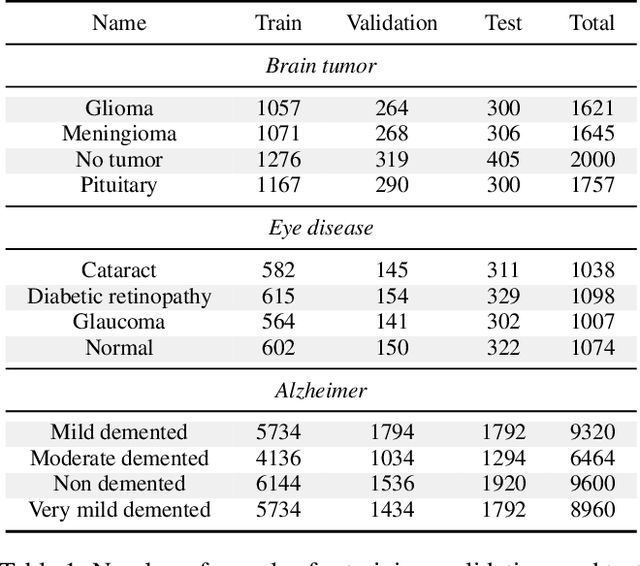

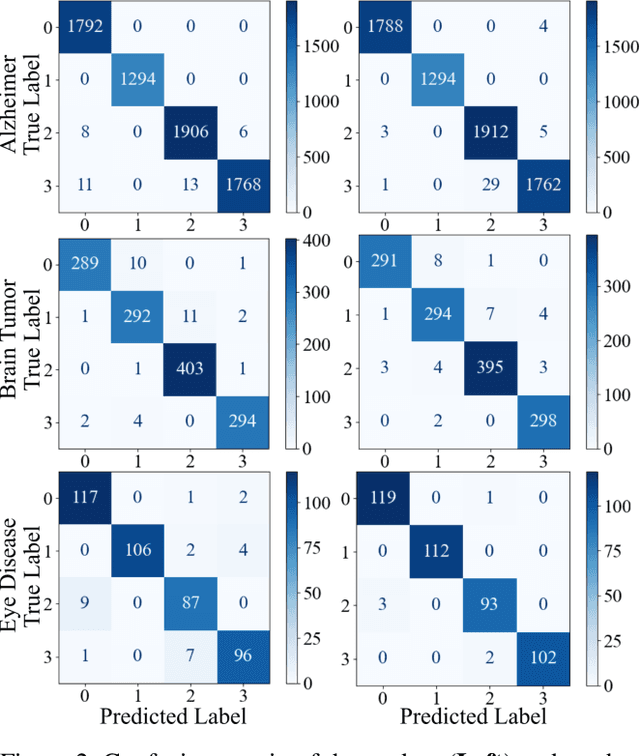

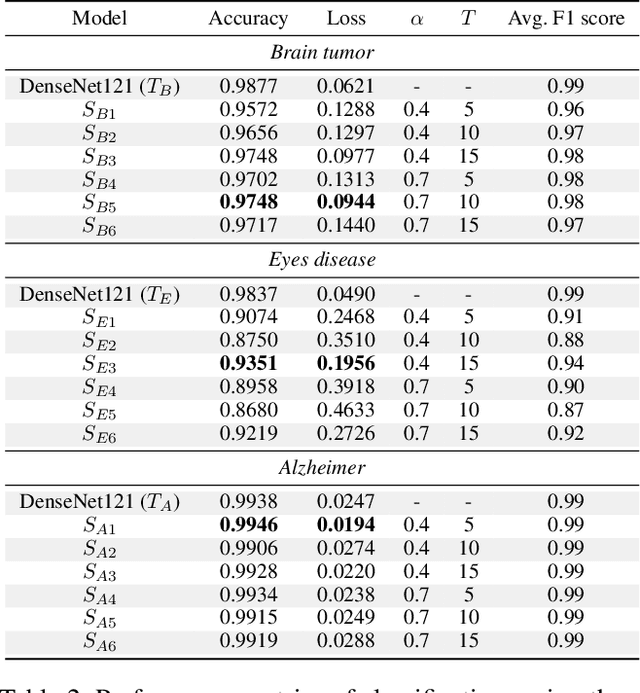

Abstract: With the rapid development of artificial intelligence (AI), especially in the medical field, the need for explainability has grown. In medical image analysis, a high degree of transparency and model interpretability can help clinicians better understand and trust the decision-making process of AI models. In this study, we propose a Knowledge Distillation (KD)-based approach that aims to enhance the transparency of AI models in medical image analysis. The first step is to train a traditional CNN as the teacher model and then use KD to simplify the CNN architecture, retaining most of the features of the dataset while reducing the number of network layers. The approach then uses the feature maps of the student model to perform hierarchical analysis that identifies key features and decision-making processes, leading to intuitive visual explanations. We selected three public medical datasets (brain tumor, eye disease, and Alzheimer's disease) to test our method. The results show that even when the number of layers is reduced, our model achieves strong results on the test set and reduces the time required for the interpretability analysis.

Explainable, Domain-Adaptive, and Federated Artificial Intelligence in Medicine

Nov 17, 2022

Abstract: Artificial intelligence (AI) continues to transform data analysis in many domains. Progress in each domain is driven by a growing body of annotated data, increased computational resources, and technological innovations. In medicine, the sensitivity of the data, the complexity of the tasks, the potentially high stakes, and a requirement of accountability give rise to a particular set of challenges. In this review, we focus on three key methodological approaches that address some of the particular challenges in AI-driven medical decision making. (1) Explainable AI aims to produce a human-interpretable justification for each output. Such models increase confidence if the results appear plausible and match the clinicians' expectations. However, the absence of a plausible explanation does not imply an inaccurate model. Especially in highly non-linear, complex models that are tuned to maximize accuracy, such interpretable representations only reflect a small portion of the justification. (2) Domain adaptation and transfer learning enable AI models to be trained and applied across multiple domains, for example, in a classification task based on images acquired on different hardware. (3) Federated learning enables learning large-scale models without exposing sensitive personal health information. Unlike centralized AI learning, where the centralized learning machine has access to the entire training data, the federated learning process iteratively updates models across multiple sites by exchanging only parameter updates, not personal health data. This narrative review covers the basic concepts, highlights relevant cornerstone and state-of-the-art research in the field, and discusses perspectives.
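
The federated update described in point (3) can be sketched as a FedAvg-style aggregation step, in which the server averages client parameters weighted by local sample counts. Parameters are flattened to plain lists here, and `federated_average` is a hypothetical name; real systems differ in what is exchanged (full weights, deltas, or compressed updates).

```python
def federated_average(client_params, client_sizes):
    # Weighted mean of each parameter across clients; weights are the
    # local sample counts, so no raw data ever leaves a site.
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(params[i] * size for params, size in zip(client_params, client_sizes)) / total
        for i in range(n_params)
    ]

# Two hypothetical sites holding 1 and 3 samples respectively.
global_params = federated_average([[1.0, 2.0], [3.0, 4.0]], [1, 3])
```

Each round, the server would send `global_params` back to the sites, which continue training locally before the next aggregation.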

Gaze Estimation Approach Using Deep Differential Residual Network

Aug 08, 2022

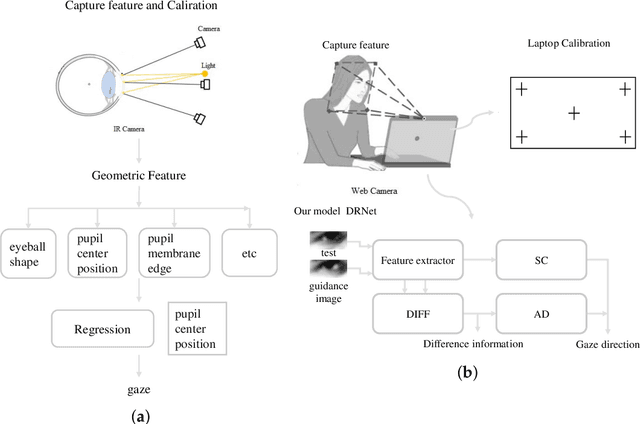

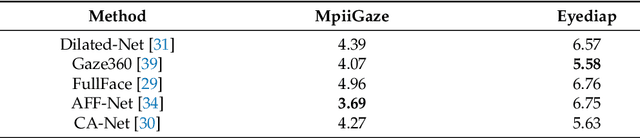

Abstract: Gaze estimation, which determines where a person is looking given an image of the person's full face, provides a valuable clue for understanding human intention. As in other domains of computer vision, deep learning (DL) methods have gained recognition in gaze estimation. However, gaze calibration problems remain, preventing existing methods from further improving performance. An effective solution is to directly predict the difference information of two human eyes, as in the differential network (Diff-Nn). However, this solution results in a loss of accuracy when using only one inference image. We propose a differential residual model (DRNet) combined with a new loss function to make use of the difference information of two eye images, which we treat as auxiliary information. We assess the proposed model (DRNet) mainly using two public datasets: (1) MpiiGaze and (2) Eyediap. Considering only the eye features, DRNet outperforms state-of-the-art gaze estimation methods, with angular errors of 4.57 and 6.14 on the MpiiGaze and Eyediap datasets, respectively. Furthermore, the experimental results also demonstrate that DRNet is extremely robust to noisy images.
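
The angular error reported in gaze estimation work is conventionally the angle between the predicted and ground-truth 3D gaze direction vectors. A minimal sketch follows, assuming both directions are given as non-zero 3D vectors and the result is wanted in degrees; the function name is illustrative.

```python
import math

def angular_error_deg(pred, target):
    # Cosine of the angle from the normalised dot product.
    dot = sum(p * t for p, t in zip(pred, target))
    norm = math.sqrt(sum(p * p for p in pred)) * math.sqrt(sum(t * t for t in target))
    # Clamp to [-1, 1] so rounding error cannot push acos out of its domain.
    cos = max(-1.0, min(1.0, dot / norm))
    return math.degrees(math.acos(cos))
```

A dataset-level score is typically the mean of this quantity over all test samples.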
