Adrian Jackson

MoE-Gen: High-Throughput MoE Inference on a Single GPU with Module-Based Batching

Mar 12, 2025

Abstract:This paper presents MoE-Gen, a high-throughput MoE inference system optimized for single-GPU execution. Existing inference systems rely on model-based or continuous batching strategies, originally designed for interactive inference, which result in excessively small batches for MoE's key modules (attention and expert modules), leading to poor throughput. To address this, we introduce module-based batching, which accumulates tokens in host memory and dynamically launches large batches on GPUs to maximize utilization. Additionally, we optimize the choice of batch sizes for each module in an MoE to fully overlap GPU computation and communication, maximizing throughput. Evaluation demonstrates that MoE-Gen achieves 8-31x higher throughput compared to state-of-the-art systems employing model-based batching (FlexGen, MoE-Lightning, DeepSpeed), and offers even greater throughput improvements over continuous batching systems (e.g., vLLM and Ollama) on popular MoE models (DeepSeek and Mixtral) across offline inference tasks. MoE-Gen's source code is publicly available at https://github.com/EfficientMoE/MoE-Gen
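The idea of module-based batching described in this abstract can be illustrated with a minimal Python sketch. It is not MoE-Gen's implementation: the `ModuleBatcher` class, the `launch` callback, the module names, and the batch sizes are all hypothetical, and a plain Python list stands in for the host-memory buffer. The sketch only shows the accumulation pattern, in which each module collects tokens until its own batch size is reached and is then launched once on the full batch.

```python
from collections import defaultdict
from typing import Callable, Dict, List


class ModuleBatcher:
    """Toy per-module token accumulator (hypothetical, for illustration only)."""

    def __init__(self, batch_sizes: Dict[str, int],
                 launch: Callable[[str, List[int]], None]) -> None:
        self.batch_sizes = batch_sizes      # assumed per-module batch size
        self.launch = launch                # stand-in for a GPU kernel launch
        self.buffers: Dict[str, List[int]] = defaultdict(list)  # "host memory"

    def submit(self, module: str, token: int) -> None:
        """Buffer one token; launch the module once its batch is full."""
        buffer = self.buffers[module]
        buffer.append(token)
        if len(buffer) >= self.batch_sizes[module]:
            self.launch(module, list(buffer))
            buffer.clear()

    def flush(self) -> None:
        """Launch whatever is left in each buffer as a final partial batch."""
        for module, buffer in self.buffers.items():
            if buffer:
                self.launch(module, list(buffer))
                buffer.clear()


# Record (module, batch length) for each launch instead of running a model.
launched = []
batcher = ModuleBatcher({"attention": 4, "expert": 2},
                        lambda module, tokens: launched.append((module, len(tokens))))
for token_id in range(10):
    batcher.submit("attention", token_id)
batcher.flush()
```

In this toy run, ten tokens submitted to a module with batch size 4 produce two full launches and one partial launch on `flush`. Choosing the per-module batch sizes so that computation and data transfer overlap is the optimization the abstract refers to, and it is outside the scope of this sketch.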

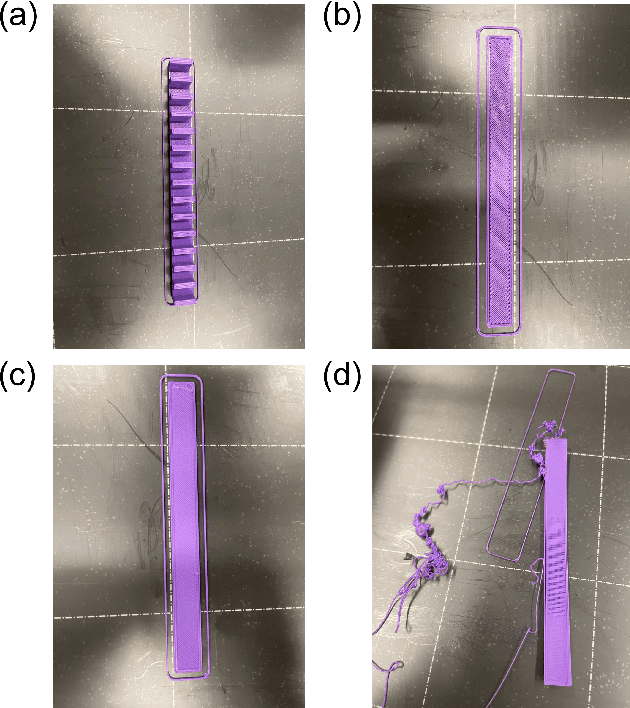

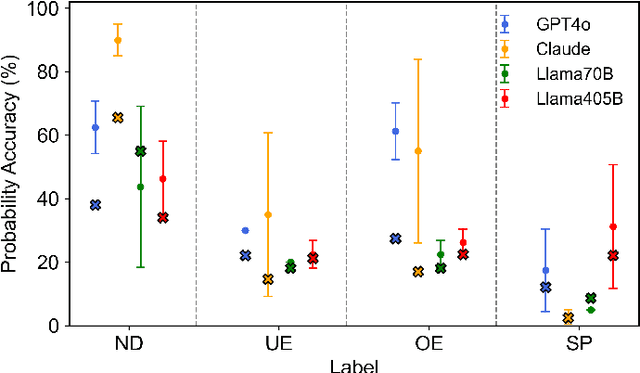

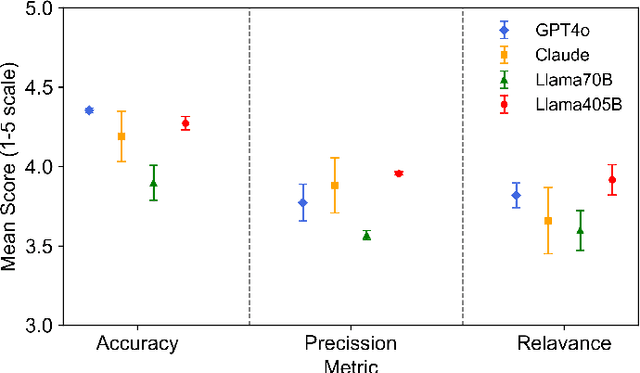

FDM-Bench: A Comprehensive Benchmark for Evaluating Large Language Models in Additive Manufacturing Tasks

Dec 13, 2024

Abstract:Fused Deposition Modeling (FDM) is a widely used additive manufacturing (AM) technique valued for its flexibility and cost-efficiency, with applications in a variety of industries including healthcare and aerospace. Recent developments have made affordable FDM machines accessible and encouraged adoption among diverse users. However, the design, planning, and production process in FDM require specialized interdisciplinary knowledge. Managing the complex parameters and resolving print defects in FDM remain challenging. These technical complexities form the most critical barrier preventing individuals without technical backgrounds and even professional engineers without training in other domains from participating in AM design and manufacturing. Large Language Models (LLMs), with their advanced capabilities in text and code processing, offer the potential for addressing these challenges in FDM. However, existing research on LLM applications in this field is limited, typically focusing on specific use cases without providing comprehensive evaluations across multiple models and tasks. To this end, we introduce FDM-Bench, a benchmark dataset designed to evaluate LLMs on FDM-specific tasks. FDM-Bench enables a thorough assessment by including user queries across various experience levels and G-code samples that represent a range of anomalies. We evaluate two closed-source models (GPT-4o and Claude 3.5 Sonnet) and two open-source models (Llama-3.1-70B and Llama-3.1-405B) on FDM-Bench. A panel of FDM experts assess the models' responses to user queries in detail. Results indicate that closed-source models generally outperform open-source models in G-code anomaly detection, whereas Llama-3.1-405B demonstrates a slight advantage over other models in responding to user queries. These findings underscore FDM-Bench's potential as a foundational tool for advancing research on LLM capabilities in FDM.

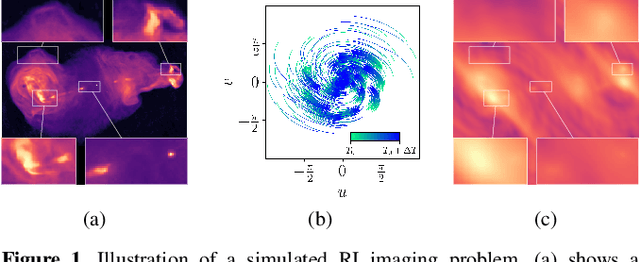

Plug-and-play imaging with model uncertainty quantification in radio astronomy

Dec 14, 2023

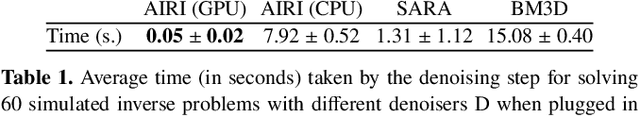

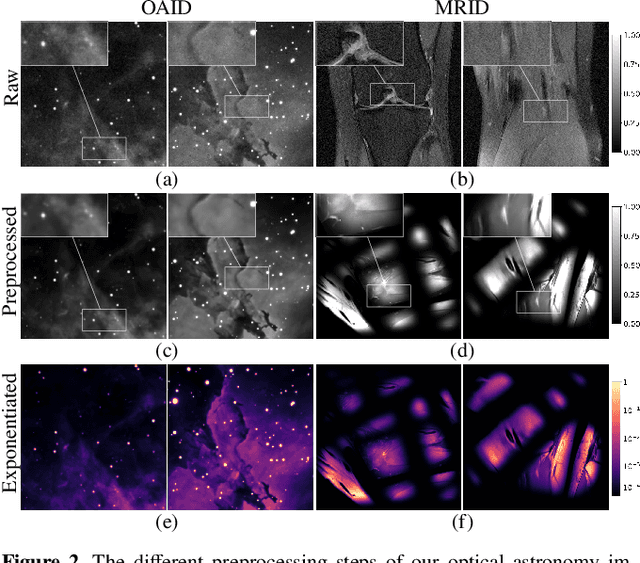

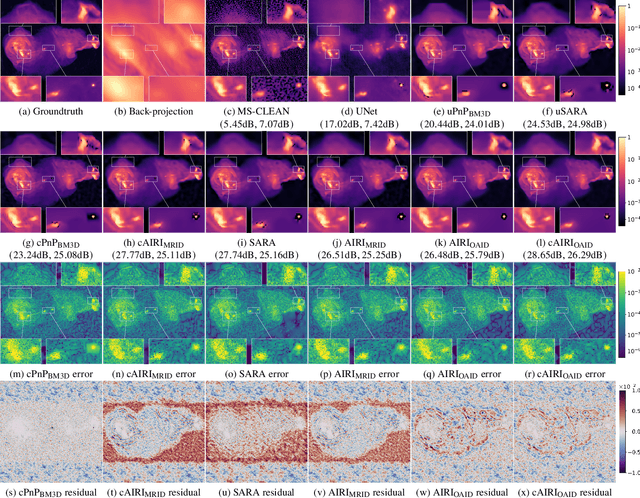

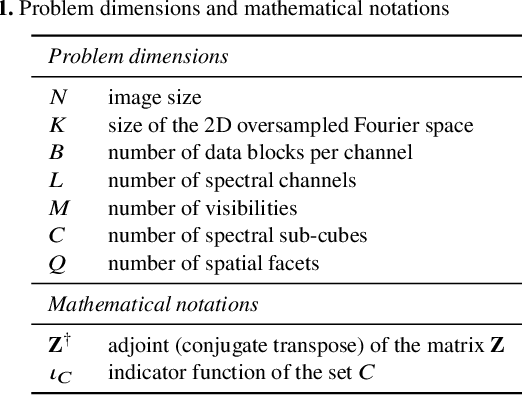

Abstract:Plug-and-Play (PnP) algorithms are appealing alternatives to proximal algorithms when solving inverse imaging problems. By learning a Deep Neural Network (DNN) behaving as a proximal operator, one waives the computational complexity of optimisation algorithms induced by sophisticated image priors, and the sub-optimality of handcrafted priors compared to DNNs. At the same time, these methods inherit the versatility of optimisation algorithms allowing the minimisation of a large class of objective functions. Such features are highly desirable in radio-interferometric (RI) imaging in astronomy, where the data size, the ill-posedness of the problem and the dynamic range of the target reconstruction are critical. In a previous work, we introduced a class of convergent PnP algorithms, dubbed AIRI, relying on a forward-backward algorithm, with a differentiable data-fidelity term and dynamic range-specific denoisers trained on highly pre-processed unrelated optical astronomy images. Here, we show that AIRI algorithms can benefit from a constrained data fidelity term at the mere cost of transferring to a primal-dual forward-backward algorithmic backbone. Moreover, we show that AIRI algorithms are robust to strong variations in the nature of the training dataset: denoisers trained on MRI images yield similar reconstructions to those trained on astronomical data. We additionally quantify the model uncertainty introduced by the randomness in the training process and suggest that AIRI algorithms are robust to model uncertainty. Finally, we propose an exhaustive comparison with methods from the radio-astronomical imaging literature and show the superiority of the proposed method over the current state-of-the-art.

Deep network series for large-scale high-dynamic range imaging

Oct 28, 2022

Abstract:We propose a new approach for large-scale high-dynamic range computational imaging. Deep Neural Networks (DNNs) trained end-to-end can solve linear inverse imaging problems almost instantaneously. While unfolded architectures provide necessary robustness to variations of the measurement setting, embedding large-scale measurement operators in DNN architectures is impractical. Alternative Plug-and-Play (PnP) approaches, where the denoising DNNs are blind to the measurement setting, have proven effective in addressing scalability and high-dynamic range challenges, but rely on highly iterative algorithms. We propose a residual DNN series approach, where the reconstructed image is built as a sum of residual images progressively increasing the dynamic range, and estimated iteratively by DNNs taking the back-projected data residual of the previous iteration as input. We demonstrate on simulations for radio-astronomical imaging that a series of only a few terms provides a high-dynamic range reconstruction of similar quality to state-of-the-art PnP approaches, at a fraction of the cost.
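The series structure described in this abstract can be sketched in a few lines of Python, under loud assumptions: the measurement operator is a tiny dense matrix `a`, and each trained DNN is replaced by a hypothetical stand-in, `scaled_identity`, which just rescales its input. With that stand-in the loop reduces to a plain Landweber (gradient) iteration, so the sketch shows only how the image is accumulated as a sum of residual terms, not the behaviour of the networks in the paper.

```python
from typing import Callable, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(a: Matrix, x: Sequence[float]) -> Vector:
    """Apply the measurement operator: a @ x."""
    return [sum(a_ij * x_j for a_ij, x_j in zip(row, x)) for row in a]


def rmatvec(a: Matrix, y: Sequence[float]) -> Vector:
    """Back-project data into image space: a^T @ y."""
    return [sum(a[i][j] * y[i] for i in range(len(a))) for j in range(len(a[0]))]


def residual_series(a: Matrix, y: Sequence[float],
                    networks: Sequence[Callable[[Vector], Vector]]) -> Vector:
    """Build the image as a sum of residual images, one per network."""
    x = [0.0] * len(a[0])
    for net in networks:
        # Data residual of the current estimate, back-projected to image space.
        data_residual = [y_i - p_i for y_i, p_i in zip(y, matvec(a, x))]
        back_projected = rmatvec(a, data_residual)
        # The network maps the back-projected residual to a residual image.
        residual_image = net(back_projected)
        x = [x_j + r_j for x_j, r_j in zip(x, residual_image)]
    return x


def scaled_identity(step: float) -> Callable[[Vector], Vector]:
    """Hypothetical stand-in for a trained DNN: rescale the input."""
    return lambda v: [step * v_j for v_j in v]


a = [[2.0, 0.0], [0.0, 1.0]]   # toy measurement operator (assumption)
y = [4.0, 3.0]                  # toy data generated by the image [2.0, 3.0]
one_term = residual_series(a, y, [scaled_identity(0.2)])
five_terms = residual_series(a, y, [scaled_identity(0.2)] * 5)
```

In this toy setting `five_terms` lies closer to the generating image than `one_term`, which mirrors the idea of each term refining the estimate left by the previous ones.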

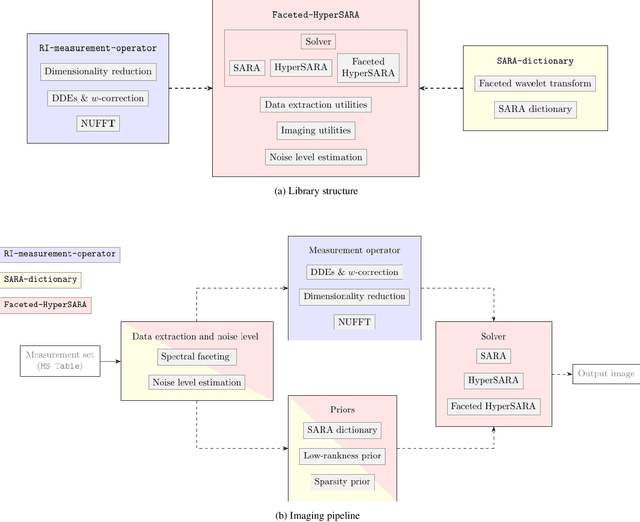

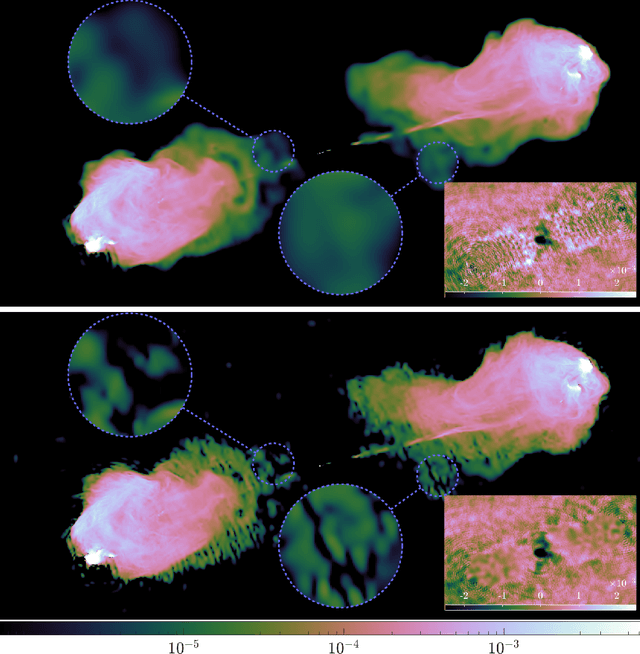

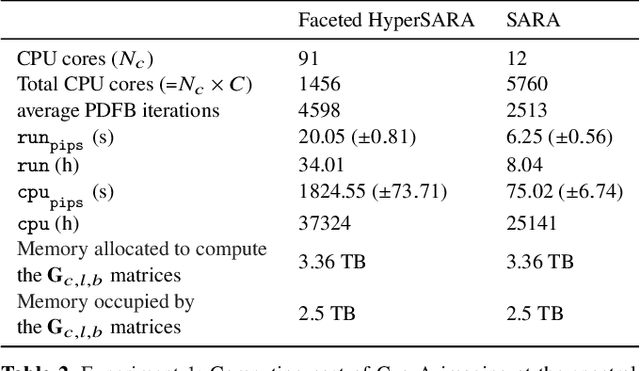

Parallel faceted imaging in radio interferometry via proximal splitting (Faceted HyperSARA): II. Code and real data proof of concept

Sep 15, 2022

Abstract:In a companion paper, a faceted wideband imaging technique for radio interferometry, dubbed Faceted HyperSARA, has been introduced and validated on synthetic data. Building on the recent HyperSARA approach, Faceted HyperSARA leverages the splitting functionality inherent to the underlying primal-dual forward-backward algorithm to decompose the image reconstruction over multiple spatio-spectral facets. The approach allows complex regularization to be injected into the imaging process while providing additional parallelization flexibility compared to HyperSARA. The present paper introduces new algorithm functionalities to address real datasets, implemented as part of a fully fledged MATLAB imaging library made available on GitHub. A large-scale proof of concept is proposed to validate Faceted HyperSARA in a new data and parameter scale regime, compared to the state-of-the-art. The reconstruction of a 15 GB wideband image of Cyg A from 7.4 GB of VLA data is considered, utilizing 1440 CPU cores on an HPC system for about 9 hours. The conducted experiments illustrate the reconstruction performance of the proposed approach on real data, exploiting new functionalities to set both an accurate model of the measurement operator accounting for known direction-dependent effects (DDEs) and an effective noise level accounting for imperfect calibration. They also demonstrate that, when combined with a further dimensionality reduction functionality, Faceted HyperSARA enables the recovery of a 3.6 GB image of Cyg A from the same data using only 91 CPU cores for 39 hours. In this setting, the proposed approach is shown to provide a superior reconstruction quality compared to the state-of-the-art wideband CLEAN-based algorithm of the WSClean software.
