Arwa Dabbech

Towards a robust R2D2 paradigm for radio-interferometric imaging: revisiting DNN training and architecture

Mar 04, 2025

Abstract:The R2D2 Deep Neural Network (DNN) series was recently introduced for image formation in radio interferometry. It can be understood as a learned version of CLEAN, whose minor cycles are substituted with DNNs. We revisit R2D2 on the grounds of series convergence, training methodology, and DNN architecture, improving its robustness in terms of generalisability beyond training conditions, capability to deliver high data fidelity, and epistemic uncertainty. Firstly, while still focusing on telescope-specific training, we enhance the learning process by randomising Fourier sampling integration times, incorporating multi-scan multi-noise configurations, and varying imaging settings, including pixel resolution and visibility-weighting scheme. Secondly, we introduce a convergence criterion whereby the reconstruction process stops when the data residual is compatible with noise, rather than simply using all available DNNs. This not only increases the reconstruction efficiency by reducing its computational cost, but also refines training by pruning out the data/image pairs for which optimal data fidelity is reached before training the next DNN. Thirdly, we substitute R2D2's early U-Net DNN with a novel architecture (U-WDSR) combining U-Net and WDSR, which leverages wide activation, dense connections, weight normalisation, and low-rank convolution to improve feature reuse and reconstruction precision. As previously, R2D2 was trained for monochromatic intensity imaging with the Very Large Array (VLA) at fixed $512 \times 512$ image size. Simulations on a wide range of inverse problems and a case study on real data reveal that the new R2D2 model consistently outperforms its earlier version in image reconstruction quality, data fidelity, and epistemic uncertainty.
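The convergence criterion described in this abstract (stop once the data residual is compatible with noise, instead of applying every available DNN) can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the abstract does not state the exact test used, so the sketch compares the residual norm with the expected norm of real i.i.d. Gaussian noise, uses a small random matrix `A` in place of the telescope measurement operator, and uses plain gradient steps as hypothetical stand-ins for the trained DNNs.

```python
import numpy as np

def residual_is_noise_like(y, y_model, sigma, tol=1.1):
    """Return True when ||y - y_model||_2 is within `tol` of the expected noise norm.

    Assumes real i.i.d. Gaussian noise with standard deviation `sigma`,
    for which the expected squared norm is sigma**2 * y.size.
    """
    residual_norm = np.linalg.norm(y - y_model)
    expected_noise_norm = sigma * np.sqrt(y.size)
    return residual_norm <= tol * expected_noise_norm

def run_series(y, A, steps, sigma, tol=1.1):
    """Apply the update steps in order, stopping early once the residual is noise-like."""
    x = np.zeros(A.shape[1])
    used = 0
    for step in steps:
        if residual_is_noise_like(y, A @ x, sigma, tol):
            break  # data fidelity reached: skip the remaining steps
        x = x + step(A.T @ (y - A @ x))
        used += 1
    return x, used

# Toy problem (hypothetical): tall random operator, noisy measurements.
rng = np.random.default_rng(0)
A = rng.standard_normal((400, 50)) / np.sqrt(400)
x_true = rng.standard_normal(50)
sigma = 0.01
y = A @ x_true + sigma * rng.standard_normal(400)

# Stand-ins for trained DNNs: scaled gradient steps (NOT the R2D2 networks).
lipschitz = np.linalg.norm(A, 2) ** 2
steps = [lambda r: r / lipschitz] * 50

x_hat, used = run_series(y, A, steps, sigma)
print(f"steps used: {used} of {len(steps)}")
```

In this toy setting the loop exits well before the list of steps is exhausted, which mirrors the efficiency argument in the abstract: steps that would only fit noise are never applied.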

R2D2 image reconstruction with model uncertainty quantification in radio astronomy

Mar 26, 2024

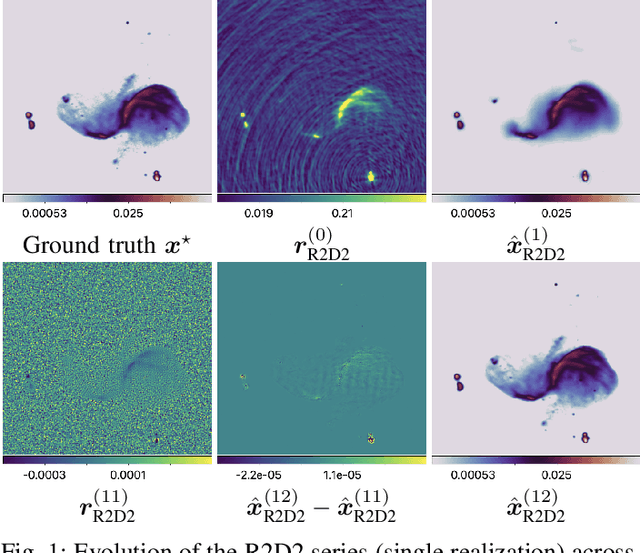

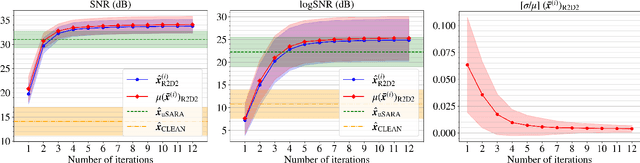

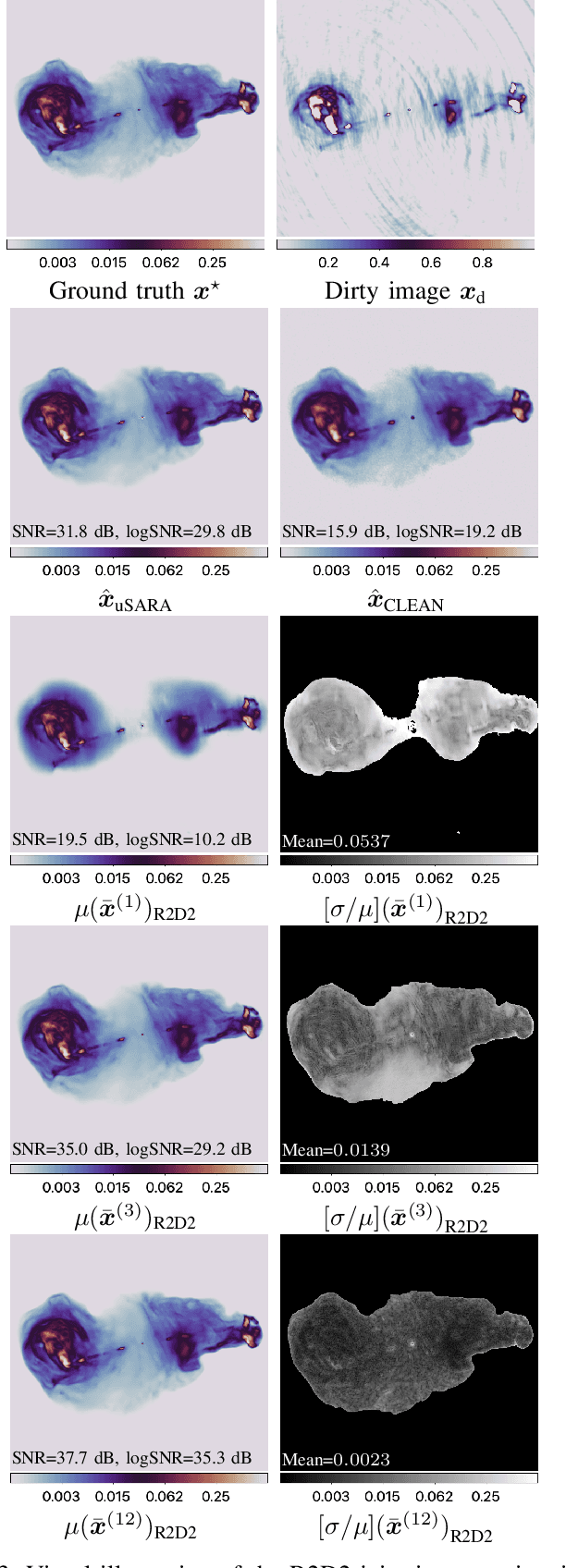

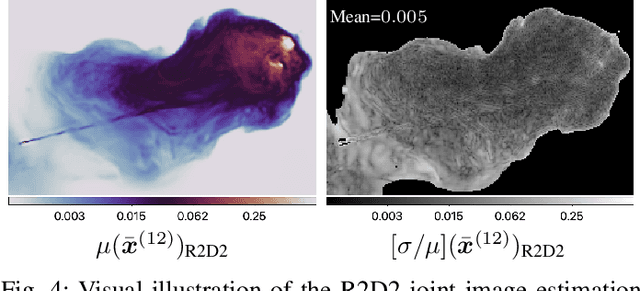

Abstract:The ``Residual-to-Residual DNN series for high-Dynamic range imaging'' (R2D2) approach was recently introduced for Radio-Interferometric (RI) imaging in astronomy. R2D2's reconstruction is formed as a series of residual images, iteratively estimated as outputs of Deep Neural Networks (DNNs) taking the previous iteration's image estimate and associated data residual as inputs. In this work, we investigate the robustness of the R2D2 image estimation process, by studying the uncertainty associated with its series of learned models. Adopting an ensemble averaging approach, multiple series can be trained, arising from different random DNN initializations of the training process at each iteration. The resulting multiple R2D2 instances can also be leveraged to generate ``R2D2 samples'', from which empirical mean and standard deviation endow the algorithm with a joint estimation and uncertainty quantification functionality. Focusing on RI imaging, and adopting a telescope-specific approach, multiple R2D2 instances were trained to encompass the most general observation setting of the Very Large Array (VLA). Simulations and real-data experiments confirm that: (i) R2D2's image estimation capability is superior to that of the state-of-the-art algorithms; (ii) its ultra-fast reconstruction capability (arising from series with only a few DNNs) makes the computation of multiple reconstruction samples and of uncertainty maps practical even at large image dimension; (iii) it is characterized by a very low model uncertainty.
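The ensemble step in this abstract, where the empirical mean and standard deviation of several "R2D2 samples" give a joint estimate and uncertainty map, can be sketched as follows. This is only an illustration under stated assumptions: the samples here are synthetic arrays (a hypothetical ground truth plus small perturbations), not outputs of trained R2D2 instances, and the function name `ensemble_statistics` is made up for this example.

```python
import numpy as np

def ensemble_statistics(samples):
    """Pixel-wise empirical mean and standard deviation over a list of images."""
    stack = np.stack(samples, axis=0)       # shape: (n_samples, height, width)
    mean_image = stack.mean(axis=0)         # joint estimate
    std_image = stack.std(axis=0, ddof=1)   # uncertainty map (unbiased variance estimator)
    return mean_image, std_image

# Synthetic stand-ins for reconstructions from independently trained series.
rng = np.random.default_rng(1)
ground_truth = rng.random((8, 8))
samples = [ground_truth + 0.01 * rng.standard_normal((8, 8)) for _ in range(5)]

mean_image, std_image = ensemble_statistics(samples)
print("max pixel-wise std:", std_image.max())
```

A low standard deviation relative to the mean across pixels is what the abstract refers to as low model uncertainty.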

The R2D2 deep neural network series paradigm for fast precision imaging in radio astronomy

Mar 12, 2024

Abstract:Radio-interferometric (RI) imaging entails solving high-resolution high-dynamic range inverse problems from large data volumes. Recent image reconstruction techniques grounded in optimization theory have demonstrated remarkable capability for imaging precision, well beyond CLEAN's capability. These range from advanced proximal algorithms propelled by handcrafted regularization operators, such as the SARA family, to hybrid plug-and-play (PnP) algorithms propelled by learned regularization denoisers, such as AIRI. Optimization and PnP structures are however highly iterative, which hinders their ability to handle the extreme data sizes expected from future instruments. To address this scalability challenge, we introduce a novel deep learning approach, dubbed ``Residual-to-Residual DNN series for high-Dynamic range imaging''. R2D2's reconstruction is formed as a series of residual images, iteratively estimated as outputs of Deep Neural Networks (DNNs) taking the previous iteration's image estimate and associated data residual as inputs. It thus takes a hybrid structure between a PnP algorithm and a learned version of the matching pursuit algorithm that underpins CLEAN. We present a comprehensive study of our approach, featuring its multiple incarnations distinguished by their DNN architectures. We provide a detailed description of its training process, targeting a telescope-specific approach. R2D2's capability to deliver high precision is demonstrated in simulation, across a variety of image and observation settings using the Very Large Array (VLA). Its reconstruction speed is also demonstrated: with only a few iterations required to clean data residuals at dynamic ranges up to 100000, R2D2 opens the door to fast precision imaging. R2D2 codes are available in the BASPLib library on GitHub.
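The series structure described in this abstract, where each term takes the previous image estimate and the associated data residual and returns a residual image that is added to the estimate, can be sketched as below. The sketch rests on several assumptions not found in the abstract: the measurement operator is a masked orthonormal 2D FFT, the data residual is passed to each term after back-projection to the image domain, and the "networks" are identity maps on that residual image. It does not reproduce the BASPLib code or the trained DNNs.

```python
import numpy as np

def forward(x, mask):
    """Toy measurement operator: orthonormal 2D FFT restricted to sampled frequencies."""
    return mask * np.fft.fft2(x, norm="ortho")

def adjoint(v, mask):
    """Adjoint of `forward` for real-valued images."""
    return np.real(np.fft.ifft2(mask * v, norm="ortho"))

def r2d2_like_series(y, mask, networks):
    """Sum of residual images: x_i = x_{i-1} + N_i(x_{i-1}, r_{i-1})."""
    x = np.zeros(mask.shape)
    for net in networks:
        residual_image = adjoint(y - forward(x, mask), mask)  # back-projected data residual
        x = x + net(x, residual_image)
    return x

# Toy data (hypothetical): random image, random 30% Fourier sampling.
rng = np.random.default_rng(2)
x_true = rng.random((16, 16))
mask = rng.random((16, 16)) < 0.3
y = forward(x_true, mask)

# Stand-ins for trained DNNs: each returns the residual image unchanged.
networks = [lambda x, r: r] * 5

x_hat = r2d2_like_series(y, mask, networks)
print("relative data residual:",
      np.linalg.norm(y - forward(x_hat, mask)) / np.linalg.norm(y))
```

With these identity stand-ins the loop reduces to a plain gradient iteration on the data-fidelity term; the point of the paradigm, as the abstract states, is that learned terms need only a few iterations.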

Parallel faceted imaging in radio interferometry via proximal splitting (Faceted HyperSARA): II. Code and real data proof of concept

Sep 15, 2022

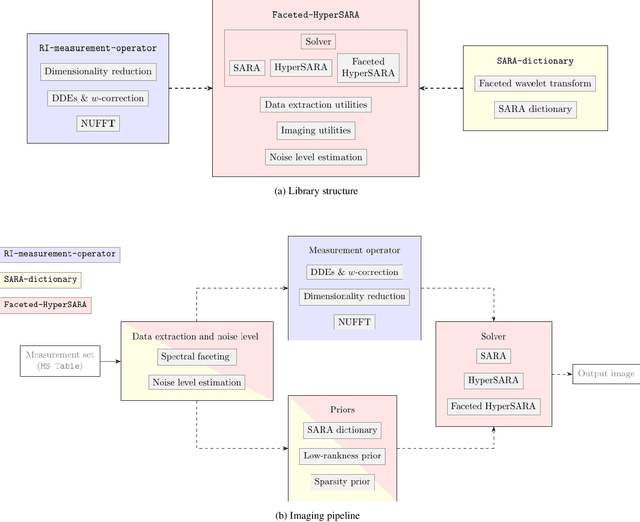

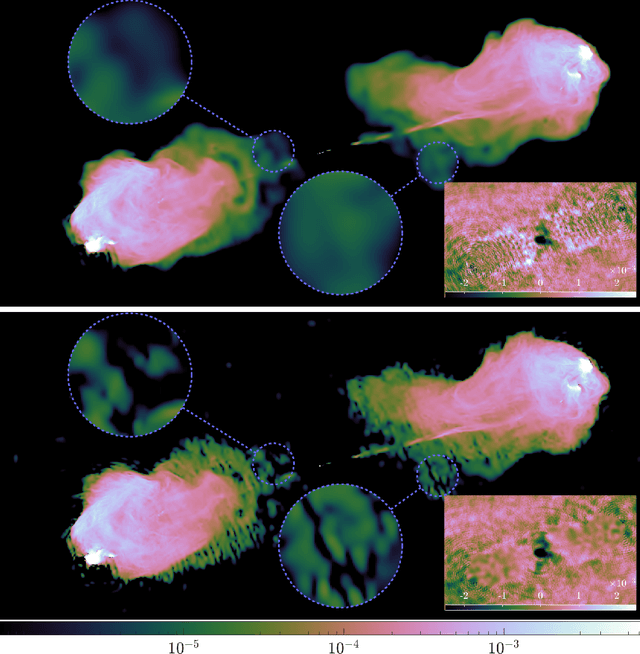

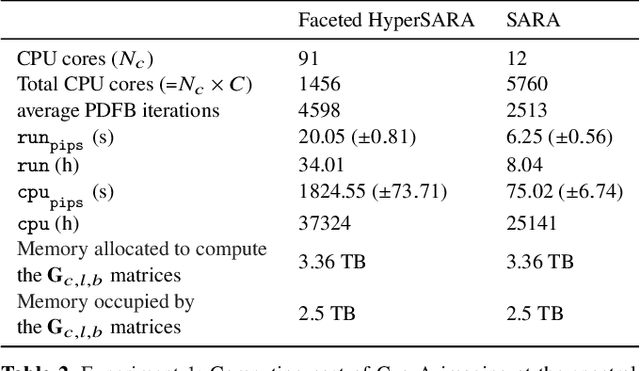

Abstract:In a companion paper, a faceted wideband imaging technique for radio interferometry, dubbed Faceted HyperSARA, has been introduced and validated on synthetic data. Building on the recent HyperSARA approach, Faceted HyperSARA leverages the splitting functionality inherent to the underlying primal-dual forward-backward algorithm to decompose the image reconstruction over multiple spatio-spectral facets. The approach allows complex regularization to be injected into the imaging process while providing additional parallelization flexibility compared to HyperSARA. The present paper introduces new algorithm functionalities to address real datasets, implemented as part of a fully fledged MATLAB imaging library made available on GitHub. A large-scale proof of concept is proposed to validate Faceted HyperSARA in a new data and parameter scale regime, compared to the state-of-the-art. The reconstruction of a 15 GB wideband image of Cyg A from 7.4 GB of VLA data is considered, utilizing 1440 CPU cores on an HPC system for about 9 hours. The conducted experiments illustrate the reconstruction performance of the proposed approach on real data, exploiting new functionalities to set both an accurate model of the measurement operator accounting for known direction-dependent effects (DDEs) and an effective noise level accounting for imperfect calibration. They also demonstrate that, when combined with a further dimensionality reduction functionality, Faceted HyperSARA enables the recovery of a 3.6 GB image of Cyg A from the same data using only 91 CPU cores for 39 hours. In this setting, the proposed approach is shown to provide a superior reconstruction quality compared to the state-of-the-art wideband CLEAN-based algorithm of the WSClean software.

Image reconstruction algorithms in radio interferometry: from handcrafted to learned denoisers

Feb 25, 2022

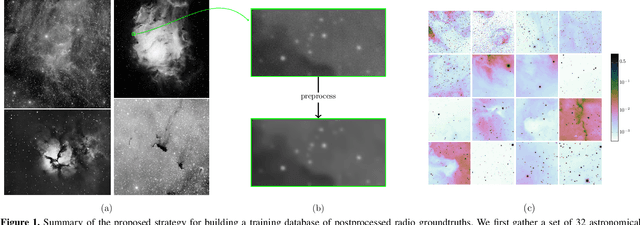

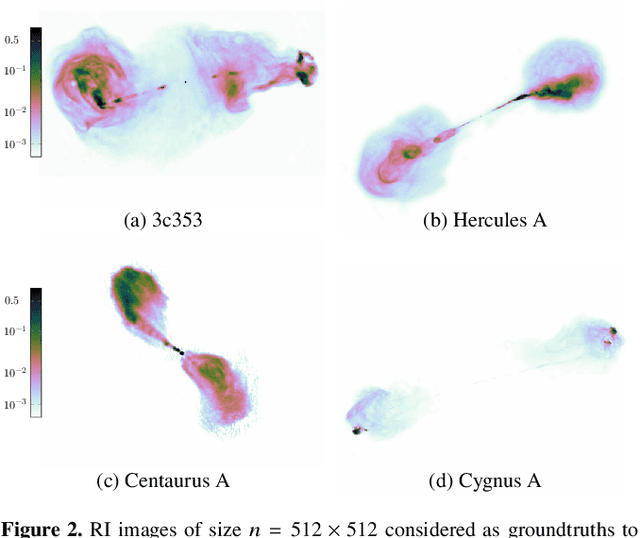

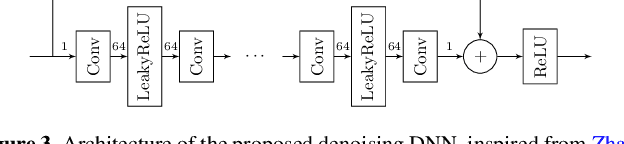

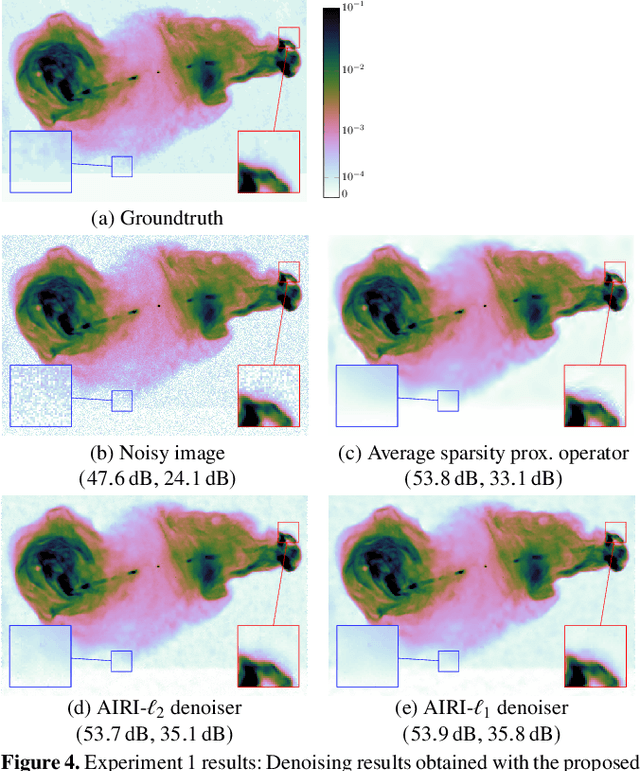

Abstract:We introduce a new class of iterative image reconstruction algorithms for radio interferometry, at the interface of convex optimization and deep learning, inspired by plug-and-play methods. The approach consists in learning a prior image model by training a deep neural network (DNN) as a denoiser, and substituting it for the handcrafted proximal regularization operator of an optimization algorithm. The proposed AIRI ("AI for Regularization in Radio-Interferometric Imaging") framework, for imaging complex intensity structure with diffuse and faint emission, inherits the robustness and interpretability of optimization, and the learning power and speed of networks. Our approach relies on three steps. Firstly, we design a low dynamic range database for supervised training from optical intensity images. Secondly, we train a DNN denoiser with basic architecture ensuring positivity of the output image, at a noise level inferred from the signal-to-noise ratio of the data. We use either $\ell_2$ or $\ell_1$ training losses, enhanced with a nonexpansiveness term ensuring algorithm convergence, and including on-the-fly database dynamic range enhancement via exponentiation. Thirdly, we plug the learned denoiser into the forward-backward optimization algorithm, resulting in a simple iterative structure alternating a denoising step with a gradient-descent data-fidelity step. The resulting AIRI-$\ell_2$ and AIRI-$\ell_1$ were validated against CLEAN and optimization algorithms of the SARA family, propelled by the "average sparsity" proximal regularization operator. Simulation results show that these first AIRI incarnations are competitive in imaging quality with SARA and its unconstrained forward-backward-based version uSARA, while providing significant acceleration. CLEAN remains faster but offers lower reconstruction quality.
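The third step in this abstract, plugging a denoiser into the forward-backward algorithm so that each iteration alternates a gradient-descent data-fidelity step with a denoising step, can be sketched as follows. The assumptions are stated plainly: the denoiser here is a hand-written shrink-and-clip operator that enforces positivity, used as a stand-in for the learned DNN denoiser; the measurement operator is a small random matrix `A`; and the function names are hypothetical.

```python
import numpy as np

def pnp_forward_backward(y, A, denoiser, step, n_iter):
    """Alternate a gradient step on 0.5 * ||A x - y||^2 with a denoising step."""
    x = np.zeros(A.shape[1])
    for _ in range(n_iter):
        grad = A.T @ (A @ x - y)         # gradient of the data-fidelity term
        x = denoiser(x - step * grad)    # denoiser replaces the proximal operator
    return x

def toy_denoiser(x, threshold=1e-3):
    """Stand-in for a learned denoiser: shrink towards zero and keep the output non-negative."""
    return np.maximum(x - threshold, 0.0)

# Toy problem (hypothetical): sparse non-negative signal, noisy linear measurements.
rng = np.random.default_rng(3)
A = rng.standard_normal((120, 60)) / np.sqrt(120)
x_true = np.zeros(60)
x_true[rng.choice(60, size=6, replace=False)] = rng.random(6) + 0.5
y = A @ x_true + 0.01 * rng.standard_normal(120)

step = 1.0 / np.linalg.norm(A, 2) ** 2   # step size 1/L, L the Lipschitz constant of the gradient
x_hat = pnp_forward_backward(y, A, toy_denoiser, step, n_iter=200)
print("relative data residual:", np.linalg.norm(A @ x_hat - y) / np.linalg.norm(y))
```

The stand-in denoiser is nonexpansive, which is the property the abstract's training loss term is designed to encourage in the learned denoiser so that the iteration converges.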
