Abhiram Gnanasambandam

Quanta Video Restoration

Oct 19, 2024

Abstract:The proliferation of single-photon image sensors has opened the door to a plethora of high-speed and low-light imaging applications. However, data collected by these sensors are often 1-bit or few-bit, and corrupted by noise and strong motion. Conventional video restoration methods are not designed to handle this situation, while specialized quanta burst algorithms have limited performance when the number of input frames is low. In this paper, we introduce Quanta Video Restoration (QUIVER), an end-to-end trainable network built on the core ideas of classical quanta restoration methods, i.e., pre-filtering, flow estimation, fusion, and refinement. We also collect and publish I2-2000FPS, a high-speed video dataset with the highest temporal resolution of 2000 frames-per-second, for training and testing. On simulated and real data, QUIVER outperforms existing quanta restoration methods by a significant margin. Code and dataset available at https://github.com/chennuriprateek/Quanta_Video_Restoration-QUIVER-

The Secrets of Non-Blind Poisson Deconvolution

Sep 06, 2023

Abstract:Non-blind image deconvolution has been studied for several decades but most of the existing work focuses on blur instead of noise. In photon-limited conditions, however, the excessive amount of shot noise makes traditional deconvolution algorithms fail. In searching for reasons why these methods fail, we present a systematic analysis of the Poisson non-blind deconvolution algorithms reported in the literature, covering both classical and deep learning methods. We compile a list of five "secrets" highlighting the do's and don'ts when designing algorithms. Based on this analysis, we build a proof-of-concept method by combining the five secrets. We find that the new method performs on par with some of the latest methods while outperforming some older ones.

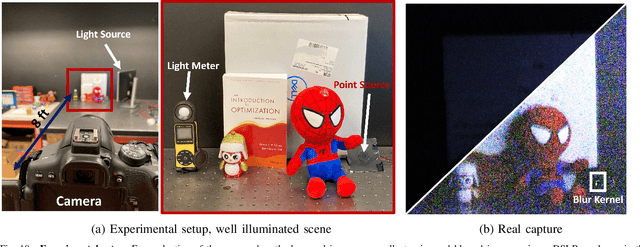

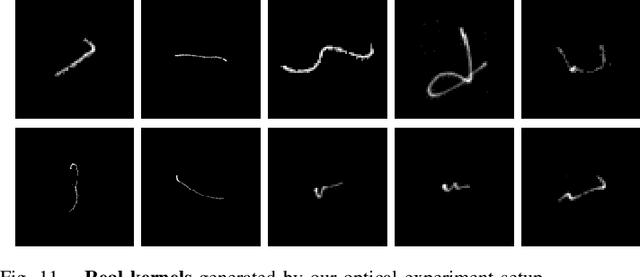

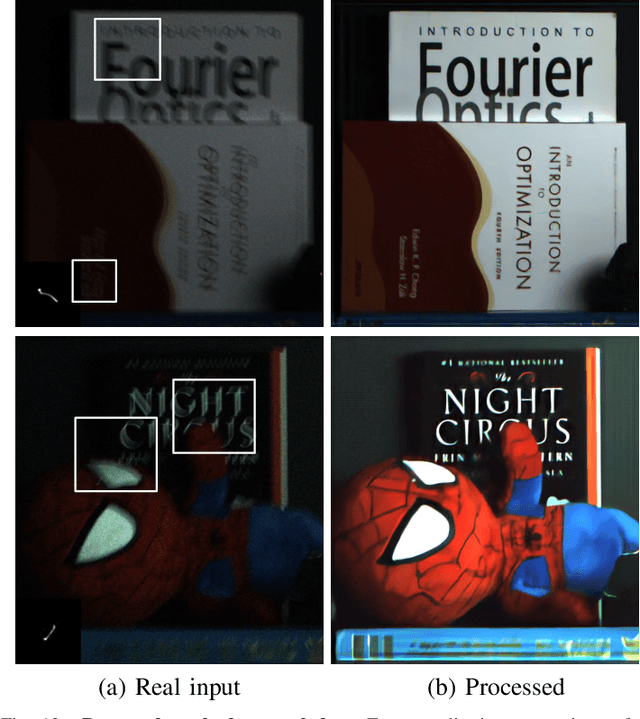

Photon-Limited Blind Deconvolution using Unsupervised Iterative Kernel Estimation

Aug 02, 2022

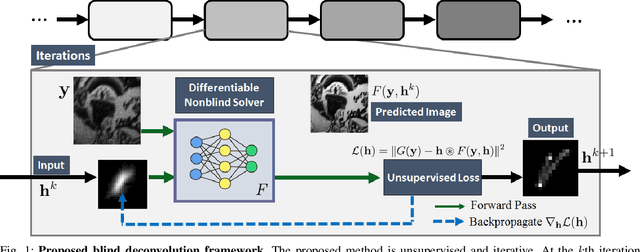

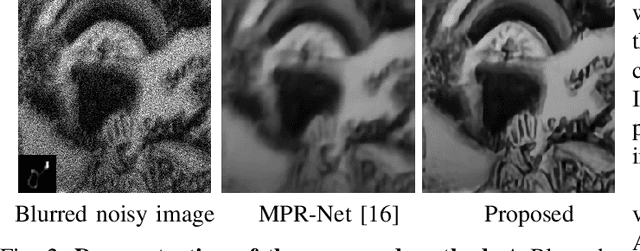

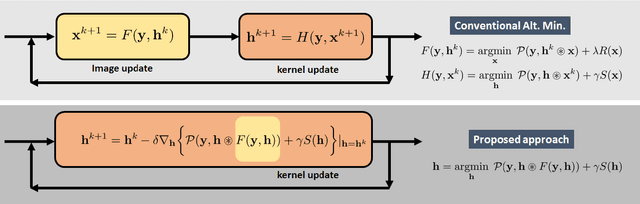

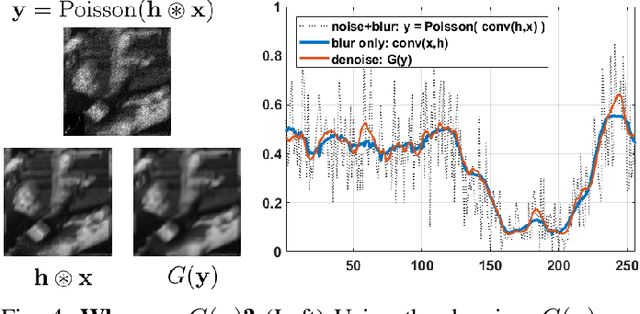

Abstract:Blind deconvolution in low light is one of the more challenging problems in image restoration because of the photon shot noise. However, existing algorithms, both classical and deep-learning based, are not designed for this condition. When the shot noise is strong, conventional deconvolution methods fail because (1) the presence of noise makes the estimation of the blur kernel difficult; (2) generic deep-restoration models rarely model the forward process explicitly; (3) there are currently no iterative strategies to incorporate a non-blind solver in a kernel estimation stage. This paper addresses these challenges by presenting an unsupervised blind deconvolution method. At the core of this method is a reformulation of the general blind deconvolution framework from the conventional image-kernel alternating minimization to a purely kernel-based minimization. This kernel-based minimization leads to a new iterative scheme that backpropagates an unsupervised loss through a pre-trained non-blind solver to update the blur kernel. Experimental results show that the proposed framework achieves better results than state-of-the-art blind deconvolution algorithms in low-light conditions.

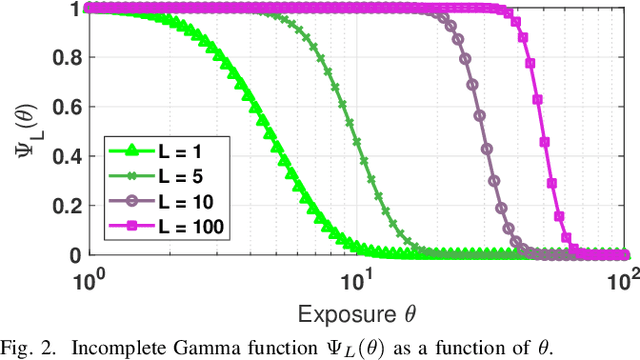

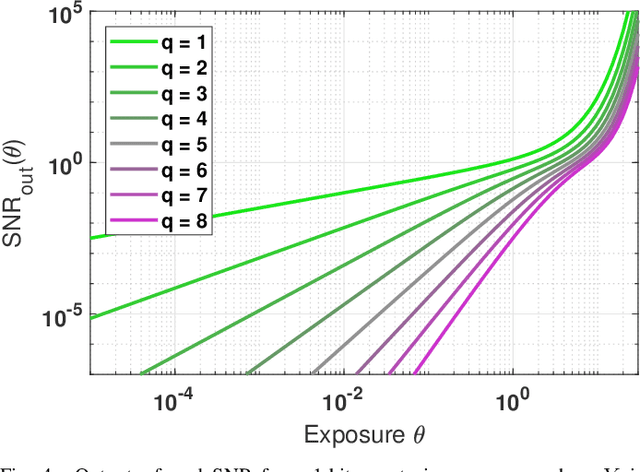

Exposure-Referred Signal-to-Noise Ratio for Digital Image Sensors

Dec 10, 2021

Abstract:The signal-to-noise ratio (SNR) of a digital image sensor is typically defined as the ratio of the mean to the standard deviation of the sensor's output, thus known as the output-referred SNR. For sensors with a large full-well capacity, the output-referred SNR demonstrates the well-known linear response in the log-log scale. However, as the input exposure approaches the full-well capacity, the vanishing randomness of the saturated pixel will cause this output-referred SNR to artificially go to infinity. Since modern digital image sensors have a small pitch and hence a small full-well capacity, the shortcomings of the output-referred SNR motivated the development of a theoretical concept known as the exposure-referred SNR, first reported in some sensors and computer vision papers in the 1990s and more since 2010. Some intuitions of the exposure-referred SNR have been discussed in the past, but little is known about how the exposure-referred SNR can be rigorously derived. Recognizing the significance of such an analysis to all present and future small pixels, this paper presents a theoretical analysis to justify the definition and answer four questions: (1) What is the correct definition of SNR? (2) How is the output-referred SNR related to the exposure-referred SNR? (3) For simple noise models, the SNRs can be analytically derived, but for complex noise models, how can the SNR be computed numerically? (4) What utilities can the exposure-referred SNR bring to solving imaging tasks? New theoretical results are shown to confirm the validity of the exposure-referred SNR for image sensors of any bit-depth and full-well capacity.
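
As a rough illustration of the saturation effect described in this abstract, the sketch below compares the two SNR definitions for a deliberately simple sensor model. It assumes a clipped Poisson pixel with no read noise, Y = min(K, L) with K ~ Poisson(θ) and full-well capacity L, and it takes the exposure-referred SNR to be θ · (dμ/dθ) / σ, a form commonly used in the literature. The model, the function names, and the parameter values are illustrative assumptions and are not taken from the paper.

```python
import math


def poisson_pmf(k, theta):
    """P(K = k) for K ~ Poisson(theta), theta > 0, evaluated in log space."""
    return math.exp(k * math.log(theta) - theta - math.lgamma(k + 1))


def snr_pair(theta, full_well):
    """Return (output-referred SNR, exposure-referred SNR) for Y = min(K, full_well).

    Assumed toy model: Poisson arrivals, hard clipping, no read noise.
    """
    pmf = [poisson_pmf(k, theta) for k in range(full_well)]
    # Work with the deficit D = full_well - Y to avoid cancellation near saturation.
    deficit_mean = sum((full_well - k) * p for k, p in enumerate(pmf))
    deficit_second = sum((full_well - k) ** 2 * p for k, p in enumerate(pmf))
    mean = full_well - deficit_mean
    std = math.sqrt(max(deficit_second - deficit_mean ** 2, 0.0))
    if std == 0.0:
        # Fully saturated in floating point: the output no longer fluctuates.
        return math.inf, 0.0
    # For this model, d(mean)/d(theta) = P(K <= full_well - 1).
    slope = sum(pmf)
    return mean / std, theta * slope / std


# Exposures below, near, and above an assumed full-well capacity of 20.
for theta in (1.0, 10.0, 40.0):
    snr_out, snr_exp = snr_pair(theta, full_well=20)
    print(f"theta={theta:5.1f}  output-referred={snr_out:10.3f}  exposure-referred={snr_exp:8.3f}")
```

In this toy model the output-referred value keeps growing once the exposure exceeds the full-well capacity, because the clipped output barely fluctuates, whereas the exposure-referred value drops, because the output no longer responds to changes in exposure.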

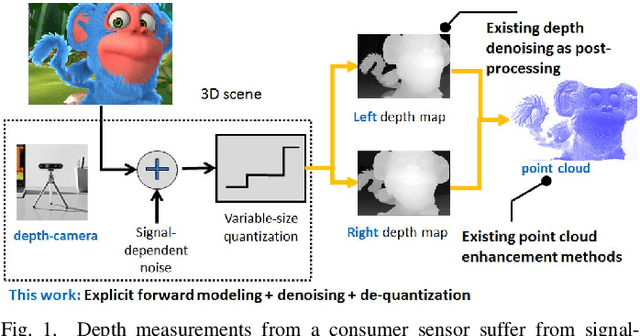

Graph-Based Depth Denoising & Dequantization for Point Cloud Enhancement

Nov 09, 2021

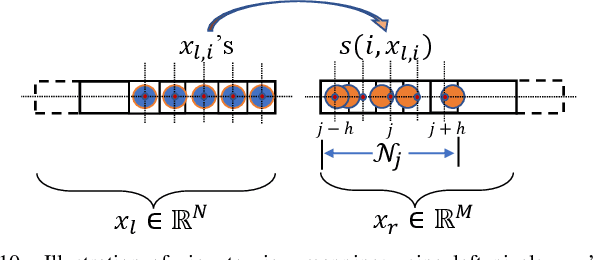

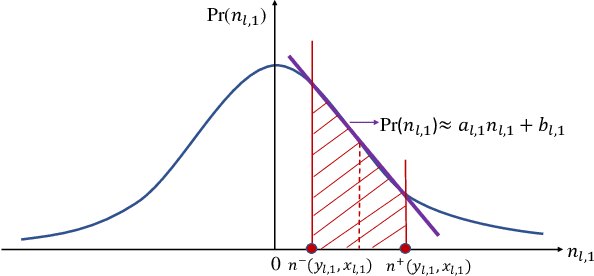

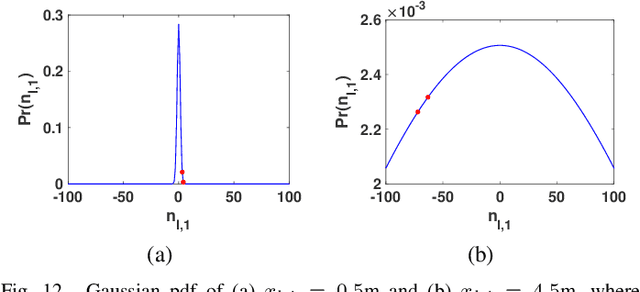

Abstract:A 3D point cloud is typically constructed from depth measurements acquired by sensors at one or more viewpoints. The measurements suffer from both quantization and noise corruption. To improve quality, previous works denoise a point cloud *a posteriori* after projecting the imperfect depth data onto 3D space. Instead, we enhance depth measurements directly on the sensed images *a priori*, before synthesizing a 3D point cloud. By enhancing near the physical sensing process, we tailor our optimization to our depth formation model before subsequent processing steps that obscure measurement errors. Specifically, we model depth formation as a combined process of signal-dependent noise addition and non-uniform log-based quantization. The designed model is validated (with parameters fitted) using collected empirical data from an actual depth sensor. To enhance each pixel row in a depth image, we first encode intra-view similarities between available row pixels as edge weights via feature graph learning. We next establish inter-view similarities with another rectified depth image via viewpoint mapping and sparse linear interpolation. This leads to a maximum a posteriori (MAP) graph filtering objective that is convex and differentiable. We optimize the objective efficiently using accelerated gradient descent (AGD), where the optimal step size is approximated via the Gershgorin circle theorem (GCT). Experiments show that our method significantly outperforms recent point cloud denoising schemes and state-of-the-art image denoising schemes, in two established point cloud quality metrics.
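
The idea of choosing a step size from the Gershgorin circle theorem can be sketched on a much simpler problem than the one in the paper. The example below assumes a generic graph-smoothing objective, f(x) = 0.5·||x − y||² + 0.5·μ·xᵀLx with L the combinatorial graph Laplacian, and uses plain (not accelerated) gradient descent. The objective, the four-node path graph, and all function names are assumptions made for illustration only.

```python
def build_hessian(weights, mu):
    """Hessian I + mu * L of f(x) = 0.5*||x - y||^2 + 0.5*mu*x^T L x, with L = D - W."""
    n = len(weights)
    hessian = [[0.0] * n for _ in range(n)]
    for i in range(n):
        degree = sum(weights[i][j] for j in range(n) if j != i)
        for j in range(n):
            if i == j:
                hessian[i][j] = 1.0 + mu * degree
            else:
                hessian[i][j] = -mu * weights[i][j]
    return hessian


def gershgorin_upper_bound(matrix):
    """Largest right endpoint of the Gershgorin discs of a real symmetric matrix.

    Every eigenvalue lies in some disc, so this bounds the largest eigenvalue.
    """
    n = len(matrix)
    return max(
        matrix[i][i] + sum(abs(matrix[i][j]) for j in range(n) if j != i)
        for i in range(n)
    )


def graph_denoise(y, weights, mu=1.0, iters=200):
    """Plain gradient descent with step size 1 / (Gershgorin bound)."""
    n = len(y)
    hessian = build_hessian(weights, mu)
    step = 1.0 / gershgorin_upper_bound(hessian)
    x = list(y)
    for _ in range(iters):
        # Gradient of the quadratic objective: (I + mu * L) x - y.
        grad = [sum(hessian[i][j] * x[j] for j in range(n)) - y[i] for i in range(n)]
        x = [x[i] - step * grad[i] for i in range(n)]
    return x


# Hypothetical 4-node path graph with unit edge weights and a noisy signal.
weights = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
noisy = [1.0, 3.0, 2.0, 4.0]
smoothed = graph_denoise(noisy, weights, mu=1.0)
print(smoothed)
```

The bound costs only one pass over the matrix rows, so no eigendecomposition is needed, and because it never underestimates the largest eigenvalue of the Hessian, the resulting step size keeps the iteration stable for this quadratic objective.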

Photon Limited Non-Blind Deblurring Using Algorithm Unrolling

Oct 29, 2021

Abstract:Image deblurring in photon-limited conditions is ubiquitous in a variety of low-light applications such as photography, microscopy and astronomy. However, the presence of photon shot noise due to low illumination and/or short exposure makes the deblurring task substantially more challenging than conventional deblurring problems. In this paper we present an algorithm unrolling approach for the photon-limited deblurring problem by unrolling a Plug-and-Play algorithm for a fixed number of iterations. By introducing a three-operator splitting formation of the Plug-and-Play framework, we obtain a series of differentiable steps which allows the fixed iteration unrolled network to be trained end-to-end. The proposed algorithm demonstrates significantly better image recovery compared to existing state-of-the-art deblurring approaches. We also present a new photon-limited deblurring dataset for evaluating the performance of algorithms.

Optical Adversarial Attack

Aug 16, 2021

Abstract:We introduce OPtical ADversarial attack (OPAD). OPAD is an adversarial attack in the physical space aiming to fool image classifiers without physically touching the objects (e.g., moving or painting the objects). The principle of OPAD is to use structured illumination to alter the appearance of the target objects. The system consists of a low-cost projector, a camera, and a computer. The challenge of the problem is the non-linearity of the radiometric response of the projector and the spatially varying spectral response of the scene. Attacks generated in a conventional approach do not work in this setting unless they are calibrated to compensate for such a projector-camera model. The proposed solution incorporates the projector-camera model into the adversarial attack optimization, where a new attack formulation is derived. Experimental results prove the validity of the solution. It is demonstrated that OPAD can optically attack a real 3D object in the presence of background lighting for white-box, black-box, targeted, and untargeted attacks. Theoretical analysis is presented to quantify the fundamental performance limit of the system.

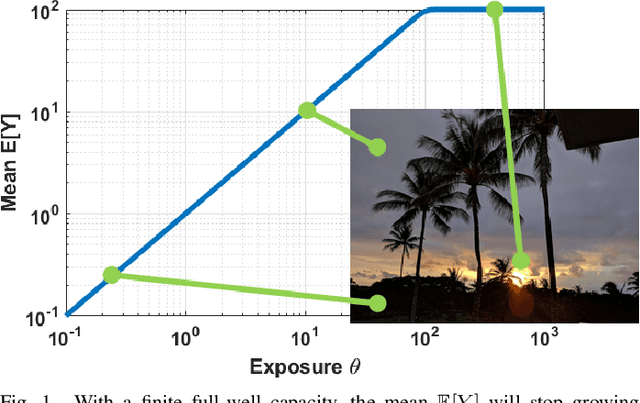

HDR Imaging with Quanta Image Sensors: Theoretical Limits and Optimal Reconstruction

Nov 06, 2020

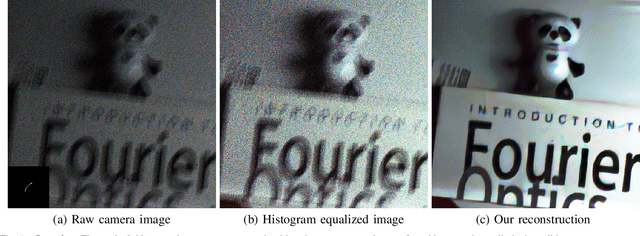

Abstract:High dynamic range (HDR) imaging is one of the biggest achievements in modern photography. Traditional solutions to HDR imaging are designed for and applied to CMOS image sensors (CIS). However, the mainstream one-micron CIS cameras today generally have a high read noise and low frame-rate. These, in turn, limit the acquisition speed and quality, making the cameras slow in the HDR mode. In this paper, we propose a new computational photography technique for HDR imaging. Recognizing the limitations of CIS, we use the Quanta Image Sensor (QIS) to trade spatial-temporal resolution for bit-depth. QIS is a single-photon image sensor that has comparable pixel pitch to CIS but substantially lower dark current and read noise. We provide a complete theoretical characterization of the sensor in the context of HDR imaging, by proving the fundamental limits in the dynamic range that QIS can offer and the trade-offs with noise and speed. In addition, we derive an optimal reconstruction algorithm for single-bit and multi-bit QIS. Our algorithm is theoretically optimal for *all* linear reconstruction schemes based on exposure bracketing. Experimental results confirm the validity of the theory and algorithm, based on synthetic and real QIS data.

Dynamic Low-light Imaging with Quanta Image Sensors

Jul 16, 2020

Abstract:Imaging in low light is difficult because the number of photons arriving at the sensor is low. Imaging dynamic scenes in low-light environments is even more difficult because as the scene moves, pixels in adjacent frames need to be aligned before they can be denoised. Conventional CMOS image sensors (CIS) are at a particular disadvantage in dynamic low-light settings because the exposure cannot be too short lest the read noise overwhelm the signal. We propose a solution using Quanta Image Sensors (QIS) and present a new image reconstruction algorithm. QIS are single-photon image sensors with photon counting capabilities. Studies over the past decade have confirmed the effectiveness of QIS for low-light imaging but reconstruction algorithms for dynamic scenes in low light remain an open problem. We fill the gap by proposing a student-teacher training protocol that transfers knowledge from a motion teacher and a denoising teacher to a student network. We show that dynamic scenes can be reconstructed from a burst of frames at a photon level of 1 photon per pixel per frame. Experimental results confirm the advantages of the proposed method compared to existing methods.
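
To give a feel for what "1 photon per pixel per frame" means for a single-photon sensor, the sketch below simulates 1-bit frames for one static pixel and recovers the exposure with the classical maximum-likelihood estimate. It assumes Poisson photon arrivals, a threshold of one photon, no read noise, and no motion, so it is a textbook baseline rather than the student-teacher network proposed in the paper. All names and parameter values are hypothetical.

```python
import math
import random


def simulate_one_bit_frames(theta, num_frames, rng):
    """Simulate 1-bit measurements of one static pixel.

    Assumed model: photon count K ~ Poisson(theta) per frame and the pixel
    reports 1 if K >= 1, so P(bit = 1) = 1 - exp(-theta).
    """
    p_one = 1.0 - math.exp(-theta)
    return [1 if rng.random() < p_one else 0 for _ in range(num_frames)]


def estimate_exposure(bits):
    """Maximum-likelihood exposure estimate: theta_hat = -log(1 - mean(bits))."""
    num_frames = len(bits)
    # Clamp so the estimate stays finite when every frame reports a photon.
    ones = min(sum(bits), num_frames - 0.5)
    return -math.log(1.0 - ones / num_frames)


rng = random.Random(0)  # fixed seed so the run is repeatable
bits = simulate_one_bit_frames(theta=1.0, num_frames=20000, rng=rng)
print("fraction of ones:", sum(bits) / len(bits))
print("estimated exposure:", estimate_exposure(bits))
```

The estimate only becomes reliable when many frames of the same scene point are averaged, which is the reason motion is a problem: once the scene moves, the frames must be aligned before they can be combined.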

Image Classification in the Dark using Quanta Image Sensors

Jun 23, 2020

Abstract:State-of-the-art image classifiers are trained and tested using well-illuminated images. These images are typically captured by CMOS image sensors with at least tens of photons per pixel. However, in dark environments when the photon flux is low, image classification becomes difficult because the measured signal is suppressed by noise. In this paper, we present a new low-light image classification solution using Quanta Image Sensors (QIS). QIS are a new type of image sensor that possesses photon counting ability without compromising on pixel size and spatial resolution. Numerous studies over the past decade have demonstrated the feasibility of QIS for low-light imaging, but their usage for image classification has not been studied. This paper fills the gap by presenting a student-teacher learning scheme which allows us to classify the noisy QIS raw data. We show that with student-teacher learning, we are able to achieve image classification at a photon level of one photon per pixel or lower. Experimental results verify the effectiveness of the proposed method compared to existing solutions.
