Abhineet Singh

Deep MDP: A Modular Framework for Multi-Object Tracking

Oct 22, 2023

Abstract:This paper presents a fast and modular framework for Multi-Object Tracking (MOT) based on the Markov decision process (MDP) tracking-by-detection paradigm. It is designed to allow its various functional components to be replaced by custom-designed alternatives to suit a given application. An interactive GUI with integrated object detection, segmentation, MOT and semi-automated labeling is also provided to make it easier to get started with this framework. Though not breaking new ground in terms of performance, Deep MDP has a large code-base that should be useful for the community to try out new ideas or simply to have an easy-to-use and easy-to-adapt system for any MOT application. Deep MDP is available at https://github.com/abhineet123/deep_mdp.

Towards Early Prediction of Human iPSC Reprogramming Success

May 23, 2023

Abstract:This paper presents advancements in automated early-stage prediction of the success of reprogramming human induced pluripotent stem cells (iPSCs) as a potential source for regenerative cell therapies. The minuscule success rate of iPSC-reprogramming of around $0.01\%$ to $0.1\%$ makes it labor-intensive, time-consuming, and exorbitantly expensive to generate a stable iPSC line, since that requires culturing millions of cells and intense biological scrutiny of multiple clones to identify a single optimal clone. The ability to reliably predict which cells are likely to establish as an optimal iPSC line at an early stage of pluripotency would therefore be ground-breaking in rendering this a practical and cost-effective approach to personalized medicine. Temporal information about changes in a cell's appearance is crucial for predicting its future growth outcomes. In order to generate this data, we first performed continuous time-lapse imaging of iPSCs in culture using an ultra-high resolution microscope. We then annotated the locations and identities of cells in late-stage images where reliable manual identification is possible. Next, we propagated these labels backwards in time using a semi-automated tracking system to obtain labels for early stages of growth. Finally, we used this data to train deep neural networks to perform automatic cell segmentation and classification. Our code and data are available at https://github.com/abhineet123/ipsc_prediction.
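The backward label propagation step can be illustrated with a minimal sketch. It assumes, hypothetically, that the tracker yields for each pair of consecutive frames a mapping from a cell ID in the later frame to its matched cell ID in the earlier frame. The data layout and the name `propagate_labels_backward` are illustrative and are not taken from the released code.

```python
def propagate_labels_backward(final_labels, backward_links):
    """Carry late-stage labels to earlier frames.

    final_labels: dict cell_id -> label for the last frame.
    backward_links: list where backward_links[t] maps a cell id in
        frame t + 1 to its matched cell id in frame t
        (hypothetical tracker output).
    Returns a list of dicts, one per frame, earliest frame first.
    """
    labels_per_frame = [dict(final_labels)]
    current = final_labels
    # Walk from the last frame towards the first one.
    for links in reversed(backward_links):
        previous = {}
        for cell_id, label in current.items():
            parent = links.get(cell_id)
            if parent is not None:  # cells without a match are dropped
                # If several cells share a parent, keep the first label seen.
                previous.setdefault(parent, label)
        labels_per_frame.append(previous)
        current = previous
    labels_per_frame.reverse()
    return labels_per_frame
```

Cells that the tracker cannot match in an earlier frame simply receive no label there, which is one plausible policy; a real pipeline might instead flag them for manual review.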

To filter prune, or to layer prune, that is the question

Jul 11, 2020

Abstract:Recent advances in pruning of neural networks have made it possible to remove a large number of filters or weights without any perceptible drop in accuracy. The number of parameters and that of FLOPs are usually the reported metrics to measure the quality of the pruned models. However, the gain in speed for these pruned models is often overlooked in the literature due to the complex nature of latency measurements. In this paper, we show the limitation of filter pruning methods in terms of latency reduction and propose the LayerPrune framework. LayerPrune presents a set of layer pruning methods based on different criteria that achieve higher latency reduction than filter pruning methods at similar accuracy. The advantage of layer pruning over filter pruning in terms of latency reduction is a result of the fact that the former is not constrained by the original model's depth and thus allows for a larger range of latency reduction. For each filter pruning method we examined, we use the same filter importance criterion to calculate a per-layer importance score in one shot. We then prune the least important layers and fine-tune the shallower model, which obtains comparable or better accuracy than its filter-based pruning counterpart. This one-shot process allows layers to be removed from single path networks like VGG before fine-tuning, unlike iterative filter pruning, where a minimum number of filters per layer is required to allow for data flow, which constrains the search space. To the best of our knowledge, we are the first to examine the effect of pruning methods on the latency metric instead of FLOPs for multiple networks, datasets and hardware targets. LayerPrune also outperforms handcrafted architectures such as Shufflenet, MobileNet, MNASNet and ResNet18 by 7.3%, 4.6%, 2.8% and 0.5% respectively under a similar latency budget on the ImageNet dataset.
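The one-shot layer ranking can be sketched as follows. This is only an illustration under stated assumptions: the filter criterion (L1 norm) and the aggregation (mean over a layer's filters) are chosen for simplicity, and the names `layer_importance` and `select_layers_to_prune` are hypothetical rather than part of LayerPrune.

```python
def layer_importance(filters):
    """Mean L1 norm of a layer's filters.

    filters: non-empty list of filters, each a flat list of weights.
    The L1 criterion and the mean aggregation are assumptions.
    """
    return sum(sum(abs(w) for w in f) for f in filters) / len(filters)


def select_layers_to_prune(layers, num_to_prune):
    """Return the names of the `num_to_prune` least important layers.

    layers: dict layer_name -> list of filters.
    """
    scores = {name: layer_importance(f) for name, f in layers.items()}
    ranked = sorted(scores, key=scores.get)  # ascending importance
    return ranked[:num_to_prune]
```

Because the scores are computed once on the unpruned network, the selected layers can be removed together before a single round of fine-tuning.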

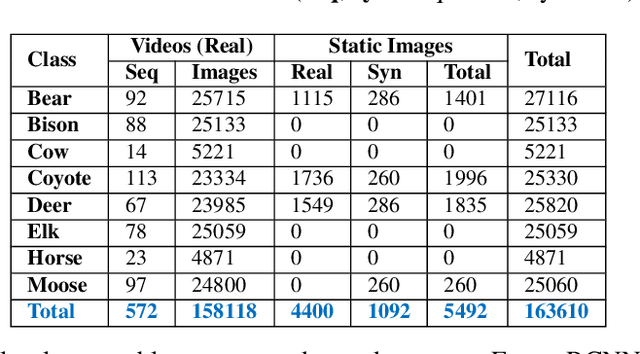

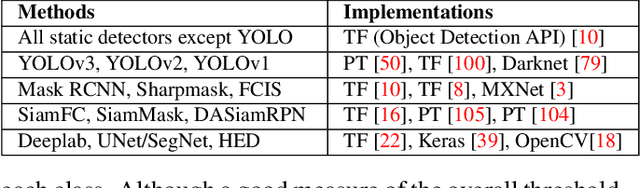

Animal Detection in Man-made Environments

Oct 24, 2019

Abstract:Automatic detection of animals that have strayed into human inhabited areas has important security and road safety applications. This paper attempts to solve this problem using deep learning techniques from a variety of computer vision fields including object detection, tracking, segmentation and edge detection. Several interesting insights are elicited into transfer learning while adapting models trained on benchmark datasets for real world deployment. Empirical evidence is presented to demonstrate the inability of detectors to generalize from training images of animals in their natural habitats to deployment scenarios of man-made environments. A solution is also proposed using semi-automated synthetic data generation for domain specific training. Code and data used in the experiments are made available to facilitate further work in this domain.

River Ice Segmentation with Deep Learning

Jan 14, 2019

Abstract:This paper deals with the problem of computing surface ice concentration for two different types of ice from river ice images. It presents the results of attempting to solve this problem using several state of the art semantic segmentation methods based on deep convolutional neural networks (CNNs). This task presents two main challenges - very limited availability of labeled training data and the great difficulty of visually distinguishing the two types of ice, even for humans, leading to noisy labels. The results are used to analyze the extent to which some of the best deep learning methods currently in existence can handle these challenges.
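As a minimal sketch of how a concentration figure might be derived from a segmentation result, the function below takes the fraction of pixels assigned to each ice class. The class ids (0 for water, 1 and 2 for the two ice types), the whole-image normalization and the name `ice_concentration` are assumptions made for illustration, not the paper's definition.

```python
def ice_concentration(mask, ice_classes=(1, 2)):
    """Fraction of pixels assigned to each ice class.

    mask: 2D list of integer class ids (0 is assumed to be water).
    Returns a dict class_id -> fraction of all pixels in the mask.
    """
    total = sum(len(row) for row in mask)
    if total == 0:
        raise ValueError("mask is empty")
    counts = {c: 0 for c in ice_classes}
    for row in mask:
        for pixel in row:
            if pixel in counts:
                counts[pixel] += 1
    return {c: n / total for c, n in counts.items()}
```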

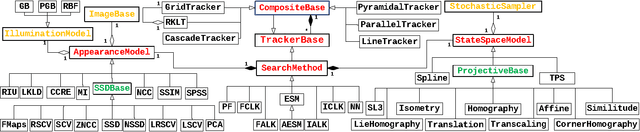

Modular Tracking Framework: A Unified Approach to Registration based Tracking

May 18, 2018

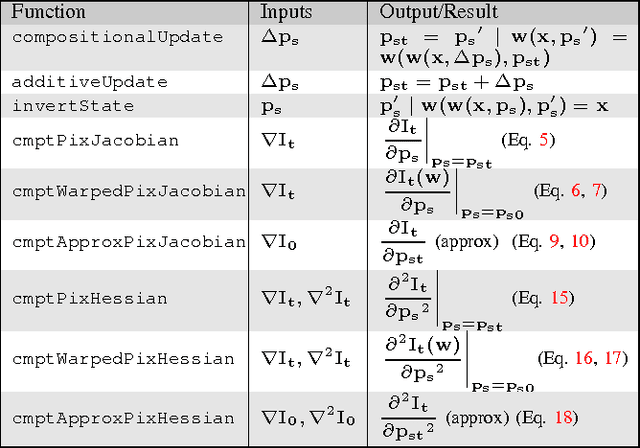

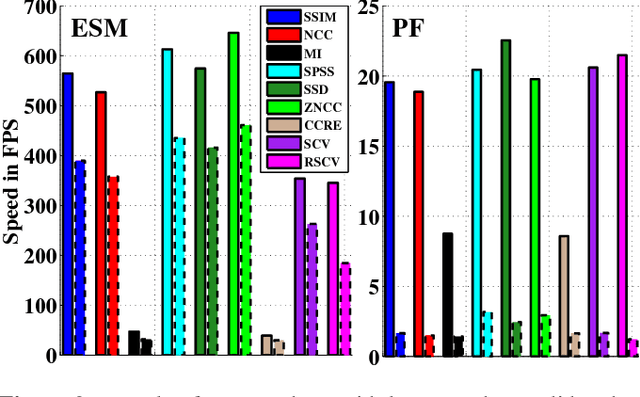

Abstract:This paper presents a modular, extensible and highly efficient open source framework for registration based tracking called Modular Tracking Framework (MTF). Targeted at robotics applications, it is implemented entirely in C++ and designed from the ground up to easily integrate with systems that support any of several major vision and robotics libraries including OpenCV, ROS, ViSP and Eigen. It implements more methods, is faster, and is more precise than other existing systems. Further, the theoretical basis for its design is a new way to conceptualize registration based trackers that decomposes them into three constituent sub modules - Search Method (SM), Appearance Model (AM) and State Space Model (SSM). In the process, we integrate many important advances published after Baker & Matthews' landmark work in 2004. In addition to being a practical solution for fast and high precision tracking, MTF can also serve as a useful research tool by allowing existing and new methods for any of the sub modules to be studied better. When a new method is introduced for one of these, the breakdown can help to experimentally find the combination of methods for the others that is optimum for it. By extensive use of generic programming, MTF makes it easy to plug in a new method for any of the sub modules so that it can not only be tested comprehensively with existing methods but also become immediately available for deployment in any project that uses the framework. With 16 AMs, 11 SMs and 13 SSMs implemented already, MTF provides over 2000 distinct single layer trackers. It also allows two or more of these to be combined together in several ways to create a practically unlimited variety of novel multi layer trackers.
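The tracker count follows from the three-way decomposition: assuming every AM, SM and SSM can be combined freely, the number of single layer trackers is the product of the three counts. The short Python sketch below checks this arithmetic; the function names and the placeholder module names are hypothetical and unrelated to MTF's actual C++ interface.

```python
from itertools import product

# Counts stated in the abstract.
NUM_AMS, NUM_SMS, NUM_SSMS = 16, 11, 13


def count_single_layer_trackers(num_ams, num_sms, num_ssms):
    """Each (AM, SM, SSM) triple is treated as one single layer tracker."""
    return num_ams * num_sms * num_ssms


def enumerate_trackers(ams, sms, ssms):
    """List all (SM, AM, SSM) triples for the given placeholder names."""
    return list(product(sms, ams, ssms))


print(count_single_layer_trackers(NUM_AMS, NUM_SMS, NUM_SSMS))  # 2288
```

The product 16 × 11 × 13 = 2288 is consistent with the "over 2000" figure quoted in the abstract.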

Real-Time Salient Closed Boundary Tracking via Line Segments Perceptual Grouping

Aug 09, 2017

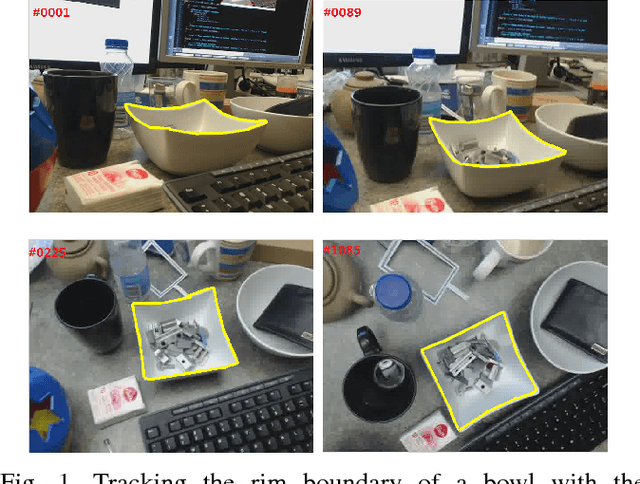

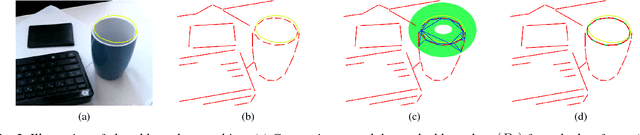

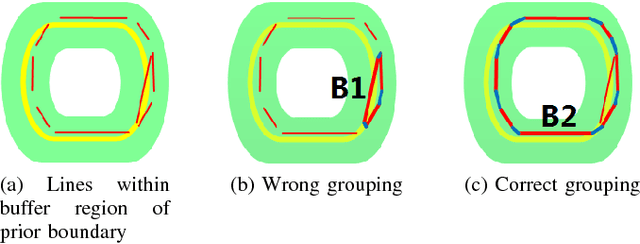

Abstract:This paper presents a novel real-time method for tracking salient closed boundaries from video image sequences. This method operates on a set of straight line segments that are produced by line detection. The tracking scheme is coherently integrated into a perceptual grouping framework in which the visual tracking problem is tackled by identifying a subset of these line segments and connecting them sequentially to form a closed boundary with the largest saliency and a certain similarity to the previous one. Specifically, we define a new tracking criterion which combines a grouping cost and an area similarity constraint. The proposed criterion makes the resulting boundary tracking more robust to local minima. To achieve real-time tracking performance, we use Delaunay Triangulation to build a graph model with the detected line segments and then reduce the tracking problem to finding the optimal cycle in this graph. This is solved by our newly proposed closed boundary candidates searching algorithm called "Bidirectional Shortest Path (BDSP)". The efficiency and robustness of the proposed method are tested on real video sequences as well as during a robot arm pouring experiment.

4-DoF Tracking for Robot Fine Manipulation Tasks

Apr 04, 2017

Abstract:This paper presents two visual trackers from the different paradigms of learning and registration based tracking and evaluates their application in image based visual servoing. They can track object motion with four degrees of freedom (DoF) which, as we will show here, is sufficient for many fine manipulation tasks. One of these trackers is a newly developed learning based tracker that relies on learning discriminative correlation filters while the other is a refinement of a recent 8 DoF RANSAC based tracker adapted with a new appearance model for tracking 4 DoF motion. Both trackers are shown to provide superior performance to several state of the art trackers on an existing dataset for manipulation tasks. Further, a new dataset with challenging sequences for fine manipulation tasks captured from robot mounted eye-in-hand (EIH) cameras is also presented. These sequences have a variety of challenges encountered during real tasks including jittery camera movement, motion blur, drastic scale changes and partial occlusions. Quantitative and qualitative results on these sequences are used to show that these two trackers are robust to failures while providing high precision that makes them suitable for such fine manipulation tasks.

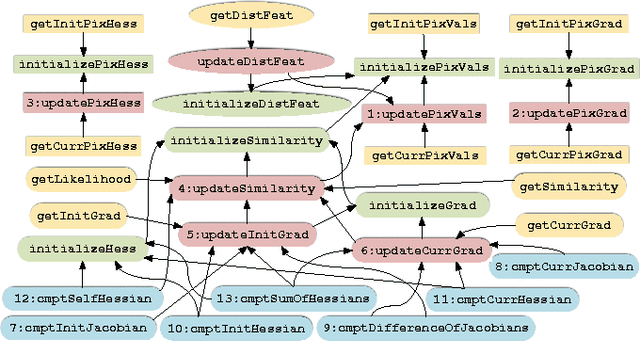

Unifying Registration based Tracking: A Case Study with Structural Similarity

Jan 30, 2017

Abstract:This paper adapts a popular image quality measure called structural similarity for high precision registration based tracking while also introducing a simpler and faster variant of the same. Further, these are evaluated comprehensively against existing measures using a unified approach to study registration based trackers that decomposes them into three constituent sub modules - appearance model, state space model and search method. Several popular trackers in literature are broken down using this method so that their contributions - as of this paper - are shown to be limited to only one or two of these submodules. An open source tracking framework is made available that follows this decomposition closely through extensive use of generic programming. It is used to perform all experiments on four publicly available datasets so the results are easily reproducible. This framework provides a convenient interface to plug in a new method for any sub module and combine it with existing methods for the other two. It can also serve as a fast and flexible solution for practical tracking needs due to its highly efficient implementation.
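For reference, the structural similarity index between two image patches $x$ and $y$, as it is commonly defined in the image quality literature, is given below. How the paper adapts it as an appearance model, and the form of its faster variant, are not stated in the abstract.

```latex
\mathrm{SSIM}(x, y) =
\frac{(2\mu_x \mu_y + C_1)\,(2\sigma_{xy} + C_2)}
     {(\mu_x^2 + \mu_y^2 + C_1)\,(\sigma_x^2 + \sigma_y^2 + C_2)}
```

Here $\mu_x, \mu_y$ are the patch means, $\sigma_x^2, \sigma_y^2$ the variances, $\sigma_{xy}$ the covariance, and $C_1, C_2$ small positive constants that stabilize the division.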

Modular Decomposition and Analysis of Registration based Trackers

Mar 25, 2016

Abstract:This paper presents a new way to study registration based trackers by decomposing them into three constituent sub modules: appearance model, state space model and search method. It is often the case that when a new tracker is introduced in literature, it only contributes to one or two of these sub modules while using existing methods for the rest. Since these are often selected arbitrarily by the authors, they may not be optimal for the new method. In such cases, our breakdown can help to experimentally find the best combination of methods for these sub modules while also providing a framework within which the contributions of the new tracker can be clearly demarcated and thus studied better. We show how existing trackers can be broken down using the suggested methodology and compare the performance of the default configuration chosen by the authors against other possible combinations to demonstrate the new insights that can be gained by such an approach. We also present an open source system that provides a convenient interface to plug in a new method for any sub module and test it against all possible combinations of methods for the other two sub modules while also serving as a fast and efficient solution for practical tracking requirements.
