Micro-Doppler Signature

Papers and Code

Practical Evaluation of Quantum Kernel Methods for Radar Micro-Doppler Classification on Noisy Intermediate-Scale Quantum (NISQ) Hardware

Jan 29, 2026

This paper examines the application of a Quantum Support Vector Machine (QSVM) for radar-based aerial target classification using micro-Doppler signatures. Classical features are extracted and reduced via Principal Component Analysis (PCA) to enable efficient quantum encoding. The reduced feature vectors are embedded into a quantum kernel-induced feature space using a fully entangled ZZFeatureMap and classified using a kernel-based QSVM. Performance is first evaluated on a quantum simulator and subsequently validated on NISQ-era superconducting quantum hardware, specifically the IBM Torino (133-qubit) and IBM Fez (156-qubit) processors. Experimental results demonstrate that the QSVM achieves competitive classification performance relative to classical SVM baselines while operating on substantially reduced feature dimensionality. Hardware experiments reveal the impact of noise, decoherence, and measurement shot count on quantum kernel estimation, and further show improved stability and fidelity on the newer Heron r2 architecture. This study provides a systematic comparison between simulator-based and hardware-based QSVM implementations and highlights both the feasibility and current limitations of deploying quantum kernel methods for practical radar signal classification tasks.
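The dependence of kernel estimation on shot count can be illustrated with a small classical simulation. The sketch below is not the paper's pipeline. It assumes a simplified product-state angle encoding (one RY rotation per feature, no entanglement, so not the ZZFeatureMap), for which the fidelity kernel has the closed form k(x, y) = ∏ cos²((x_i − y_i)/2). It models only sampling noise, not hardware noise or decoherence, and all function names are hypothetical.

```python
import math
import random


def exact_kernel(x, y):
    """Fidelity kernel |<phi(y)|phi(x)>|^2 for an assumed RY angle encoding."""
    k = 1.0
    for xi, yi in zip(x, y):
        k *= math.cos((xi - yi) / 2.0) ** 2
    return k


def estimate_kernel(x, y, shots, rng):
    """Estimate one kernel entry as the fraction of 'all-zeros' outcomes.

    Each shot is modeled as a Bernoulli trial whose success probability
    is the exact kernel value (an idealized, noise-free device).
    """
    p = exact_kernel(x, y)
    hits = sum(1 for _ in range(shots) if rng.random() < p)
    return hits / shots


def standard_error(p, shots):
    """Binomial standard error of a kernel estimate from `shots` samples."""
    return math.sqrt(p * (1.0 - p) / shots)


if __name__ == "__main__":
    rng = random.Random(0)
    x, y = [0.3, 1.1, -0.4], [0.5, 0.2, 0.1]  # hypothetical PCA-reduced features
    p = exact_kernel(x, y)
    for shots in (128, 1024, 8192):
        est = estimate_kernel(x, y, shots, rng)
        se = standard_error(p, shots)
        print(f"shots={shots:5d} exact={p:.4f} estimate={est:.4f} std_err={se:.4f}")
```

Under these assumptions the standard error shrinks as 1/√shots, so halving the error of a kernel entry costs roughly four times as many shots.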

μDopplerTag: CNN-Based Drone Recognition via Cooperative Micro-Doppler Tagging

Jan 12, 2026

The rapid deployment of drones poses significant challenges for airspace management, security, and surveillance. Current detection and classification technologies, including cameras, LiDAR, and conventional radar systems, often struggle to reliably identify and differentiate drones, especially those of similar models, under diverse environmental conditions and at extended ranges. Moreover, low radar cross sections and clutter further complicate accurate drone identification. To address these limitations, we propose a novel drone classification method based on artificial micro-Doppler signatures encoded by resonant electromagnetic stickers attached to drone blades. These tags generate distinctive, configuration-specific radar returns, enabling robust identification. We develop a tailored convolutional neural network (CNN) capable of processing raw radar signals, achieving high classification accuracy. Extensive experiments were conducted both in anechoic chambers with 43 tag configurations and outdoors under realistic flight trajectories and noise conditions. Dimensionality reduction techniques, including Principal Component Analysis (PCA) and Uniform Manifold Approximation and Projection (UMAP), provided insight into code separability and robustness. Our results demonstrate reliable drone classification performance at signal-to-noise ratios as low as 7 dB, indicating the feasibility of long-range detection with advanced surveillance radar systems. Preliminary range estimations indicate potential operational distances of several kilometers, suitable for critical applications such as airport airspace monitoring. The integration of electromagnetic tagging with machine learning enables scalable and efficient drone identification, paving the way for enhanced aerial traffic management and security in increasingly congested airspaces.

MoCap2Radar: A Spatiotemporal Transformer for Synthesizing Micro-Doppler Radar Signatures from Motion Capture

Nov 14, 2025

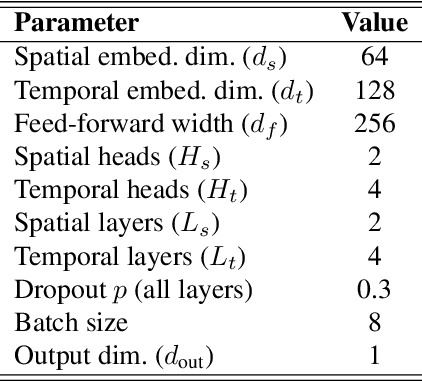

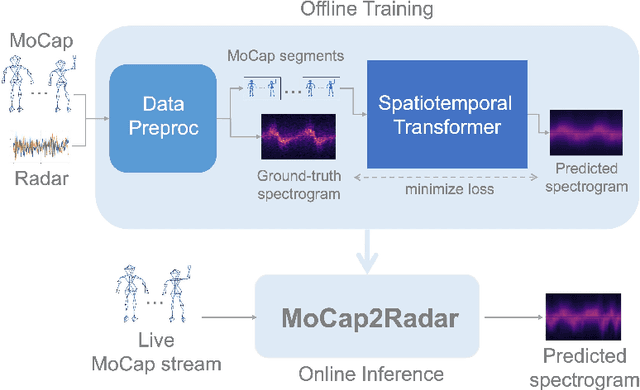

We present a pure machine learning process for synthesizing radar spectrograms from Motion-Capture (MoCap) data. We formulate MoCap-to-spectrogram translation as a windowed sequence-to-sequence task using a transformer-based model that jointly captures spatial relations among MoCap markers and temporal dynamics across frames. Real-world experiments show that the proposed approach produces visually and quantitatively plausible Doppler radar spectrograms and generalizes well. Ablation experiments show that the learned model can both convert multi-part motion into Doppler signatures and capture the spatial relations between different parts of the human body. The result is an interesting example of using transformers for time-series signal processing. It is especially applicable to edge computing and Internet of Things (IoT) radars. It also suggests the ability to augment scarce radar datasets using more abundant MoCap data for training higher-level applications. Finally, it requires far less computation than physics-based methods for generating radar data.
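The windowing step of such a sequence-to-sequence formulation can be sketched as follows. This is a minimal illustration, not the authors' preprocessing: the window length, hop size, and the function name `sliding_windows` are assumptions, and each frame stands in for one time step of MoCap marker coordinates.

```python
def sliding_windows(frames, window, hop):
    """Split a frame sequence into overlapping fixed-length windows.

    frames: list of per-frame feature vectors (e.g. flattened marker positions)
    window: number of frames per window (hypothetical choice)
    hop:    number of frames between consecutive window starts
    """
    if window <= 0 or hop <= 0:
        raise ValueError("window and hop must be positive")
    last_start = len(frames) - window
    # Trailing frames that do not fill a whole window are dropped.
    return [frames[s:s + window] for s in range(0, last_start + 1, hop)]


if __name__ == "__main__":
    # Ten dummy frames, each holding two marker coordinates.
    frames = [[float(t), float(t) * 0.5] for t in range(10)]
    for w in sliding_windows(frames, window=4, hop=2):
        print([f[0] for f in w])
```

Each window would then be paired with the spectrogram columns covering the same time span, so the model maps one input window to one output window.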

The micro-Doppler Attack Against AI-based Human Activity Classification from Wireless Signals

Jul 28, 2025

A subset of Human Activity Classification (HAC) systems are based on AI algorithms that use passively collected wireless signals. This paper presents the micro-Doppler attack targeting HAC from wireless orthogonal frequency division multiplexing (OFDM) signals. The attack is executed by inserting artificial variations in a transmitted OFDM waveform to alter its micro-Doppler signature when it reflects off a human target. We investigate two variants of our scheme that manipulate the waveform at different time scales, resulting in altered receiver spectrograms. HAC accuracy with a deep convolutional neural network (CNN) can be reduced to less than 10%.

Deep Learning-based Human Gesture Channel Modeling for Integrated Sensing and Communication Scenarios

Jul 09, 2025

With the development of Integrated Sensing and Communication (ISAC) for Sixth-Generation (6G) wireless systems, contactless human recognition has emerged as one of the key application scenarios. Since human gesture motion induces subtle and random variations in wireless multipath propagation, how to accurately model human gesture channels has become a crucial issue for the design and validation of ISAC systems. To this end, this paper proposes a deep learning-based human gesture channel modeling framework for ISAC scenarios, in which the human body is decomposed into multiple body parts, and the mapping between human gestures and their corresponding multipath characteristics is learned from real-world measurements. Specifically, a Poisson neural network is employed to predict the number of Multi-Path Components (MPCs) for each human body part, while Conditional Variational Auto-Encoders (C-VAEs) are reused to generate the scattering points, which are further used to reconstruct continuous channel impulse responses and micro-Doppler signatures. Simulation results demonstrate that the proposed method achieves high accuracy and generalization across different gestures and subjects, providing an interpretable approach for data augmentation and the evaluation of gesture-based ISAC systems.

Through-the-Wall Radar Human Activity Recognition WITHOUT Using Neural Networks

Jun 05, 2025

After a few years of research in the field of through-the-wall radar (TWR) human activity recognition (HAR), I found that we seem to be stuck in the mindset of training neural network models on radar image data. The earliest related works in this field, based on template matching, did not require a training process, and I believe they have never died, because these methods possess strong physical interpretability and are closer to the basis of theoretical signal processing research. In this paper, I try to return to the original path by eschewing neural networks for the TWR HAR task, while aiming to match the intelligent recognition achieved by neural network models. In detail, the range-time map and Doppler-time map of TWR are first generated. Then, the initial regions of the human target foreground and noise background on the maps are determined using a corner detection method, and the micro-Doppler signature is segmented using the multiphase active contour model. The micro-Doppler segmentation feature is discretized into a two-dimensional point cloud. Finally, the topological similarity between the resulting point cloud and the point clouds of the template data is calculated using the Mapper algorithm to obtain the recognition results. The effectiveness of the proposed method is demonstrated by numerically simulated and measured experiments. The open-source code of this work is released at: https://github.com/JoeyBGOfficial/Through-the-Wall-Radar-Human-Activity-Recognition-Without-Using-Neural-Networks.

Modeling Micro-Doppler Signature of Multi-Propeller Drones in Distributed ISAC

Apr 07, 2025

Integrated Sensing and Communication (ISAC) will be one key feature of future 6G networks, enabling simultaneous communication and radar sensing. The radar sensing geometry of ISAC will be multistatic since that corresponds to the common distributed structure of a mobile communication network. Within this framework, micro-Doppler analysis plays a vital role in classifying targets based on their micromotions, such as rotating propellers, vibration, or moving limbs. However, research on bistatic micro-Doppler effects, particularly in ISAC systems utilizing OFDM waveforms, remains limited. Existing methods, including electromagnetic simulations, often lack scalability for generating the large datasets required to train machine learning algorithms. To address this gap, this work introduces an OFDM-based bistatic micro-Doppler model for multi-propeller drones. The proposed model adapts the classic thin-wire model to include a bistatic sensing configuration with an OFDM-like signal. Then, it extends further by incorporating multiple propellers and integrating the reflectivity of the drone's static parts. Measurements were performed to collect ground truth data for verification of the proposed model. Validation results show that the model generates micro-Doppler signatures closely resembling those obtained from measurements, demonstrating its potential as a tool for data generation. In addition, it offers a comprehensive approach to analyzing bistatic micro-Doppler effects.
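As rough orientation for the Doppler extent a propeller model has to reproduce, the standard bistatic Doppler relation bounds the blade-tip shift by f_max = (2 v_tip / λ) cos(β/2), where v_tip = 2π L f_rot is the tip speed, λ the carrier wavelength, and β the bistatic angle. The sketch below only evaluates this bound, which is reached when the tip velocity is aligned with the bistatic bisector. It is not the paper's thin-wire model, and the blade length, rotation rate, carrier frequency, and bistatic angles are hypothetical values chosen for illustration.

```python
import math

C = 299_792_458.0  # speed of light in m/s


def tip_speed(blade_length_m, rotation_hz):
    """Linear speed of the blade tip for a given rotation rate (revolutions/s)."""
    return 2.0 * math.pi * blade_length_m * rotation_hz


def max_bistatic_doppler(blade_length_m, rotation_hz, carrier_hz, bistatic_angle_deg):
    """Upper bound on the blade-tip Doppler shift in a bistatic geometry.

    A bistatic angle of 0 degrees recovers the monostatic case 2 * v_tip / lambda.
    """
    wavelength = C / carrier_hz
    beta = math.radians(bistatic_angle_deg)
    v_tip = tip_speed(blade_length_m, rotation_hz)
    return 2.0 * v_tip / wavelength * math.cos(beta / 2.0)


if __name__ == "__main__":
    # Hypothetical propeller and link parameters.
    for beta_deg in (0.0, 60.0, 120.0):
        f_max = max_bistatic_doppler(0.12, 100.0, 3.7e9, beta_deg)
        print(f"bistatic angle {beta_deg:5.1f} deg -> max tip Doppler {f_max:8.1f} Hz")
```

The cos(β/2) factor shows why wide bistatic angles compress the micro-Doppler spread, which is one reason monostatic results do not transfer directly to distributed ISAC geometries.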

One Snapshot is All You Need: A Generalized Method for mmWave Signal Generation

Mar 27, 2025

Wireless sensing systems, particularly those using mmWave technology, offer distinct advantages over traditional vision-based approaches, such as enhanced privacy and effectiveness in poor lighting conditions. These systems, leveraging FMCW signals, have shown success in human-centric applications such as localization and gesture recognition. However, comprehensive mmWave datasets for diverse applications are scarce, often constrained by pre-processed signatures (e.g., point clouds or RA heatmaps) and inconsistent annotation formats. To overcome these limitations, we propose mmGen, a novel and generalized framework tailored for full-scene mmWave signal generation. By constructing physical signal transmission models, mmGen synthesizes human-reflected and environment-reflected mmWave signals from the constructed 3D meshes. Additionally, we incorporate methods to account for material properties, antenna gains, and multipath reflections, enhancing the realism of the synthesized signals. We conduct extensive experiments using a prototype system with commercial mmWave devices and Kinect sensors. The results show that the average similarity of Range-Angle and micro-Doppler signatures between the synthesized and real-captured signals across three different environments exceeds 0.91 and 0.89, respectively, demonstrating the effectiveness and practical applicability of mmGen.

Bistatic Micro-Doppler Analysis of a Vertical Takeoff and Landing (VTOL) Drone in ICAS Framework

Feb 12, 2025

Integrated Communication and Sensing (ICAS) is a key technology that enables sensing functionalities within next-generation mobile communication (6G). Joint design and optimization of both functionalities could allow coexistence, and the technology is therefore advancing toward joint signal processing that uses the same hardware platform and a common spectrum. Contributing to ICAS sensing, this paper presents the measurement and analysis of the micro-Doppler signature of Vertical Takeoff and Landing (VTOL) drones. Measurement is performed with an OFDM-like communication signal and a bistatic constellation, which is a typical case in ICAS scenarios. This work shows that micro-Doppler signatures can be used to precisely distinguish flight modes, such as take-off, landing, hovering, transition, and cruising.

Open-Set Gait Recognition from Sparse mmWave Radar Point Clouds

Mar 10, 2025

The adoption of Millimeter-Wave (mmWave) radar devices for human sensing, particularly gait recognition, has recently gathered significant attention due to their efficiency, resilience to environmental conditions, and privacy-preserving nature. In this work, we tackle the challenging problem of Open-set Gait Recognition (OSGR) from sparse mmWave radar point clouds. Unlike most existing research, which assumes a closed-set scenario, our work considers the more realistic open-set case, where unknown subjects might be present at inference time and should be correctly recognized by the system. Point clouds are well-suited for edge computing applications with resource constraints, but are more significantly affected by noise and random fluctuations than other representations, like the more common micro-Doppler signature. This is the first work addressing open-set gait recognition with sparse point cloud data. To do so, we propose a novel neural network architecture that combines supervised classification with unsupervised reconstruction of the point clouds, creating a robust, rich, and highly regularized latent space of gait features. To detect unknown subjects at inference time, we introduce a probabilistic novelty detection algorithm that leverages the structured latent space and offers a tunable trade-off between inference speed and prediction accuracy. Along with this paper, we release mmGait10, an original human gait dataset featuring over five hours of measurements from ten subjects, under varied walking modalities. Extensive experimental results show that our solution improves the F1-score by 24% on average over state-of-the-art methods across multiple openness levels.
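The open-set decision itself can be illustrated with a deliberately simple rule: assign a latent vector to the nearest known subject, unless it is too far from every known subject, in which case it is rejected as unknown. The sketch below is a generic nearest-centroid baseline with a distance threshold, not the probabilistic novelty detection algorithm proposed in the paper. The latent vectors, the threshold value, and all function names are hypothetical.

```python
import math


def centroid(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    dim = len(vectors[0])
    return [sum(v[i] for v in vectors) / len(vectors) for i in range(dim)]


def fit_centroids(latents_by_subject):
    """Compute one latent-space centroid per known subject."""
    return {subject: centroid(vs) for subject, vs in latents_by_subject.items()}


def classify_open_set(z, centroids, threshold):
    """Return the nearest known subject, or 'unknown' if none is within threshold."""
    best_subject, best_dist = None, math.inf
    for subject, c in centroids.items():
        d = math.dist(z, c)  # Euclidean distance in latent space
        if d < best_dist:
            best_subject, best_dist = subject, d
    return best_subject if best_dist <= threshold else "unknown"


if __name__ == "__main__":
    # Hypothetical 2-D latent features for two enrolled subjects.
    train = {
        "subject_a": [[0.0, 0.1], [0.2, -0.1], [-0.1, 0.0]],
        "subject_b": [[3.0, 3.1], [2.9, 3.0], [3.2, 2.8]],
    }
    cents = fit_centroids(train)
    for z in ([0.1, 0.0], [3.0, 3.0], [10.0, -5.0]):
        print(z, "->", classify_open_set(z, cents, threshold=1.0))
```

Raising the threshold accepts more samples as known subjects at the cost of more missed unknowns, which is the basic trade-off any open-set detector has to tune.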
