Zied Bouraoui

Skim-Aware Contrastive Learning for Efficient Document Representation

Dec 30, 2025

Abstract: Although transformer-based models have shown strong performance in word- and sentence-level tasks, effectively representing long documents, especially in fields like law and medicine, remains difficult. Sparse attention mechanisms can handle longer inputs, but are resource-intensive and often fail to capture full-document context. Hierarchical transformer models offer better efficiency but do not clearly explain how they relate different sections of a document. In contrast, humans often skim texts, focusing on important sections to understand the overall message. Drawing from this human strategy, we introduce a new self-supervised contrastive learning framework that enhances long document representation. Our method randomly masks a section of the document and uses a natural language inference (NLI)-based contrastive objective to align it with relevant parts while distancing it from unrelated ones. This mimics how humans synthesize information, resulting in representations that are both richer and more computationally efficient. Experiments on legal and biomedical texts confirm significant gains in both accuracy and efficiency.

MODE: Multi-Objective Adaptive Coreset Selection

Dec 24, 2025

Abstract: We present MODE (Multi-Objective adaptive Data Efficiency), a framework that dynamically combines coreset selection strategies based on their evolving contribution to model performance. Unlike static methods, MODE adapts selection criteria to training phases: emphasizing class balance early, diversity during representation learning, and uncertainty at convergence. We show that MODE achieves a (1-1/e)-approximation with O(n log n) complexity and demonstrates competitive accuracy while providing interpretable insights into data utility evolution. Experiments show MODE reduces memory requirements

Generalizing Analogical Inference from Boolean to Continuous Domains

Nov 13, 2025

Abstract: Analogical reasoning is a powerful inductive mechanism, widely used in human cognition and increasingly applied in artificial intelligence. Formal frameworks for analogical inference have been developed for Boolean domains, where inference is provably sound for affine functions and approximately correct for functions close to affine. These results have informed the design of analogy-based classifiers. However, they do not extend to regression tasks or continuous domains. In this paper, we revisit analogical inference from a foundational perspective. We first present a counterexample showing that existing generalization bounds fail even in the Boolean setting. We then introduce a unified framework for analogical reasoning in real-valued domains based on parameterized analogies defined via generalized means. This model subsumes both Boolean classification and regression, and supports analogical inference over continuous functions. We characterize the class of analogy-preserving functions in this setting and derive both worst-case and average-case error bounds under smoothness assumptions. Our results offer a general theory of analogical inference across discrete and continuous domains.

Towards a Neurosymbolic Reasoning System Grounded in Schematic Representations

Sep 03, 2025

Abstract: Despite significant progress in natural language understanding, Large Language Models (LLMs) remain error-prone when performing logical reasoning, often lacking the robust mental representations that enable human-like comprehension. We introduce a prototype neurosymbolic system, Embodied-LM, that grounds understanding and logical reasoning in schematic representations based on image schemas, which are recurring patterns derived from sensorimotor experience that structure human cognition. Our system operationalizes the spatial foundations of these cognitive structures using declarative spatial reasoning within Answer Set Programming. Through evaluation on logical deduction problems, we demonstrate that LLMs can be guided to interpret scenarios through embodied cognitive structures, that these structures can be formalized as executable programs, and that the resulting representations support effective logical reasoning with enhanced interpretability. While our current implementation focuses on spatial primitives, it establishes the computational foundation for incorporating more complex and dynamic representations.

Enhancing DR Classification with Swin Transformer and Shifted Window Attention

Apr 20, 2025

Abstract: Diabetic retinopathy (DR) is a leading cause of blindness worldwide, underscoring the importance of early detection for effective treatment. However, automated DR classification remains challenging due to variations in image quality, class imbalance, and pixel-level similarities that hinder model training. To address these issues, we propose a robust preprocessing pipeline incorporating image cropping, Contrast-Limited Adaptive Histogram Equalization (CLAHE), and targeted data augmentation to improve model generalization and resilience. Our approach leverages the Swin Transformer, which utilizes hierarchical token processing and shifted window attention to efficiently capture fine-grained features while maintaining linear computational complexity. We validate our method on the Aptos and IDRiD datasets for multi-class DR classification, achieving accuracy rates of 89.65% and 97.40%, respectively. These results demonstrate the effectiveness of our model, particularly in detecting early-stage DR, highlighting its potential for improving automated retinal screening in clinical settings.

Grounding Agent Reasoning in Image Schemas: A Neurosymbolic Approach to Embodied Cognition

Mar 31, 2025

Abstract: Despite advances in embodied AI, agent reasoning systems still struggle to capture the fundamental conceptual structures that humans naturally use to understand and interact with their environment. To address this, we propose a novel framework that bridges embodied cognition theory and agent systems by leveraging a formal characterization of image schemas, which are defined as recurring patterns of sensorimotor experience that structure human cognition. By customizing LLMs to translate natural language descriptions into formal representations based on these sensorimotor patterns, we will be able to create a neurosymbolic system that grounds the agent's understanding in fundamental conceptual structures. We argue that such an approach enhances both efficiency and interpretability while enabling more intuitive human-agent interactions through shared embodied understanding.

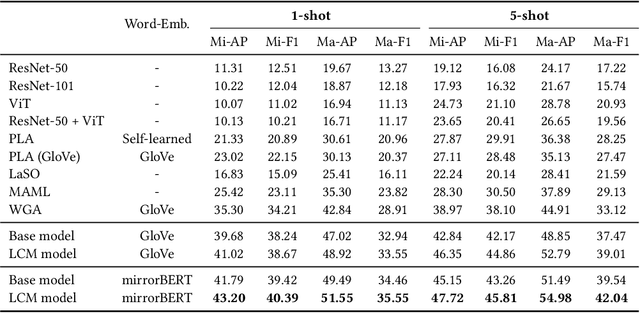

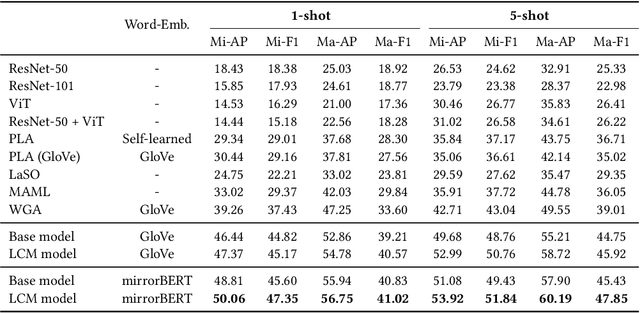

Modelling Multi-modal Cross-interaction for ML-FSIC Based on Local Feature Selection

Dec 18, 2024

Abstract: The aim of multi-label few-shot image classification (ML-FSIC) is to assign semantic labels to images, in settings where only a small number of training examples are available for each label. A key feature of the multi-label setting is that images often have several labels, which typically refer to objects appearing in different regions of the image. When estimating label prototypes, in a metric-based setting, it is thus important to determine which regions are relevant for which labels, but the limited amount of training data and the noisy nature of local features make this highly challenging. As a solution, we propose a strategy in which label prototypes are gradually refined. First, we initialize the prototypes using word embeddings, which allows us to leverage prior knowledge about the meaning of the labels. Second, taking advantage of these initial prototypes, we then use a Loss Change Measurement (LCM) strategy to select the local features from the training images (i.e. the support set) that are most likely to be representative of a given label. Third, we construct the final prototype of the label by aggregating these representative local features using a multi-modal cross-interaction mechanism, which again relies on the initial word embedding-based prototypes. Experiments on COCO, PASCAL VOC, NUS-WIDE, and iMaterialist show that our model substantially improves the current state-of-the-art.

REFINE-LM: Mitigating Language Model Stereotypes via Reinforcement Learning

Aug 18, 2024

Abstract: With the introduction of (large) language models, there has been significant concern about the unintended bias such models may inherit from their training data. A number of studies have shown that such models propagate gender stereotypes, as well as geographical and racial bias, among other biases. While existing works tackle this issue by preprocessing data and debiasing embeddings, the proposed methods require a lot of computational resources and annotation effort while being limited to certain types of biases. To address these issues, we introduce REFINE-LM, a debiasing method that uses reinforcement learning to handle different types of biases without any fine-tuning. By training a simple model on top of the word probability distribution of an LM, our bias-agnostic reinforcement learning method enables model debiasing without human annotations or significant computational resources. Experiments conducted on a wide range of models, including several LMs, show that our method (i) significantly reduces stereotypical biases while preserving LM performance; (ii) is applicable to different types of biases, generalizing across contexts such as gender, ethnicity, religion, and nationality-based biases; and (iii) is inexpensive to train.

Ontology Completion with Natural Language Inference and Concept Embeddings: An Analysis

Mar 25, 2024

Abstract: We consider the problem of finding plausible knowledge that is missing from a given ontology, as a generalisation of the well-studied taxonomy expansion task. One line of work treats this task as a Natural Language Inference (NLI) problem, thus relying on the knowledge captured by language models to identify the missing knowledge. Another line of work uses concept embeddings to identify what different concepts have in common, taking inspiration from cognitive models for category-based induction. These two approaches are intuitively complementary, but their effectiveness has not yet been compared. In this paper, we introduce a benchmark for evaluating ontology completion methods and thoroughly analyse the strengths and weaknesses of both approaches. We find that both approaches are indeed complementary, with hybrid strategies achieving the best overall results. We also find that the task is highly challenging for Large Language Models, even after fine-tuning.

Modelling Commonsense Commonalities with Multi-Facet Concept Embeddings

Mar 25, 2024

Abstract: Concept embeddings offer a practical and efficient mechanism for injecting commonsense knowledge into downstream tasks. Their core purpose is often not to predict the commonsense properties of concepts themselves, but rather to identify commonalities, i.e. sets of concepts which share some property of interest. Such commonalities are the basis for inductive generalisation, hence high-quality concept embeddings can make learning easier and more robust. Unfortunately, standard embeddings primarily reflect basic taxonomic categories, making them unsuitable for finding commonalities that refer to more specific aspects (e.g. the colour of objects or the materials they are made of). In this paper, we address this limitation by explicitly modelling the different facets of interest when learning concept embeddings. We show that this leads to embeddings which capture a more diverse range of commonsense properties, and consistently improves results in downstream tasks such as ultra-fine entity typing and ontology completion.
