Zhonggui Chen

RMAvatar: Photorealistic Human Avatar Reconstruction from Monocular Video Based on Rectified Mesh-embedded Gaussians

Jan 13, 2025

Abstract: We introduce RMAvatar, a novel human avatar representation that embeds Gaussian splats on a mesh to learn a clothed avatar from a monocular video. We use explicit mesh geometry to represent the motion and shape of a virtual human, and render appearance implicitly with Gaussian Splatting. Our method consists of two main modules: a Gaussian initialization module and a Gaussian rectification module. We embed Gaussians into triangular faces and control their motion through the mesh, which ensures low-frequency motion and surface deformation of the avatar. Because of the limitations of the linear blend skinning (LBS) formula, the human skeleton alone struggles to control complex non-rigid transformations. We therefore design a pose-related Gaussian rectification module to learn fine-detailed non-rigid deformations, further improving the realism and expressiveness of the avatar. We conduct extensive experiments on public datasets; RMAvatar shows state-of-the-art performance in both rendering quality and quantitative evaluations. Please see our project page at https://rm-avatar.github.io.
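The idea of driving mesh-embedded Gaussians through LBS can be illustrated with a minimal sketch. It is not the RMAvatar implementation: the function names, the barycentric parameterization of a Gaussian center, and the toy two-joint rig are all assumptions made for illustration, and the pose-related rectification module is not modeled.

```python
import numpy as np

def linear_blend_skinning(rest_vertices, weights, transforms):
    """Pose vertices with standard LBS: v' = sum_j w_j * (T_j @ v).

    rest_vertices: (V, 3) rest-pose positions
    weights:       (V, J) skinning weights, each row sums to 1
    transforms:    (J, 4, 4) homogeneous joint transforms
    """
    ones = np.ones((len(rest_vertices), 1))
    homo = np.concatenate([rest_vertices, ones], axis=1)      # (V, 4)
    blended = np.einsum("vj,jab->vab", weights, transforms)   # (V, 4, 4)
    posed = np.einsum("vab,vb->va", blended, homo)            # (V, 4)
    return posed[:, :3]

def gaussian_center_on_face(face_vertices, bary):
    """Hypothetical embedding: a Gaussian center stored as barycentric
    coordinates on one triangle, so it follows the triangle as it moves."""
    return bary @ face_vertices

# Toy rig (assumed): joint 0 is fixed, joint 1 translates by +1 along x.
rest = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0]])
weights = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
shift = np.eye(4)
shift[0, 3] = 1.0
transforms = np.stack([np.eye(4), shift])

posed = linear_blend_skinning(rest, weights, transforms)
center = gaussian_center_on_face(posed, np.array([1 / 3, 1 / 3, 1 / 3]))
```

Because the Gaussian center is a fixed convex combination of the face vertices, it can only follow the motion that the skinned mesh provides, which is why a separate correction is needed for fine non-rigid detail.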

Continual Local Replacement for Few-shot Image Recognition

Jan 23, 2020

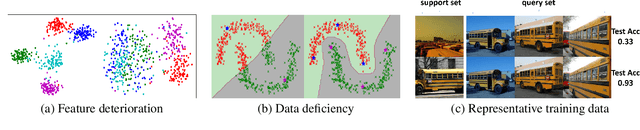

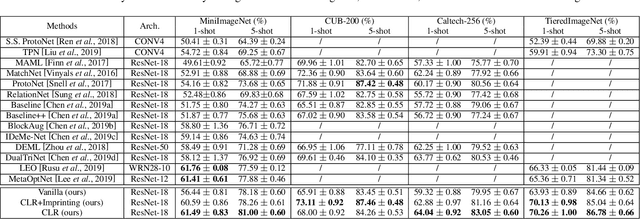

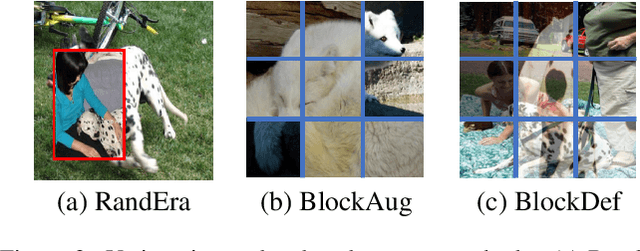

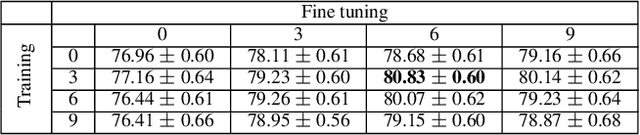

Abstract: The goal of few-shot learning is to learn a model that can recognize novel classes from one or a few training examples. It is challenging mainly for two reasons: (1) good feature representations of novel classes are lacking; (2) a few labeled examples cannot accurately represent the true data distribution. In this work, we use a sophisticated network architecture to learn better feature representations and focus on the second issue. A novel continual local replacement strategy is proposed to address the data deficiency problem. It takes advantage of the content in unlabeled images to continually enhance labeled ones. Specifically, a pseudo-labeling strategy is adopted to constantly select semantically similar images on the fly. The original labeled images are then locally replaced by the selected images for the next training epoch. In this way, the model can directly learn new semantic information from unlabeled images, and the capacity of supervised signals in the embedding space can be significantly enlarged. This allows the model to improve generalization and learn a better decision boundary for classification. Extensive experiments demonstrate that our approach achieves highly competitive results compared with existing methods on various few-shot image recognition benchmarks.
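The two steps described above, pseudo-labeling unlabeled images and locally replacing part of a labeled image, might be sketched as follows. The abstract does not specify how images are selected or which region is replaced, so the nearest-prototype rule, the square patch, and the function names here are assumptions rather than the paper's procedure.

```python
import numpy as np

def pseudo_label(unlabeled_feats, prototypes):
    """Assign each unlabeled embedding to its nearest class prototype.

    unlabeled_feats: (N, D) embeddings of unlabeled images
    prototypes:      (C, D) one embedding per class (e.g. the class mean)
    Returns the predicted class index and the distance to that prototype.
    """
    diffs = unlabeled_feats[:, None, :] - prototypes[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)                    # (N, C)
    return dists.argmin(axis=1), dists.min(axis=1)

def local_replace(labeled_img, selected_img, top, left, size):
    """Copy a square patch from the selected image into a copy of the
    labeled image; the patch location is a free choice in this sketch."""
    out = labeled_img.copy()
    out[top:top + size, left:left + size] = \
        selected_img[top:top + size, left:left + size]
    return out

# Toy data (assumed): two classes in a 2-D embedding space.
prototypes = np.array([[0.0, 0.0], [10.0, 10.0]])
feats = np.array([[1.0, 0.0], [9.0, 9.0]])
labels, dists = pseudo_label(feats, prototypes)

labeled = np.zeros((4, 4))
selected = np.ones((4, 4))
mixed = local_replace(labeled, selected, top=1, left=1, size=2)
```

In a training loop, a step like this would run once per epoch, so the labeled set seen by the model keeps changing while its labels stay fixed.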

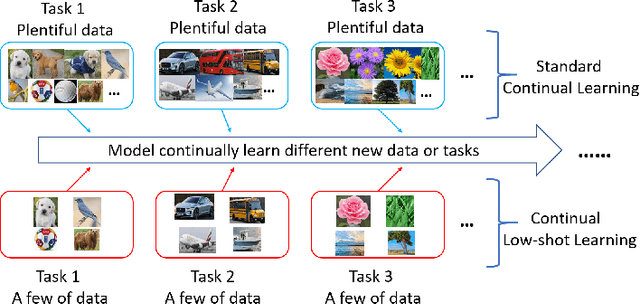

Learning Continually from Low-shot Data Stream

Sep 04, 2019

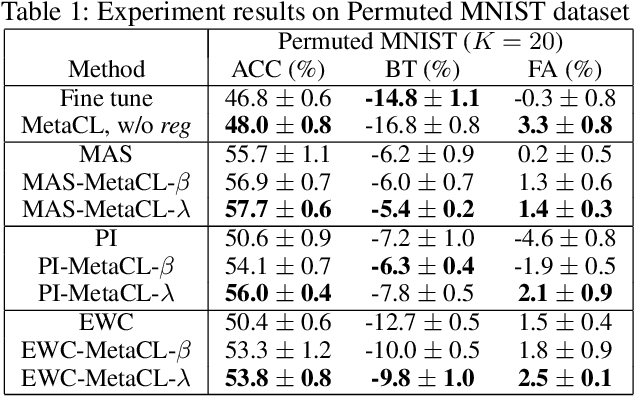

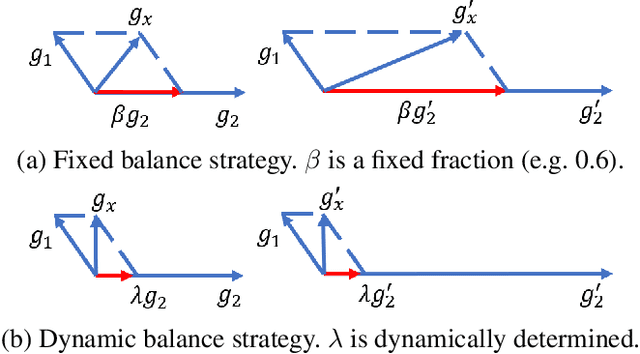

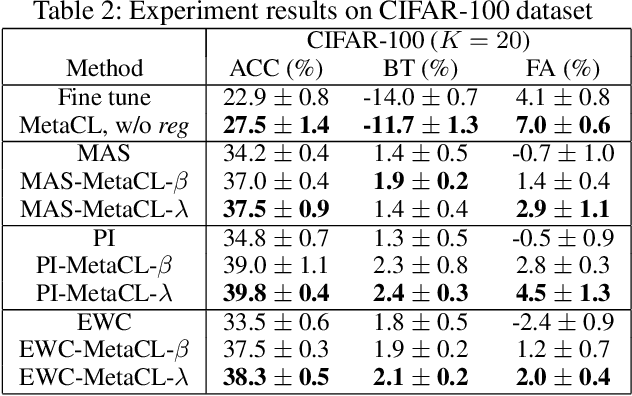

Abstract: While deep learning has achieved remarkable results in various applications, it is usually data-hungry and struggles to learn from non-stationary data streams. To overcome these two limitations, a deep learning model should not only be able to learn from a small amount of data, but also incrementally learn new concepts from a data stream over time without forgetting previous knowledge. Few works address both problems simultaneously. In this work, we propose a novel approach, MetaCL, which enables neural networks to effectively learn meta knowledge from a low-shot data stream without catastrophic forgetting. MetaCL trains a model to exploit the intrinsic features of data (i.e., meta knowledge) and dynamically penalizes changes to important model parameters to preserve learned knowledge. In this way, the deep learning model can efficiently acquire new knowledge from a small volume of data and still maintain high performance on previous tasks. MetaCL is conceptually simple, easy to implement, and model-agnostic. We implement our method on three recent regularization-based methods. Extensive experiments show that our approach leads to state-of-the-art performance on image classification benchmarks.
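Penalizing changes to important parameters is commonly written as an importance-weighted quadratic term added to the task loss. The sketch below shows that generic form only; it is not MetaCL itself, the abstract does not name the three regularization-based methods it builds on, and the function name, the `strength` coefficient, and the per-parameter `importance` vector are assumptions.

```python
import numpy as np

def regularized_loss(task_loss, params, old_params, importance, strength):
    """Generic regularization-based continual-learning objective:

        task_loss + (strength / 2) * sum_i importance_i * (theta_i - theta_old_i)^2

    params, old_params, importance are arrays of the same shape.
    """
    drift = params - old_params
    penalty = 0.5 * strength * np.sum(importance * drift ** 2)
    return task_loss + penalty

# Toy values (assumed): only the first parameter has moved.
params = np.array([1.0, 2.0])
old_params = np.array([0.0, 2.0])
importance = np.array([2.0, 5.0])
total = regularized_loss(1.0, params, old_params, importance, strength=1.0)
```

Parameters with large importance are held close to their previous values, while unimportant ones stay free to adapt to the new task.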

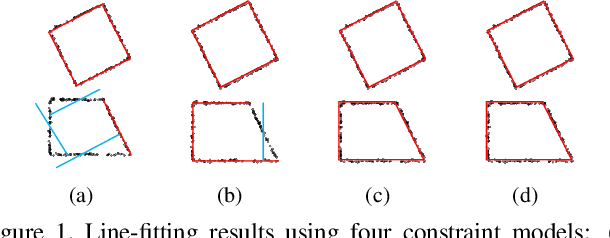

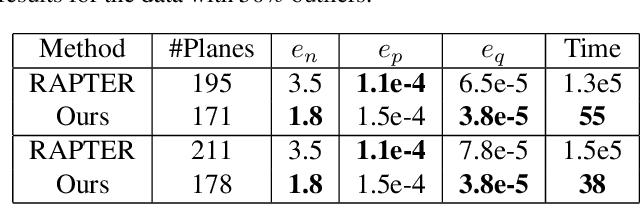

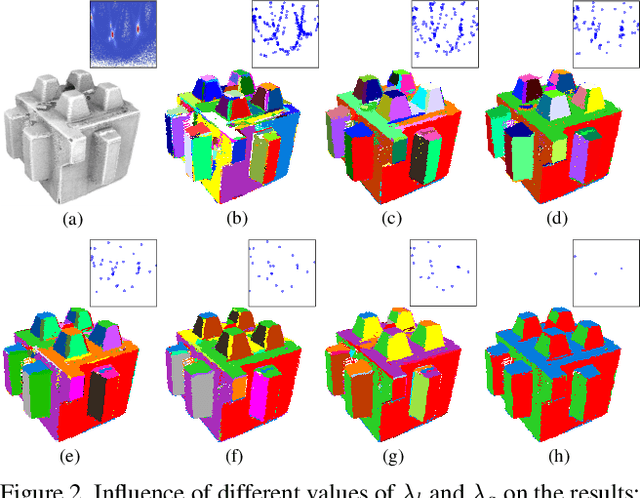

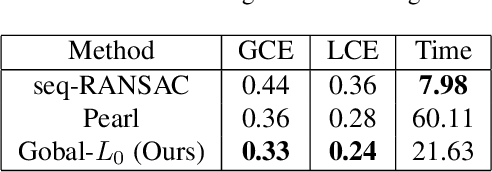

Fast Regularity-Constrained Plane Reconstruction

May 20, 2019

Abstract: Man-made environments typically comprise planar structures that exhibit numerous geometric relationships, such as parallelism, coplanarity, and orthogonality. Making full use of these relationships can considerably improve the robustness of algorithmic plane reconstruction of complex scenes. This research leverages a constraint model requiring minimal prior knowledge to implicitly establish relationships among planes. We introduce a method based on energy minimization to reconstruct planes consistent with our constraint model. The proposed algorithm is efficient, easy to understand, and simple to implement. The experimental results show that our algorithm successfully reconstructs planes under high percentages of noise and outliers. It outperforms other state-of-the-art regularity-constrained plane reconstruction methods in terms of speed and robustness.
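As a point of reference for the unconstrained case, a single plane can be fitted to points by total least squares, and the angle between two fitted normals indicates whether the planes are close to parallel or orthogonal. This sketch is not the paper's energy minimization or constraint model, which the abstract does not detail; the function names and test points are assumptions.

```python
import numpy as np

def fit_plane(points):
    """Total-least-squares plane fit.

    points: (N, 3) array. Returns (normal, offset) with normal . x = offset,
    where normal is a unit vector (its sign is arbitrary).
    """
    centroid = points.mean(axis=0)
    # The right singular vector with the smallest singular value is the
    # direction of least variance, i.e. the plane normal.
    _, _, vt = np.linalg.svd(points - centroid)
    normal = vt[-1]
    return normal, float(normal @ centroid)

def angle_between_normals(n1, n2):
    """Unsigned angle in degrees between two planes, in [0, 90]."""
    cos = abs(float(n1 @ n2)) / (np.linalg.norm(n1) * np.linalg.norm(n2))
    return float(np.degrees(np.arccos(np.clip(cos, 0.0, 1.0))))

# Toy points (assumed): a floor patch on z = 0 and a wall patch on x = 2.
floor = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [1.0, 1.0, 0.0]])
wall = np.array([[2.0, 0.0, 0.0], [2.0, 1.0, 0.0], [2.0, 0.0, 1.0], [2.0, 1.0, 1.0]])
n_floor, d_floor = fit_plane(floor)
n_wall, d_wall = fit_plane(wall)
angle = angle_between_normals(n_floor, n_wall)
```

A regularity-constrained method would go further and adjust the planes jointly so that near-parallel or near-orthogonal pairs become exactly so.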
