Zhirong Yang

Aalto University

Associative Poisoning to Generative Machine Learning

Nov 07, 2025

Abstract: The widespread adoption of generative models such as Stable Diffusion and ChatGPT has made them increasingly attractive targets for malicious exploitation, particularly through data poisoning. Existing poisoning attacks compromising synthesised data typically either cause broad degradation of generated data or require control over the training process, limiting their applicability in real-world scenarios. In this paper, we introduce a novel data poisoning technique called associative poisoning, which compromises fine-grained features of the generated data without requiring control of the training process. This attack perturbs only the training data to manipulate statistical associations between specific feature pairs in the generated outputs. We provide a formal mathematical formulation of the attack and prove its theoretical feasibility and stealthiness. Empirical evaluations using two state-of-the-art generative models demonstrate that associative poisoning effectively induces or suppresses feature associations while preserving the marginal distributions of the targeted features and maintaining high-quality outputs, thereby evading visual detection. These results suggest that generative systems used in image synthesis, synthetic dataset generation, and natural language processing are susceptible to subtle, stealthy manipulations that compromise their statistical integrity. To address this risk, we examine the limitations of existing defensive strategies and propose a novel countermeasure strategy.
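
The idea of changing an association while preserving marginals can be illustrated with a toy example. The sketch below is not the paper's attack; it only assumes two binary features with a 2x2 joint probability table, and the names `shift_association` and `covariance` are hypothetical. Moving probability mass `delta` onto the diagonal leaves every row and column sum unchanged while raising the covariance by exactly `delta`.

```python
import numpy as np

def shift_association(joint, delta):
    """Move probability mass `delta` onto the diagonal of a 2x2 joint table.

    Rows index feature A (0/1), columns index feature B (0/1).
    Each row and column gains +delta and -delta once, so marginals are unchanged.
    """
    joint = np.asarray(joint, dtype=float)
    shifted = joint + delta * np.array([[1.0, -1.0], [-1.0, 1.0]])
    if (shifted < 0).any():
        raise ValueError("delta is too large for this joint table")
    return shifted

def covariance(joint):
    """Covariance of two binary features: P(A=1, B=1) - P(A=1) * P(B=1)."""
    p_a = joint[1, :].sum()
    p_b = joint[:, 1].sum()
    return joint[1, 1] - p_a * p_b

# Illustrative data: two independent, balanced binary features.
clean = np.array([[0.25, 0.25],
                  [0.25, 0.25]])
poisoned = shift_association(clean, 0.1)

print("marginals of A:", clean.sum(axis=1), poisoned.sum(axis=1))
print("marginals of B:", clean.sum(axis=0), poisoned.sum(axis=0))
print("covariance:", covariance(clean), covariance(poisoned))
```

A negative `delta` would suppress an existing association in the same way, again without touching the marginals.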

MaTrRec: Uniting Mamba and Transformer for Sequential Recommendation

Jul 27, 2024

Abstract: Sequential recommendation systems aim to provide personalized recommendations by analyzing dynamic preferences and dependencies within user behavior sequences. Transformer models have recently proven effective at capturing user preferences. However, their quadratic computational complexity limits recommendation performance on long interaction sequences. Mamba, a representative State Space Model (SSM), efficiently captures user preferences in long interaction sequences with linear complexity. However, we find that Mamba's recommendation effectiveness is limited on short interaction sequences: it fails to recall items of actual interest to users and exacerbates the data-sparsity cold-start problem. To address this issue, we propose a new model, MaTrRec, which combines the strengths of Mamba and Transformer. The model leverages Mamba's advantages in handling long-term dependencies and the Transformer's global attention advantages in short-term dependencies, thereby enhancing predictive capabilities on both long and short interaction sequence datasets while balancing model efficiency. Notably, our model significantly alleviates the data-sparsity cold-start problem, with an improvement of up to 33% on the highly sparse Amazon Musical Instruments dataset. We conducted extensive experimental evaluations on five widely used public datasets. The experimental results show that our model outperforms the current state-of-the-art sequential recommendation models on all five datasets. The code is available at https://github.com/Unintelligentmumu/MaTrRec.

Self-Distillation Improves DNA Sequence Inference

May 14, 2024

Abstract: Self-supervised pretraining (SSP) has been recognized as a method to enhance prediction accuracy in various downstream tasks. However, its efficacy for DNA sequences remains somewhat constrained. This limitation stems primarily from the fact that most existing SSP approaches in genomics focus on masked language modeling of individual sequences, neglecting the crucial aspect of encoding statistics across multiple sequences. To overcome this challenge, we introduce an innovative deep neural network model, which incorporates collaborative learning between a 'student' and a 'teacher' subnetwork. In this model, the student subnetwork employs masked learning on nucleotides and progressively adapts its parameters to the teacher subnetwork through an exponential moving average approach. Concurrently, both subnetworks engage in contrastive learning, deriving insights from two augmented representations of the input sequences. This self-distillation process enables our model to effectively assimilate both contextual information from individual sequences and distributional data across the sequence population. We validated our approach with preliminary pretraining using the human reference genome, followed by applying it to 20 downstream inference tasks. The empirical results from these experiments demonstrate that our novel method significantly boosts inference performance across the majority of these tasks. Our code is available at https://github.com/wiedersehne/FinDNA.

NLEBench+NorGLM: A Comprehensive Empirical Analysis and Benchmark Dataset for Generative Language Models in Norwegian

Dec 03, 2023

Abstract: Recent advancements in Generative Language Models (GLMs) have transformed Natural Language Processing (NLP) by showcasing the effectiveness of the "pre-train, prompt, and predict" paradigm in utilizing pre-trained GLM knowledge for diverse applications. Despite their potential, these capabilities lack adequate quantitative characterization due to the absence of comprehensive benchmarks, particularly for low-resource languages. Existing low-resource benchmarks focus on discriminative language models like BERT, neglecting the evaluation of generative language models. Moreover, current benchmarks often overlook measuring generalization performance across multiple tasks, a crucial metric for GLMs. To bridge these gaps, we introduce NLEBench, a comprehensive benchmark tailored for evaluating natural language generation capabilities in Norwegian, a low-resource language. We use Norwegian as a case study to explore whether current GLMs and benchmarks in mainstream languages like English can reveal the unique characteristics of underrepresented languages. NLEBench encompasses a suite of real-world NLP tasks ranging from news storytelling, summarization, open-domain conversation, natural language understanding, instruction fine-tuning, toxicity and bias evaluation, to self-curated Chain-of-Thought investigation. It features two high-quality, human-annotated datasets: an instruction dataset covering traditional Norwegian cultures, idioms, slang, and special expressions, and a document-grounded multi-label dataset for topic classification, question answering, and summarization. This paper also introduces foundational Norwegian Generative Language Models (NorGLMs) developed with diverse parameter scales and Transformer-based architectures. Systematic evaluations on the proposed benchmark suite provide insights into the capabilities and scalability of NorGLMs across various downstream tasks.

ChordMixer: A Scalable Neural Attention Model for Sequences with Different Lengths

Jun 12, 2022

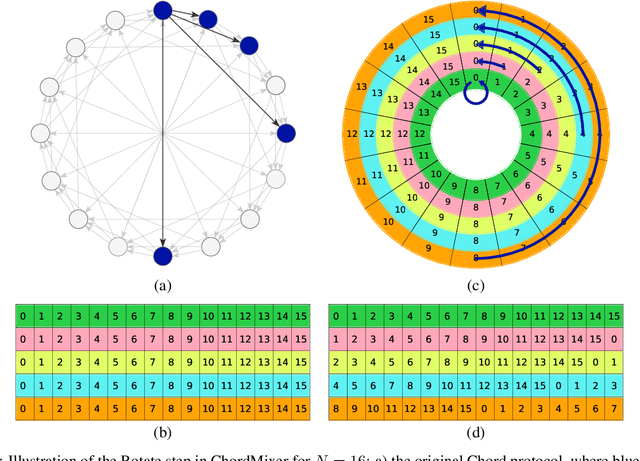

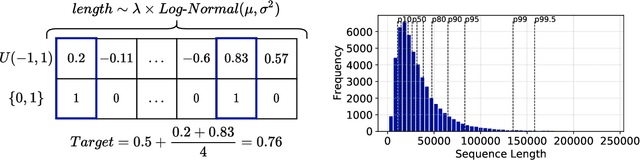

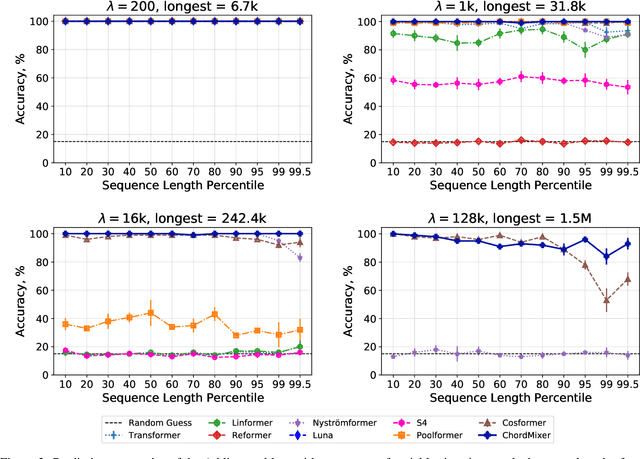

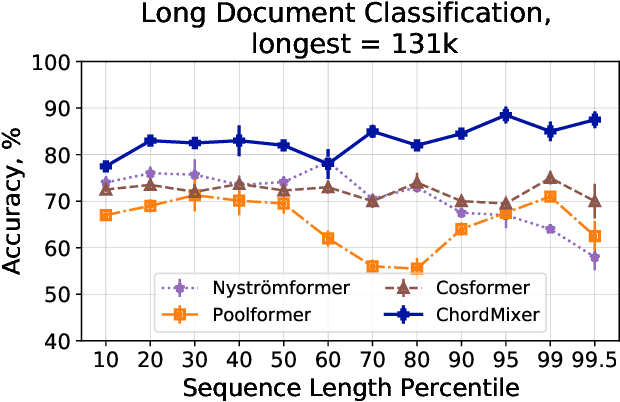

Abstract: Sequential data naturally have different lengths in many domains, with some very long sequences. As an important modeling tool, neural attention should capture long-range interaction in such sequences. However, most existing neural attention models admit only short sequences, or they have to employ chunking or padding to enforce a constant input length. Here we propose a simple neural network building block called ChordMixer, which can model the attention for long sequences with variable lengths. Each ChordMixer block consists of a position-wise rotation layer without learnable parameters and an element-wise MLP layer. Repeatedly applying such blocks forms an effective network backbone that mixes the input signals towards the learning targets. We have tested ChordMixer on the synthetic adding problem, long document classification, and DNA sequence-based taxonomy classification. The experimental results show that our method substantially outperforms other neural attention models.
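
A parameter-free rotation layer of the kind described above might look roughly like the following sketch. The details are assumptions, not taken from the abstract: the channels are split into about log2(N) + 1 groups, and group k is circularly shifted by 0, 1, 2, 4, ... positions. The function name `rotate_tracks` and the shift direction are hypothetical, and the element-wise MLP is omitted.

```python
import math
import numpy as np

def rotate_tracks(x):
    """Circularly shift channel groups of x (shape: length x channels).

    Assumption: the number of groups is log2(length) + 1 and the shifts are
    0, 1, 2, 4, ..., so the layer has no learnable parameters.
    """
    n, _ = x.shape
    n_tracks = int(math.log2(n)) + 1
    tracks = np.array_split(x, n_tracks, axis=1)
    shifts = [0] + [2 ** k for k in range(n_tracks - 1)]
    # np.roll with a negative shift brings position i + s to position i.
    rolled = [np.roll(t, -s, axis=0) for t, s in zip(tracks, shifts)]
    return np.concatenate(rolled, axis=1)

# Illustrative input: 8 positions, 4 channels, entry (i, j) equals 4 * i + j.
x = np.arange(8 * 4).reshape(8, 4)
out = rotate_tracks(x)
print(out[0])  # position 0 now holds values from positions 0, 1, 2 and 4
```

After such a rotation, an element-wise MLP applied at each position sees features that originated at several distant positions, which is one way repeated blocks could mix information across a long sequence.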

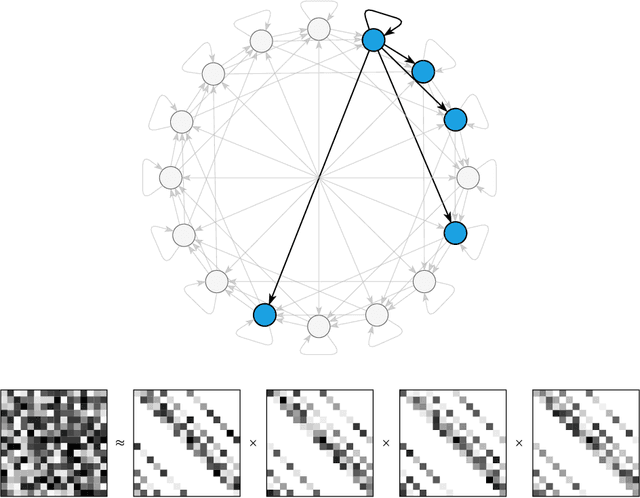

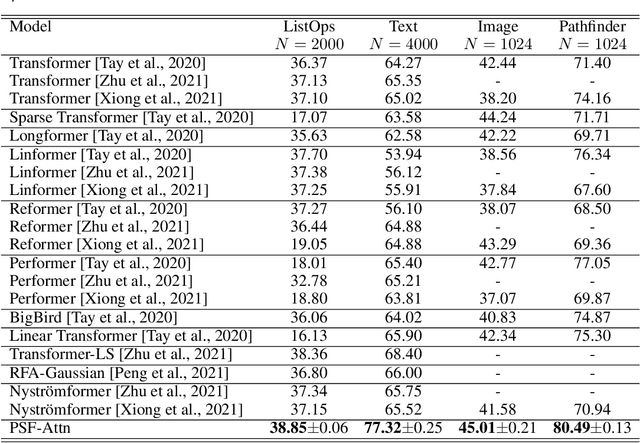

Paramixer: Parameterizing Mixing Links in Sparse Factors Works Better than Dot-Product Self-Attention

Apr 22, 2022

Abstract: Self-Attention is a widely used building block in neural modeling to mix long-range data elements. Most self-attention neural networks employ pairwise dot-products to specify the attention coefficients. However, these methods require $O(N^2)$ computing cost for sequence length $N$. Even though some approximation methods have been introduced to relieve the quadratic cost, the performance of the dot-product approach is still bottlenecked by the low-rank constraint in the attention matrix factorization. In this paper, we propose a novel scalable and effective mixing building block called Paramixer. Our method factorizes the interaction matrix into several sparse matrices, where we parameterize the non-zero entries by MLPs with the data elements as input. The overall computing cost of the new building block is as low as $O(N \log N)$. Moreover, all factorizing matrices in Paramixer are full-rank, so it does not suffer from the low-rank bottleneck. We have tested the new method on both synthetic and various real-world long sequential data sets and compared it with several state-of-the-art attention networks. The experimental results show that Paramixer has better performance in most learning tasks.

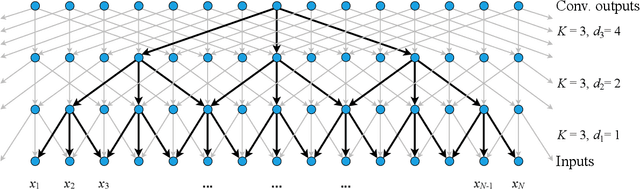

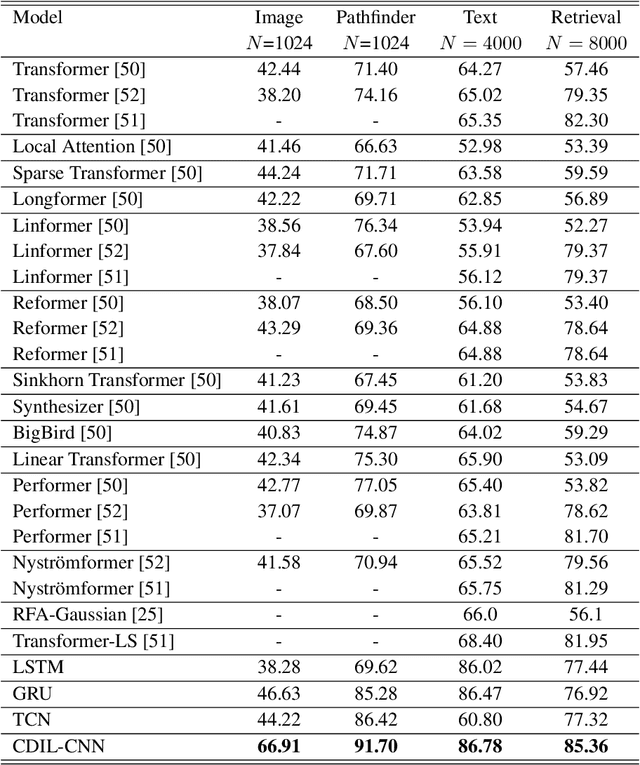

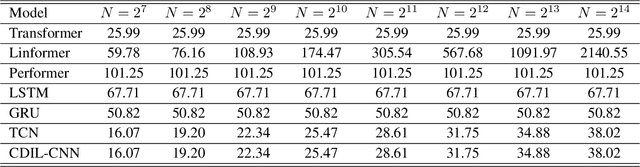

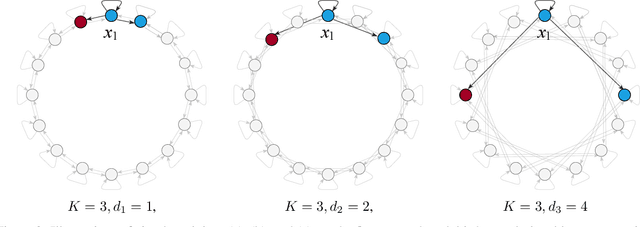

Classification of Long Sequential Data using Circular Dilated Convolutional Neural Networks

Jan 06, 2022

Abstract: Classification of long sequential data is an important Machine Learning task and appears in many application scenarios. Recurrent Neural Networks, Transformers, and Convolutional Neural Networks are three major techniques for learning from sequential data. Among these methods, Temporal Convolutional Networks (TCNs), which are scalable to very long sequences, have achieved remarkable progress in time series regression. However, the performance of TCNs for sequence classification is not satisfactory because they use a skewed connection protocol and output classes at the last position. Such asymmetry restricts their performance for classification, which depends on the whole sequence. In this work, we propose a symmetric multi-scale architecture called Circular Dilated Convolutional Neural Network (CDIL-CNN), where every position has an equal chance to receive information from other positions at the previous layers. Our model gives classification logits in all positions, and we can apply simple ensemble learning to achieve a better decision. We have tested CDIL-CNN on various long sequential datasets. The experimental results show that our method has superior performance over many state-of-the-art approaches.
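
The symmetric, wrap-around connectivity described above can be sketched with a single-channel toy convolution. This is an illustration under assumptions rather than the CDIL-CNN implementation: the kernel size of 3, the dilations 1, 2, 4, and the function name `circular_dilated_conv` are all hypothetical choices.

```python
import numpy as np

def circular_dilated_conv(x, weights, dilation):
    """Kernel-size-3 convolution on a 1-D signal with circular padding.

    Position i combines x[i - dilation], x[i] and x[i + dilation],
    with indices wrapping around the ends of the sequence.
    """
    w_left, w_center, w_right = weights
    return (w_left * np.roll(x, dilation)
            + w_center * x
            + w_right * np.roll(x, -dilation))

# Illustrative input: an impulse at position 0 of a length-8 sequence.
signal = np.zeros(8)
signal[0] = 1.0

y = signal
for dilation in (1, 2, 4):  # assumed exponentially growing dilations
    y = circular_dilated_conv(y, (1.0, 1.0, 1.0), dilation)

print(y)  # every position has received some of the impulse
```

With these three layers, the impulse reaches all eight positions from both directions, in contrast to a causal TCN where information flows only toward later positions.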

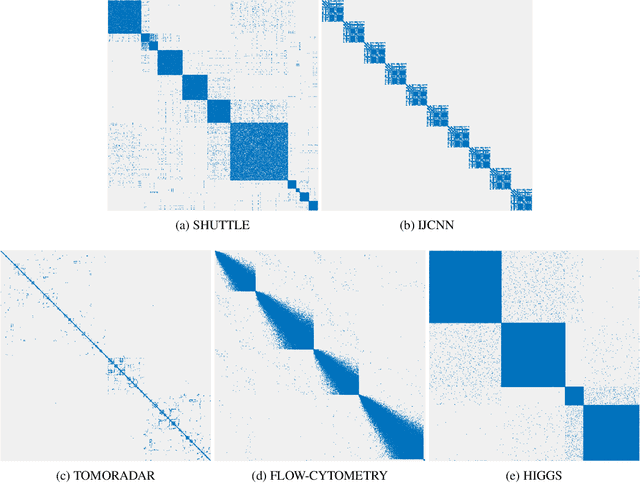

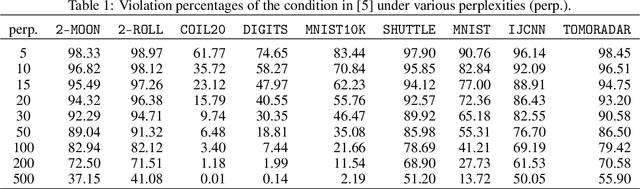

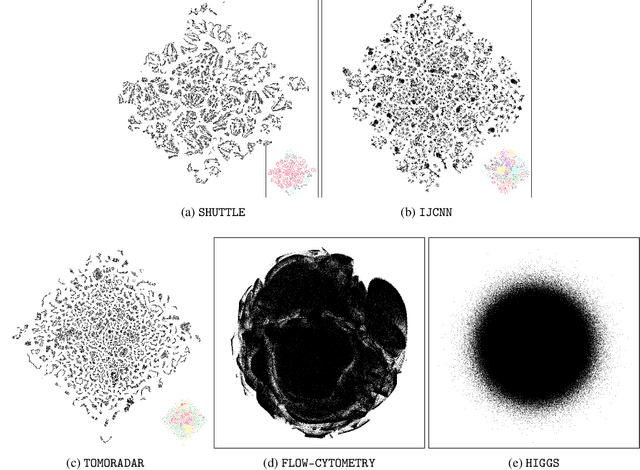

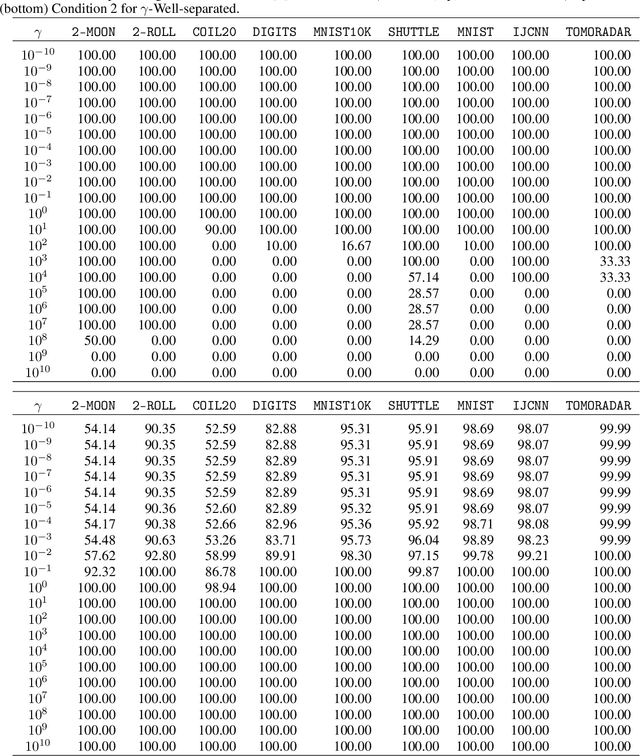

T-SNE Is Not Optimized to Reveal Clusters in Data

Oct 06, 2021

Abstract: Cluster visualization is an essential task for nonlinear dimensionality reduction as a data analysis tool. It is often believed that Student t-Distributed Stochastic Neighbor Embedding (t-SNE) can show clusters for well-clusterable data, with a smaller Kullback-Leibler divergence corresponding to a better quality. There has even been a theoretical proof guaranteeing this property. However, we point out that this is not necessarily the case: t-SNE may leave clustering patterns hidden despite strong signals present in the data. Extensive empirical evidence is provided to support our claim. First, several real-world counter-examples are presented, where t-SNE fails even if the input neighborhoods are well clusterable. Tuning hyperparameters in t-SNE or using better optimization algorithms does not help solve this issue because a better t-SNE learning objective can correspond to a worse cluster embedding. Second, we check the assumptions in the clustering guarantee of t-SNE and find they are often violated for real-world data sets.

Sparse Factorization of Large Square Matrices

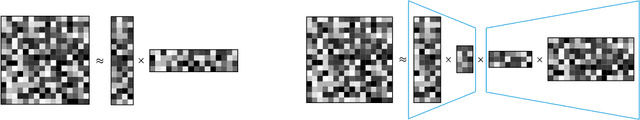

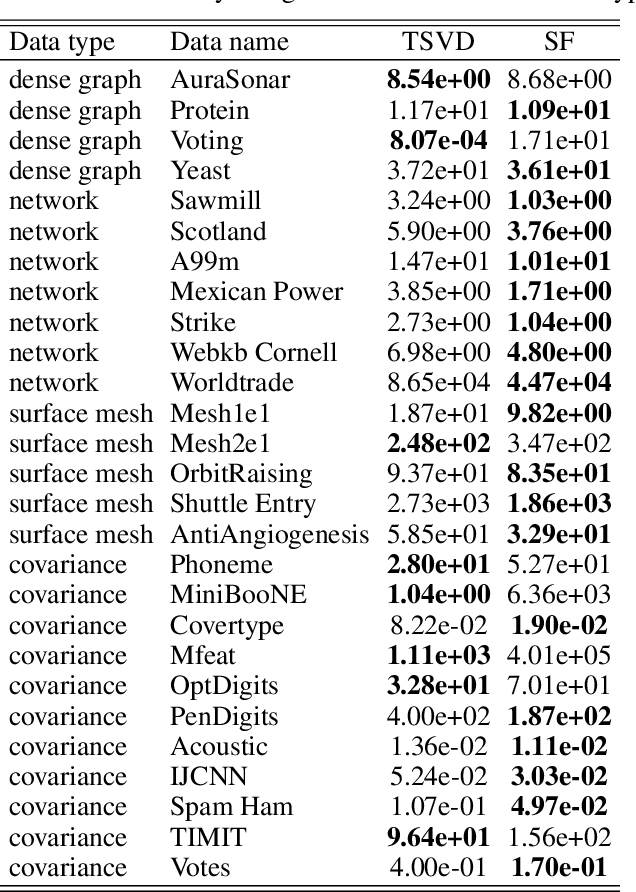

Sep 16, 2021

Abstract: Square matrices appear in many machine learning problems and models. Optimization over a large square matrix is expensive in memory and in time. Therefore, an economical approximation is needed. Conventional approximation approaches factorize the square matrix into a number of matrices of much lower ranks. However, the low-rank constraint is a performance bottleneck if the approximated matrix is intrinsically high-rank or close to full rank. In this paper, we propose to approximate a large square matrix with a product of sparse full-rank matrices. In the approximation, our method needs only $N(\log N)^2$ non-zero numbers for an $N\times N$ full matrix. We present both non-parametric and parametric ways to find the factorization. In the former, we learn the factorizing matrices directly, and in the latter, we train neural networks to map input data to the non-zero matrix entries. The sparse factorization method is tested for a variety of synthetic and real-world square matrices. The experimental results demonstrate that our method gives a better approximation when the approximated matrix is sparse and high-rank. Based on this finding, we use our parametric method as a scalable attention architecture that performs strongly in learning tasks for long sequential data and outperforms the Transformer and several of its variants.
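
To give a feel for the $N(\log N)^2$ figure, the short calculation below compares it with the $N^2$ entries of a dense matrix. The base-2 logarithm is an assumption, since the abstract does not state the base, and the function names are hypothetical.

```python
import math

def sparse_factor_nonzeros(n):
    """Non-zero count N * (log2 N)^2 quoted for the sparse factorization."""
    return n * math.log2(n) ** 2

def dense_entries(n):
    """Entry count of a full N x N matrix."""
    return n * n

for n in (1024, 65536):
    sparse = sparse_factor_nonzeros(n)
    dense = dense_entries(n)
    print(f"N={n}: sparse={sparse:.0f}, dense={dense}, ratio={dense / sparse:.1f}")
```

Under this assumption, the saving grows with the matrix size: roughly tenfold at N = 1024 and several hundredfold at N = 65536.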

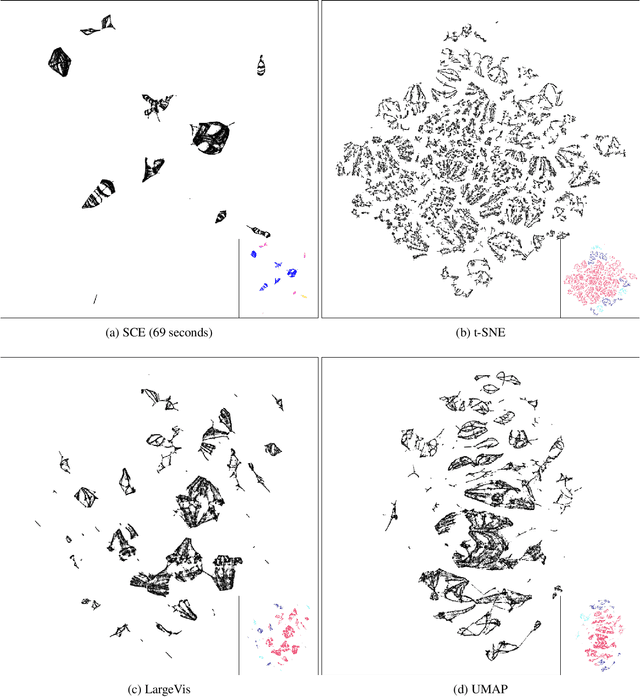

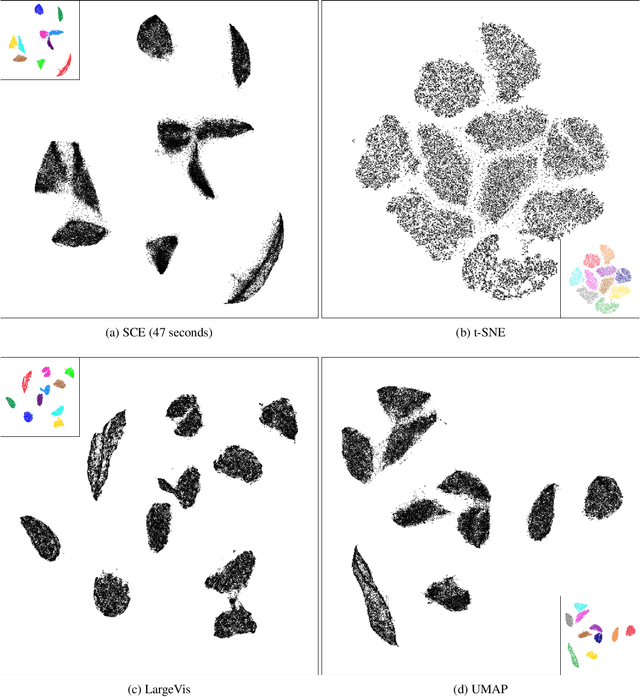

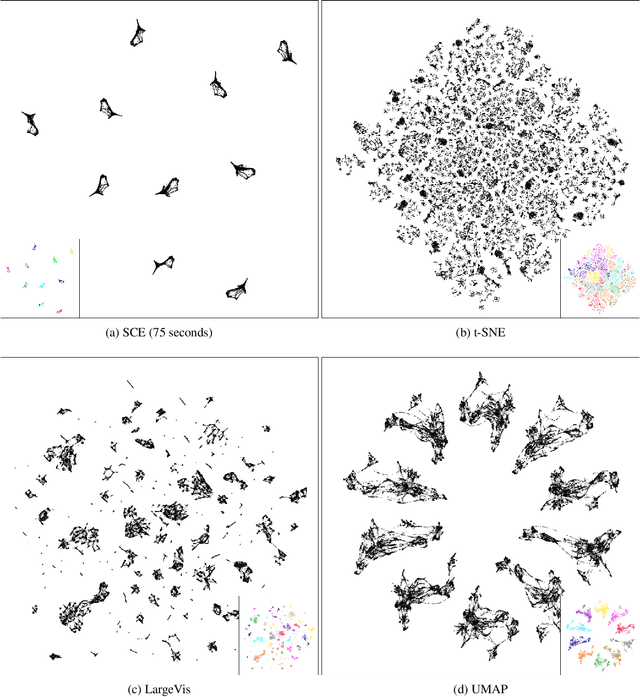

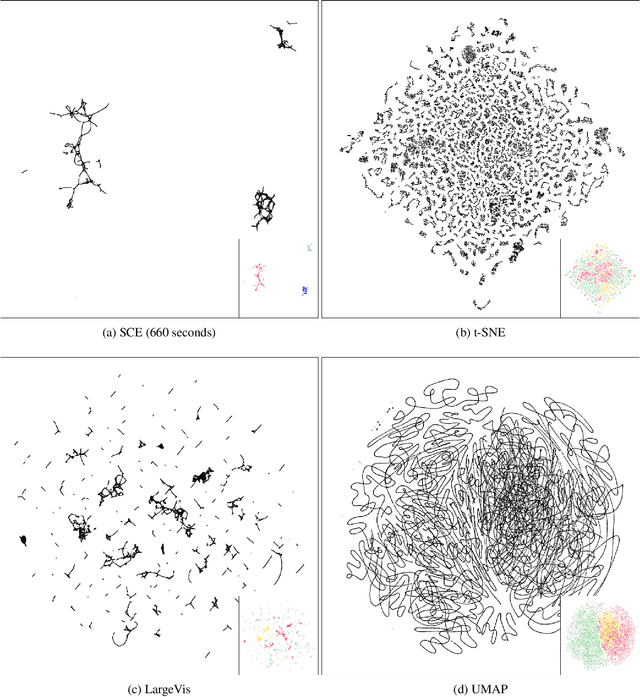

Stochastic Cluster Embedding

Aug 18, 2021

Abstract: Neighbor Embedding (NE), which aims to preserve pairwise similarities between data items, has been shown to yield an effective principle for data visualization. However, even the currently best NE methods such as Stochastic Neighbor Embedding (SNE) may leave large-scale patterns such as clusters hidden despite strong signals being present in the data. To address this, we propose a new cluster visualization method based on Neighbor Embedding. We first present a family of Neighbor Embedding methods which generalizes SNE by using non-normalized Kullback-Leibler divergence with a scale parameter. In this family, much better cluster visualizations often appear with a parameter value different from the one corresponding to SNE. We also develop efficient software that employs asynchronous stochastic block coordinate descent to optimize the new family of objective functions. The experimental results demonstrate that our method consistently and substantially improves visualization of data clusters compared with the state-of-the-art NE approaches.
