Zhiqiang Yan

See through the Dark: Learning Illumination-affined Representations for Nighttime Occupancy Prediction

May 28, 2025

Abstract:Occupancy prediction aims to estimate the 3D spatial distribution of occupied regions along with their corresponding semantic labels. Existing vision-based methods perform well on daytime benchmarks but struggle in nighttime scenarios due to limited visibility and challenging lighting conditions. To address these challenges, we propose LIAR, a novel framework that learns illumination-affined representations. LIAR first introduces Selective Low-light Image Enhancement (SLLIE), which leverages the illumination priors from daytime scenes to adaptively determine whether a nighttime image is genuinely dark or sufficiently well-lit, enabling more targeted global enhancement. Building on the illumination maps generated by SLLIE, LIAR further incorporates two illumination-aware components: 2D Illumination-guided Sampling (2D-IGS) and 3D Illumination-driven Projection (3D-IDP), to respectively tackle local underexposure and overexposure. Specifically, 2D-IGS modulates feature sampling positions according to illumination maps, assigning larger offsets to darker regions and smaller ones to brighter regions, thereby alleviating feature degradation in underexposed areas. Subsequently, 3D-IDP enhances semantic understanding in overexposed regions by constructing illumination intensity fields and supplying refined residual queries to the BEV context refinement process. Extensive experiments on both real and synthetic datasets demonstrate the superior performance of LIAR under challenging nighttime scenarios. The source code and pretrained models are available at https://github.com/yanzq95/LIAR.

Event-Driven Dynamic Scene Depth Completion

May 19, 2025

Abstract:Depth completion in dynamic scenes poses significant challenges due to rapid ego-motion and object motion, which can severely degrade the quality of input modalities such as RGB images and LiDAR measurements. Conventional RGB-D sensors often struggle to align precisely and capture reliable depth under such conditions. In contrast, event cameras with their high temporal resolution and sensitivity to motion at the pixel level provide complementary cues that are particularly beneficial in dynamic environments. To this end, we propose EventDC, the first event-driven depth completion framework. It consists of two key components: Event-Modulated Alignment (EMA) and Local Depth Filtering (LDF). Both modules adaptively learn the two fundamental components of convolution operations, offsets and weights, conditioned on motion-sensitive event streams. In the encoder, EMA leverages events to modulate the sampling positions of RGB-D features to achieve pixel redistribution for improved alignment and fusion. In the decoder, LDF refines depth estimations around moving objects by learning motion-aware masks from events. Additionally, EventDC incorporates two loss terms to further benefit global alignment and enhance local depth recovery. Moreover, we establish the first benchmark for event-based depth completion comprising one real-world and two synthetic datasets to facilitate future research. Extensive experiments on this benchmark demonstrate the superiority of our EventDC.

DuCos: Duality Constrained Depth Super-Resolution via Foundation Model

Mar 06, 2025

Abstract:We introduce DuCos, a novel depth super-resolution framework grounded in Lagrangian duality theory, offering a flexible integration of multiple constraints and reconstruction objectives to enhance accuracy and robustness. Our DuCos is the first to significantly improve generalization across diverse scenarios with foundation models as prompts. The prompt design consists of two key components: Correlative Fusion (CF) and Gradient Regulation (GR). CF facilitates precise geometric alignment and effective fusion between prompt and depth features, while GR refines depth predictions by enforcing consistency with sharp-edged depth maps derived from foundation models. Crucially, these prompts are seamlessly embedded into the Lagrangian constraint term, forming a synergistic and principled framework. Extensive experiments demonstrate that DuCos outperforms existing state-of-the-art methods, achieving superior accuracy, robustness, and generalization. The source code and pre-trained models will be publicly available.

Learning Inverse Laplacian Pyramid for Progressive Depth Completion

Feb 11, 2025

Abstract:Depth completion endeavors to reconstruct a dense depth map from sparse depth measurements, leveraging the information provided by a corresponding color image. Existing approaches mostly hinge on single-scale propagation strategies that iteratively ameliorate initial coarse depth estimates through pixel-level message passing. Despite their commendable outcomes, these techniques are frequently hampered by computational inefficiencies and a limited grasp of scene context. To circumvent these challenges, we introduce LP-Net, an innovative framework that implements a multi-scale, progressive prediction paradigm based on Laplacian Pyramid decomposition. Diverging from propagation-based approaches, LP-Net initiates with a rudimentary, low-resolution depth prediction to encapsulate the global scene context, subsequently refining this through successive upsampling and the reinstatement of high-frequency details at incremental scales. We have developed two novel modules to bolster this strategy: 1) the Multi-path Feature Pyramid module, which segregates feature maps into discrete pathways, employing multi-scale transformations to amalgamate comprehensive spatial information, and 2) the Selective Depth Filtering module, which dynamically learns to apply both smoothness and sharpness filters to judiciously mitigate noise while accentuating intricate details. By integrating these advancements, LP-Net not only secures state-of-the-art (SOTA) performance across both outdoor and indoor benchmarks such as KITTI, NYUv2, and TOFDC, but also demonstrates superior computational efficiency. At the time of submission, LP-Net ranks 1st among all peer-reviewed methods on the official KITTI leaderboard.
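
The coarse-to-fine idea behind a Laplacian pyramid can be illustrated with a minimal NumPy sketch. This is not LP-Net itself: in the paper the low-resolution depth and the high-frequency details are predicted by a network, whereas here they are computed from a known depth map purely to show how the decomposition and the progressive reconstruction fit together. The 2x2 average pooling, the nearest-neighbour upsampling, and all function names are assumptions chosen for brevity.

```python
import numpy as np

def downsample(x):
    """Halve the resolution by averaging each 2x2 block (assumed stand-in for blur + decimate)."""
    h, w = x.shape
    return x.reshape(h // 2, 2, w // 2, 2).mean(axis=(1, 3))

def upsample(x):
    """Double the resolution by nearest-neighbour repetition."""
    return x.repeat(2, axis=0).repeat(2, axis=1)

def build_laplacian_pyramid(depth, levels):
    """Return (coarsest_map, residuals), with residuals ordered coarse to fine."""
    residuals = []
    current = depth
    for _ in range(levels):
        low = downsample(current)
        # The residual holds the high-frequency detail lost by downsampling.
        residuals.append(current - upsample(low))
        current = low
    return current, residuals[::-1]

def reconstruct(coarse, residuals):
    """Start from the coarse map, then upsample and add detail back scale by scale."""
    current = coarse
    for residual in residuals:
        current = upsample(current) + residual
    return current
```

For a 16x16 input and three levels, `build_laplacian_pyramid` yields a 2x2 coarse map plus residuals of size 4x4, 8x8, and 16x16, and `reconstruct` recovers the input up to floating-point error. Height and width must be divisible by `2 ** levels` in this simplified version.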

Completion as Enhancement: A Degradation-Aware Selective Image Guided Network for Depth Completion

Dec 26, 2024

Abstract:In this paper, we introduce the Selective Image Guided Network (SigNet), a novel degradation-aware framework that transforms depth completion into depth enhancement for the first time. Moving beyond direct completion using convolutional neural networks (CNNs), SigNet initially densifies sparse depth data through non-CNN densification tools to obtain coarse yet dense depth. This approach eliminates the mismatch and ambiguity caused by direct convolution over irregularly sampled sparse data. Subsequently, SigNet redefines completion as enhancement, establishing a self-supervised degradation bridge between the coarse depth and the targeted dense depth for effective RGB-D fusion. To achieve this, SigNet leverages the implicit degradation to adaptively select high-frequency components (e.g., edges) of RGB data to compensate for the coarse depth. This degradation is further integrated into a multi-modal conditional Mamba, dynamically generating the state parameters to enable efficient global high-frequency information interaction. We conduct extensive experiments on the NYUv2, DIML, SUN RGBD, and TOFDC datasets, demonstrating the state-of-the-art (SOTA) performance of SigNet.

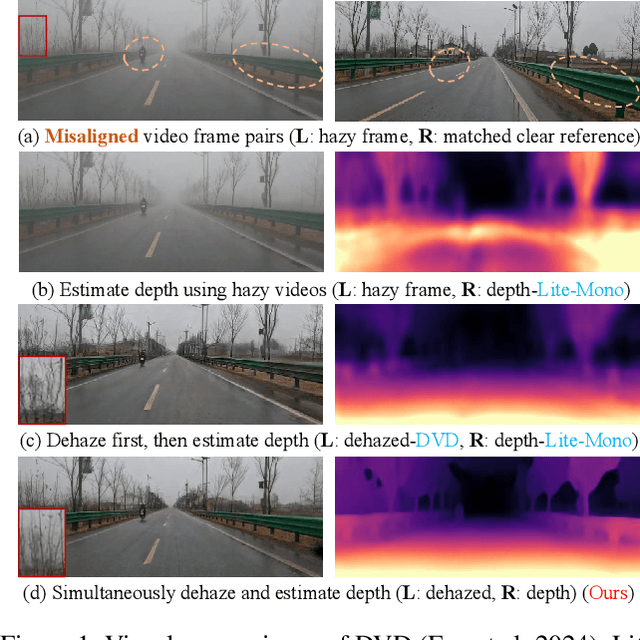

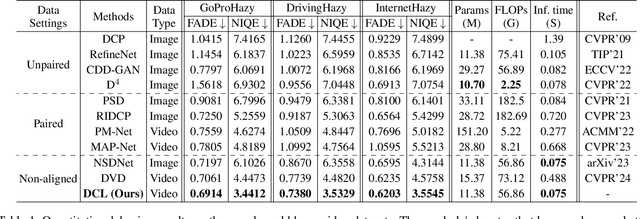

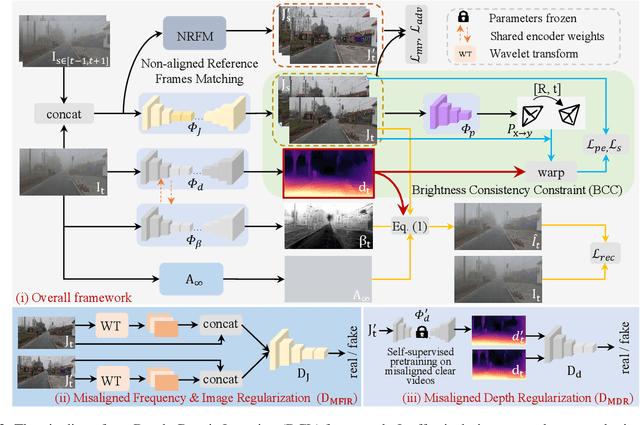

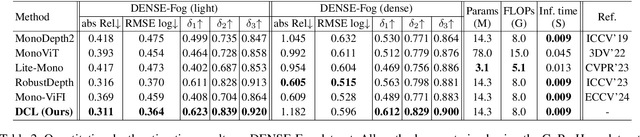

Depth-Centric Dehazing and Depth-Estimation from Real-World Hazy Driving Video

Dec 16, 2024

Abstract:In this paper, we study the challenging problem of simultaneously removing haze and estimating depth from real monocular hazy videos. These tasks are inherently complementary: enhanced depth estimation improves dehazing via the atmospheric scattering model (ASM), while superior dehazing contributes to more accurate depth estimation through the brightness consistency constraint (BCC). To tackle these intertwined tasks, we propose a novel depth-centric learning framework that integrates the ASM model with the BCC constraint. Our key idea is that both ASM and BCC rely on a shared depth estimation network. This network simultaneously exploits adjacent dehazed frames to enhance depth estimation via BCC and uses the refined depth cues to more effectively remove haze through ASM. Additionally, we leverage a non-aligned clear video and its estimated depth to independently regularize the dehazing and depth estimation networks. This is achieved by designing two discriminator networks: $D_{MFIR}$ enhances high-frequency details in dehazed videos, and $D_{MDR}$ reduces the occurrence of black holes in low-texture regions. Extensive experiments demonstrate that the proposed method outperforms current state-of-the-art techniques in both video dehazing and depth estimation tasks, especially in real-world hazy scenes. Project page: https://fanjunkai1.github.io/projectpage/DCL/index.html.
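
The coupling between depth and haze can be made concrete with the commonly used form of the atmospheric scattering model, I = J * t + A * (1 - t) with transmission t = exp(-beta * d). The sketch below is an illustration under stated assumptions, not the paper's implementation: the scalar airlight `airlight`, the scattering coefficient `beta`, the clamp `t_min`, and the function names are all hypothetical, and the paper's exact formulation may differ.

```python
import numpy as np

def transmission(depth, beta):
    """Transmission map t = exp(-beta * d); farther pixels transmit less scene light."""
    return np.exp(-beta * depth)

def add_haze(clear, depth, airlight, beta):
    """Synthesize a hazy image: I = J * t + A * (1 - t)."""
    t = transmission(depth, beta)[..., None]  # broadcast over colour channels
    return clear * t + airlight * (1.0 - t)

def remove_haze(hazy, depth, airlight, beta, t_min=0.05):
    """Invert the model given depth: J = (I - A * (1 - t)) / t."""
    # Clamp t (assumed safeguard) so distant pixels do not cause division by ~0.
    t = np.maximum(transmission(depth, beta), t_min)[..., None]
    return (hazy - airlight * (1.0 - t)) / t
```

Because `remove_haze` depends directly on `depth`, a better depth estimate gives a better dehazed frame, which is the direction of the ASM link described in the abstract. The inversion is exact only where the transmission stays above `t_min`.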

DCDepth: Progressive Monocular Depth Estimation in Discrete Cosine Domain

Oct 19, 2024

Abstract:In this paper, we introduce DCDepth, a novel framework for the long-standing monocular depth estimation task. Moving beyond conventional pixel-wise depth estimation in the spatial domain, our approach estimates the frequency coefficients of depth patches after transforming them into the discrete cosine domain. This unique formulation allows for the modeling of local depth correlations within each patch. Crucially, the frequency transformation segregates the depth information into various frequency components, with low-frequency components encapsulating the core scene structure and high-frequency components detailing the finer aspects. This decomposition forms the basis of our progressive strategy, which begins with the prediction of low-frequency components to establish a global scene context, followed by successive refinement of local details through the prediction of higher-frequency components. We conduct comprehensive experiments on NYU-Depth-V2, TOFDC, and KITTI datasets, and demonstrate the state-of-the-art performance of DCDepth. Code is available at https://github.com/w2kun/DCDepth.
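
The low-to-high frequency progression can be sketched with a plain orthonormal DCT-II on a single square depth patch. This is only an illustration: DCDepth predicts the coefficients with a network, while the sketch computes them from a known patch. The patch size, the `u + v` frequency ordering, and the function names are assumptions, not details taken from the paper.

```python
import numpy as np

def dct_matrix(n):
    """Orthonormal DCT-II basis matrix of size n x n."""
    k = np.arange(n)[:, None]  # frequency index
    i = np.arange(n)[None, :]  # sample index
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] /= np.sqrt(2.0)    # rescale the DC row so that c @ c.T is the identity
    return c

def dct2(patch):
    """2D DCT of a square patch."""
    c = dct_matrix(patch.shape[0])
    return c @ patch @ c.T

def idct2(coeffs):
    """Inverse 2D DCT of a square coefficient block."""
    c = dct_matrix(coeffs.shape[0])
    return c.T @ coeffs @ c

def progressive_reconstructions(patch):
    """Reconstruct the patch while admitting higher and higher frequencies."""
    n = patch.shape[0]
    coeffs = dct2(patch)
    u, v = np.meshgrid(np.arange(n), np.arange(n), indexing="ij")
    outputs = []
    for max_freq in range(2 * n - 1):
        mask = (u + v) <= max_freq  # keep only coefficients up to this band
        outputs.append(idct2(coeffs * mask))
    return outputs
```

The first reconstruction keeps only the DC coefficient and is therefore a constant patch at the mean depth; the last keeps every coefficient and matches the input. Since the transform is orthonormal, the squared reconstruction error cannot increase as more coefficients are kept.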

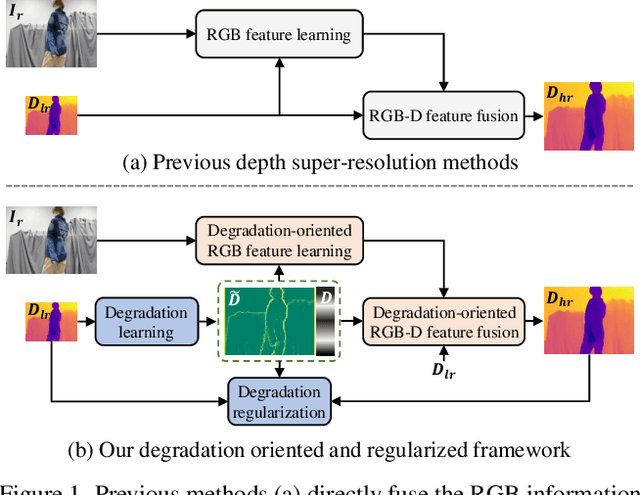

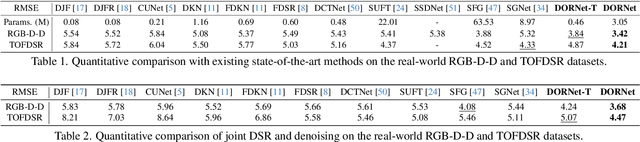

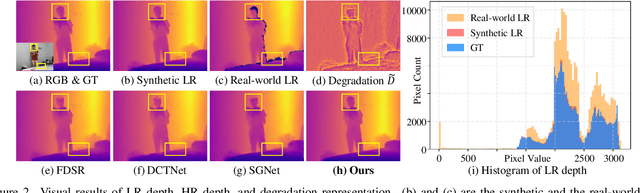

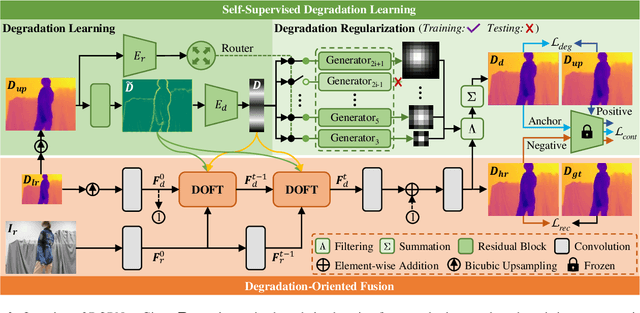

Degradation Oriented and Regularized Network for Real-World Depth Super-Resolution

Oct 15, 2024

Abstract:Existing RGB-guided depth super-resolution methods achieve excellent performance under the assumption of fixed and known degradation (e.g., bicubic downsampling). However, in real-world scenarios, the captured depth often suffers from unconventional and unknown degradation due to sensor limitations and the complexity of imaging environments (e.g., low-reflectivity surfaces, illumination). The performance of these methods declines significantly when real degradations differ from their assumptions. To address these issues, we propose a Degradation Oriented and Regularized Network, DORNet, which focuses on learning a degradation representation of low-resolution depth that can provide targeted guidance for depth recovery. Specifically, we first design self-supervised Degradation Learning to model the discriminative degradation representation of low-resolution depth using routing selection-based Degradation Regularization. Then, we present Degradation Awareness, which recursively conducts multiple Degradation-Oriented Feature Transformations, each of which selectively embeds RGB information into the depth based on the learned degradation representation. Extensive experimental results on both real and synthetic datasets demonstrate that our method achieves state-of-the-art performance.

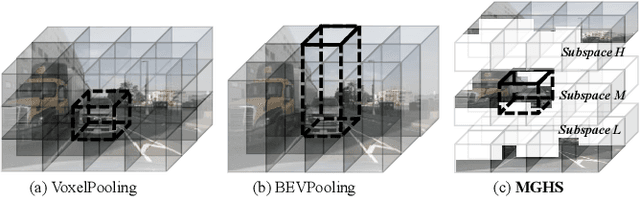

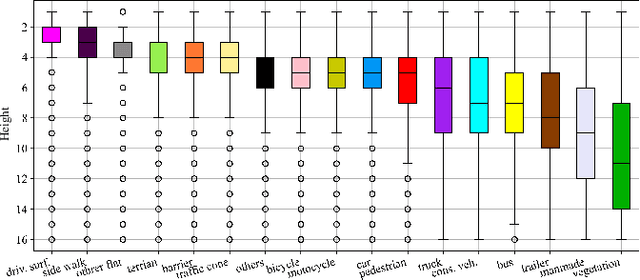

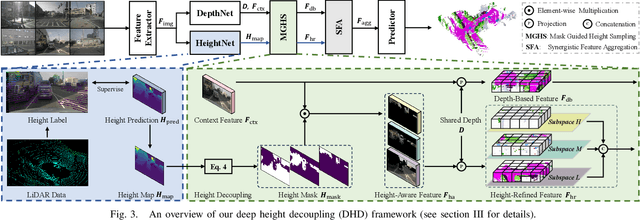

Deep Height Decoupling for Precise Vision-based 3D Occupancy Prediction

Sep 12, 2024

Abstract:The task of vision-based 3D occupancy prediction aims to reconstruct 3D geometry and estimate its semantic classes from 2D color images, where the 2D-to-3D view transformation is an indispensable step. Most previous methods conduct forward projection, such as BEVPooling and VoxelPooling, both of which map the 2D image features into 3D grids. However, the current grid representing features within a certain height range usually introduces many confusing features that belong to other height ranges. To address this challenge, we present Deep Height Decoupling (DHD), a novel framework that incorporates an explicit height prior to filter out the confusing features. Specifically, DHD first predicts height maps via explicit supervision. Based on the height distribution statistics, DHD designs Mask Guided Height Sampling (MGHS) to adaptively decouple the height map into multiple binary masks. MGHS projects the 2D image features into multiple subspaces, where each grid contains features within reasonable height ranges. Finally, a Synergistic Feature Aggregation (SFA) module is deployed to enhance the feature representation through channel and spatial affinities, enabling further occupancy refinement. On the popular Occ3D-nuScenes benchmark, our method achieves state-of-the-art performance even with minimal input frames. Code is available at https://github.com/yanzq95/DHD.
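
The step of decoupling a height map into binary masks can be pictured with a small NumPy sketch. It is a simplified illustration under assumptions, not the MGHS module: the abstract says the height ranges come from height distribution statistics, while the `bin_edges` values used here are hypothetical and fixed by hand, as is the function name.

```python
import numpy as np

def height_to_masks(height_map, bin_edges):
    """Split a height map into one binary mask per height interval."""
    # np.digitize returns, for each pixel, the index of the interval it falls into:
    # 0 below the first edge, len(bin_edges) at or above the last edge.
    bin_index = np.digitize(height_map, bin_edges)
    num_bins = len(bin_edges) + 1
    return np.stack([bin_index == b for b in range(num_bins)], axis=0)

# Hypothetical example: heights in metres and hand-picked interval edges.
height_map = np.array([[-1.0, 0.5],
                       [2.0, 4.5]])
masks = height_to_masks(height_map, bin_edges=[0.0, 1.0, 3.0])
```

Each pixel belongs to exactly one mask, so multiplying image features by a given mask keeps only the features whose predicted height falls in that interval.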

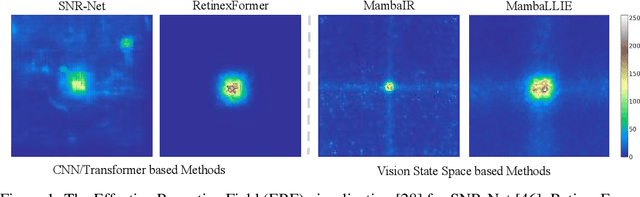

MambaLLIE: Implicit Retinex-Aware Low Light Enhancement with Global-then-Local State Space

May 25, 2024

Abstract:Recent advances in low light image enhancement have been dominated by Retinex-based learning frameworks, leveraging convolutional neural networks (CNNs) and Transformers. However, the vanilla Retinex theory primarily addresses global illumination degradation and neglects local issues such as noise and blur in dark conditions. Moreover, CNNs and Transformers struggle to capture global degradation due to their limited receptive fields. While state space models (SSMs) have shown promise in long-sequence modeling, they face challenges in combining local invariants and global context in visual data. In this paper, we introduce MambaLLIE, an implicit Retinex-aware low light enhancer featuring a global-then-local state space design. We first propose a Local-Enhanced State Space Module (LESSM) that incorporates an augmented local bias within a 2D selective scan mechanism, enhancing the original SSMs by preserving local 2D dependency. Additionally, an Implicit Retinex-aware Selective Kernel module (IRSK) dynamically selects features using spatially-varying operations, adapting to varying inputs through an adaptive kernel selection process. Our Global-then-Local State Space Block (GLSSB) integrates LESSM and IRSK with LayerNorm as its core. This design enables MambaLLIE to achieve comprehensive global long-range modeling and flexible local feature aggregation. Extensive experiments demonstrate that MambaLLIE significantly outperforms state-of-the-art CNN and Transformer-based methods. Project Page: https://mamballie.github.io/anon/
