Zhibo Pang

ABB Corporate Research, KTH Royal Institute of Technology

Millimeter-Wave ISAC Testbed Using Programmable Digital Coding Dynamic Metasurface Antenna: Practical Design and Implementation

Feb 19, 2025

Abstract:Dynamic Metasurface Antennas (DMAs) are transforming reconfigurable antenna technology by enabling energy-efficient, cost-effective beamforming through programmable meta-elements, eliminating the need for traditional phase shifters and delay lines. This breakthrough technology is emerging to revolutionize beamforming for next-generation wireless communication and sensing networks. In this paper, we present the design and real-world implementation of a DMA-assisted wireless communication platform operating in the license-free 60 GHz millimeter-wave (mmWave) band. Our system employs high-speed binary-coded sequences generated via a field-programmable gate array (FPGA), enabling real-time beam steering for spatial multiplexing and independent data transmission. A proof-of-concept experiment successfully demonstrates high-definition quadrature phase-shift keying (QPSK) modulated video transmission at 62 GHz. Furthermore, leveraging the DMA's multi-beam capability, we simultaneously transmit video to two spatially separated receivers, achieving accurate demodulation. We envision the proposed mmWave testbed as a platform for enabling the seamless integration of sensing and communication by allowing video transmission to be replaced with sensing data or utilizing an auxiliary wireless channel to transmit sensing information to multiple receivers. This synergy paves the way for advancing integrated sensing and communication (ISAC) in beyond-5G and 6G networks. Additionally, our testbed demonstrates potential for real-world use cases, including mmWave backhaul links and massive multiple-input multiple-output (MIMO) mmWave base stations.

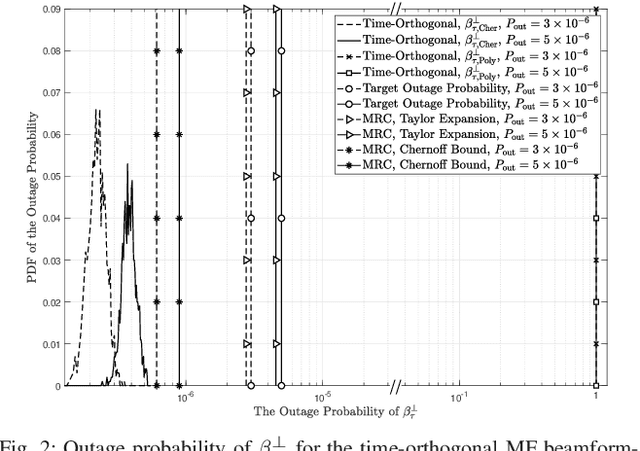

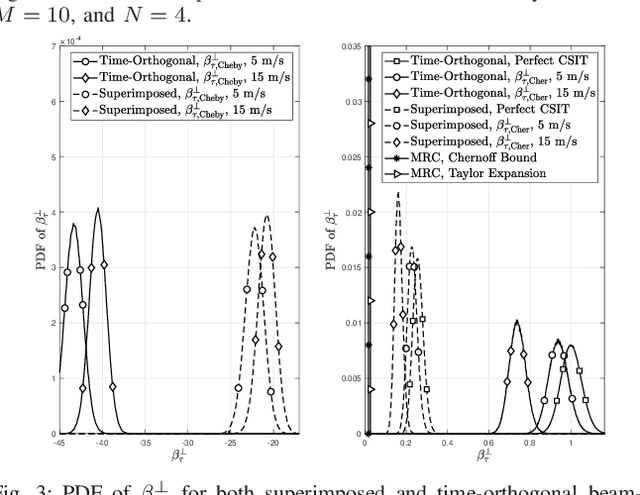

On Chernoff Lower-Bound of Outage Threshold for Non-Central $\chi^2$-Distributed Beamforming Gain in URLLC Systems

Dec 28, 2023

Abstract:The cumulative distribution function (CDF) of a non-central $\chi^2$-distributed random variable (RV) is often used when measuring the outage probability of communication systems. For ultra-reliable low-latency communication (URLLC), it is important but mathematically challenging to determine the outage threshold for an extremely small outage target. This motivates us to investigate lower bounds of the outage threshold, and it is found that the one derived from the Chernoff inequality (named Cher-LB) is the most effective lower bound. This finding is associated with three rigorously established properties of the Cher-LB with respect to the mean, variance, reliability requirement, and degrees of freedom of the non-central $\chi^2$-distributed RV. The Cher-LB is then employed to predict the beamforming gain in URLLC for both conventional multi-antenna systems (i.e., MIMO) under first-order Markov time-varying channel and reconfigurable intelligent surface (RIS) systems. It is exhibited that, with the proposed Cher-LB, the pessimistic prediction of the beamforming gain is made sufficiently accurate for guaranteed reliability as well as the transmit-energy efficiency.
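A minimal sketch of how a Chernoff-type lower bound on the outage threshold can be computed, assuming the textbook moment generating function of a non-central $\chi^2$ RV with `k` degrees of freedom and noncentrality `lam`. The function name `chernoff_threshold_lb`, its parameters, and the grid search over `s` are illustrative assumptions, not the paper's derivation of the Cher-LB.

```python
import math


def chernoff_threshold_lb(k, lam, eps, grid=4000):
    """Lower bound on t* where P(X <= t*) = eps, X ~ non-central chi^2(k, lam).

    For any s > 0, P(X <= t) <= exp(s*t) * E[exp(-s*X)], with
    E[exp(-s*X)] = exp(-lam*s/(1+2s)) * (1+2s)**(-k/2).
    Setting the right-hand side equal to eps and solving for t gives t(s).
    Every t(s) is a valid lower bound on t*, so we keep the largest one found.
    """
    best = 0.0  # the threshold is non-negative, so 0 is always a valid bound
    for i in range(grid + 1):
        s = 10.0 ** (-6 + 16 * i / grid)  # log-spaced s in [1e-6, 1e10]
        t = (math.log(eps) + lam * s / (1 + 2 * s)
             + 0.5 * k * math.log1p(2 * s)) / s
        best = max(best, t)
    return best


# Sanity check in a case with a closed-form quantile:
# k = 2, lam = 0 is an exponential RV with mean 2, so t* = -2*ln(1 - eps).
eps = 1e-5
lb = chernoff_threshold_lb(k=2, lam=0.0, eps=eps)
exact = -2.0 * math.log1p(-eps)
print(lb, exact, lb <= exact)
```

Because each candidate `t(s)` is itself a valid bound, a coarse grid only loosens the result and never invalidates it.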

IRS Assisted Federated Learning: A Broadband Over-the-Air Aggregation Approach

Oct 11, 2023

Abstract:We consider a broadband over-the-air computation empowered model aggregation approach for wireless federated learning (FL) systems and propose to leverage an intelligent reflecting surface (IRS) to combat wireless fading and noise. We first investigate the conventional node-selection based framework, where a few edge nodes are dropped in model aggregation to control the aggregation error. We analyze the performance of this node-selection based framework and derive an upper bound on its performance loss, which is shown to be related to the selected edge nodes. Then, we seek to minimize the mean-squared error (MSE) between the desired global gradient parameters and the actually received ones by optimizing the selected edge nodes, their transmit equalization coefficients, the IRS phase shifts, and the receive factors of the cloud server. By resorting to the matrix lifting technique and difference-of-convex programming, we successfully transform the formulated optimization problem into a convex one and solve it using off-the-shelf solvers. To improve learning performance, we further propose a weight-selection based FL framework. In such a framework, we assign each edge node a proper weight coefficient in model aggregation instead of discarding any of them to reduce the aggregation error, i.e., amplitude alignment of the received local gradient parameters from different edge nodes is not required. We also analyze the performance of this weight-selection based framework and derive an upper bound on its performance loss, followed by minimizing the MSE via optimizing the weight coefficients of the edge nodes, their transmit equalization coefficients, the IRS phase shifts, and the receive factors of the cloud server. Furthermore, we use the MNIST dataset for simulations to evaluate the performance of both node-selection and weight-selection based FL frameworks.

Hardware-in-the-Loop Simulation for Evaluating Communication Impacts on the Wireless-Network-Controlled Robots

Jul 14, 2022

Abstract:More and more robot automation applications have changed to wireless communication, and network performance has a growing impact on robotic systems. This study proposes a hardware-in-the-loop (HiL) simulation methodology for connecting the simulated robot platform to real network devices. This project seeks to provide robotic engineers and researchers with the capability to experiment without heavily modifying the original controller and get more realistic test results that correlate with actual network conditions. We deployed this HiL simulation system in two common cases for wireless-network-controlled robotic applications: (1) safe multi-robot coordination for mobile robots, and (2) human-motion-based teleoperation for manipulators. The HiL simulation system is deployed and tested under various network conditions in all circumstances. The experiment results are analyzed and compared with the previous simulation methods, demonstrating that the proposed HiL simulation methodology can identify a more reliable communication impact on robot systems.

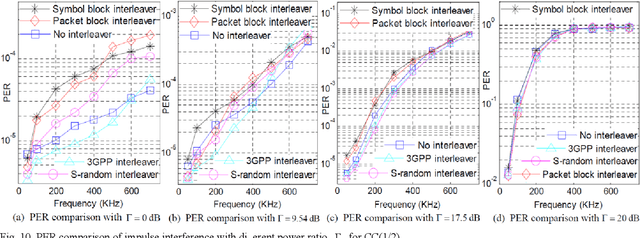

Short-Packet Interleaver against Impulse Interference in Practical Industrial Environments

Mar 01, 2022

Abstract:The most common cause of transmission failure in Wireless High Performance (WirelessHP) target industry environments is impulse interference. As interleavers are commonly used to improve the reliability on the Orthogonal Frequency Division Multiplexing (OFDM) symbol level for long packet transmission, this paper considers the feasibility of applying short-packet bit interleaving to enhance the impulse/burst interference resisting capability on both OFDM symbol and frame level. Using the Universal Software Radio Peripherals (USRP) and PC hardware platform, the Packet Error Rate (PER) performance of interleaved coded short-packet transmission with Convolutional Codes (CC), Reed-Solomon codes (RS) and RS+CC concatenated codes are tested and analyzed. Applying the IEEE 1613 standard for impulse interference generation, extensive PER tests of CC(1/2) and RS(31, 21)+CC(1/2) concatenated codes are performed. With practical experiments, we prove the effectiveness of bit-interleaved coded short-packet transmission in real factory environments. We also investigate how PER performance depends on the interleavers, codes and impulse interference power and frequency.
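To illustrate why bit interleaving helps against burst errors, the sketch below uses a plain rows-by-columns block interleaver, which is an assumption chosen for simplicity and not necessarily the interleaver evaluated in the paper. Each row stands in for one hypothetical codeword, and the helper names (`interleave`, `deinterleave`, `worst_row_errors`) are illustrative.

```python
def interleave(bits, rows, cols):
    """Write row by row into a rows x cols array, read column by column."""
    if len(bits) != rows * cols:
        raise ValueError("input length must equal rows * cols")
    return [bits[r * cols + c] for c in range(cols) for r in range(rows)]


def deinterleave(bits, rows, cols):
    """Invert interleave(): restore the original row-major order."""
    if len(bits) != rows * cols:
        raise ValueError("input length must equal rows * cols")
    out = [None] * (rows * cols)
    for i, b in enumerate(bits):
        c, r = divmod(i, rows)  # position i was read from column c, row r
        out[r * cols + c] = b
    return out


def worst_row_errors(error_flags, rows, cols):
    """Largest number of flagged positions falling in any one row (codeword)."""
    return max(sum(error_flags[r * cols:(r + 1) * cols]) for r in range(rows))


rows, cols = 4, 8
# A burst corrupting 4 consecutive positions (5..8) of the transmitted stream.
burst = [1 if 5 <= i < 9 else 0 for i in range(rows * cols)]

# Without interleaving, the burst lands directly on the row-major codewords.
plain = worst_row_errors(burst, rows, cols)
# With interleaving, the receiver de-interleaves, which scatters the burst.
spread = worst_row_errors(deinterleave(burst, rows, cols), rows, cols)
print(plain, spread)  # prints "3 1"
```

In this toy setup a burst no longer than `rows` places at most one error in each codeword after de-interleaving, which is easier for a code such as CC or RS to correct than several errors concentrated in one codeword.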

Satellite Based Computing Networks with Federated Learning

Nov 20, 2021

Abstract:Driven by the ever-increasing penetration and proliferation of data-driven applications, a new generation of wireless communication, the sixth-generation (6G) mobile system enhanced by artificial intelligence (AI), has attracted substantial research interests. Among various candidate technologies of 6G, low earth orbit (LEO) satellites have appealing characteristics of ubiquitous wireless access. However, the costs of satellite communication (SatCom) are still high, relative to counterparts of ground mobile networks. To support massively interconnected devices with intelligent adaptive learning and reduce expensive traffic in SatCom, we propose federated learning (FL) in LEO-based satellite communication networks. We first review the state-of-the-art LEO-based SatCom and related machine learning (ML) techniques, and then analyze four possible ways of combining ML with satellite networks. The learning performance of the proposed strategies is evaluated by simulation and results reveal that FL-based computing networks improve the performance of communication overheads and latency. Finally, we discuss future research topics along this research direction.

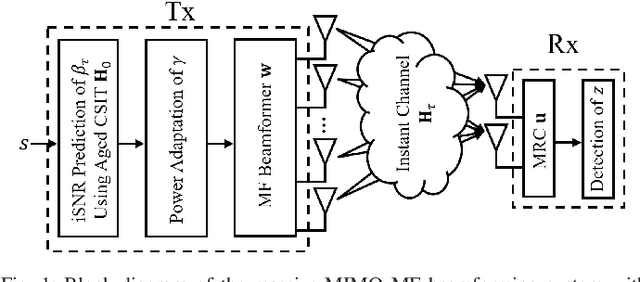

Massive-MIMO MF Beamforming with or without Grouped STBC for Ultra-Reliable Single-Shot Transmission Using Aged CSIT

Oct 06, 2021

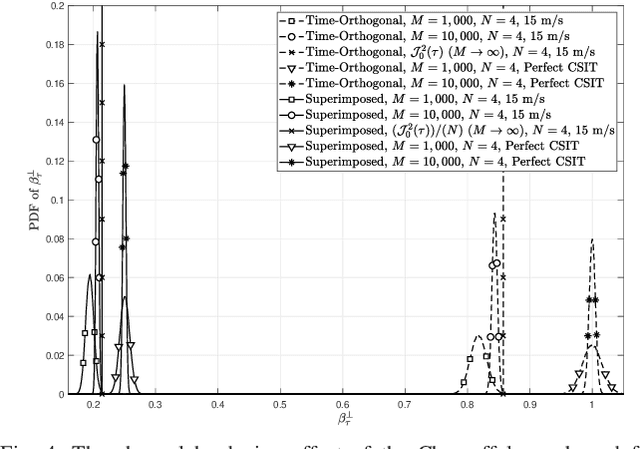

Abstract:The technology of using massive transmit-antennas to enable ultra-reliable single-shot transmission (URSST) is challenged by the transmitter-side channel knowledge (i.e., CSIT) imperfection. When the imperfectness mainly comes from the channel time-variation, the outage probability of the matched filter (MF) transmitter beamforming is investigated based on the first-order Markov model of the aged CSIT. With a fixed transmit-power, the transmitter-side uncertainty of the instantaneous signal-to-noise ratio (iSNR) is mathematically characterized. In order to guarantee the outage probability for every single shot, a transmit-power adaptation approach is proposed to satisfy a pessimistic iSNR requirement, which is predicted using the Chernoff lower bound of the beamforming gain. Our numerical results demonstrate a remarkable transmit-power efficiency when comparing with power control approaches using other lower bounds. In addition, a combinatorial approach of the MF beamforming and grouped space-time block code (G-STBC) is proposed to further mitigate the detrimental impact of the CSIT uncertainty. It is shown, through both theoretical analysis and computer simulations, that the combinatorial approach can further improve the transmit-power efficiency with a good tradeoff between the outage probability and the latency.
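As a hedged illustration of how a non-central $\chi^2$ beamforming gain can arise from aged CSIT, assume (the symbols below are not taken from the abstract) a channel $\mathbf{h} = \rho\,\hat{\mathbf{h}} + \sqrt{1-\rho^2}\,\mathbf{e}$, where $\hat{\mathbf{h}}$ is the aged estimate, $\rho \in [0,1)$ is the temporal correlation, and $\mathbf{e} \sim \mathcal{CN}(\mathbf{0}, \mathbf{I})$ is independent of $\hat{\mathbf{h}}$. With the MF beamformer $\mathbf{w} = \hat{\mathbf{h}}/\|\hat{\mathbf{h}}\|$, the gain conditioned on $\hat{\mathbf{h}}$ is

$$
g = |\mathbf{w}^H \mathbf{h}|^2 = \left|\rho\,\|\hat{\mathbf{h}}\| + \sqrt{1-\rho^2}\,z\right|^2, \quad z = \mathbf{w}^H\mathbf{e} \sim \mathcal{CN}(0,1),
\qquad
\frac{2g}{1-\rho^2} \sim \chi'^2_2(\lambda), \quad \lambda = \frac{2\rho^2\|\hat{\mathbf{h}}\|^2}{1-\rho^2},
$$

where $\chi'^2_2(\lambda)$ denotes a non-central $\chi^2$ distribution with 2 degrees of freedom and noncentrality $\lambda$. Under these assumptions, a lower bound on the outage threshold of this distribution translates directly into a pessimistic prediction of $g$.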
