Zhi-Song Liu

PUFM++: Point Cloud Upsampling via Enhanced Flow Matching

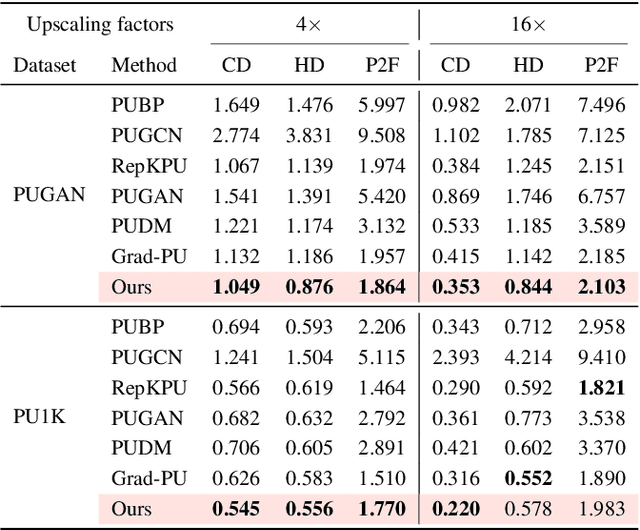

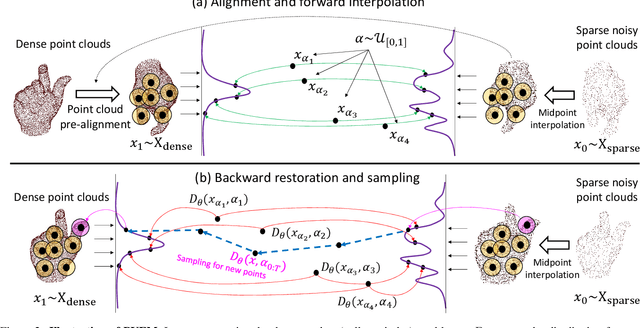

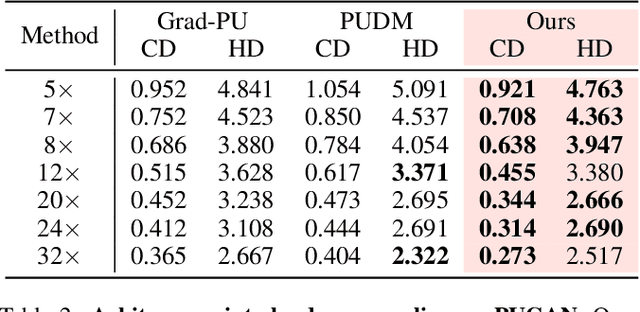

Dec 24, 2025

Abstract:Recent advances in generative modeling have demonstrated strong promise for high-quality point cloud upsampling. In this work, we present PUFM++, an enhanced flow-matching framework for reconstructing dense and accurate point clouds from sparse, noisy, and partial observations. PUFM++ improves flow matching along three key axes: (i) geometric fidelity, (ii) robustness to imperfect input, and (iii) consistency with downstream surface-based tasks. We introduce a two-stage flow-matching strategy that first learns a direct, straight-path flow from sparse inputs to dense targets, and then refines it using noise-perturbed samples to better approximate the terminal marginal distribution. To accelerate and stabilize inference, we propose a data-driven adaptive time scheduler that improves sampling efficiency based on interpolation behavior. We further impose on-manifold constraints during sampling to ensure that generated points remain aligned with the underlying surface. Finally, we incorporate a recurrent interface network (RIN) to strengthen hierarchical feature interactions and boost reconstruction quality. Extensive experiments on synthetic benchmarks and real-world scans show that PUFM++ sets a new state of the art in point cloud upsampling, delivering superior visual fidelity and quantitative accuracy across a wide range of tasks. Code and pretrained models are publicly available at https://github.com/Holmes-Alan/Enhanced_PUFM.

SPIN-ODE: Stiff Physics-Informed Neural ODE for Chemical Reaction Rate Estimation

May 08, 2025

Abstract:Estimating rate constants from complex chemical reactions is essential for advancing detailed chemistry. However, the stiffness inherent in real-world atmospheric chemistry systems poses severe challenges, leading to training instability and poor convergence that hinder effective rate constant estimation using learning-based approaches. To address this, we propose a Stiff Physics-Informed Neural ODE framework (SPIN-ODE) for chemical reaction modelling. Our method introduces a three-stage optimisation process: first, a latent neural ODE learns the continuous and differentiable trajectory between chemical concentrations and their time derivatives; second, an explicit Chemical Reaction Neural Network (CRNN) extracts the underlying rate coefficients based on the learned dynamics; and third, the CRNN is fine-tuned using a neural ODE solver to further improve rate coefficient estimation. Extensive experiments on both synthetic and newly proposed real-world datasets validate the effectiveness and robustness of our approach. As the first work on stiff Neural ODEs for chemical rate coefficient discovery, our study opens promising directions for integrating neural networks with detailed chemistry.

Data-Efficient Limited-Angle CT Using Deep Priors and Regularization

Feb 19, 2025

Abstract:Reconstructing an image from its Radon transform is a fundamental computed tomography (CT) task arising in applications such as X-ray scans. In many practical scenarios, a full 180-degree scan is not feasible, or there is a desire to reduce radiation exposure. In these limited-angle settings, the problem becomes ill-posed, and methods designed for full-view data often leave significant artifacts. We propose a very low-data approach to reconstruct the original image from its Radon transform under severe angle limitations. Because the inverse problem is ill-posed, we combine multiple regularization methods, including Total Variation, a sinogram filter, Deep Image Prior, and a patch-level autoencoder. We use a differentiable implementation of the Radon transform, which allows us to use gradient-based techniques to solve the inverse problem. Our method is evaluated on a dataset from the Helsinki Tomography Challenge 2022, where the goal is to reconstruct a binary disk from its limited-angle sinogram. We use only 12 data points in total (eight for learning a prior and four for hyperparameter selection) and achieve results comparable to the best synthetic data-driven approaches.
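
As a rough illustration of one of the regularizers named in the abstract, the sketch below computes an anisotropic Total Variation penalty on a small 2D image. The function name `total_variation` and the nested-list image format are assumptions made for this example; the abstract does not say which TV variant or implementation the paper uses.

```python
def total_variation(img):
    """Anisotropic total variation of a 2D image given as a list of rows.

    Sums the absolute differences between vertically and horizontally
    adjacent pixels. Hypothetical helper, not the paper's implementation.
    """
    rows, cols = len(img), len(img[0])
    tv = 0.0
    for i in range(rows):
        for j in range(cols):
            if i + 1 < rows:  # difference to the pixel below
                tv += abs(img[i + 1][j] - img[i][j])
            if j + 1 < cols:  # difference to the pixel on the right
                tv += abs(img[i][j + 1] - img[i][j])
    return tv


flat = [[1, 1], [1, 1]]     # piecewise-constant image: no variation
checker = [[0, 1], [1, 0]]  # every neighbouring pair differs by 1
print(total_variation(flat), total_variation(checker))
```

A penalty of this kind favours piecewise-constant reconstructions, which plausibly suits a binary disk target, although the weighting against the other priors is not given in the abstract.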

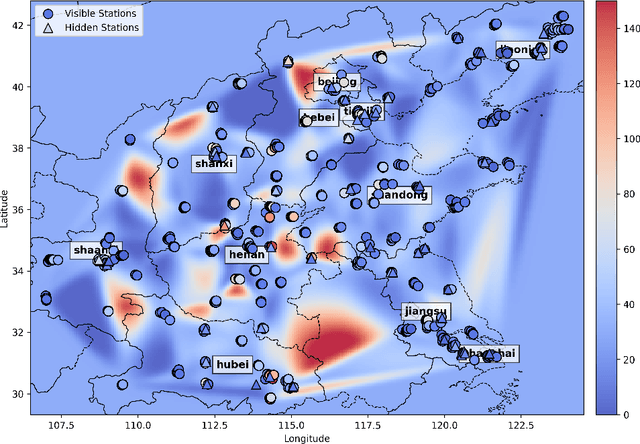

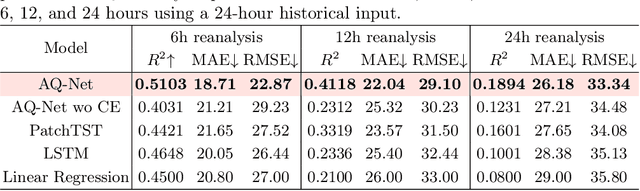

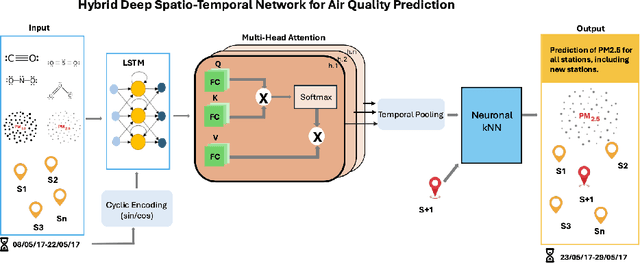

Deep Spatio-Temporal Neural Network for Air Quality Reanalysis

Feb 17, 2025

Abstract:Air quality prediction is key to mitigating health impacts and guiding decisions, yet existing models tend to focus on temporal trends while overlooking spatial generalization. We propose AQ-Net, a spatiotemporal reanalysis model for both observed and unobserved stations in the near future. AQ-Net uses an LSTM and multi-head attention for temporal regression. We also propose a cyclic encoding technique to ensure continuous time representation. To learn fine-grained spatial air quality estimation, we combine AQ-Net with a neural kNN to explore feature-based interpolation, so that we can fill the spatial gaps given coarse observation stations. To demonstrate the efficiency of our model for spatiotemporal reanalysis, we use data from 2013-2017 collected in northern China for PM2.5 analysis. Extensive experiments show that AQ-Net excels in air quality reanalysis, highlighting the potential of hybrid spatio-temporal models to better capture environmental dynamics, especially in urban areas where both spatial and temporal variability are critical.
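
The abstract does not spell out the form of its cyclic encoding. A common way to obtain a continuous representation of periodic time is to map each value to a sine/cosine pair, and the sketch below assumes that form. The names `cyclic_encode` and `cyclic_distance` are hypothetical.

```python
import math


def cyclic_encode(value, period):
    """Map a periodic value (e.g. hour of day) to a point on the unit circle."""
    angle = 2.0 * math.pi * (value % period) / period
    return math.sin(angle), math.cos(angle)


def cyclic_distance(a, b, period):
    """Euclidean distance between two encoded values."""
    sa, ca = cyclic_encode(a, period)
    sb, cb = cyclic_encode(b, period)
    return math.hypot(sa - sb, ca - cb)


# Hour 23 and hour 0 are 23 apart as raw integers, but after encoding
# they are as close as any other pair of consecutive hours.
print(cyclic_distance(23, 0, 24), cyclic_distance(0, 1, 24))
```

With this kind of encoding there is no artificial jump at the wrap-around point (midnight, or the end of a year), which is one plausible reading of "continuous time representation".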

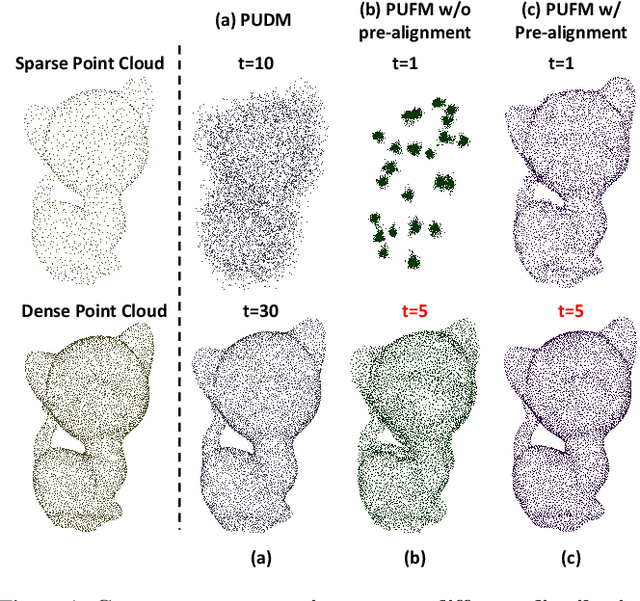

Efficient Point Clouds Upsampling via Flow Matching

Jan 25, 2025

Abstract:Diffusion models are a powerful framework for tackling ill-posed problems, with recent advancements extending their use to point cloud upsampling. Despite their potential, existing diffusion models struggle with inefficiencies as they map Gaussian noise to real point clouds, overlooking the geometric information inherent in sparse point clouds. To address these inefficiencies, we propose PUFM, a flow matching approach to directly map sparse point clouds to their high-fidelity dense counterparts. Our method first applies midpoint interpolation to sparse point clouds, resolving the density mismatch between sparse and dense point clouds. Since point clouds are unordered representations, we introduce a pre-alignment method based on Earth Mover's Distance (EMD) optimization to ensure coherent interpolation between sparse and dense point clouds, which enables a more stable learning path in flow matching. Experiments on synthetic datasets demonstrate that our method delivers superior upsampling quality with fewer sampling steps. Further experiments on ScanNet and KITTI also show that our approach generalizes well on RGB-D point clouds and LiDAR point clouds, making it more practical for real-world applications.
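
To make the idea of a direct source-to-target flow concrete, here is a minimal sketch of straight-path flow matching between two point sets. It assumes the points are already paired one-to-one (the role the abstract assigns to EMD pre-alignment) and uses an oracle velocity in place of a trained network. All function names are hypothetical and this is not the paper's code.

```python
def interpolate(x0, x1, t):
    """Point on the straight path x_t = (1 - t) * x0 + t * x1, per paired point."""
    return [[(1 - t) * a + t * b for a, b in zip(p0, p1)]
            for p0, p1 in zip(x0, x1)]


def target_velocity(x0, x1):
    """Regression target for a straight path: the constant velocity x1 - x0."""
    return [[b - a for a, b in zip(p0, p1)] for p0, p1 in zip(x0, x1)]


def euler_sample(x0, velocity_fn, steps):
    """Integrate dx/dt = v(x, t) from t = 0 to t = 1 with fixed Euler steps."""
    x = [list(p) for p in x0]
    dt = 1.0 / steps
    for k in range(steps):
        v = velocity_fn(x, k * dt)
        x = [[c + dt * vc for c, vc in zip(p, vp)] for p, vp in zip(x, v)]
    return x


# Toy example: two 3D points, already paired source -> target.
sparse = [[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]]
dense = [[0.0, 1.0, 0.0], [1.0, 1.0, 1.0]]

# Stand-in for a trained network: returns the exact straight-path velocity.
oracle = lambda x, t: target_velocity(sparse, dense)
print(euler_sample(sparse, oracle, steps=4))
```

Because the ideal velocity along a straight path is constant, a few Euler steps already reach the target in this toy setting. A learned velocity field is only approximately constant, so the number of steps needed in practice is an empirical question.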

Bridging Text and Image for Artist Style Transfer via Contrastive Learning

Oct 12, 2024

Abstract:Image style transfer has attracted widespread attention in the past few years. Despite its remarkable results, it requires additional style images available as references, making it less flexible and less convenient. Using text is the most natural way to describe the style. More importantly, text can describe implicit abstract styles, like styles of specific artists or art movements. In this paper, we propose Contrastive Learning for Artistic Style Transfer (CLAST), which leverages advanced image-text encoders to control arbitrary style transfer. We introduce a supervised contrastive training strategy to effectively extract style descriptions from the image-text model (i.e., CLIP), which aligns stylization with the text description. To this end, we also propose a novel and efficient adaLN-based state space model that explores style-content fusion. Finally, we achieve text-driven image style transfer. Extensive experiments demonstrate that our approach outperforms the state-of-the-art methods in artistic style transfer. More importantly, it does not require online fine-tuning and can render a 512x512 image in 0.03s.

Super-Resolution works for coastal simulations

Aug 29, 2024

Abstract:Learning fine-scale details of a coastal ocean simulation from a coarse representation is a challenging task. For real-world applications, high-resolution simulations are necessary to advance understanding of many coastal processes, specifically, to predict flooding resulting from tsunamis and storm surges. We propose a Deep Network for Coastal Super-Resolution (DNCSR) for spatiotemporal enhancement to efficiently learn the high-resolution numerical solution. Given images of coastal simulations produced on low-resolution computational meshes using low polynomial order discontinuous Galerkin discretizations and a coarse temporal resolution, the proposed DNCSR learns to produce high-resolution free surface elevation and velocity visualizations in both time and space. To efficiently model the dynamic changes over time and space, we propose grid-aware spatiotemporal attention to project the temporal features to the spatial domain for non-local feature matching. The coordinate information is also utilized via positional encoding. For the final reconstruction, we use the spatiotemporal bilinear operation to interpolate the missing frames and then expand the feature maps to the frequency domain for residual mapping. Besides data-driven losses, the proposed physics-informed loss guarantees gradient consistency and momentum changes. Their combination contributes to an overall 24% improvement in RMSE. To train the proposed model, we propose a large-scale coastal simulation dataset and use it for model optimization and evaluation. Our method shows superior super-resolution quality and fast computation compared to the state-of-the-art methods.

Neural Network Emulator for Atmospheric Chemical ODE

Aug 06, 2024

Abstract:Modeling atmospheric chemistry is complex and computationally intense. Given the recent success of deep neural networks in digital signal processing, we propose a Neural Network Emulator for fast chemical concentration modeling. We consider atmospheric chemistry as a time-dependent Ordinary Differential Equation. To extract the hidden correlations between initial states and future time evolution, we propose ChemNNE, an attention-based Neural Network Emulator (NNE) that can model the atmospheric chemistry as a neural ODE process. To efficiently simulate the chemical changes, we propose a sinusoidal time embedding to estimate the oscillating tendency over time. More importantly, we use the Fourier neural operator to model the ODE process for efficient computation. We also propose three physics-informed losses to supervise the training optimization. To evaluate our model, we propose a large-scale chemical dataset that can be used for neural network training and evaluation. Extensive experiments show that our approach achieves state-of-the-art performance in modeling accuracy and computational speed.

StyleMamba: State Space Model for Efficient Text-driven Image Style Transfer

May 08, 2024

Abstract:We present StyleMamba, an efficient image style transfer framework that translates text prompts into corresponding visual styles while preserving the content integrity of the original images. Existing text-guided stylization requires hundreds of training iterations and takes a lot of computing resources. To speed up the process, we propose a conditional State Space Model for Efficient Text-driven Image Style Transfer, dubbed StyleMamba, that sequentially aligns the image features to the target text prompts. To enhance the local and global style consistency between text and image, we propose masked and second-order directional losses that optimize the stylization direction, significantly reducing the training iterations by 5 times and the inference time by 3 times. Extensive experiments and qualitative evaluation confirm the robust and superior stylization performance of our methods compared to the existing baselines.

FunnyNet-W: Multimodal Learning of Funny Moments in Videos in the Wild

Jan 08, 2024

Abstract:Automatically understanding funny moments (i.e., the moments that make people laugh) when watching comedy is challenging, as they relate to various features, such as body language, dialogues and culture. In this paper, we propose FunnyNet-W, a model that relies on cross- and self-attention for visual, audio and text data to predict funny moments in videos. Unlike most methods that rely on ground truth data in the form of subtitles, in this work we exploit modalities that come naturally with videos: (a) video frames as they contain visual information indispensable for scene understanding, (b) audio as it contains higher-level cues associated with funny moments, such as intonation, pitch and pauses and (c) text automatically extracted with a speech-to-text model as it can provide rich information when processed by a Large Language Model. To acquire labels for training, we propose an unsupervised approach that spots and labels funny audio moments. We provide experiments on five datasets: the sitcoms TBBT, MHD, MUStARD, Friends, and the TED talk UR-Funny. Extensive experiments and analysis show that FunnyNet-W successfully exploits visual, auditory and textual cues to identify funny moments, while our findings reveal FunnyNet-W's ability to predict funny moments in the wild. FunnyNet-W sets the new state of the art for funny moment detection with multimodal cues on all datasets with and without using ground truth information.
