Yutian Li

On the Decomposition of Differential Game

Nov 06, 2024

Abstract:To understand the complexity of learning dynamics in differential games, we decompose the game into components where the dynamics are well understood. One possible tool is Helmholtz's theorem, which decomposes a vector field into a potential component and a harmonic component. This has been shown to be effective in finite and normal-form games. However, applying Helmholtz's theorem by connecting it with the Hodge theorem on $\mathbb{R}^n$ (the strategy space of differential games) is non-trivial due to the non-compactness of $\mathbb{R}^n$. Bridging the dynamic-strategic disconnect through the Hodge/Helmholtz theorem in differential games was therefore left as an open problem \cite{letcher2019differentiable}. In this work, we provide two decompositions of differential games to answer this question: the first into an exact scalar potential part, a near vector potential part, and a non-strategic part; the second into a near scalar potential part, an exact vector potential part, and a non-strategic part. We show that scalar potential games coincide with the potential games proposed by \cite{monderer1996potential}, where the gradient descent dynamic can successfully find the Nash equilibrium. For the vector potential game, we show that the individual gradient field is divergence-free, in which case the gradient descent dynamic may be either divergent or recurrent.
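
The contrast drawn in the last two sentences of the abstract can be illustrated with a toy sketch. This is not the paper's construction: the two example games, the function names (`simulate`, `potential_field`, `rotational_field`), and the step size are assumptions chosen only for illustration. The first field is the gradient of a scalar potential, so simultaneous gradient descent shrinks toward the equilibrium at the origin. The second is the individual gradient field of the bilinear game with losses $xy$ and $-xy$, which is divergence-free, and the discrete gradient step moves away from the origin instead.

```python
import math

def simulate(field, start, step=0.1, iters=200):
    """Run simultaneous gradient descent and return the final distance from the origin."""
    x, y = start
    for _ in range(iters):
        gx, gy = field(x, y)
        # Both players update at the same time from the current point.
        x, y = x - step * gx, y - step * gy
    return math.hypot(x, y)

def potential_field(x, y):
    # Assumed example: gradient of phi(x, y) = 0.5*x^2 + 0.5*y^2 + 0.5*x*y.
    return x + 0.5 * y, y + 0.5 * x

def rotational_field(x, y):
    # Assumed example: losses l1 = x*y and l2 = -x*y give the individual
    # gradient field (dl1/dx, dl2/dy) = (y, -x), whose divergence is zero.
    return y, -x

potential_norm = simulate(potential_field, (1.0, 1.0))
rotational_norm = simulate(rotational_field, (1.0, 1.0))

print("potential game, final distance from origin:", potential_norm)
print("divergence-free game, final distance from origin:", rotational_norm)
```

In the divergence-free example each discrete step multiplies the squared distance from the origin by $1 + \eta^2$ for step size $\eta$, so the iterates spiral outward, whereas the continuous-time flow of the same field circles the origin, which matches the "divergent or recurrent" behaviour mentioned in the abstract.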

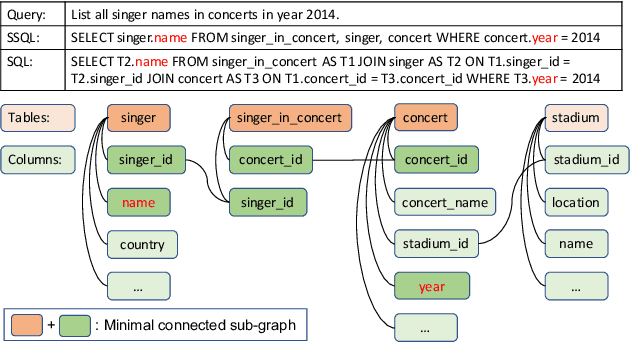

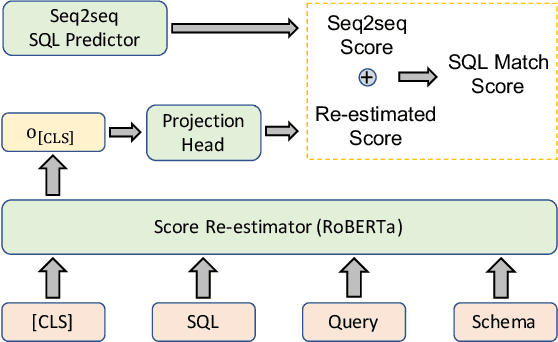

T5-SR: A Unified Seq-to-Seq Decoding Strategy for Semantic Parsing

Jun 14, 2023

Abstract:Translating natural language queries into SQL in a seq2seq manner has attracted much attention recently. However, compared with abstract-syntax-tree-based SQL generation, seq2seq semantic parsers face many more challenges, including poor prediction quality for schema information and poor semantic coherence between natural language queries and SQL. This paper analyses these difficulties and proposes a seq2seq-oriented decoding strategy called SR, which includes a new intermediate representation, SSQL, and a reranking method with a score re-estimator, addressing the two obstacles respectively. Experimental results demonstrate the effectiveness of our proposed techniques, and T5-SR-3b achieves new state-of-the-art results on the Spider dataset.

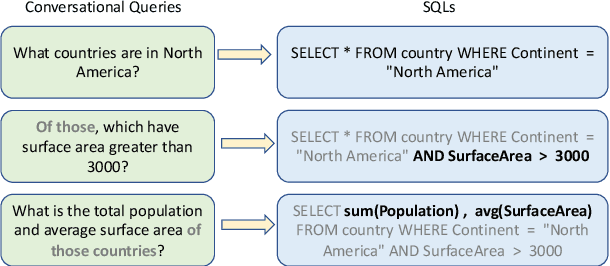

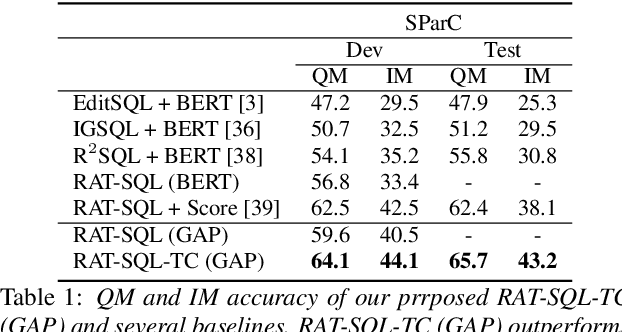

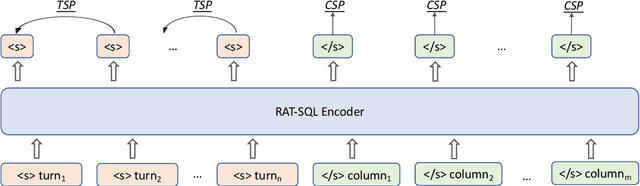

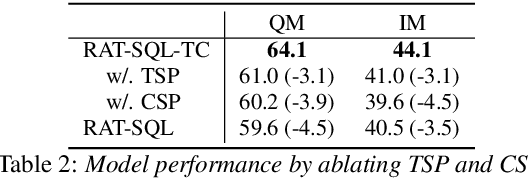

Pay More Attention to History: A Context Modeling Strategy for Conversational Text-to-SQL

Dec 16, 2021

Abstract:Conversational text-to-SQL aims at converting multi-turn natural language queries into their corresponding SQL representations. One of the most intractable problems of conversational text-to-SQL is modeling the semantics of multi-turn queries and gathering the information required for the current query. This paper shows that explicitly modeling the semantic changes introduced by each added turn, together with a summarization of the whole context, improves performance when converting conversational queries into SQL. In particular, we propose two conversational modeling tasks, one at turn grain and one at conversation grain. These two tasks serve simply as auxiliary training tasks to help with multi-turn conversational semantic parsing. We conduct empirical studies and achieve new state-of-the-art results on a large-scale open-domain conversational text-to-SQL dataset. The results demonstrate that the proposed mechanism significantly improves the performance of multi-turn semantic parsing.

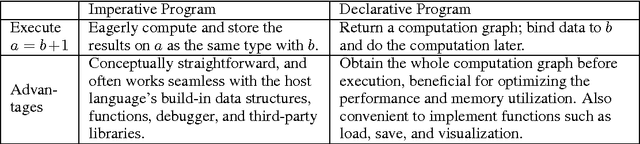

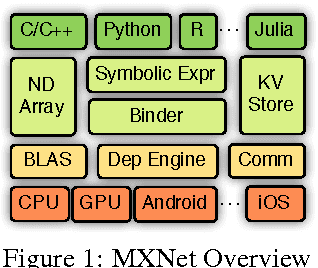

MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems

Dec 03, 2015

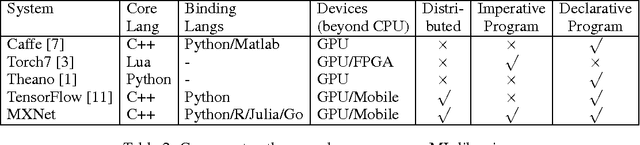

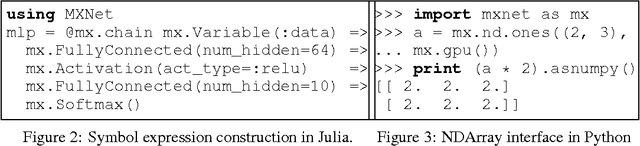

Abstract:MXNet is a multi-language machine learning (ML) library to ease the development of ML algorithms, especially for deep neural networks. Embedded in the host language, it blends declarative symbolic expression with imperative tensor computation. It offers automatic differentiation to derive gradients. MXNet is computation- and memory-efficient and runs on various heterogeneous systems, ranging from mobile devices to distributed GPU clusters. This paper describes both the API design and the system implementation of MXNet, and explains how the embedding of both symbolic expression and tensor operation is handled in a unified fashion. Our preliminary experiments reveal promising results on large-scale deep neural network applications using multiple GPU machines.
