Yuki Uranishi

3DGesPolicy: Phoneme-Aware Holistic Co-Speech Gesture Generation Based on Action Control

Jan 26, 2026

Abstract: Generating holistic co-speech gestures that integrate full-body motion with facial expressions suffers from semantically incoherent body-motion coordination and spatially unstable, meaningless movements, both caused by existing part-decomposed or frame-level regression methods. We introduce 3DGesPolicy, a novel action-based framework that reformulates holistic gesture generation as a continuous trajectory control problem through diffusion policy from robotics. By modeling frame-to-frame variations as unified holistic actions, our method effectively learns inter-frame holistic gesture motion patterns and ensures both spatially and semantically coherent movement trajectories that adhere to realistic motion manifolds. To further bridge the gap in expressive alignment, we propose a Gesture-Audio-Phoneme (GAP) fusion module that can deeply integrate and refine multi-modal signals, ensuring structured and fine-grained alignment between speech semantics, body motion, and facial expressions. Extensive quantitative and qualitative experiments on the BEAT2 dataset demonstrate the effectiveness of 3DGesPolicy compared with other state-of-the-art methods in generating natural, expressive, and highly speech-aligned holistic gestures.
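The idea of treating frame-to-frame variations as actions can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `poses_to_actions` and `rollout` and the two-value pose vectors are hypothetical, and the diffusion policy that would actually predict the actions is omitted. The sketch only shows that a pose sequence can be encoded as per-frame deltas and recovered by accumulating them from an initial pose.

```python
def poses_to_actions(poses):
    """Encode a pose sequence as frame-to-frame deltas (one action per step)."""
    return [
        [nxt_v - prev_v for prev_v, nxt_v in zip(prev, nxt)]
        for prev, nxt in zip(poses, poses[1:])
    ]


def rollout(initial_pose, actions):
    """Rebuild a trajectory by applying each action to the previous pose."""
    poses = [list(initial_pose)]
    for action in actions:
        poses.append([p + d for p, d in zip(poses[-1], action)])
    return poses


# Hypothetical 3-frame sequence with a 2-value pose vector per frame.
poses = [[0.0, 1.0], [0.5, 1.5], [1.5, 1.0]]
actions = poses_to_actions(poses)
reconstructed = rollout(poses[0], actions)
```

Because each pose depends on the previous one, a model that outputs actions rather than absolute per-frame poses produces a connected trajectory by construction.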

MR-UBi: Mixed Reality-Based Underwater Robot Arm Teleoperation System with Reaction Torque Indicator via Bilateral Control

Oct 23, 2025

Abstract: We present a mixed reality-based underwater robot arm teleoperation system with a reaction torque indicator via bilateral control (MR-UBi). The reaction torque indicator (RTI) overlays a color- and length-coded torque bar in the MR-HMD, enabling seamless integration of visual and haptic feedback during underwater robot arm teleoperation. User studies with sixteen participants compared MR-UBi against a bilateral-control baseline. MR-UBi significantly improved grasping-torque control accuracy, increasing the time within the optimal torque range and reducing the time in both the low and high grasping-torque ranges during lift and pick-and-place tasks with objects of different stiffness. Subjective evaluations further showed higher usability (SUS) and lower workload (NASA-TLX). Overall, the results confirm that MR-UBi enables more stable, accurate, and user-friendly underwater robot-arm teleoperation through the integration of visual and haptic feedback. For additional material, please check: https://mertcookimg.github.io/mr-ubi
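A time-in-range measure of the kind described above can be sketched as follows. This is an illustrative assumption about how such a metric might be computed, not the paper's evaluation code: the function name `torque_range_fractions`, the threshold values, and the sample torques are all hypothetical, and uniformly spaced samples are assumed so that sample fractions equal time fractions.

```python
def torque_range_fractions(torques, low, high):
    """Return the fraction of samples below, within, and above [low, high]."""
    if not torques:
        raise ValueError("torques must not be empty")
    if low > high:
        raise ValueError("low must not exceed high")
    n = len(torques)
    below = sum(1 for t in torques if t < low)
    above = sum(1 for t in torques if t > high)
    within = n - below - above
    return {"low": below / n, "optimal": within / n, "high": above / n}


# Hypothetical grasping-torque samples and thresholds.
samples = [0.1, 0.3, 0.5, 0.7]
fractions = torque_range_fractions(samples, low=0.2, high=0.6)
```

A larger `optimal` fraction together with smaller `low` and `high` fractions corresponds to the improvement the abstract reports.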

Bi-LAT: Bilateral Control-Based Imitation Learning via Natural Language and Action Chunking with Transformers

Apr 02, 2025

Abstract: We present Bi-LAT, a novel imitation learning framework that unifies bilateral control with natural language processing to achieve precise force modulation in robotic manipulation. Bi-LAT leverages joint position, velocity, and torque data from leader-follower teleoperation while also integrating visual and linguistic cues to dynamically adjust applied force. By encoding human instructions such as "softly grasp the cup" or "strongly twist the sponge" through a multimodal Transformer-based model, Bi-LAT learns to distinguish nuanced force requirements in real-world tasks. We demonstrate Bi-LAT's performance in (1) a unimanual cup-stacking scenario where the robot accurately modulates grasp force based on language commands, and (2) a bimanual sponge-twisting task that requires coordinated force control. Experimental results show that Bi-LAT effectively reproduces the instructed force levels, particularly when incorporating SigLIP among tested language encoders. Our findings demonstrate the potential of integrating natural language cues into imitation learning, paving the way for more intuitive and adaptive human-robot interaction. For additional material, please visit: https://mertcookimg.github.io/bi-lat/

MRHaD: Mixed Reality-based Hand-Drawn Map Editing Interface for Mobile Robot Navigation

Apr 01, 2025

Abstract: Mobile robot navigation systems are increasingly relied upon in dynamic and complex environments, yet they often struggle with map inaccuracies and the resulting inefficient path planning. This paper presents MRHaD, a Mixed Reality-based Hand-drawn Map Editing Interface that enables intuitive, real-time map modifications through natural hand gestures. By integrating the MR head-mounted display with the robotic navigation system, operators can directly create hand-drawn restricted zones (HRZ), thereby bridging the gap between 2D map representations and the real-world environment. Comparative experiments against conventional 2D editing methods demonstrate that MRHaD significantly improves editing efficiency, map accuracy, and overall usability, contributing to safer and more efficient mobile robot operations. The proposed approach provides a robust technical foundation for advancing human-robot collaboration and establishing innovative interaction models that enhance the hybrid future of robotics and human society. For additional material, please check: https://mertcookimg.github.io/mrhad/

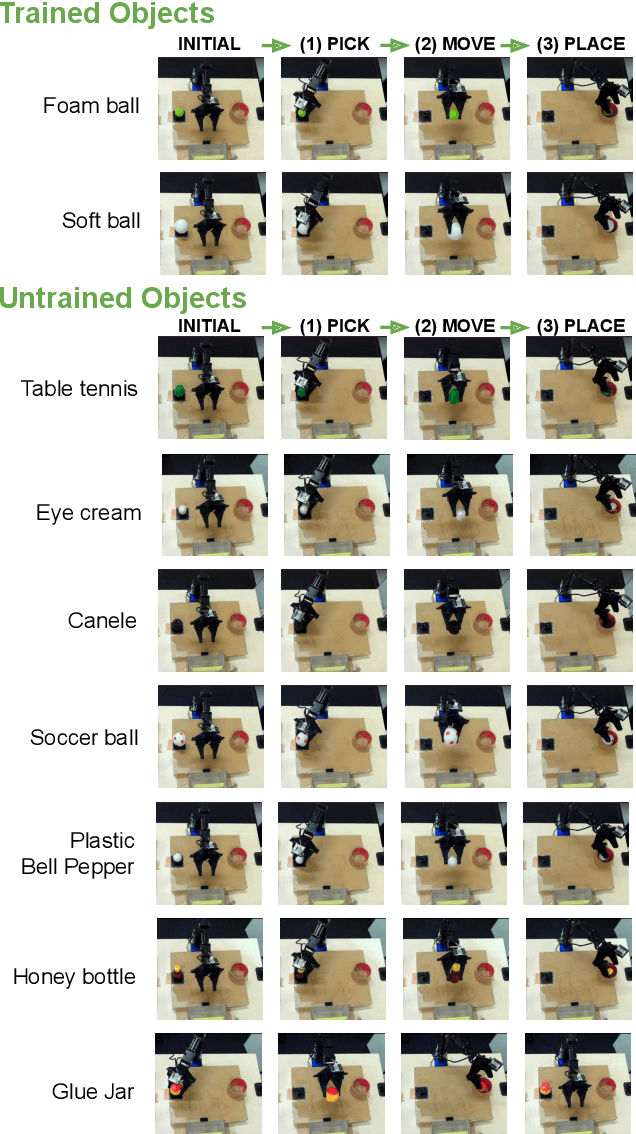

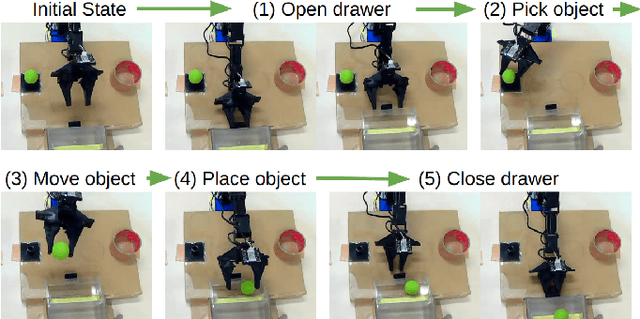

DABI: Evaluation of Data Augmentation Methods Using Downsampling in Bilateral Control-Based Imitation Learning with Images

Oct 06, 2024

Abstract: Autonomous robot manipulation is a complex and continuously evolving robotics field. This paper focuses on data augmentation methods in imitation learning. Imitation learning consists of three stages: data collection from experts, model learning, and execution. However, collecting expert data requires manual effort and is time-consuming. Additionally, as sensors have different data acquisition intervals, preprocessing such as downsampling to match the lowest frequency is necessary. Downsampling enables data augmentation and also contributes to the stabilization of robot operations. In light of this background, this paper proposes the Data Augmentation Method for Bilateral Control-Based Imitation Learning with Images, called "DABI". DABI collects robot joint angles, velocities, and torques at 1000 Hz, and uses images from gripper and environmental cameras captured at 100 Hz as the basis for data augmentation. This enables a tenfold increase in data. In this paper, we collected just 5 expert demonstration datasets. We trained the bilateral control Bi-ACT model with the unaltered dataset and two augmentation methods for comparative experiments and conducted real-world experiments. The results confirmed a significant improvement in success rates, thereby proving the effectiveness of DABI. For additional material, please check: https://mertcookimg.github.io/dabi
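One plausible reading of how downsampling from 1000 Hz to 100 Hz yields a tenfold increase in data is that each of the ten possible starting offsets gives a distinct 100 Hz sequence. The sketch below illustrates that reading only. The abstract does not specify DABI's exact procedure, so the function name `offset_downsample` and the synthetic joint-angle values are assumptions, and pairing each sequence with the 100 Hz camera images is left out.

```python
def offset_downsample(samples, factor):
    """Split one high-rate sequence into `factor` low-rate sequences.

    Sequence k keeps samples k, k + factor, k + 2 * factor, ...
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    # Drop the ragged tail so every output sequence has the same length.
    usable = len(samples) - len(samples) % factor
    return [samples[k:usable:factor] for k in range(factor)]


# One second of hypothetical 1000 Hz joint-angle readings.
joint_angles = [i * 0.001 for i in range(1000)]
augmented = offset_downsample(joint_angles, factor=10)
print(len(augmented), len(augmented[0]))
```

Each of the ten resulting sequences runs at 100 Hz, so one demonstration contributes ten training sequences instead of one.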

3DFacePolicy: Speech-Driven 3D Facial Animation with Diffusion Policy

Sep 17, 2024

Abstract: Audio-driven 3D facial animation has made immersive progress both in research and application developments. The newest approaches focus on Transformer-based methods and diffusion-based methods. However, there is still a gap in vividness and emotional expression between the generated animation and a real human face. To tackle this limitation, we propose 3DFacePolicy, a diffusion policy model for 3D facial animation prediction. This method generates variable and realistic human facial movements by predicting the 3D vertex trajectory on the 3D facial template with diffusion policy instead of generating the face for every frame. It takes audio and vertex states as observations to predict the vertex trajectory and imitate real human facial expressions, which preserves the continuous and natural flow of human emotions. The experiments show that our approach is effective at synthesizing variable and dynamic facial motion.

MRNaB: Mixed Reality-based Robot Navigation Interface using Optical-see-through MR-beacon

Mar 28, 2024

Abstract: Recent advancements in robotics have led to the development of numerous interfaces to enhance the intuitiveness of robot navigation. However, the reliance on traditional 2D displays imposes limitations on the simultaneous visualization of information. Mixed Reality (MR) technology addresses this issue by enhancing the dimensionality of information visualization, allowing users to perceive multiple pieces of information concurrently. This paper proposes a Mixed Reality-based robot navigation interface using an optical-see-through MR-beacon (MRNaB), a novel approach that incorporates an MR-beacon, situated atop the real-world environment, to function as a signal transmitter for robot navigation. This MR-beacon is designed to be persistent, eliminating the need for repeated navigation inputs for the same location. Our system is built around four primary functions: "Add", "Move", "Delete", and "Select". These allow for the addition of an MR-beacon, its relocation, its deletion, and the selection of an MR-beacon for navigation purposes, respectively. The effectiveness of the proposed method was then validated through experiments comparing it with the traditional 2D system. As a result, MRNaB was shown to improve user performance when navigating to a given location, both subjectively and objectively. For additional material, please check: https://mertcookimg.github.io/mrnab

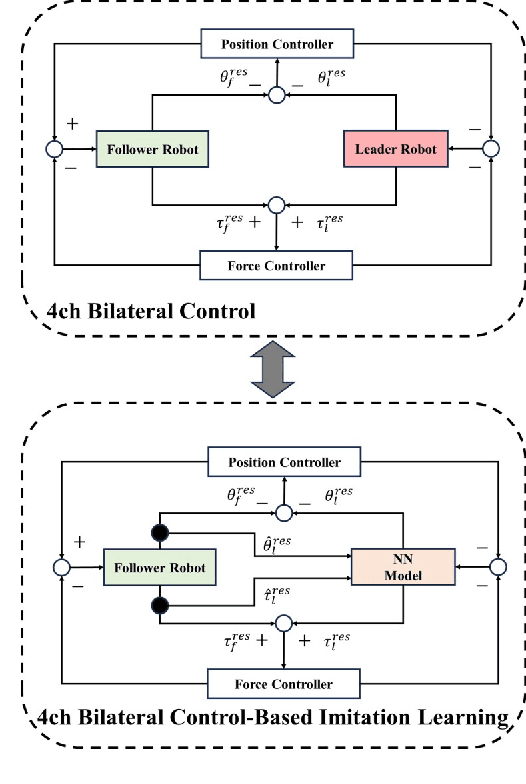

ILBiT: Imitation Learning for Robot Using Position and Torque Information based on Bilateral Control with Transformer

Feb 05, 2024

Abstract: Autonomous manipulation in robot arms is a complex and evolving field of study in robotics. This paper introduces an innovative approach to this challenge by focusing on imitation learning (IL). Unlike traditional imitation methods, our approach uses IL based on bilateral control, allowing for more precise and adaptable robot movements. Conventional bilateral control-based IL methods have relied on Long Short-Term Memory (LSTM) networks. In this paper, we present IL for robots using position and torque information based on Bilateral control with Transformer (ILBiT). This proposed method employs the Transformer model, known for its robust performance in handling diverse datasets and its capability to surpass LSTM's limitations, especially in tasks requiring detailed force adjustments. A standout feature of ILBiT is its high-frequency operation at 100 Hz, which significantly improves the system's adaptability and response to varying environments and objects of different hardness levels. The effectiveness of the Transformer-based ILBiT method is demonstrated through comprehensive real-world experiments.

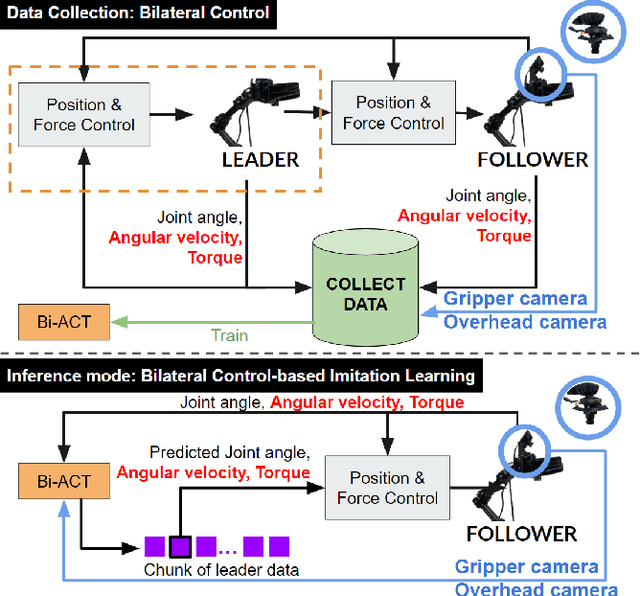

Bi-ACT: Bilateral Control-Based Imitation Learning via Action Chunking with Transformer

Jan 31, 2024

Abstract: Autonomous manipulation in robot arms is a complex and evolving field of study in robotics. This work stands at the intersection of two innovative approaches in the field of robotics and machine learning. Inspired by the Action Chunking with Transformer (ACT) model, which employs joint location and image data to predict future movements, our work integrates principles of Bilateral Control-Based Imitation Learning to enhance robotic control. Our objective is to synergize these techniques, thereby creating a more robust and efficient control mechanism. In our approach, the data collected from the environment are images from the gripper and overhead cameras, along with the joint angles, angular velocities, and forces of the follower robot using bilateral control. The model is designed to predict the subsequent steps for the joint angles, angular velocities, and forces of the leader robot. This predictive capability is crucial for implementing effective bilateral control in the follower robot, allowing for more nuanced and responsive maneuvering.

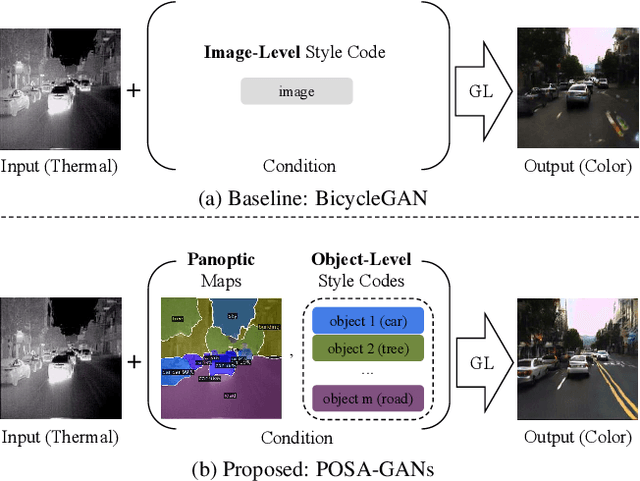

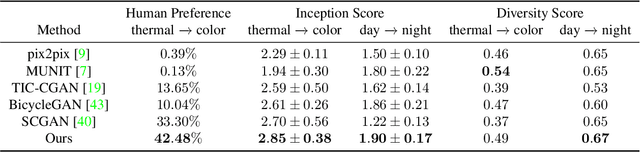

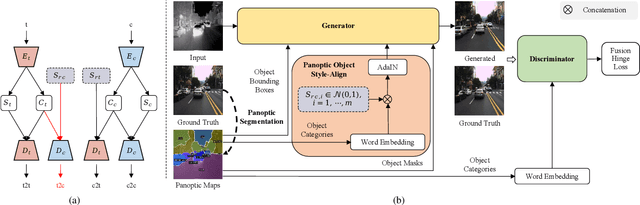

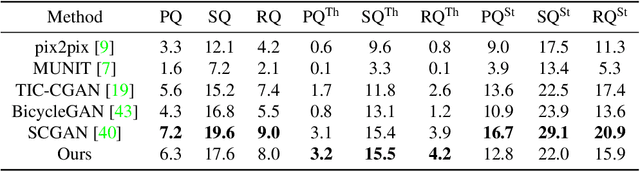

Panoptic-based Object Style-Align for Image-to-Image Translation

Dec 03, 2021

Abstract: Despite remarkable recent progress in image translation, complex scenes with multiple discrepant objects remain a challenging problem. The translated images have low fidelity, render tiny objects with fewer details, and yield unsatisfactory object recognition performance. Without thorough object perception (i.e., bounding boxes, categories, and masks) of the image as prior knowledge, the style transformation of each object is difficult to track in the image translation process. We propose panoptic-based object style-align generative adversarial networks (POSA-GANs) for image-to-image translation together with a compact panoptic segmentation dataset. The panoptic segmentation model is utilized to extract panoptic-level perception (i.e., overlap-removed foreground object instances and background semantic regions in the image). This is utilized to guide the alignment between the object content codes of the input domain image and object style codes sampled from the style space of the target domain. The style-aligned object representations are further transformed to obtain a precise boundary layout for higher-fidelity object generation. The proposed method was systematically compared with different competing methods and obtained significant improvement in both image quality and object recognition performance for translated images.
