Yuanhang Zou

SlotGAT: Slot-based Message Passing for Heterogeneous Graph Neural Network

May 03, 2024Abstract:Heterogeneous graphs are ubiquitous to model complex data. There are urgent needs on powerful heterogeneous graph neural networks to effectively support important applications. We identify a potential semantic mixing issue in existing message passing processes, where the representations of the neighbors of a node $v$ are forced to be transformed to the feature space of $v$ for aggregation, though the neighbors are in different types. That is, the semantics in different node types are entangled together into node $v$'s representation. To address the issue, we propose SlotGAT with separate message passing processes in slots, one for each node type, to maintain the representations in their own node-type feature spaces. Moreover, in a slot-based message passing layer, we design an attention mechanism for effective slot-wise message aggregation. Further, we develop a slot attention technique after the last layer of SlotGAT, to learn the importance of different slots in downstream tasks. Our analysis indicates that the slots in SlotGAT can preserve different semantics in various feature spaces. The superiority of SlotGAT is evaluated against 13 baselines on 6 datasets for node classification and link prediction. Our code is at https://github.com/scottjiao/SlotGAT_ICML23/.

Pairwise Learning for Neural Link Prediction

Dec 22, 2021

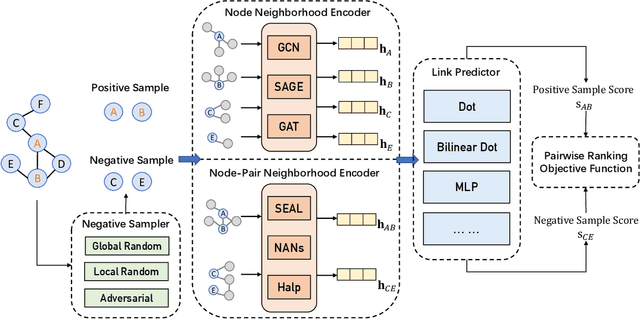

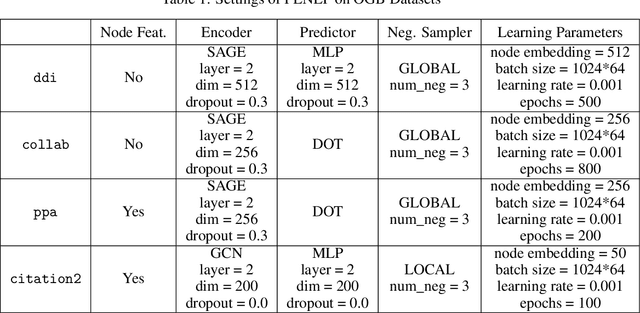

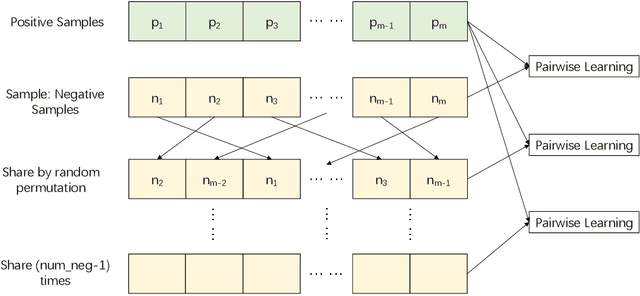

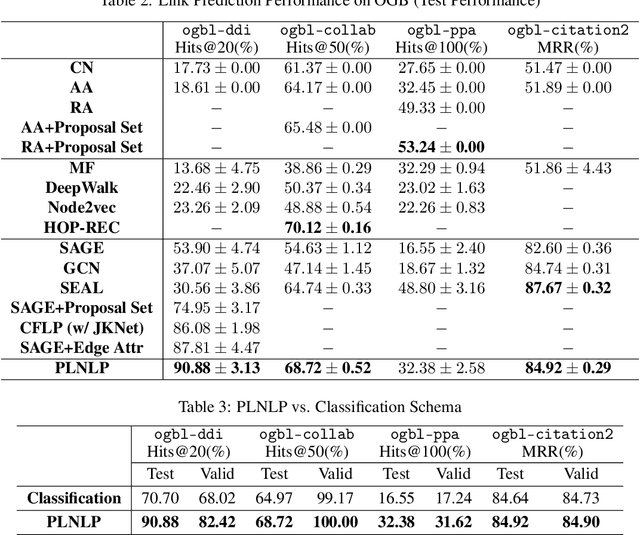

Abstract:In this paper, we aim at providing an effective Pairwise Learning Neural Link Prediction (PLNLP) framework. The framework treats link prediction as a pairwise learning to rank problem and consists of four main components, i.e., neighborhood encoder, link predictor, negative sampler and objective function. The framework is flexible that any generic graph neural convolution or link prediction specific neural architecture could be employed as neighborhood encoder. For link predictor, we design different scoring functions, which could be selected based on different types of graphs. In negative sampler, we provide several sampling strategies, which are problem specific. As for objective function, we propose to use an effective ranking loss, which approximately maximizes the standard ranking metric AUC. We evaluate the proposed PLNLP framework on 4 link property prediction datasets of Open Graph Benchmark, including ogbl-ddi, ogbl-collab, ogbl-ppa and ogbl-ciation2. PLNLP achieves top 1 performance on ogbl-ddi and ogbl-collab, and top 2 performance on ogbl-ciation2 only with basic neural architecture. The performance demonstrates the effectiveness of PLNLP.

Long Short-Term Temporal Meta-learning in Online Recommendation

May 08, 2021

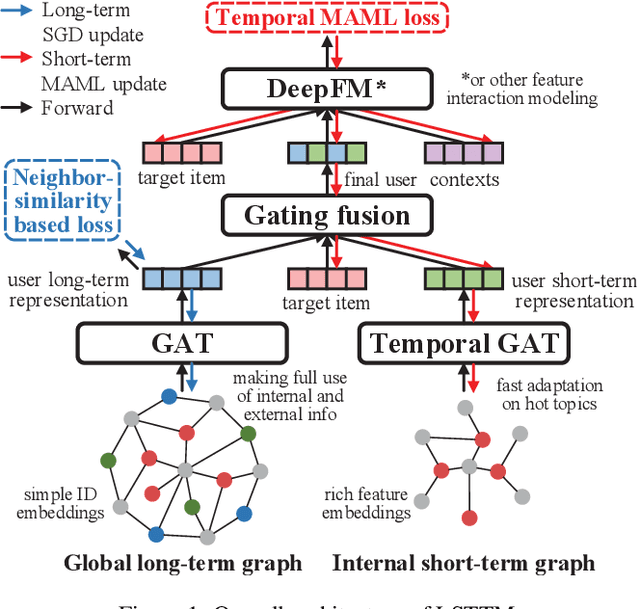

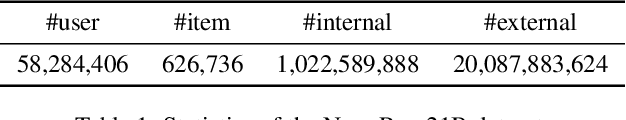

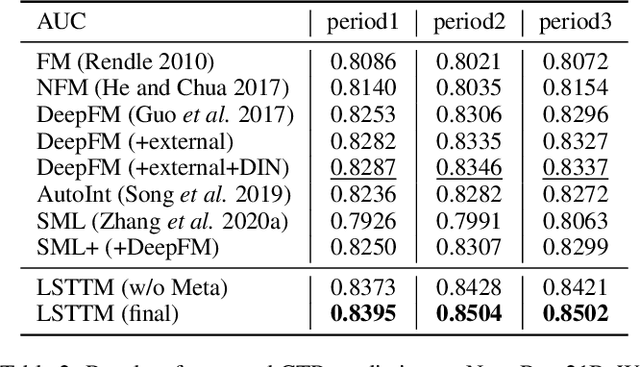

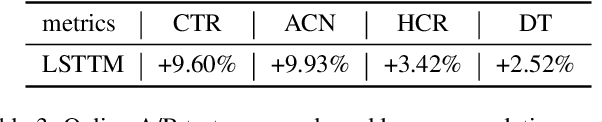

Abstract:An effective online recommendation system should jointly capture user long-term and short-term preferences in both user internal and external behaviors. However, it is challenging to conduct fast adaptations to variable new topics while making full use of all information in large-scale systems, due to the online efficiency limitation and complexity of real-world systems. To address this, we propose a novel Long Short-Term Temporal Meta-learning framework (LSTTM) for online recommendation, which captures user preferences from a global long-term graph and an internal short-term graph. To improve online learning for short-term interests, we propose a temporal MAML method with asynchronous online updating for fast adaptation, which regards recommendations at different time periods as different tasks. In experiments, LSTTM achieves significant improvements on both offline and online evaluations. LSTTM has also been deployed on a widely-used online system, affecting millions of users. The idea of temporal MAML can be easily transferred to other models and temporal tasks.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge