Long Short-Term Temporal Meta-learning in Online Recommendation


May 08, 2021

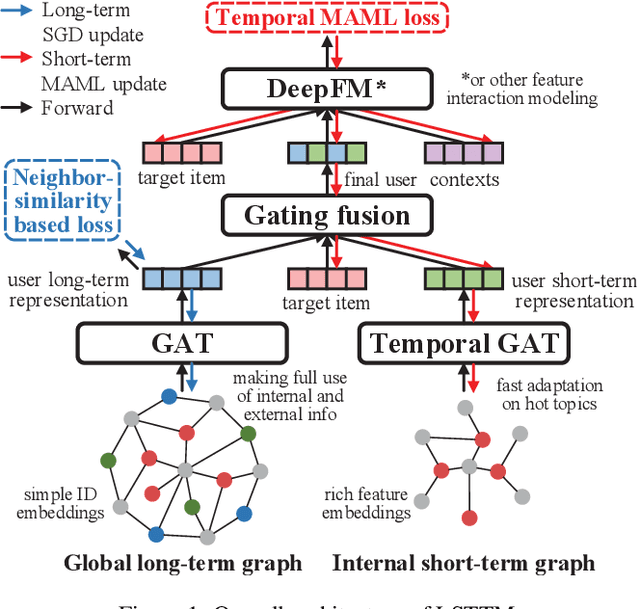

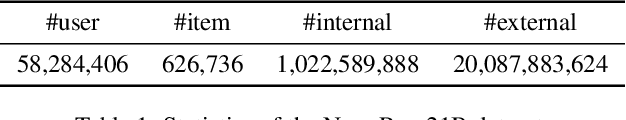

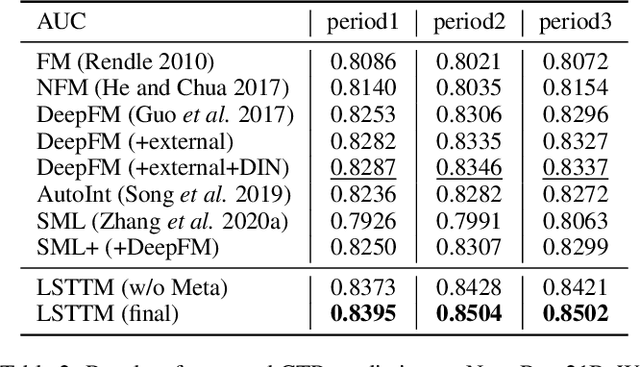

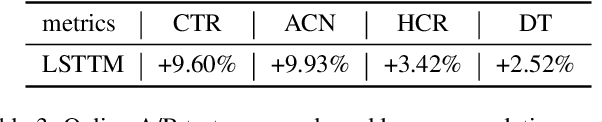

An effective online recommendation system should jointly capture users' long-term and short-term preferences from both internal and external user behaviors. However, adapting quickly to changing new topics while making full use of all available information is challenging in large-scale systems, because of online efficiency constraints and the complexity of real-world systems. To address this, we propose a novel Long Short-Term Temporal Meta-learning framework (LSTTM) for online recommendation, which captures user preferences from a global long-term graph and an internal short-term graph. To improve online learning of short-term interests, we propose a temporal MAML method with asynchronous online updating for fast adaptation, which treats recommendations in different time periods as different tasks. In experiments, LSTTM achieves significant improvements in both offline and online evaluations. LSTTM has also been deployed on a widely used online system, affecting millions of users. The idea of temporal MAML can be easily transferred to other models and temporal tasks.
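To make the "time periods as tasks" idea concrete, here is a minimal sketch of generic MAML on a toy problem. It is not the LSTTM implementation: the scalar model `y_hat = w * x`, the drifting per-period weights, and all function names (`make_period`, `adapt`, `maml_step`) are assumptions made for illustration, and the sketch omits the graphs and the asynchronous online updating described in the abstract.

```python
import random

def mse(w, data):
    """Mean squared error of the toy model y_hat = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def mse_grad(w, data):
    """First derivative of the MSE with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in data) / len(data)

def mse_hessian(data):
    """Second derivative of the MSE with respect to w (constant in w)."""
    return sum(2 * x * x for x, _ in data) / len(data)

def adapt(w, support, alpha):
    """Inner loop: one gradient step on a single period's support set."""
    return w - alpha * mse_grad(w, support)

def maml_step(w, tasks, alpha, beta):
    """Outer loop: update the shared initialization across all periods."""
    meta_grad = 0.0
    for support, query in tasks:
        w_fast = adapt(w, support, alpha)
        # Chain rule through the inner step:
        # d L_query(w_fast) / dw = L_query'(w_fast) * (1 - alpha * L_support'')
        meta_grad += mse_grad(w_fast, query) * (1 - alpha * mse_hessian(support))
    return w - beta * meta_grad / len(tasks)

def make_period(true_w, rng, n=20):
    """Hypothetical data for one time period: (support, query) sets."""
    points = [(x, true_w * x) for x in (rng.uniform(-1, 1) for _ in range(2 * n))]
    return points[:n], points[n:]

rng = random.Random(0)
# Assumption: each time period is one task, with a preference weight that drifts.
period_weights = [1.0, 1.5, 2.0, 2.5]
tasks = [make_period(true_w, rng) for true_w in period_weights]

w = 0.0
for _ in range(200):
    w = maml_step(w, tasks, alpha=0.5, beta=0.1)

# A new, unseen period: adapt with a single inner step from the meta-learned w.
new_support, new_query = make_period(3.0, rng)
w_adapted = adapt(w, new_support, alpha=0.5)
print(mse(w, new_query), mse(w_adapted, new_query))
```

The outer loop learns an initialization from past periods, and a single inner step then moves it toward a new period's data. In this toy setting the query loss after adaptation is lower than before it, which is the fast-adaptation behavior that meta-learning over time periods aims for.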
