Yalong Wang

DualGR: Generative Retrieval with Long and Short-Term Interests Modeling

Nov 16, 2025

Abstract: In large-scale industrial recommendation systems, retrieval must produce high-quality candidates from massive corpora under strict latency constraints. Recently, Generative Retrieval (GR), which quantizes items into a finite token space and decodes candidates autoregressively, has emerged as a viable alternative to Embedding-Based Retrieval (EBR), providing a scalable path that explicitly models target-history interactions via cross-attention. However, three challenges persist: 1) how to balance users' long-term and short-term interests, 2) noise interference when generating hierarchical semantic IDs (SIDs), and 3) the absence of explicit modeling for negative feedback such as exposed items without clicks. To address these challenges, we propose DualGR, a generative retrieval framework that explicitly models dual horizons of user interests with selective activation. Specifically, DualGR uses a Dual-Branch Long/Short-Term Router (DBR) to cover both stable preferences and transient intents by explicitly modeling users' long- and short-term behaviors. Meanwhile, Search-based SID Decoding (S2D) controls context-induced noise and improves computational efficiency by constraining candidate interactions to the current coarse (level-1) bucket during fine-grained (level-2/3) SID prediction. Finally, we propose an Exposure-aware Next-Token Prediction Loss (ENTP-Loss) that treats "exposed-but-unclicked" items as hard negatives at level-1, enabling timely interest fade-out. On the large-scale Kuaishou short-video recommendation system, online A/B testing shows lifts of +0.527% in video views and +0.432% in watch time, validating DualGR as a practical and effective paradigm for industrial generative retrieval.
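The bucket constraint described for S2D can be illustrated with a minimal sketch. It is not the DualGR implementation: the tuple layout `(level1, level2, level3)`, the function names, and the toy history are all assumptions made for illustration. The sketch only shows the filtering idea, namely that once a level-1 token has been decoded, fine-grained prediction looks only at history items in the same coarse bucket.

```python
from collections import defaultdict

def build_bucket_index(history_sids):
    """Group history items by their level-1 (coarse) SID token.

    Each SID is assumed to be a tuple (level1, level2, level3).
    """
    index = defaultdict(list)
    for sid in history_sids:
        index[sid[0]].append(sid)
    return dict(index)

def context_for_fine_levels(bucket_index, level1_token):
    """Return only the history items that share the decoded level-1 token.

    These would be the only items allowed to interact with the candidate
    while level-2 and level-3 tokens are predicted (hypothetical helper).
    """
    return bucket_index.get(level1_token, [])

# Toy history of five items, three of which fall in coarse bucket 3.
history = [(3, 7, 1), (3, 2, 9), (5, 1, 4), (3, 7, 8), (8, 0, 2)]
index = build_bucket_index(history)
context = context_for_fine_levels(index, 3)
```

With this layout, the attention context for the fine levels shrinks from the full history to the matching bucket, which is where both the noise reduction and the compute savings mentioned in the abstract would come from.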

Non-autoregressive Generative Models for Reranking Recommendation

Feb 10, 2024

Abstract: In a multi-stage recommendation system, reranking plays a crucial role by modeling the intra-list correlations among items. The key challenge of reranking lies in exploring optimal sequences within the combinatorial space of permutations. Recent research proposes a generator-evaluator learning paradigm, where the generator produces multiple feasible sequences and the evaluator picks the best sequence based on an estimated listwise score. The generator is of vital importance, and generative models are well suited to this role. Current generative models employ an autoregressive strategy for sequence generation. However, deploying autoregressive models in real-time industrial systems is challenging. Hence, we propose a Non-AutoRegressive generative model for reranking Recommendation (NAR4Rec) designed to enhance efficiency and effectiveness. To address the sparse training samples and dynamic candidates that hinder model convergence, we introduce a matching model. Considering the diverse nature of user feedback, we propose a sequence-level unlikelihood training objective to distinguish feasible from infeasible sequences. Additionally, to overcome the lack of dependency modeling among target items in non-autoregressive models, we introduce contrastive decoding to capture correlations among these items. Extensive offline experiments on publicly available datasets show that the proposed approach outperforms existing state-of-the-art reranking methods. Furthermore, our method has been fully deployed in Kuaishou, a popular video app with over 300 million daily active users, significantly enhancing online recommendation quality and demonstrating the effectiveness and efficiency of our approach.
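As a rough illustration of a sequence-level unlikelihood objective, the sketch below scores a whole sequence under a non-autoregressive model whose positions are predicted independently. This follows the generic unlikelihood formulation (maximize log p for desirable sequences, maximize log(1 - p) for undesirable ones) and is an assumption about the general shape of such a loss, not NAR4Rec's exact objective. The function names and the toy probabilities are hypothetical.

```python
import math

def sequence_log_prob(position_probs, sequence):
    """Log-probability of a sequence when each position is independent.

    position_probs[i] maps item -> probability of placing it at slot i.
    """
    return sum(math.log(position_probs[pos][item])
               for pos, item in enumerate(sequence))

def sequence_unlikelihood_loss(position_probs, sequence, is_positive, eps=1e-12):
    """Likelihood loss for positive sequences, unlikelihood loss otherwise."""
    log_p = sequence_log_prob(position_probs, sequence)
    if is_positive:
        return -log_p                       # pull probability up
    p = math.exp(log_p)
    return -math.log(max(1.0 - p, eps))     # push probability down

# Toy model output: two slots, three candidate items.
position_probs = [
    {"a": 0.7, "b": 0.2, "c": 0.1},
    {"a": 0.1, "b": 0.6, "c": 0.3},
]
pos_loss = sequence_unlikelihood_loss(position_probs, ("a", "b"), True)
neg_loss = sequence_unlikelihood_loss(position_probs, ("a", "b"), False)
rare_neg_loss = sequence_unlikelihood_loss(position_probs, ("c", "a"), False)
```

A negative sequence that the model already considers unlikely, such as `("c", "a")` here, incurs a smaller penalty than one it currently rates highly, so the gradient concentrates on sequences the model wrongly favors.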

Long Short-Term Temporal Meta-learning in Online Recommendation

May 08, 2021

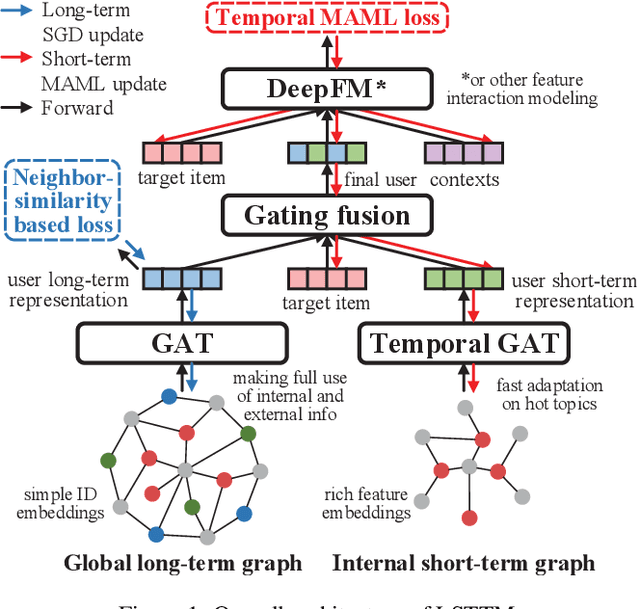

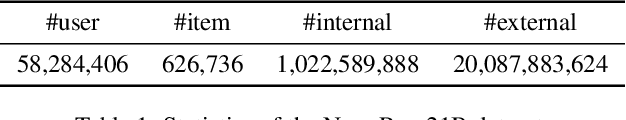

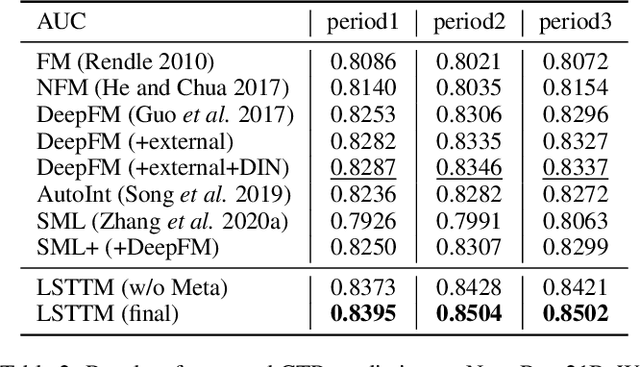

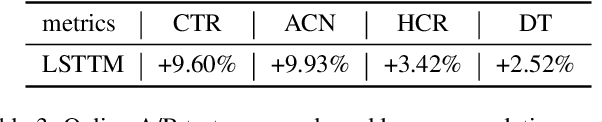

Abstract: An effective online recommendation system should jointly capture users' long-term and short-term preferences across both internal and external behaviors. However, it is challenging to adapt quickly to variable new topics while making full use of all available information, due to the online efficiency constraints and complexity of large-scale real-world systems. To address this, we propose a novel Long Short-Term Temporal Meta-learning framework (LSTTM) for online recommendation, which captures user preferences from a global long-term graph and an internal short-term graph. To improve online learning of short-term interests, we propose a temporal MAML method with asynchronous online updating for fast adaptation, which regards recommendations at different time periods as different tasks. In experiments, LSTTM achieves significant improvements in both offline and online evaluations. LSTTM has also been deployed on a widely used online system, affecting millions of users. The idea of temporal MAML can be easily transferred to other models and temporal tasks.
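The idea of treating each time period as a separate meta-learning task can be sketched on a toy problem. The example below uses first-order MAML on a one-parameter linear model, which is a simplification chosen for brevity: it does not reproduce LSTTM's graph models or its asynchronous online updating, and every name, learning rate, and data point is an assumption. Each period supplies a support set for the inner adaptation step and a query set for the outer meta-update.

```python
def grad(w, data):
    """Gradient of mean squared error for the model y = w * x."""
    return sum(2.0 * (w * x - y) * x for x, y in data) / len(data)

def loss(w, data):
    """Mean squared error for the model y = w * x."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)

def adapt(w, support, inner_lr):
    """Inner loop: one gradient step on a period's support set."""
    return w - inner_lr * grad(w, support)

def meta_train(periods, w0, inner_lr, outer_lr, epochs):
    """Outer loop (first-order): update the shared initialization using
    query-set gradients evaluated at each period's adapted weight."""
    w = w0
    for _ in range(epochs):
        meta_grad = 0.0
        for support, query in periods:
            w_fast = adapt(w, support, inner_lr)
            meta_grad += grad(w_fast, query)
        w -= outer_lr * meta_grad / len(periods)
    return w

def make_period(slope):
    """Hypothetical time period whose user behavior follows y = slope * x."""
    support = [(x, slope * x) for x in (0.5, 1.0)]
    query = [(x, slope * x) for x in (1.5, 2.0)]
    return support, query

periods = [make_period(s) for s in (1.0, 2.0, 3.0)]
w_meta = meta_train(periods, w0=0.0, inner_lr=0.1, outer_lr=0.05, epochs=100)

# Fast adaptation to the most recent period from the learned initialization.
support, query = periods[-1]
w_adapted = adapt(w_meta, support, inner_lr=0.1)
```

The learned initialization settles between the per-period optima, and a single inner step on a new period's support set moves it toward that period's behavior, which is the fast-adaptation property the abstract attributes to temporal MAML.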
