Youdan Feng

Enhancing Lesion Segmentation in PET/CT Imaging with Deep Learning and Advanced Data Preprocessing Techniques

Sep 15, 2024

Abstract: The escalating global cancer burden underscores the critical need for precise diagnostic tools in oncology. This research employs deep learning to enhance lesion segmentation in PET/CT imaging, using a dataset of 900 whole-body FDG-PET/CT and 600 PSMA-PET/CT studies from the AutoPET challenge III. Our approach combines preprocessing and data augmentation techniques to improve model robustness and generalizability. We investigate the influence of non-zero normalization and of modifications to the data augmentation pipeline, such as the introduction of RandGaussianSharpen and adjustments to the Gamma transform parameter. This study aims to contribute to the standardization of preprocessing and augmentation strategies in PET/CT imaging, potentially improving diagnostic accuracy and the personalized management of cancer patients. Our code will be open-sourced and available at https://github.com/jiayiliu-pku/DC2024.
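The abstract does not define "non-zero normalization". A common reading, and the one assumed here, is z-score normalization computed only over non-zero voxels, with zero-valued background left untouched (similar in spirit to the `nonzero` option of intensity normalization transforms in medical imaging toolkits). The sketch below is illustrative only and is not taken from the authors' repository; the function name `normalize_nonzero` and the flat list of intensities are hypothetical simplifications.

```python
from statistics import fmean, pstdev


def normalize_nonzero(voxels, eps=1e-8):
    """Z-score normalize only the non-zero entries of a flat intensity list.

    Assumption: zeros represent background and must stay exactly zero.
    """
    foreground = [v for v in voxels if v != 0]
    if not foreground:
        # Nothing to normalize: return an unchanged copy.
        return list(voxels)
    mean = fmean(foreground)
    std = pstdev(foreground)
    # Guard against a constant foreground, where the std would be ~0.
    scale = std if std > eps else 1.0
    return [(v - mean) / scale if v != 0 else 0.0 for v in voxels]


# Toy "volume": three background voxels and three foreground voxels.
volume = [0.0, 0.0, 2.0, 4.0, 6.0, 0.0]
normalized = normalize_nonzero(volume)
```

Computing the statistics over the foreground only keeps large empty regions, which are common in whole-body scans, from dragging the mean toward zero and shrinking the contrast of the tissue that matters.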

Shuffle Instances-based Vision Transformer for Pancreatic Cancer ROSE Image Classification

Aug 14, 2022

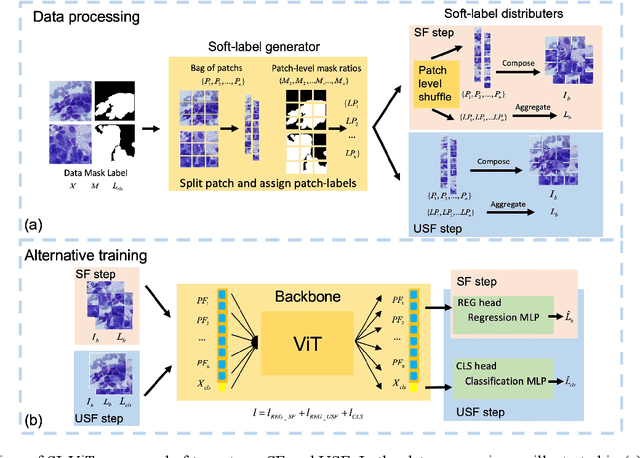

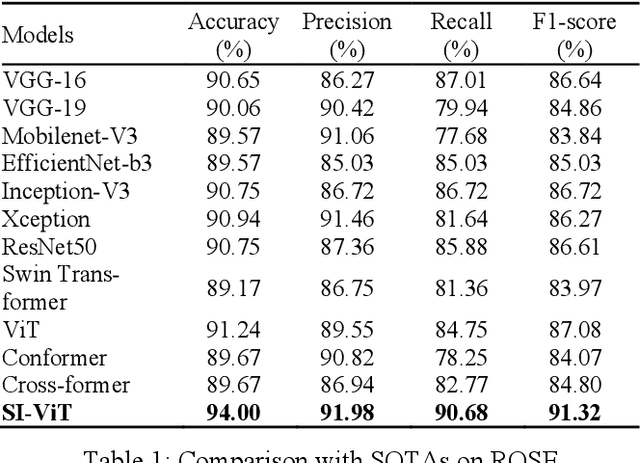

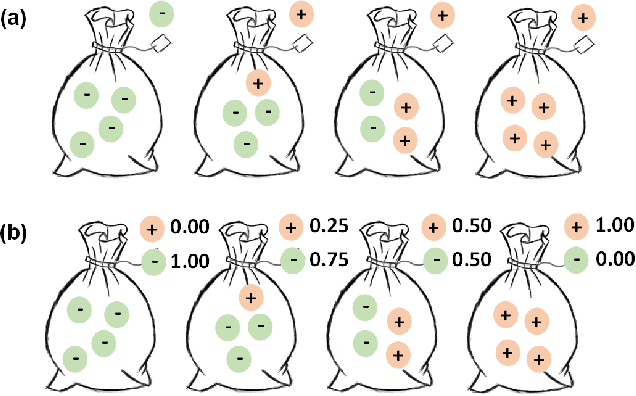

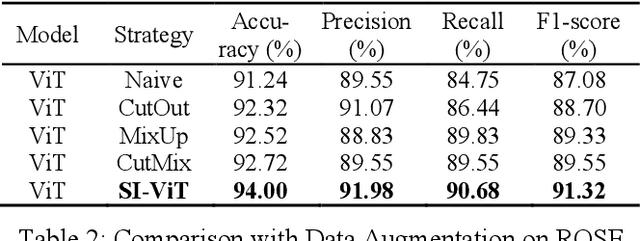

Abstract: The rapid on-site evaluation (ROSE) technique can significantly accelerate the diagnosis of pancreatic cancer by immediately analyzing the fast-stained cytopathological images. Computer-aided diagnosis (CAD) can potentially address the shortage of pathologists in ROSE. However, the cancerous patterns vary significantly between different samples, making the CAD task extremely challenging. In addition, the ROSE images have complicated perturbations in color distribution, brightness, and contrast due to different staining qualities and various acquisition device types. To address these challenges, we propose a shuffle instances-based Vision Transformer (SI-ViT) approach, which can reduce the perturbations and enhance the modeling among the instances. With the regrouped bags of shuffle instances and their bag-level soft labels, the approach uses a regression head to make the model focus on the cells rather than on the various perturbations. Simultaneously, combined with a classification head, the model can effectively identify the general distributive patterns among different instances. The results demonstrate significant improvements in classification accuracy with more accurate attention regions, indicating that the diverse patterns of ROSE images are effectively extracted and the complicated perturbations are significantly reduced. They also suggest that SI-ViT has excellent potential in analyzing cytopathological images. The code and experimental results are available at https://github.com/sagizty/MIL-SI.
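One plausible reading of "regrouped bags of shuffle instances and their bag-level soft labels" can be sketched as follows. The assumptions are that each image is cut into a fixed grid of patches (instances), that patches at the same grid position are permuted across the images of a batch, and that the soft label of a regrouped bag is the fraction of its patches that came from positive images. This is a guess at the idea, not the authors' implementation; `shuffle_instances` and the toy string patches are hypothetical.

```python
import random


def shuffle_instances(bags, labels, rng):
    """Regroup patches across bags and compute bag-level soft labels.

    bags   : list of bags, each a list of patches (all bags the same length)
    labels : one 0/1 label per original bag
    rng    : a random.Random instance, passed in for reproducibility
    """
    n_bags = len(bags)
    n_patches = len(bags[0])
    new_bags = [[None] * n_patches for _ in range(n_bags)]
    soft_labels = [0.0] * n_bags
    for position in range(n_patches):
        # Permute, for this grid position, which source bag feeds each new bag.
        sources = list(range(n_bags))
        rng.shuffle(sources)
        for dst, src in enumerate(sources):
            new_bags[dst][position] = bags[src][position]
            # Each patch contributes an equal share of its source label.
            soft_labels[dst] += labels[src] / n_patches
    return new_bags, soft_labels


# Two toy "images" of four patches each: one positive, one negative.
bags = [["a0", "a1", "a2", "a3"], ["b0", "b1", "b2", "b3"]]
labels = [1, 0]
new_bags, soft_labels = shuffle_instances(bags, labels, random.Random(0))
```

Under these assumptions, a regression head trained on the soft labels has to estimate how much of a bag is cancerous from the cells themselves, since image-wide staining and brightness cues no longer identify the source image.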

Pancreatic Cancer ROSE Image Classification Based on Multiple Instance Learning with Shuffle Instances

Jun 07, 2022

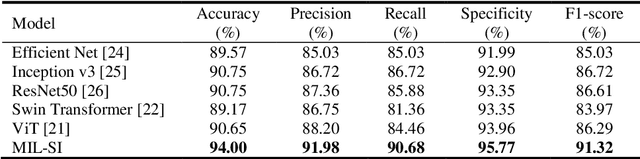

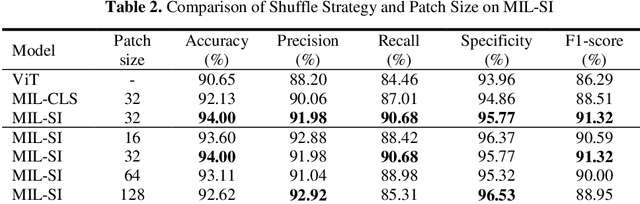

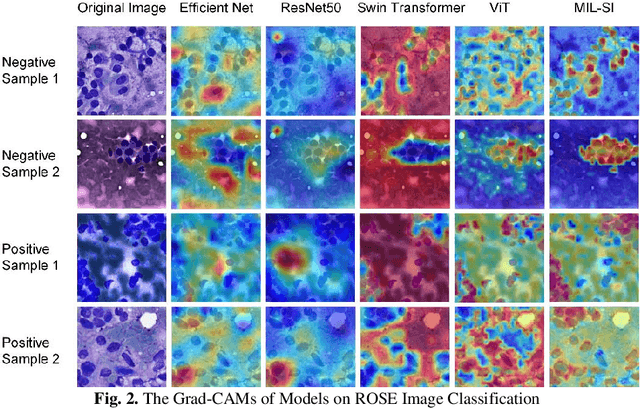

Abstract: The rapid on-site evaluation (ROSE) technique can significantly accelerate the diagnostic workflow of pancreatic cancer by immediately analyzing the fast-stained cytopathological images with on-site pathologists. Computer-aided diagnosis (CAD) using deep learning has the potential to solve the problem of insufficient pathology staffing. However, the cancerous patterns of ROSE images vary greatly between different samples, making the CAD task extremely challenging. In addition, due to different staining qualities and various types of acquisition devices, the ROSE images also have complicated perturbations in terms of color distribution, brightness, and contrast. To address these challenges, we propose a novel multiple instance learning (MIL) approach using shuffle patches containing the instances, which adopts the patch-based learning strategy of Vision Transformers. With the regrouped bags of shuffle instances and their bag-level soft labels, the approach uses a MIL head to make the model focus on the features from the pancreatic cancer cells rather than those from various perturbations in ROSE images. Simultaneously, combined with a classification head, the model can effectively identify the general distributive patterns across different instances. The results demonstrate significant improvements in classification accuracy with more accurate attention regions, indicating that the diverse patterns of ROSE images are effectively extracted and the complicated perturbations of ROSE images are significantly eliminated. They also suggest that MIL with shuffle instances has great potential in the analysis of cytopathological images.

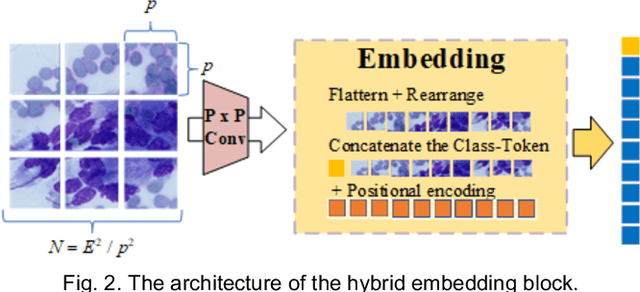

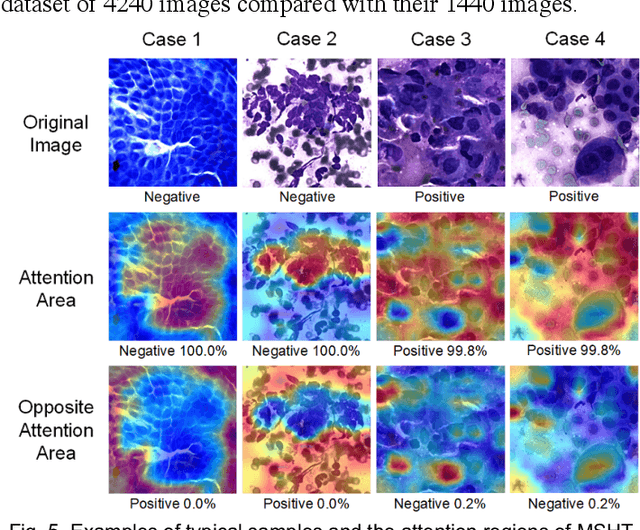

MSHT: Multi-stage Hybrid Transformer for the ROSE Image Analysis of Pancreatic Cancer

Dec 27, 2021

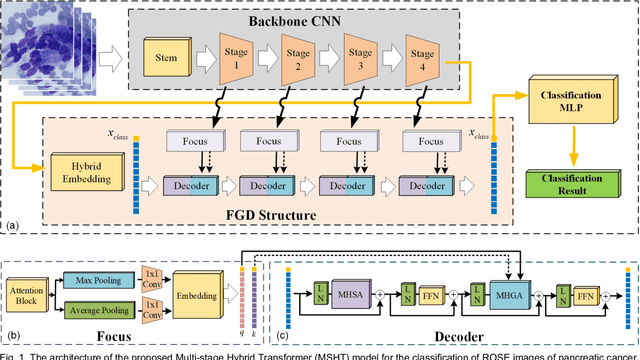

Abstract: Pancreatic cancer is one of the most malignant cancers in the world; it deteriorates rapidly and has very high mortality. The rapid on-site evaluation (ROSE) technique innovates the workflow by immediately analyzing the fast-stained cytopathological images with on-site pathologists, which enables faster diagnosis in this time-pressured process. However, the wider expansion of ROSE diagnosis has been hindered by the lack of experienced pathologists. To overcome this problem, we propose a hybrid high-performance deep learning model to enable an automated workflow, thus freeing up the valuable time of pathologists. We introduce the Transformer block into this field for the first time with our multi-stage hybrid design, in which the spatial features generated by the convolutional neural network (CNN) significantly enhance the Transformer's global modeling. Using multi-stage spatial features as global attention guidance, this design combines the robustness from the inductive bias of the CNN with the sophisticated global modeling power of the Transformer. A dataset of 4240 ROSE images is collected to evaluate the method in this unexplored field. The proposed multi-stage hybrid Transformer (MSHT) achieves 95.68% in classification accuracy, which is distinctly higher than the state-of-the-art models. Facing the need for interpretability, MSHT outperforms its counterparts with more accurate attention regions. The results demonstrate that MSHT can distinguish cancer samples accurately at an unprecedented image scale, laying the foundation for deploying automatic decision systems and enabling the expansion of ROSE in clinical practice. The code and records are available at: https://github.com/sagizty/Multi-Stage-Hybrid-Transformer.

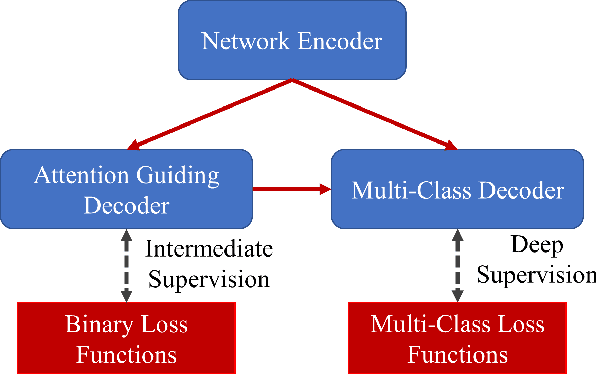

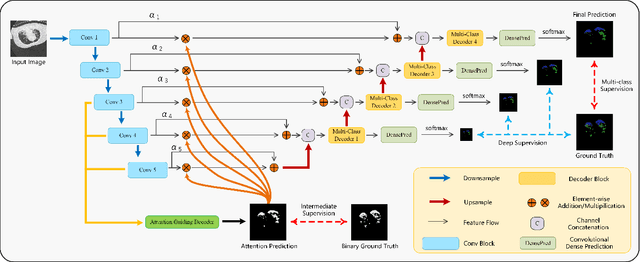

Prior Attention Network for Multi-Lesion Segmentation in Medical Images

Oct 10, 2021

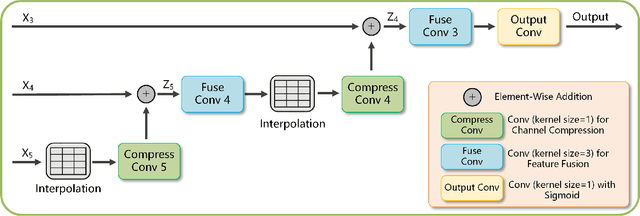

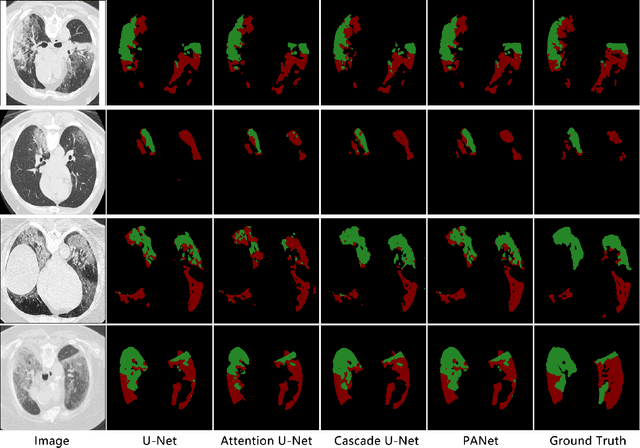

Abstract: The accurate segmentation of multiple types of lesions from adjacent tissues in medical images is significant in clinical practice. Convolutional neural networks (CNNs) based on the coarse-to-fine strategy have been widely used in this field. However, multi-lesion segmentation remains challenging due to the uncertainty in size and contrast and the high interclass similarity of tissues. In addition, the commonly adopted cascaded strategy is rather demanding in terms of hardware, which limits the potential for clinical deployment. To address the problems above, we propose a novel Prior Attention Network (PANet) that follows the coarse-to-fine strategy to perform multi-lesion segmentation in medical images. The proposed network achieves the two steps of segmentation in a single network by inserting a lesion-related spatial attention mechanism in the network. Further, we propose an intermediate supervision strategy for generating lesion-related attention to acquire the regions of interest (ROIs), which accelerates convergence and clearly improves the segmentation performance. We have investigated the proposed segmentation framework in two applications: 2D segmentation of multiple lung infections in lung CT slices and 3D segmentation of multiple lesions in brain MRIs. Experimental results show that in both 2D and 3D segmentation tasks our proposed network achieves better performance with less computational cost compared with cascaded networks. The proposed network can be regarded as a universal solution to multi-lesion segmentation in both 2D and 3D tasks. The source code is available at: https://github.com/hsiangyuzhao/PANet.
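The general idea of a lesion-related spatial attention inside a single network can be illustrated with a small gating sketch. It assumes that an intermediate, supervised head produces coarse lesion logits, that a sigmoid turns them into a spatial attention map, and that the map rescales every feature channel before the fine segmentation step. The abstract does not specify PANet's layers, so this is an illustration rather than the published architecture; `apply_prior_attention` and the nested-list tensors are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def apply_prior_attention(features, coarse_logits):
    """Gate feature maps with a spatial attention map from coarse logits.

    features      : nested list shaped [channels][height][width]
    coarse_logits : nested list shaped [height][width], assumed to come from
                    an intermediate head trained against the lesion mask
    """
    attention = [[sigmoid(v) for v in row] for row in coarse_logits]
    gated = [
        [[f * a for f, a in zip(f_row, a_row)]
         for f_row, a_row in zip(channel, attention)]
        for channel in features
    ]
    return gated, attention


# One feature channel on a 2x2 grid; the coarse head is confident that only
# the top-left location belongs to a lesion.
features = [[[1.0, 2.0], [3.0, 4.0]]]
coarse_logits = [[50.0, -50.0], [0.0, -50.0]]
gated, attention = apply_prior_attention(features, coarse_logits)
```

Because the coarse prediction and the refinement share one forward pass in this sketch, no second cascaded network is needed, which is consistent with the lower computational cost the abstract reports.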
