Yixuan Lyu

Unlocking Attributes' Contribution to Successful Camouflage: A Combined Textual and Visual Analysis Strategy

Aug 22, 2024

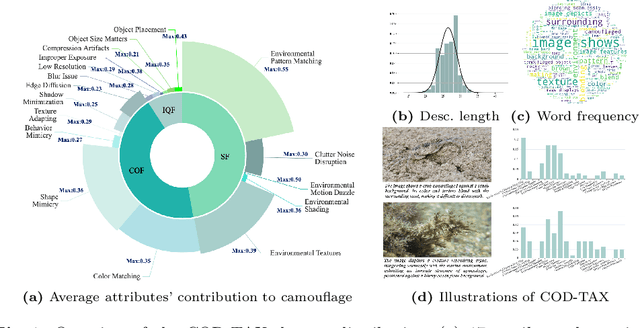

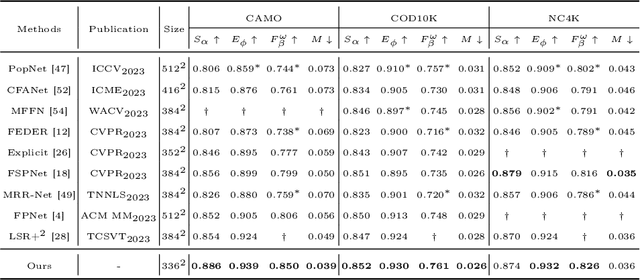

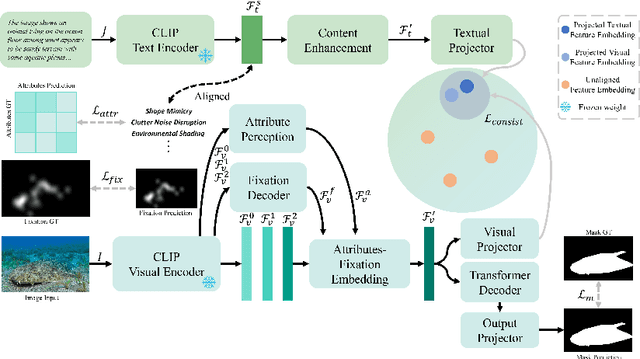

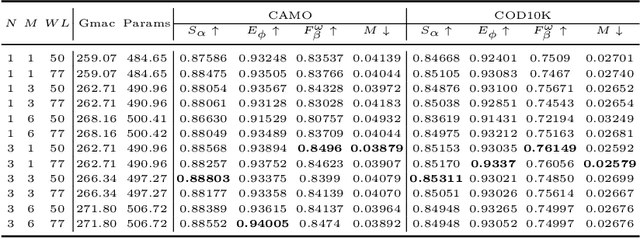

Abstract: In the domain of Camouflaged Object Segmentation (COS), despite continuous improvements in segmentation performance, the underlying mechanisms of effective camouflage remain poorly understood, akin to a black box. To address this gap, we present the first comprehensive study to examine the impact of camouflage attributes on the effectiveness of camouflage patterns, offering a quantitative framework for the evaluation of camouflage designs. To support this analysis, we have compiled the first dataset comprising descriptions of camouflaged objects and their attribute contributions, termed COD-Text And X-attributions (COD-TAX). Moreover, drawing inspiration from the hierarchical process by which humans process information (from high-level textual descriptions of overarching scenarios, through mid-level summaries of local areas, to low-level pixel data for detailed analysis), we have developed a robust framework that combines textual and visual information for the task of COS, named Attribution CUe Modeling with Eye-fixation Network (ACUMEN). ACUMEN demonstrates superior performance, outperforming nine leading methods across three widely used datasets. We conclude by highlighting key insights derived from the attributes identified in our study. Code: https://github.com/lyu-yx/ACUMEN.

Understanding the Impact of Image Quality and Distance of Objects to Object Detection Performance

Sep 17, 2022

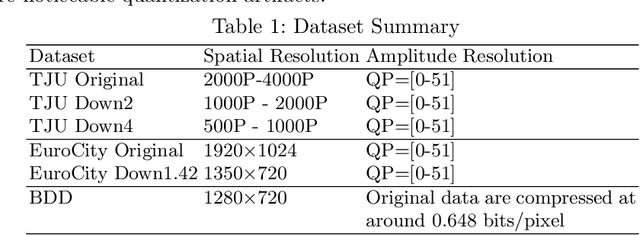

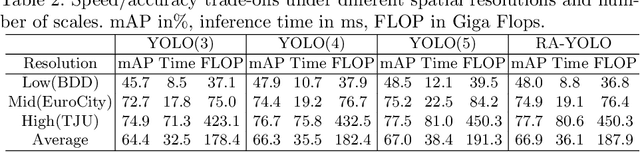

Abstract: Deep learning has made great strides for object detection in images. The detection accuracy and computational cost of object detection depend on the spatial resolution of an image, which may be constrained by both the camera and storage considerations. Compression is often achieved by reducing spatial resolution, amplitude resolution, or both, each of which has well-known effects on performance. Detection accuracy also depends on the distance of the object of interest from the camera. Our work examines the impact of spatial and amplitude resolution, as well as object distance, on object detection accuracy and computational cost. We develop a resolution-adaptive variant of YOLOv5 (RA-YOLO), which varies the number of scales in the feature pyramid and detection head based on the spatial resolution of the input image. To train and evaluate this new method, we created a dataset of images with diverse spatial and amplitude resolutions by combining images from the TJU and Eurocity datasets and generating different resolutions by applying spatial resizing and compression. We first show that RA-YOLO achieves a good trade-off between detection accuracy and inference time over a large range of spatial resolutions. We then evaluate the impact of spatial and amplitude resolutions on object detection accuracy using the proposed RA-YOLO model. We demonstrate that the optimal spatial resolution that leads to the highest detection accuracy depends on the 'tolerated' image size. We further assess the impact of the distance of an object from the camera on the detection accuracy and show that higher spatial resolution enables a greater detection range. These results provide important guidelines for choosing the image spatial resolution and compression settings based on available bandwidth, storage, desired inference time, and/or desired detection range in practical applications.

Network-Aware 5G Edge Computing for Object Detection: Augmenting Wearables to "See" More, Farther and Faster

Dec 25, 2021

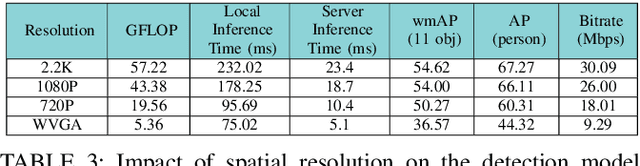

Abstract: Advanced wearable devices are increasingly incorporating high-resolution multi-camera systems. As state-of-the-art neural networks for processing the resulting image data are computationally demanding, there has been growing interest in leveraging fifth-generation (5G) wireless connectivity and mobile edge computing for offloading this processing to the cloud. To assess this possibility, this paper presents a detailed simulation and evaluation of 5G wireless offloading for object detection within a powerful, new smart wearable called VIS4ION, for the Blind-and-Visually Impaired (BVI). The current VIS4ION system is an instrumented book-bag with high-resolution cameras, vision processing, and haptic and audio feedback. The paper considers uploading the camera data to a mobile edge cloud to perform real-time object detection and transmitting the detection results back to the wearable. To determine the video requirements, the paper evaluates the impact of video bit rate and resolution on object detection accuracy and range. A new street scene dataset with labeled objects relevant to BVI navigation is leveraged for analysis. The vision evaluation is combined with a detailed full-stack wireless network simulation to determine the distribution of throughputs and delays with real navigation paths and ray-tracing from new high-resolution 3D models in an urban environment. For comparison, the wireless simulation considers both a standard 4G Long Term Evolution (LTE) carrier and a high-rate 5G millimeter-wave (mmWave) carrier. The work thus provides a thorough and realistic assessment of edge computing with mmWave connectivity in an application with both high bandwidth and low latency requirements.
