Yixing Wang

BatteryAgent: Synergizing Physics-Informed Interpretation with LLM Reasoning for Intelligent Battery Fault Diagnosis

Dec 31, 2025

Abstract: Fault diagnosis of lithium-ion batteries is critical for system safety. While existing deep learning methods exhibit superior detection accuracy, their "black-box" nature hinders interpretability. Furthermore, restricted by binary classification paradigms, they struggle to provide root cause analysis and maintenance recommendations. To address these limitations, this paper proposes BatteryAgent, a hierarchical framework that integrates physical knowledge features with the reasoning capabilities of Large Language Models (LLMs). The framework comprises three core modules: (1) A Physical Perception Layer that utilizes 10 mechanism-based features derived from electrochemical principles, balancing dimensionality reduction with physical fidelity; (2) A Detection and Attribution Layer that employs Gradient Boosting Decision Trees and SHAP to quantify feature contributions; and (3) A Reasoning and Diagnosis Layer that leverages an LLM as the agent core. This layer constructs a "numerical-semantic" bridge, combining SHAP attributions with a mechanism knowledge base to generate comprehensive reports containing fault types, root cause analysis, and maintenance suggestions. Experimental results demonstrate that BatteryAgent effectively corrects misclassifications on hard boundary samples, achieving an AUROC of 0.986, which significantly outperforms current state-of-the-art methods. Moreover, the framework extends traditional binary detection to multi-type interpretable diagnosis, offering a new paradigm shift from "passive detection" to "intelligent diagnosis" for battery safety management.
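The "numerical-semantic" bridge described in this abstract can be illustrated with a minimal sketch that turns per-feature attributions into text an LLM could reason over. This is not the authors' implementation: the function `build_diagnosis_prompt`, the feature names, the attribution values, and the knowledge-base notes are all hypothetical, and the attributions are hard-coded rather than computed by SHAP.

```python
def build_diagnosis_prompt(attributions, knowledge_base, fault_probability, top_k=3):
    """Format the top-k attributions (by magnitude) as a text prompt.

    attributions: dict mapping feature name -> signed attribution value
    knowledge_base: dict mapping feature name -> short mechanism note
    """
    # Rank features by absolute contribution, largest first.
    ranked = sorted(attributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    lines = [
        f"Detector fault probability: {fault_probability:.2f}",
        "Top feature attributions:",
    ]
    for name, value in ranked[:top_k]:
        if value > 0:
            direction = "raises the fault score"
        elif value < 0:
            direction = "lowers the fault score"
        else:
            direction = "does not change the fault score"
        note = knowledge_base.get(name, "no mechanism note available")
        lines.append(f"- {name}: {value:+.3f} ({direction}); mechanism: {note}")
    lines.append("Report the likely fault type, root cause, and a maintenance suggestion.")
    return "\n".join(lines)


# Hypothetical values for illustration only; none come from the paper.
example_attributions = {
    "voltage_deviation": 0.42,
    "internal_resistance_rise": 0.31,
    "temperature_gradient": -0.05,
    "capacity_fade_rate": 0.02,
}
example_knowledge_base = {
    "voltage_deviation": "cell voltage departs from the pack average",
    "internal_resistance_rise": "resistance growth is associated with aging or poor contact",
}

prompt = build_diagnosis_prompt(example_attributions, example_knowledge_base, 0.87)
print(prompt)
```

The resulting string would be passed to whichever LLM client is in use; that call is omitted here because the abstract does not name a model or API.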

The Second Monocular Depth Estimation Challenge

Apr 26, 2023

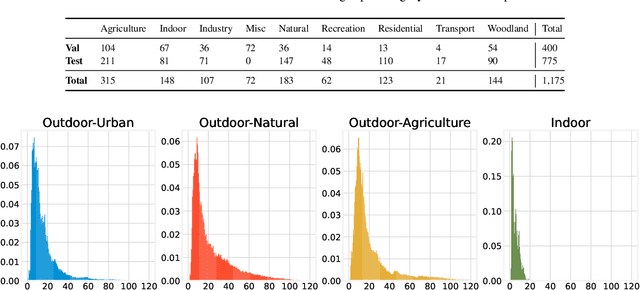

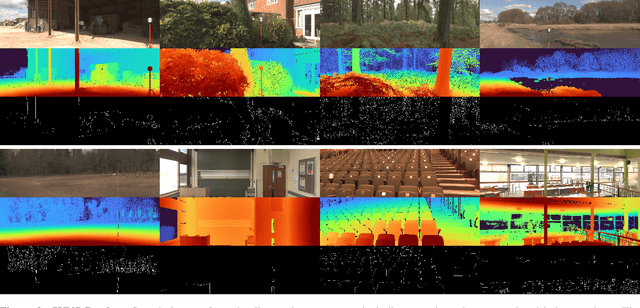

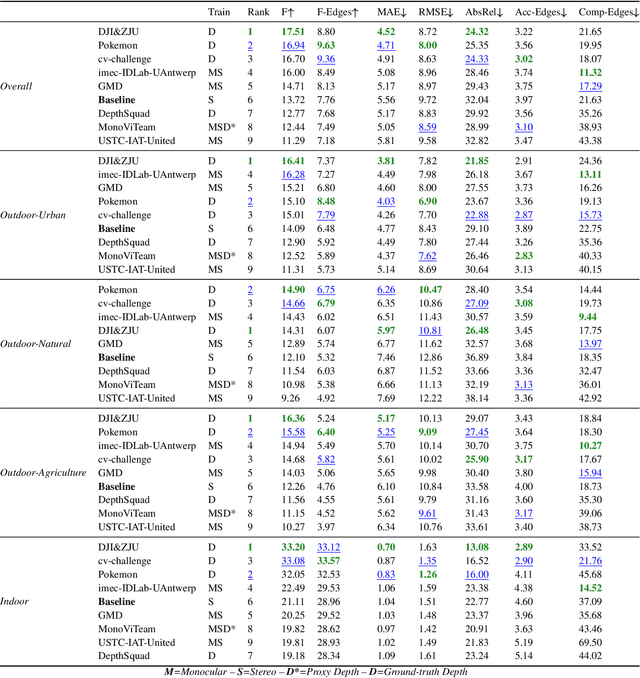

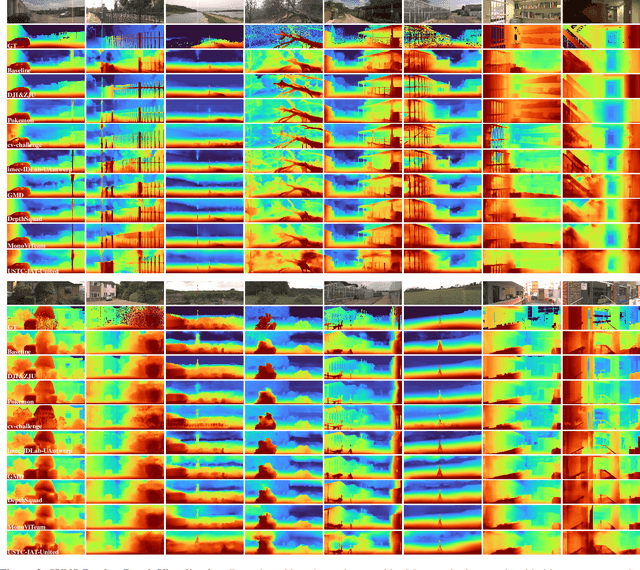

Abstract: This paper discusses the results for the second edition of the Monocular Depth Estimation Challenge (MDEC). This edition was open to methods using any form of supervision, including fully-supervised, self-supervised, multi-task or proxy depth. The challenge was based around the SYNS-Patches dataset, which features a wide diversity of environments with high-quality dense ground-truth. This includes complex natural environments, e.g. forests or fields, which are greatly underrepresented in current benchmarks. The challenge received eight unique submissions that outperformed the provided SotA baseline on any of the pointcloud- or image-based metrics. The top supervised submission improved relative F-Score by 27.62%, while the top self-supervised improved it by 16.61%. Supervised submissions generally leveraged large collections of datasets to improve data diversity. Self-supervised submissions instead updated the network architecture and pretrained backbones. These results represent significant progress in the field, while highlighting avenues for future research, such as reducing interpolation artifacts at depth boundaries, improving self-supervised indoor performance and overall natural image accuracy.

Scene Synthesis from Human Motion

Jan 04, 2023

Abstract: Large-scale capture of human motion with diverse, complex scenes, while immensely useful, is often considered prohibitively costly. Meanwhile, human motion alone contains rich information about the scene they reside in and interact with. For example, a sitting human suggests the existence of a chair, and their leg position further implies the chair's pose. In this paper, we propose to synthesize diverse, semantically reasonable, and physically plausible scenes based on human motion. Our framework, Scene Synthesis from HUMan MotiON (SUMMON), includes two steps. It first uses ContactFormer, our newly introduced contact predictor, to obtain temporally consistent contact labels from human motion. Based on these predictions, SUMMON then chooses interacting objects and optimizes physical plausibility losses; it further populates the scene with objects that do not interact with humans. Experimental results demonstrate that SUMMON synthesizes feasible, plausible, and diverse scenes and has the potential to generate extensive human-scene interaction data for the community.

Data-Centric Mixed-Variable Bayesian Optimization For Materials Design

Jul 04, 2019

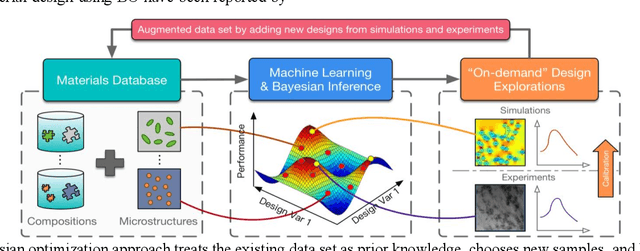

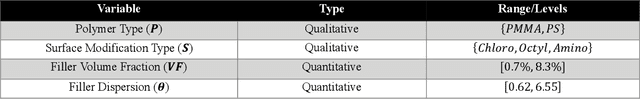

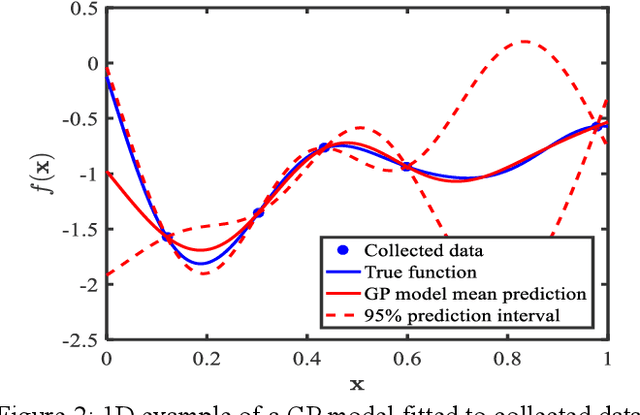

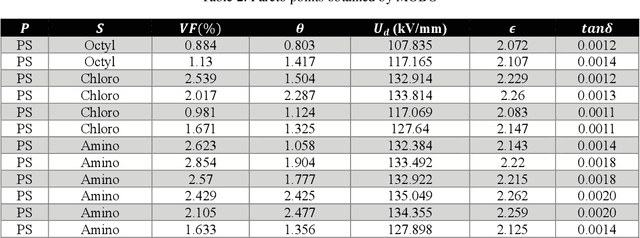

Abstract: Materials design can be cast as an optimization problem with the goal of achieving desired properties, by varying material composition, microstructure morphology, and processing conditions. Existence of both qualitative and quantitative material design variables leads to disjointed regions in property space, making the search for optimal design challenging. Limited availability of experimental data and the high cost of simulations magnify the challenge. This situation calls for design methodologies that can extract useful information from existing data and guide the search for optimal designs efficiently. To this end, we present a data-centric, mixed-variable Bayesian Optimization framework that integrates data from literature, experiments, and simulations for knowledge discovery and computational materials design. Our framework pivots around the Latent Variable Gaussian Process (LVGP), a novel Gaussian Process technique which projects qualitative variables on a continuous latent space for covariance formulation, as the surrogate model to quantify "lack of data" uncertainty. Expected improvement, an acquisition criterion that balances exploration and exploitation, helps navigate a complex, nonlinear design space to locate the optimum design. The proposed framework is tested through a case study which seeks to concurrently identify the optimal composition and morphology for insulating polymer nanocomposites. We also present an extension of mixed-variable Bayesian Optimization for multiple objectives to identify the Pareto Frontier within tens of iterations. These findings project Bayesian Optimization as a powerful tool for design of engineered material systems.
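The expected improvement criterion mentioned in this abstract has a standard closed form when the surrogate's prediction at a candidate is Gaussian. The sketch below computes it with the Python standard library only. It assumes the posterior mean and standard deviation are already available from some surrogate (the LVGP itself is not implemented here), and the candidate names and numbers are hypothetical.

```python
import math


def normal_pdf(z):
    """Standard normal probability density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def normal_cdf(z):
    """Standard normal cumulative distribution, via the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def expected_improvement(mean, std, best_so_far, minimize=True):
    """Closed-form expected improvement for a Gaussian prediction.

    mean, std: posterior mean and standard deviation at the candidate
    best_so_far: best objective value observed so far
    """
    improvement = (best_so_far - mean) if minimize else (mean - best_so_far)
    if std <= 0.0:
        # No predictive uncertainty: improvement is deterministic.
        return max(improvement, 0.0)
    z = improvement / std
    # First term rewards a good predicted mean (exploitation),
    # second term rewards predictive uncertainty (exploration).
    return improvement * normal_cdf(z) + std * normal_pdf(z)


# Hypothetical candidates: name -> (posterior mean, posterior std).
candidates = {
    "design_a": (0.90, 0.05),
    "design_b": (1.10, 0.50),
    "design_c": (1.00, 0.00),
}
best_observed = 1.0  # assumed best value so far, minimization

scores = {
    name: expected_improvement(mean, std, best_observed)
    for name, (mean, std) in candidates.items()
}
next_design = max(scores, key=scores.get)
print(scores, next_design)
```

In a Bayesian optimization loop, the design with the highest score would be evaluated next and the surrogate refit with the new observation.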
