Yifeng Cai

Connect the Dots: Knowledge Graph-Guided Crawler Attack on Retrieval-Augmented Generation Systems

Jan 22, 2026

Abstract:Retrieval-augmented generation (RAG) systems integrate document retrieval with large language models and have been widely adopted. However, in privacy-related scenarios, RAG introduces a new privacy risk: adversaries can issue carefully crafted queries to gradually exfiltrate sensitive content from the underlying corpus. Although recent studies have demonstrated multi-turn extraction attacks, they rely on heuristics and fail to perform long-term extraction planning. To address these limitations, we formulate the RAG extraction attack as an adaptive stochastic coverage problem (ASCP). In ASCP, each query is treated as a probabilistic action that aims to maximize conditional marginal gain (CMG), enabling principled long-term planning under uncertainty. However, integrating ASCP into practical RAG attacks faces three key challenges: unobservable CMG, intractability of the action space, and feasibility constraints. To overcome these challenges, we maintain a global attacker-side state to guide the attack. Building on this idea, we introduce RAGCRAWLER, which builds a knowledge graph to represent revealed information, uses this global state to estimate CMG, and plans queries in semantic space that target unretrieved regions. In comprehensive experiments across diverse RAG architectures and datasets, RAGCRAWLER consistently outperforms all baselines. It achieves up to 84.4% corpus coverage within a fixed query budget and delivers an average improvement of 20.7% over the top-performing baseline. It also maintains high semantic fidelity and strong content reconstruction accuracy at low attack cost. Crucially, RAGCRAWLER remains effective against advanced RAG systems that employ query rewriting and multi-query retrieval strategies. Our work reveals significant security gaps and highlights the pressing need for stronger safeguards for RAG.

Membership and Memorization in LLM Knowledge Distillation

Aug 09, 2025

Abstract:Recent advances in Knowledge Distillation (KD) aim to mitigate the high computational demands of Large Language Models (LLMs) by transferring knowledge from a large "teacher" to a smaller "student" model. However, students may inherit the teacher's privacy risks when the teacher is trained on private data. In this work, we systematically characterize and investigate membership and memorization privacy risks inherent in six LLM KD techniques. Using instruction-tuning settings that span seven NLP tasks, together with three teacher model families (GPT-2, LLAMA-2, and OPT) and student models of various sizes, we demonstrate that all existing LLM KD approaches carry membership and memorization privacy risks from the teacher to its students. However, the extent of privacy risks varies across different KD techniques. We systematically analyse how key LLM KD components (KD objective functions, student training data, and NLP tasks) impact such privacy risks. We also demonstrate a significant disagreement between memorization and membership privacy risks of LLM KD techniques. Finally, we characterize per-block privacy risk and demonstrate that the privacy risk varies across different blocks by a large margin.

Moss: Proxy Model-based Full-Weight Aggregation in Federated Learning with Heterogeneous Models

Mar 13, 2025

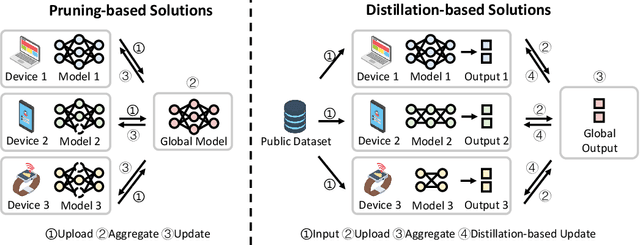

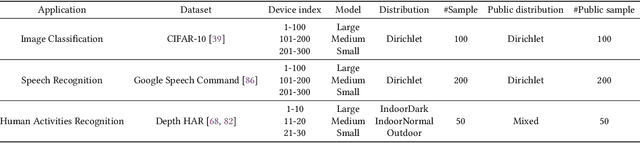

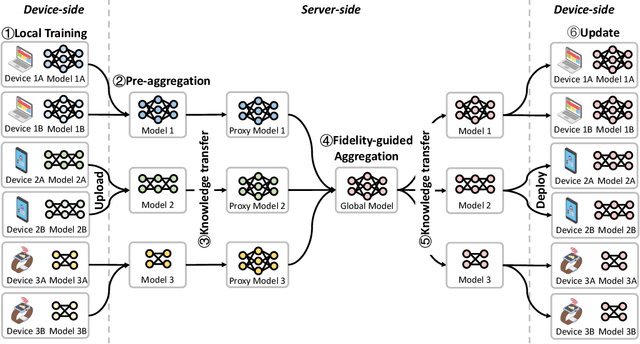

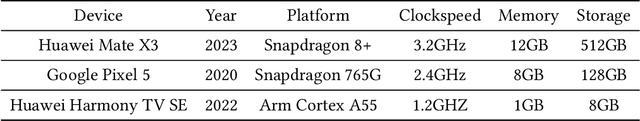

Abstract:Modern Federated Learning (FL) has become increasingly essential for handling highly heterogeneous mobile devices. Current approaches adopt a partial model aggregation paradigm that leads to sub-optimal model accuracy and higher training overhead. In this paper, we challenge the prevailing notion of partial-model aggregation and propose a novel "full-weight aggregation" method named Moss, which aggregates all weights within heterogeneous models to preserve comprehensive knowledge. Evaluation across various applications demonstrates that Moss significantly accelerates training, reduces on-device training time and energy consumption, enhances accuracy, and minimizes network bandwidth utilization when compared to state-of-the-art baselines.

TEESlice: Protecting Sensitive Neural Network Models in Trusted Execution Environments When Attackers have Pre-Trained Models

Nov 15, 2024

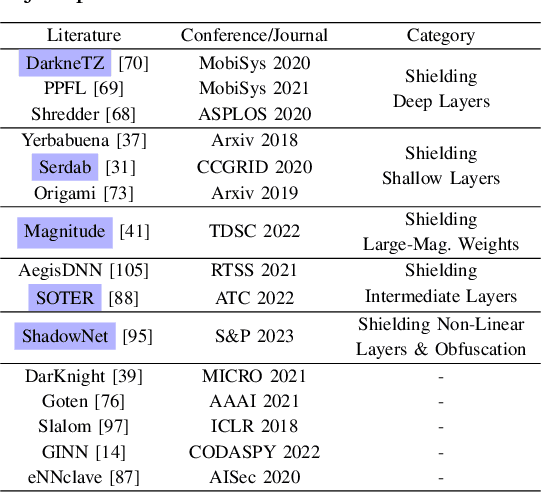

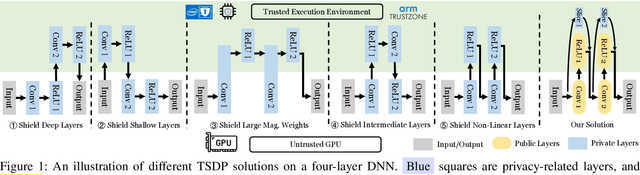

Abstract:Trusted Execution Environments (TEEs) are used to safeguard on-device models. However, directly employing TEEs to secure the entire DNN model is challenging due to their limited computational speed. GPUs can accelerate DNN computation, but widely available commercial GPUs usually lack security protection. To this end, researchers have introduced TEE-Shielded DNN Partition (TSDP), a method that protects privacy-sensitive weights within TEEs and offloads insensitive weights to GPUs. Nevertheless, current methods do not consider the presence of a knowledgeable adversary who can access abundant publicly available pre-trained models and datasets. This paper investigates the security of existing methods against such a knowledgeable adversary and reveals their inability to fulfill their security promises. Consequently, we introduce a novel partition-before-training strategy, which effectively separates privacy-sensitive weights from other components of the model. Our evaluation demonstrates that our approach can offer full model protection with a computational cost reduced by a factor of 10. In addition to traditional CNN models, we also demonstrate scalability to large language models. Our approach can compress the private functionalities of a large language model into lightweight slices and achieve the same level of protection as the shielding-whole-model baseline.

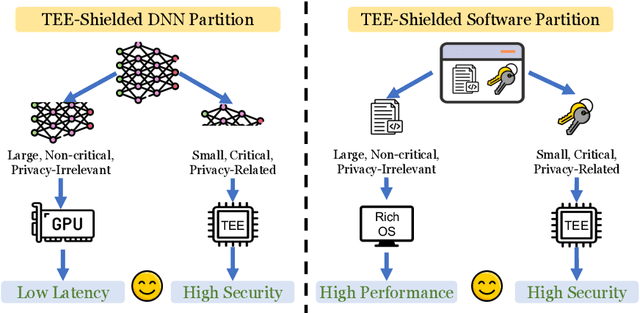

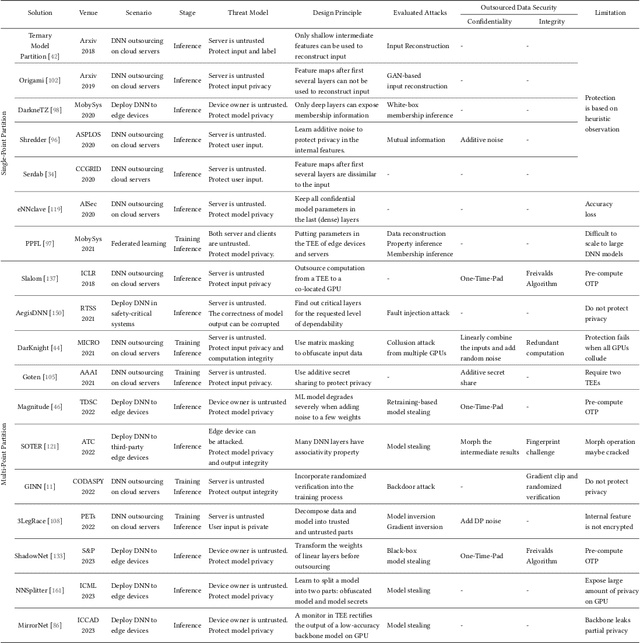

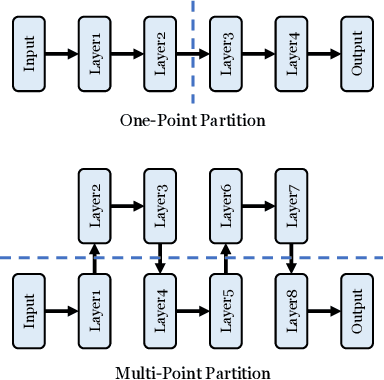

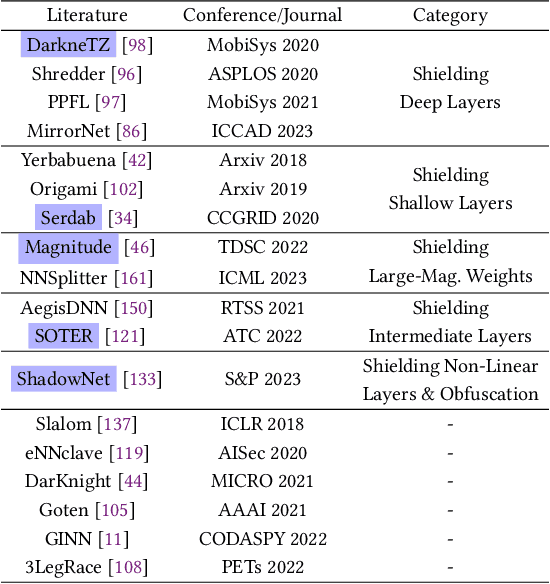

No Privacy Left Outside: On the Security of TEE-Shielded DNN Partition for On-Device ML

Oct 11, 2023

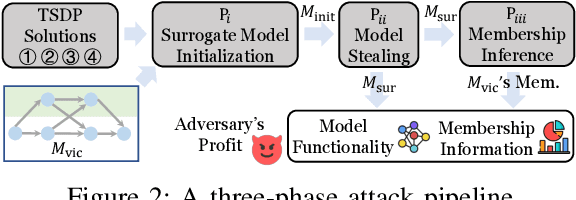

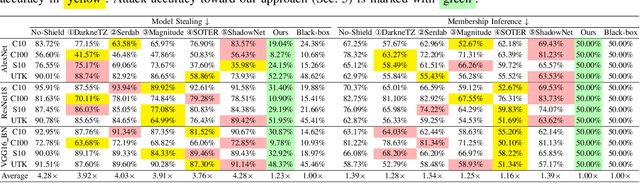

Abstract:On-device ML introduces new security challenges: DNN models become white-box accessible to device users. Based on white-box information, adversaries can conduct effective model stealing (MS) and membership inference attacks (MIA). Using Trusted Execution Environments (TEEs) to shield on-device DNN models aims to downgrade (easy) white-box attacks to (harder) black-box attacks. However, one major shortcoming is the sharply increased latency (up to 50X). To accelerate TEE-shielded DNN computation with GPUs, researchers have proposed several model partition techniques. These solutions, referred to as TEE-Shielded DNN Partition (TSDP), partition a DNN model into two parts, offloading the privacy-insensitive part to the GPU while shielding the privacy-sensitive part within the TEE. This paper benchmarks existing TSDP solutions using both MS and MIA across a variety of DNN models, datasets, and metrics. We show that existing TSDP solutions are vulnerable to privacy-stealing attacks and are not as safe as commonly believed. We also unveil the inherent difficulty in deciding optimal DNN partition configurations (i.e., the highest security with minimal utility cost) for present TSDP solutions. The experiments show that such "sweet spot" configurations vary across datasets and models. Based on lessons learned from the experiments, we present TEESlice, a novel TSDP method that defends against MS and MIA during DNN inference. TEESlice follows a partition-before-training strategy, which allows for accurate separation of privacy-related weights from public weights. TEESlice delivers the same security protection as shielding the entire DNN model inside the TEE (the "upper-bound" security guarantee) with over 10X less overhead (in both experimental and real-world environments) than prior TSDP solutions and no accuracy loss.

DistFL: Distribution-aware Federated Learning for Mobile Scenarios

Oct 22, 2021

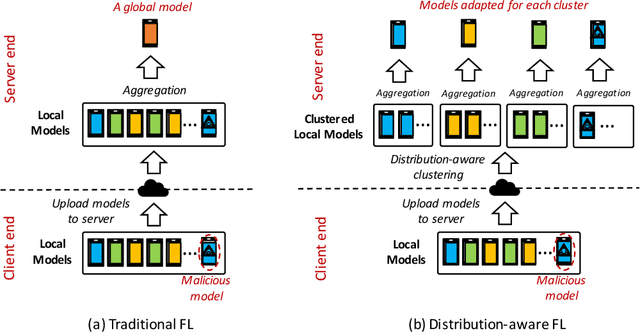

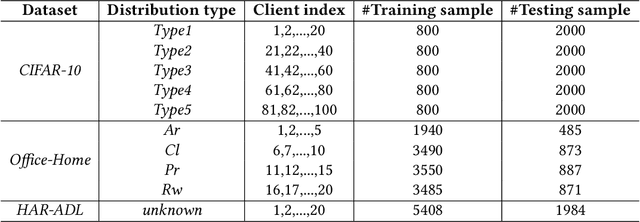

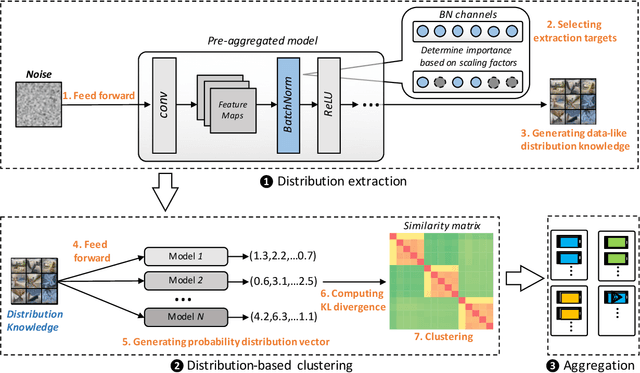

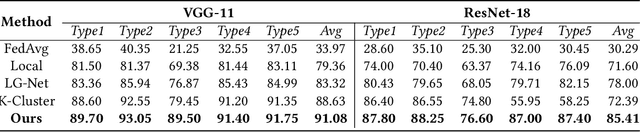

Abstract:Federated learning (FL) has emerged as an effective solution to decentralized and privacy-preserving machine learning for mobile clients. While traditional FL has demonstrated its superiority, it ignores the non-iid (non-independent and identically distributed) situation, which is widespread in mobile scenarios. Failing to handle non-iid situations can cause problems such as degraded performance and exposure to attacks. Previous studies focus on the "symptoms" directly: they try to improve accuracy or detect possible attacks by adding extra steps to conventional FL models. However, these techniques overlook the root cause of the "symptoms": blindly aggregating models trained on non-iid distributions. In this paper, we try to fundamentally address the issue by decomposing the overall non-iid situation into several iid clusters and conducting aggregation within each cluster. Specifically, we propose DistFL, a novel framework to achieve automated and accurate Distribution-aware Federated Learning in a cost-efficient way. DistFL achieves clustering by extracting and comparing the distribution knowledge from the uploaded models. With this framework, we are able to generate multiple personalized models with distinctive distributions and assign them to the corresponding clients. Extensive experiments on mobile scenarios with popular model architectures have demonstrated the effectiveness of DistFL.

TransTailor: Pruning the Pre-trained Model for Improved Transfer Learning

Mar 02, 2021

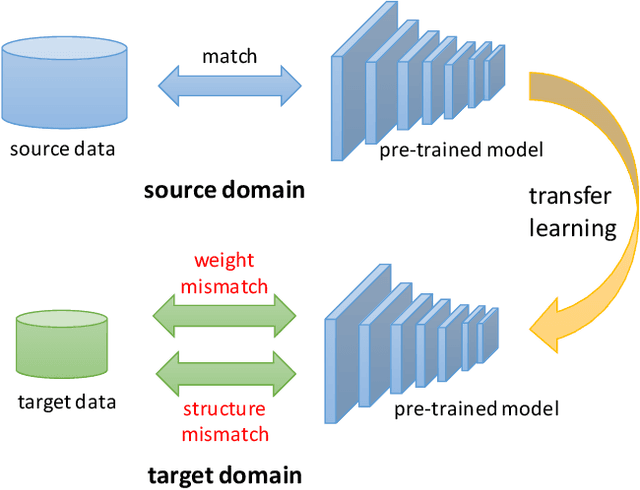

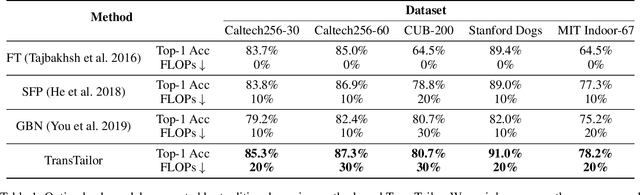

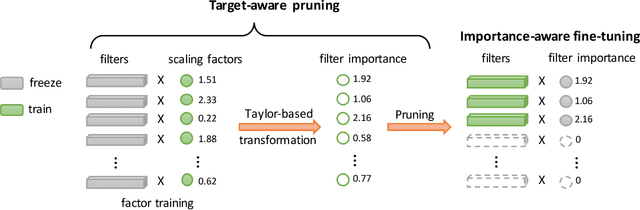

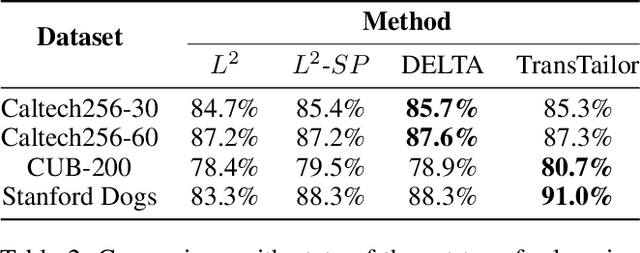

Abstract:The increasing availability of pre-trained models has significantly improved performance on limited-data tasks through transfer learning. However, progress on transfer learning mainly focuses on optimizing the weights of pre-trained models, which ignores the structure mismatch between the model and the target task. This paper aims to improve transfer performance from another angle: in addition to tuning the weights, we tune the structure of pre-trained models in order to better match the target task. To this end, we propose TransTailor, which prunes the pre-trained model for improved transfer learning. Unlike traditional pruning pipelines, we prune and fine-tune the pre-trained model according to target-aware weight importance, generating an optimal sub-model tailored for a specific target task. In this way, we transfer a more suitable sub-structure that can be applied during fine-tuning to benefit the final performance. Extensive experiments on multiple pre-trained models and datasets demonstrate that TransTailor outperforms traditional pruning methods and achieves competitive or even better performance than other state-of-the-art transfer learning methods while using a smaller model. Notably, on the Stanford Dogs dataset, TransTailor achieves a 2.7% accuracy improvement over other transfer methods with 20% fewer FLOPs.
