Yi Isaac Yang

Enhanced Sampling, Public Dataset and Generative Model for Drug-Protein Dissociation Dynamics

Apr 25, 2025

Abstract: Drug-protein binding and dissociation dynamics are fundamental to understanding molecular interactions in biological systems. While many tools for studying drug-protein interactions have emerged, especially artificial intelligence (AI)-based generative models, predictive tools for binding/dissociation kinetics and dynamics are still limited. We propose a novel research paradigm that combines molecular dynamics (MD) simulations, enhanced sampling, and AI generative models to address this issue. We propose an enhanced sampling strategy to efficiently sample the drug-protein dissociation process in MD simulations and estimate the free energy surface (FES). Based on this sampling strategy, we constructed an MD simulation pipeline and generated a dataset of 26,612 drug-protein dissociation trajectories containing about 13 million frames. We named this dissociation dynamics dataset DD-13M and used it to train a deep equivariant generative model, UnbindingFlow, which can generate collision-free dissociation trajectories. The DD-13M database and the UnbindingFlow model represent a significant advancement in computational structural biology, and we anticipate their broad applicability in machine learning studies of drug-protein interactions. Our ongoing efforts focus on extending this methodology to a broader spectrum of drug-protein complexes and exploring novel applications in pathway prediction.
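The abstract does not say how the FES is estimated. As a generic illustration only (not the DD-13M sampling strategy), a free energy surface along one collective variable s can be estimated from sampled values as F(s) = -kT ln P(s). If the samples came from a simulation with a static bias V(s), each sample can be reweighted by exp(V(s)/kT). The function `free_energy_surface` and its parameters are hypothetical; time-dependent biases, as used in many enhanced sampling methods, need more elaborate reweighting than this sketch shows.

```python
import math
from collections import defaultdict

def free_energy_surface(samples, bin_width, kT=1.0, bias=None):
    """Histogram estimate of F(s) = -kT ln P(s) on a 1D collective variable.

    samples: sampled values of the collective variable s.
    bias:    optional *static* bias V(s) that was added to the potential during
             sampling (an assumption of this sketch); each sample is then
             reweighted by exp(V(s) / kT) to recover unbiased probabilities.
    Returns {bin_center: F}, shifted so the lowest bin has F = 0.
    """
    weight = defaultdict(float)
    for s in samples:
        wt = math.exp(bias(s) / kT) if bias is not None else 1.0
        weight[math.floor(s / bin_width)] += wt
    total = sum(weight.values())
    # Convert normalized bin weights to free energies at the bin centers.
    fes = {(k + 0.5) * bin_width: -kT * math.log(w / total) for k, w in weight.items()}
    f_min = min(fes.values())
    return {center: f - f_min for center, f in sorted(fes.items())}

# Toy data: three samples in the first bin, one in the second, no bias.
# The second bin should come out about kT * ln(3), roughly 1.10, above the first.
print(free_energy_surface([0.1, 0.2, 0.3, 1.1], bin_width=1.0))
```

Reweighting matters because a bias that pushes the system over barriers also distorts how often each region is visited; without the exp(V/kT) factor the histogram would describe the biased, not the physical, landscape.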

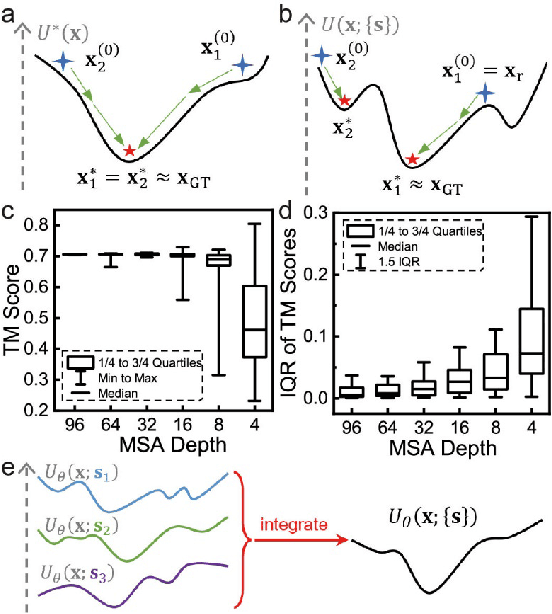

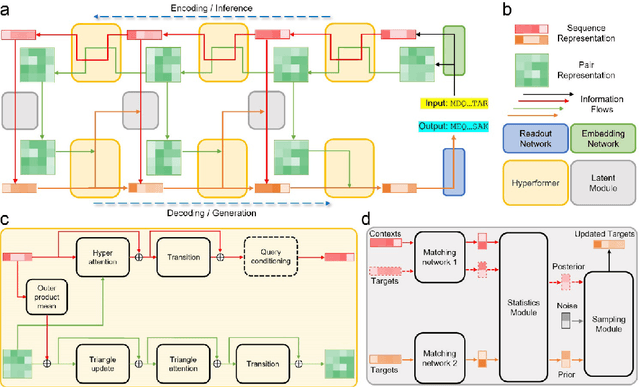

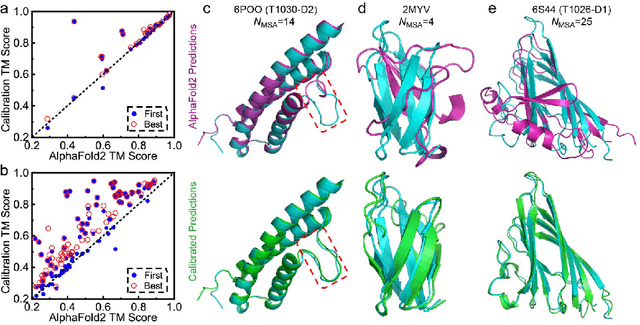

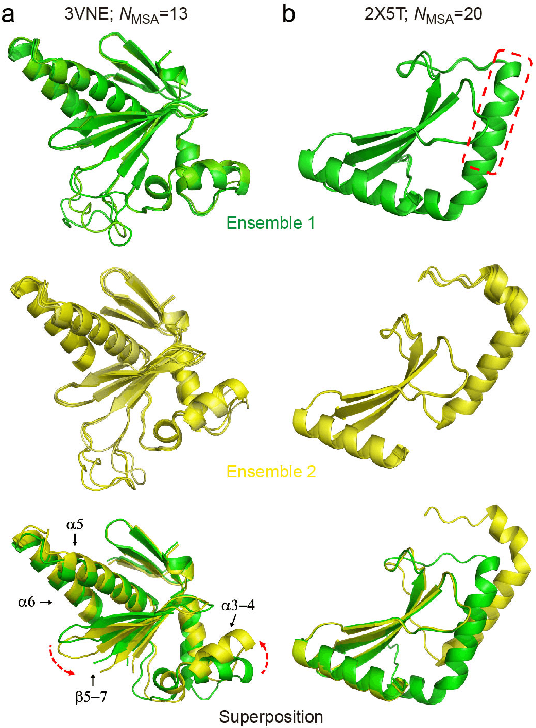

Few-Shot Learning of Accurate Folding Landscape for Protein Structure Prediction

Aug 20, 2022

Abstract: Data-driven predictive methods that can efficiently and accurately transform protein sequences into biologically active structures are highly valuable for scientific research and therapeutic development. Determining an accurate folding landscape using co-evolutionary information is fundamental to the success of modern protein structure prediction methods. As the state of the art, AlphaFold2 has dramatically raised accuracy without performing explicit co-evolutionary analysis. Nevertheless, its performance still depends strongly on the available sequence homologs. We investigated the cause of this dependence and present EvoGen, a meta generative model, to remedy the underperformance of AlphaFold2 on targets with poor MSAs. EvoGen allows us to manipulate the folding landscape either by denoising the searched MSA or by generating a virtual MSA, and it helps AlphaFold2 fold accurately in the low-data regime or even achieve encouraging performance with single-sequence predictions. Being able to make accurate predictions with few-shot MSAs not only helps AlphaFold2 generalize better to orphan sequences, but also democratizes its use for high-throughput applications. In addition, EvoGen combined with AlphaFold2 yields a probabilistic structure generation method that can explore alternative conformations of protein sequences, and the task-aware differentiable algorithm for sequence generation will benefit other related tasks, including protein design.

Molecular CT: Unifying Geometry and Representation Learning for Molecules at Different Scales

Dec 24, 2020

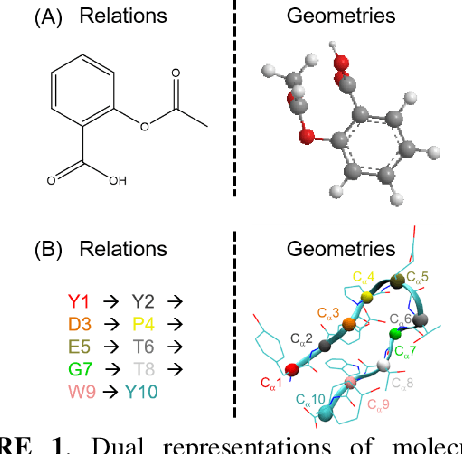

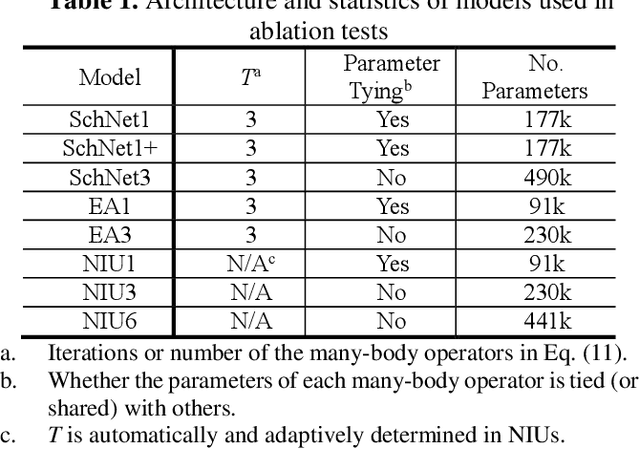

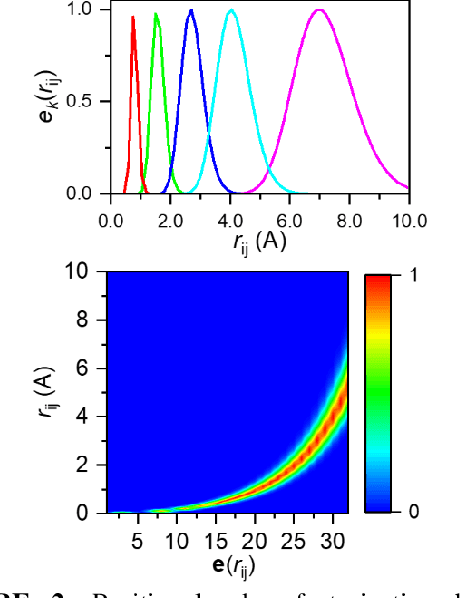

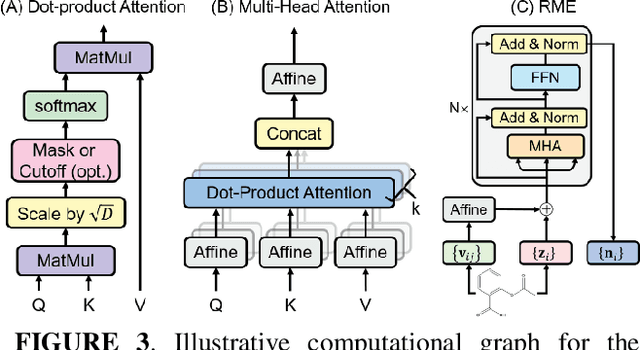

Abstract: Deep learning is changing many areas of molecular physics, and it has shown great potential to deliver new solutions to challenging molecular modeling problems. Along with this trend comes an increasing demand for expressive and versatile neural network architectures that are compatible with molecular systems. A new deep neural network architecture, the Molecular Configuration Transformer (Molecular CT), is introduced for this purpose. Molecular CT is composed of a relation-aware encoder module and a computationally universal geometry learning unit, which allows it to account for relational constraints between particles while remaining scalable to different numbers of particles and invariant with respect to translations and rotations. Its computational efficiency and universality make Molecular CT versatile for a variety of molecular learning scenarios and especially appealing for transferable representation learning across different molecular systems. As examples, we show that Molecular CT enables representation learning for molecular systems at different scales and achieves comparable or improved results on common benchmarks using a more lightweight structure than baseline models.
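Invariance to translations and rotations is easy to check numerically. A common way to obtain it, assumed here for illustration and not taken from the Molecular CT paper, is to build features only from interparticle distances, which no rigid motion (or reflection) changes and which are defined for any number of particles. The helpers `pairwise_distances`, `rotate_z`, `translate` and `max_abs_diff` are hypothetical names, and the rotation is about the z axis only, as one example of a rigid rotation.

```python
import math

def pairwise_distances(coords):
    """Interparticle distance matrix for a list of (x, y, z) positions.
    Works for any number of particles."""
    return [[math.dist(p, q) for q in coords] for p in coords]

def rotate_z(coords, angle):
    """Rigidly rotate all positions by `angle` radians about the z axis."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y, z) for x, y, z in coords]

def translate(coords, shift):
    """Rigidly shift all positions by the vector `shift`."""
    return [tuple(u + d for u, d in zip(p, shift)) for p in coords]

def max_abs_diff(m1, m2):
    """Largest elementwise difference between two equally sized matrices."""
    return max(abs(u - v) for r1, r2 in zip(m1, m2) for u, v in zip(r1, r2))

# A made-up three-particle configuration (coordinates in arbitrary units).
mol = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 2.0, 0.5)]
moved = translate(rotate_z(mol, 0.7), (3.0, -1.0, 2.0))

# Raw coordinates change under the rigid motion, but the distance features do not
# (up to floating-point round-off).
print(mol[1], "->", moved[1])
print("max distance change:", max_abs_diff(pairwise_distances(mol), pairwise_distances(moved)))
```

Note that reordering the particles permutes the rows and columns of the distance matrix rather than leaving it unchanged, so permutation handling is a separate design concern.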

Deep Reinforcement Learning of Transition States

Nov 13, 2020

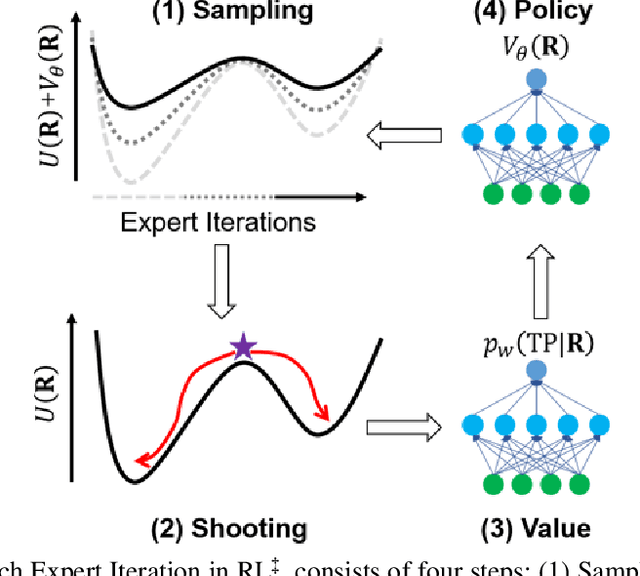

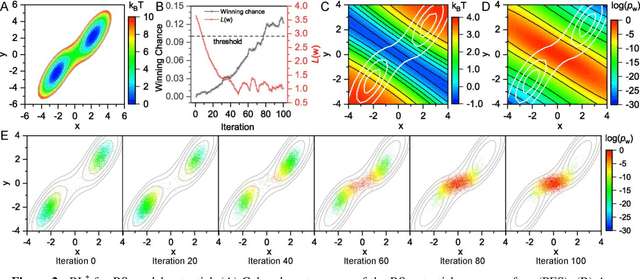

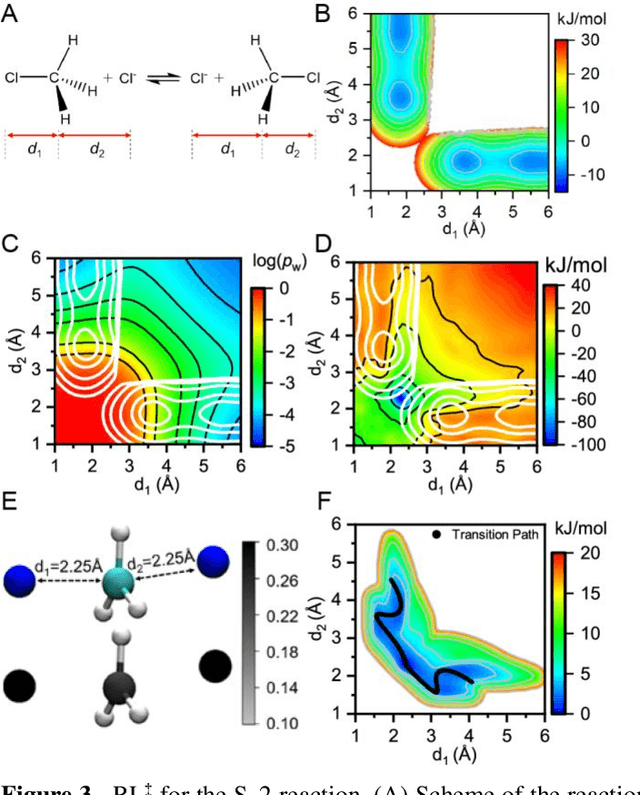

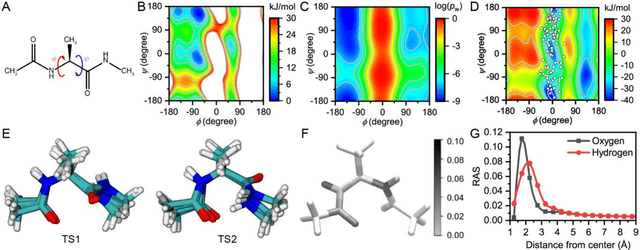

Abstract: Combining reinforcement learning (RL) and molecular dynamics (MD) simulations, we propose a machine-learning approach (RL‡) to automatically unravel chemical reaction mechanisms. In RL‡, locating the transition state of a chemical reaction is formulated as a game, where a virtual player is trained to shoot simulation trajectories connecting the reactant and product. The player uses two functions, one for value estimation and the other for policy making, to iteratively improve its chance of winning this game. We can interpret the reaction mechanism directly from the value function. Meanwhile, the policy function enables efficient sampling of transition paths, which can be further used to analyze the reaction dynamics and kinetics. Through multiple experiments, we show that RL‡ can be trained tabula rasa, which allows us to reveal chemical reaction mechanisms with minimal subjective bias.
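To make the "game" concrete, consider a toy version of its win condition. This is not RL‡: there is no learned value or policy function, only a brute-force Monte Carlo estimate for a 1D random walk with the reactant at x ≤ -1 and the product at x ≥ 1 (all hypothetical choices). The fraction of shots from a point x0 that reach the product first estimates the committor p_B(x0), and the transition state region is conventionally where p_B = 1/2. Assuming the backward and forward halves of a two-way shot end independently, a shot connects reactant and product with probability 2 p_B (1 - p_B), which is largest at p_B = 1/2; this is the kind of quantity a value function could learn to predict, though the paper's exact definition may differ. The names `commit_probability` and `connect_probability` are illustrative.

```python
import random

def commit_probability(x0, n_shots=2000, step=0.1, a=-1.0, b=1.0, seed=0):
    """Monte Carlo estimate of p_B(x0): the fraction of unbiased random walks
    started at x0 that reach the product boundary b before the reactant boundary a."""
    rng = random.Random(seed)  # fixed seed for reproducibility
    hits_product = 0
    for _ in range(n_shots):
        x = x0
        while a < x < b:  # propagate until one basin is reached
            x += rng.gauss(0.0, step)
        if x >= b:
            hits_product += 1
    return hits_product / n_shots

def connect_probability(p_b):
    """Chance that a two-way shot links reactant and product, assuming the
    two halves end in the product basin independently with probability p_b."""
    return 2.0 * p_b * (1.0 - p_b)

# For free diffusion the exact committor is (x0 - a) / (b - a), so the estimates
# should be near 0.25, 0.5 and 0.75 (small deviations come from sampling noise
# and from the discrete steps overshooting the boundaries).
for x0 in (-0.5, 0.0, 0.5):
    p = commit_probability(x0)
    print(f"x0={x0:+.1f}  p_B~{p:.2f}  P(connect)~{connect_probability(p):.2f}")
```

Brute-force estimates like this cost many trajectories per point, which is why learning a value function that generalizes across shooting points is attractive in higher dimensions.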

A Perspective on Deep Learning for Molecular Modeling and Simulations

Apr 25, 2020

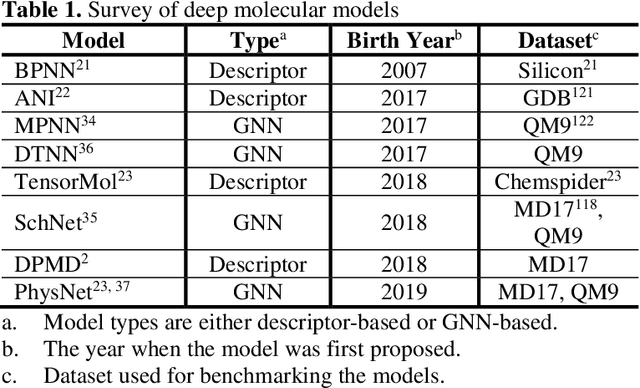

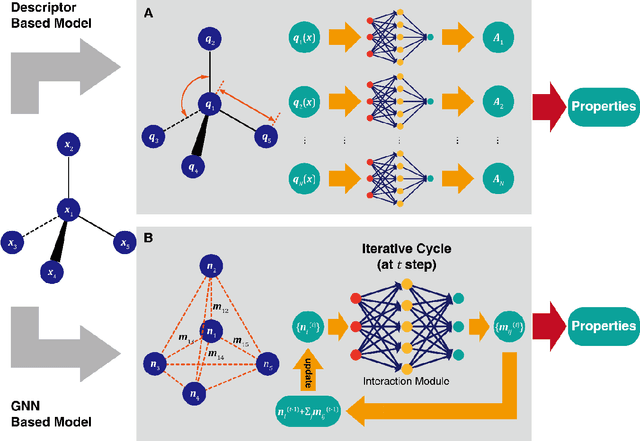

Abstract: Deep learning is transforming many areas of science, and it has great potential in modeling molecular systems. However, unlike the mature deployment of deep learning in computer vision and natural language processing, its development in molecular modeling and simulations is still at an early stage, largely because the inductive biases of molecules are completely different from those of images or texts. Building on these differences, we first reviewed the limitations of traditional deep learning models from the perspective of molecular physics and summarized some relevant technical advances at the interface between molecular modeling and deep learning. We do not focus merely on ever more complex neural network models; instead, we emphasize the theories and ideas behind modern deep learning. We hope that transferring these ideas to molecular modeling will create new opportunities. For this purpose, we summarized several representative applications, ranging from supervised to unsupervised and reinforcement learning, and discussed their connections with emerging trends in deep learning. Finally, we outline promising directions that may help address the existing issues in the current framework of deep molecular modeling.

Learning Clustered Representation for Complex Free Energy Landscapes

Jun 07, 2019

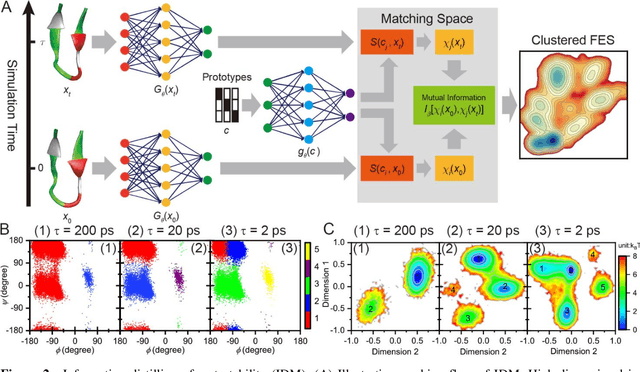

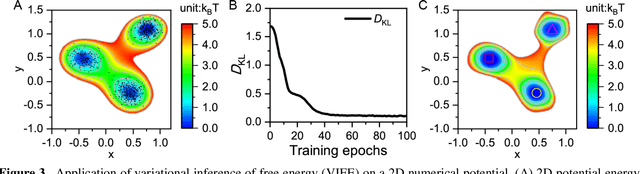

Abstract: In this paper, we first analyzed the inductive bias underlying data scattered across complex free energy landscapes (FEL) and exploited it to train deep neural networks that yield a reduced and clustered representation of the FEL. Our parametric method, called Information Distilling of Metastability (IDM), is end-to-end differentiable and thus scalable to ultra-large datasets. IDM is also a clustering algorithm: it clusters the samples while reducing their dimensionality. In addition, as an unsupervised learning method, IDM differs from many existing dimensionality reduction and clustering methods in that it requires neither a hand-picked distance metric nor the ground-truth number of clusters, and in that it can be used to unroll and zoom in on the hierarchical FEL at different timescales. Through multiple experiments, we show that IDM can achieve physically meaningful representations that partition the FEL into well-defined metastable states and are hence amenable to downstream tasks such as mechanism analysis and kinetic modeling.