Yann Soullard

Have convolutions already made recurrence obsolete for unconstrained handwritten text recognition?

Dec 09, 2020

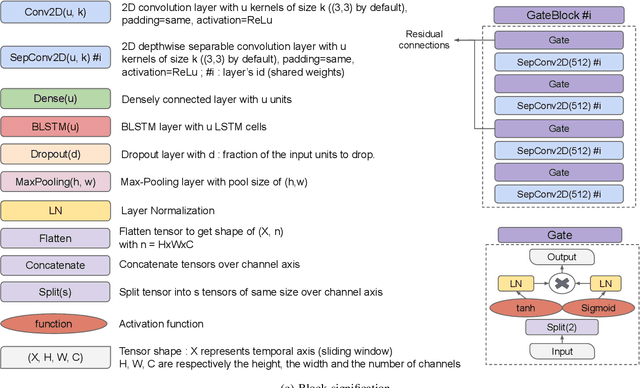

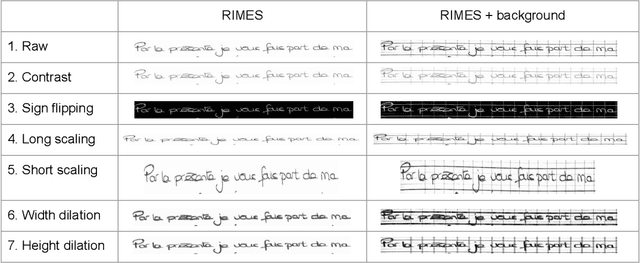

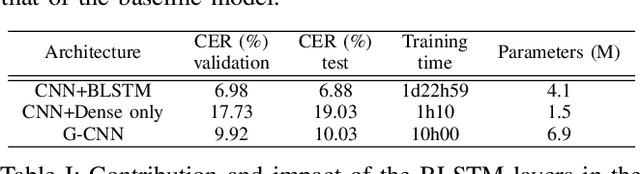

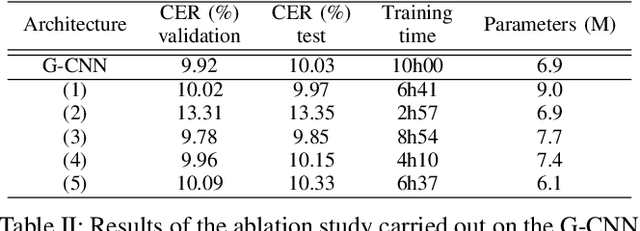

Abstract: Unconstrained handwritten text recognition remains an important challenge for deep neural networks. In recent years, recurrent networks, and more specifically Long Short-Term Memory networks, have achieved state-of-the-art performance in this field. Nevertheless, they have a large number of trainable parameters, and training recurrent neural networks does not support parallelism. This directly increases the training time of such architectures and, in turn, the time required to explore various architectures. Recently, recurrence-free architectures such as Fully Convolutional Networks with gated mechanisms have been proposed as one possible alternative that achieves competitive results. In this paper, we explore convolutional architectures and compare them to a CNN+BLSTM baseline. We propose an experimental study of different architectures on an offline handwriting recognition task using the RIMES dataset and a modified version of it in which the images are augmented with notebook backgrounds consisting of printed grids.

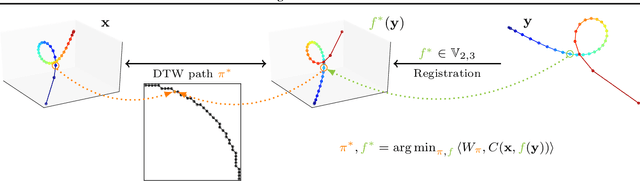

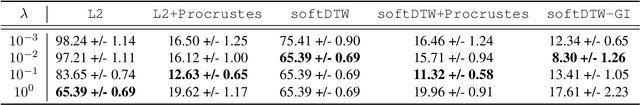

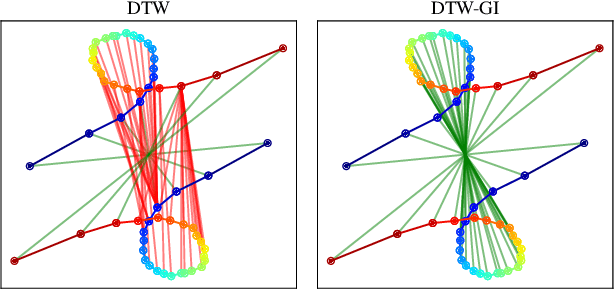

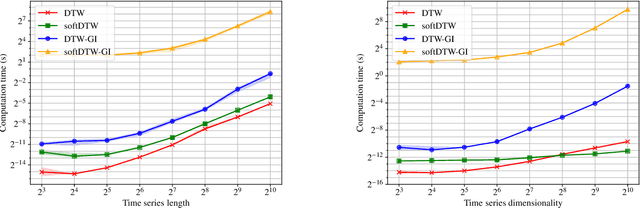

Time Series Alignment with Global Invariances

Feb 10, 2020

Abstract: In this work we address the problem of comparing time series while taking into account both feature space transformation and temporal variability. The proposed framework combines a latent global transformation of the feature space with the widely used Dynamic Time Warping (DTW). The latent global transformation captures the feature invariance, while DTW (or its smooth counterpart, soft-DTW) deals with the temporal shifts. We cast the problem as a joint optimization over the global transformation and the temporal alignments. The versatility of our framework allows for several variants depending on the invariance class at stake. Among our contributions, we define a differentiable loss for time series and present two algorithms for computing time series barycenters under our new geometry. We illustrate the value of our approach on both simulated and real-world data.
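The joint optimization described in this abstract can be illustrated with a small sketch. The code below is not the authors' implementation: it assumes that both series share the same feature dimension, that the invariance class is the orthogonal group, and that a simple alternation is used (classic DTW for the alignment, then an orthogonal Procrustes step for the transformation). The function names `dtw_path` and `align_with_rotation` are hypothetical.

```python
import numpy as np

def dtw_path(X, Y):
    """Classic DTW with squared Euclidean local cost; returns (cost, path)."""
    n, m = len(X), len(Y)
    # Pairwise squared distances between frames of X and frames of Y.
    D = ((X[:, None, :] - Y[None, :, :]) ** 2).sum(axis=2)
    acc = np.full((n + 1, m + 1), np.inf)
    acc[0, 0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            acc[i, j] = D[i - 1, j - 1] + min(
                acc[i - 1, j], acc[i, j - 1], acc[i - 1, j - 1]
            )
    # Backtrack from the last cell to the first to recover the alignment.
    i, j = n, m
    path = [(i - 1, j - 1)]
    while (i, j) != (1, 1):
        steps = [(i - 1, j - 1), (i - 1, j), (i, j - 1)]
        i, j = min(steps, key=lambda s: acc[s])
        path.append((i - 1, j - 1))
    return acc[n, m], path[::-1]

def align_with_rotation(X, Y, n_iter=10):
    """Alternate between DTW and an orthogonal map P applied to Y's features.

    Assumption for this sketch: X and Y have the same feature dimension.
    """
    P = np.eye(X.shape[1])
    cost, path = dtw_path(X, Y @ P.T)
    for _ in range(n_iter):
        # With the path fixed, the best orthogonal P solves a Procrustes
        # problem: maximize <P, M> with M = sum of x_i y_j^T over the path.
        M = sum(np.outer(X[i], Y[j]) for i, j in path)
        U, _, Vt = np.linalg.svd(M)
        P = U @ Vt
        # With P fixed, the best alignment is given by plain DTW.
        cost, path = dtw_path(X, Y @ P.T)
    return cost, P, path
```

Each step minimizes the same objective over one block of variables, so the cost cannot increase from one iteration to the next. Like any alternating scheme, it may still stop at a local minimum.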

CTCModel: a Keras Model for Connectionist Temporal Classification

Jan 23, 2019

Abstract: We report an extension of a Keras Model, called CTCModel, to perform Connectionist Temporal Classification (CTC) in a transparent way. Combined with Recurrent Neural Networks, Connectionist Temporal Classification is the reference method for dealing with unsegmented input sequences, i.e. with data consisting of pairs of observation and label sequences where each label is related to a subset of observation frames. CTCModel makes use of the CTC implementation in the TensorFlow backend for training and predicting sequences of labels using Keras. It consists of three branches made of Keras models: one for training, which computes the CTC loss function; one for predicting, which provides sequences of labels; and one for evaluating, which returns standard metrics for analyzing sequences of predictions.
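To make the relation between frames and labels concrete, here is a minimal sketch of CTC best-path (greedy) decoding in plain Python. It does not use or reproduce the CTCModel API; the function name `ctc_greedy_decode` and the choice of index 0 for the blank label are assumptions made for this illustration.

```python
def ctc_greedy_decode(frame_scores, blank=0):
    """Best-path CTC decoding: take the top label per frame, merge
    consecutive repeats, then drop blanks.

    frame_scores: one list of per-class scores for each observation frame.
    blank: index of the CTC blank label (assumed to be 0 in this sketch).
    """
    # Most likely class index for each frame.
    best = [max(range(len(f)), key=f.__getitem__) for f in frame_scores]
    decoded = []
    prev = None
    for k in best:
        # Emit a label only when it differs from the previous frame's
        # label and is not the blank symbol.
        if k != prev and k != blank:
            decoded.append(k)
        prev = k
    return decoded
```

A blank frame between two identical labels keeps them as two separate outputs, which is how CTC can represent repeated characters without a frame-level segmentation.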

A Unified Multilingual Handwriting Recognition System using multigrams sub-lexical units

Aug 28, 2018

Abstract: We address the design of a unified multilingual system for handwriting recognition. Most multilingual systems rest on specialized models, each trained on a single language, one of which is selected at test time. While some recognition systems are based on a unified optical model, dealing with a unified language model remains a major issue, as traditional language models are generally trained on corpora composed of large word lexicons per language. Here, we offer a solution by considering language models based on sub-lexical units, called multigrams. Dealing with multigrams strongly reduces the lexicon size and thus decreases the language model complexity. This makes possible the design of an end-to-end unified multilingual recognition system where both a single optical model and a single language model are trained on all the languages. We discuss the impact of the language unification on each model and show that our system matches the performance of state-of-the-art methods with a strong reduction in complexity.

* preprint
