Thierry Paquet

LITIS

Classifying the Unknown: In-Context Learning for Open-Vocabulary Text and Symbol Recognition

Apr 09, 2025

Abstract:We introduce Rosetta, a multimodal model that uses Multimodal In-Context Learning (MICL) to classify sequences of novel script patterns in documents from only a few examples, thus eliminating the need for explicit retraining. To enhance contextual learning, we designed a dataset generation process that ensures varying degrees of contextual informativeness, improving the model's ability to exploit context across different scenarios. A key strength of our method is the use of a Context-Aware Tokenizer (CAT), which enables open-vocabulary classification. This allows the model to classify text and symbol patterns across an unlimited range of classes, extending its classification capabilities beyond the alphabet of patterns seen during training. As a result, it unlocks applications such as the recognition of new alphabets and languages. Experiments on synthetic datasets demonstrate the potential of Rosetta to successfully classify Out-Of-Distribution visual patterns and diverse sets of alphabets and scripts, including but not limited to Chinese, Greek, Russian, French, Spanish, and Japanese.

VISTA-OCR: Towards generative and interactive end to end OCR models

Apr 04, 2025

Abstract:We introduce VISTA-OCR (Vision and Spatially-aware Text Analysis OCR), a lightweight architecture that unifies text detection and recognition within a single generative model. Unlike conventional methods that require separate branches with dedicated parameters for text recognition and detection, our approach leverages a Transformer decoder to sequentially generate text transcriptions and their spatial coordinates in a unified branch. Built on an encoder-decoder architecture, VISTA-OCR is progressively trained, starting with the visual feature extraction phase, followed by multitask learning with multimodal token generation. To address the increasing demand for versatile OCR systems capable of advanced tasks, such as content-based text localization, we introduce new prompt-controllable OCR tasks during pre-training. To enhance the model's capabilities, we built a new dataset composed of real-world examples enriched with bounding box annotations and synthetic samples. Although recent Vision Large Language Models (VLLMs) can efficiently perform these tasks, their high computational cost remains a barrier for practical deployment. In contrast, our VISTA$_{\text{omni}}$ variant processes both handwritten and printed documents with only 150M parameters, interactively, by prompting. Extensive experiments on multiple datasets demonstrate that VISTA-OCR achieves better performance than state-of-the-art specialized models on standard OCR tasks while showing strong potential for more sophisticated OCR applications, addressing the growing need for interactive OCR systems. All code and annotations for VISTA-OCR will be made publicly available upon acceptance.

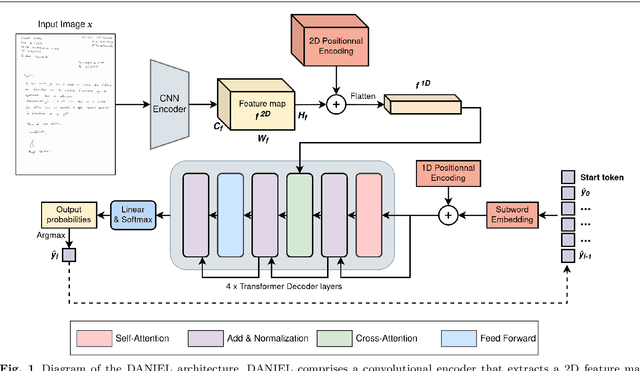

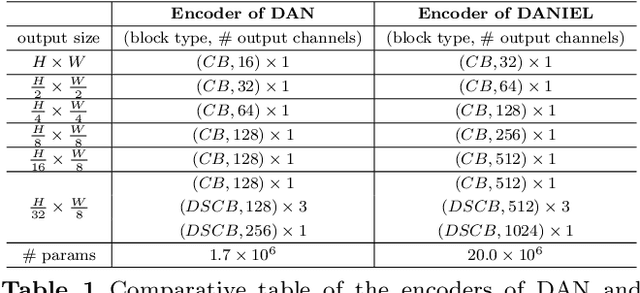

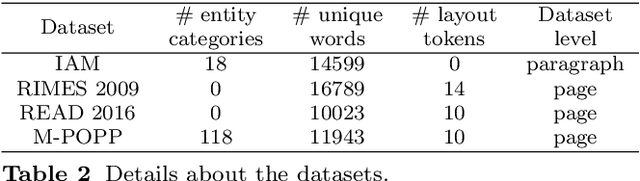

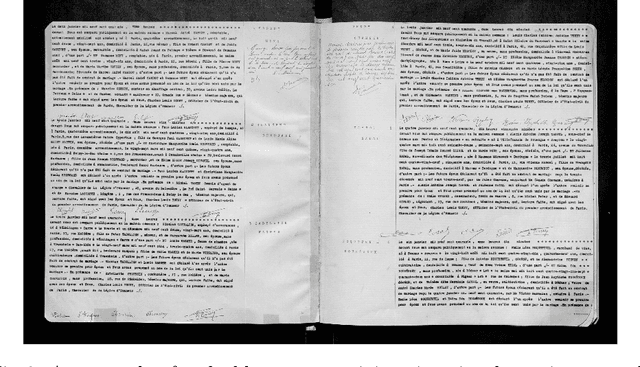

DANIEL: A fast Document Attention Network for Information Extraction and Labelling of handwritten documents

Jul 12, 2024

Abstract:Information extraction from handwritten documents traditionally involves three distinct steps: Document Layout Analysis, Handwritten Text Recognition, and Named Entity Recognition. Recent approaches have attempted to integrate these steps into a single process using fully end-to-end architectures. Despite this, these integrated approaches have not yet matched the performance of language models applied to information extraction in plain text. In this paper, we introduce DANIEL (Document Attention Network for Information Extraction and Labelling), a fully end-to-end architecture integrating a language model and designed for comprehensive handwritten document understanding. DANIEL performs layout recognition, handwriting recognition, and named entity recognition on full-page documents. Moreover, it can simultaneously learn across multiple languages, layouts, and tasks. For named entity recognition, the ontology to be applied can be specified via the input prompt. The architecture employs a convolutional encoder capable of processing images of any size without resizing, paired with an autoregressive decoder based on a transformer-based language model. DANIEL achieves competitive results on four datasets, including a new state-of-the-art performance on RIMES 2009 and M-POPP for Handwritten Text Recognition, and IAM NER for Named Entity Recognition. Furthermore, DANIEL is much faster than existing approaches. We provide the source code and the weights of the trained models at https://github.com/Shulk97/daniel.

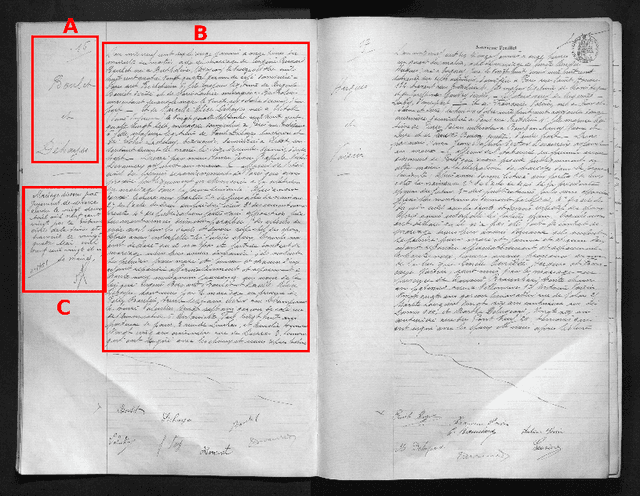

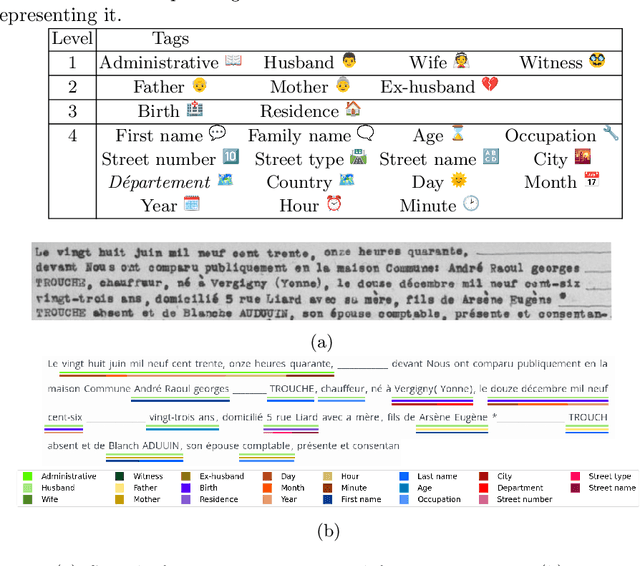

End-to-end information extraction in handwritten documents: Understanding Paris marriage records from 1880 to 1940

Apr 30, 2024

Abstract:The EXO-POPP project aims to establish a comprehensive database comprising 300,000 marriage records from Paris and its suburbs, spanning the years 1880 to 1940, which are preserved in over 130,000 scans of double pages. Each marriage record may encompass up to 118 distinct types of information that require extraction from plain text. In this paper, we introduce the M-POPP dataset, a subset of the M-POPP database with annotations for full-page text recognition and information extraction in both handwritten and printed documents, which is now publicly available. We present a fully end-to-end architecture adapted from the DAN, designed to perform both handwritten text recognition and information extraction directly from page images without the need for explicit segmentation. We showcase the information extraction capabilities of this architecture by achieving a new state of the art for full-page Information Extraction on Esposalles, and we use this architecture as a baseline for the M-POPP dataset. We also assess and compare how different encoding strategies for named entities in the text affect the performance of jointly recognizing handwritten text and extracting information from full pages.

Sheet Music Transformer: End-To-End Optical Music Recognition Beyond Monophonic Transcription

Feb 12, 2024

Abstract:State-of-the-art end-to-end Optical Music Recognition (OMR) has, to date, primarily been carried out using monophonic transcription techniques to handle complex score layouts, such as polyphony, often by resorting to simplifications or specific adaptations. Despite their efficacy, these approaches imply challenges related to scalability and limitations. This paper presents the Sheet Music Transformer, the first end-to-end OMR model designed to transcribe complex musical scores without relying solely on monophonic strategies. Our model employs a Transformer-based image-to-sequence framework that predicts score transcriptions in a standard digital music encoding format from input images. Our model has been tested on two polyphonic music datasets and has proven capable of handling these intricate music structures effectively. The experimental outcomes not only indicate the competence of the model, but also show that it is better than the state-of-the-art methods, thus contributing to advancements in end-to-end OMR transcription.

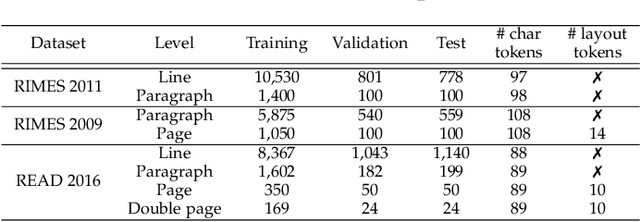

Faster DAN: Multi-target Queries with Document Positional Encoding for End-to-end Handwritten Document Recognition

Jan 25, 2023

Abstract:Recent advances in handwritten text recognition have made it possible to recognize whole documents in an end-to-end way: the Document Attention Network (DAN) recognizes the characters one after the other through an attention-based prediction process until reaching the end of the document. However, this autoregressive process leads to inference that cannot benefit from any parallelization optimization. In this paper, we propose Faster DAN, a two-step strategy to speed up the recognition process at prediction time: the model predicts the first character of each text line in the document, and then completes all the text lines in parallel through multi-target queries and a specific document positional encoding scheme. Faster DAN reaches competitive results compared to standard DAN, while being at least 4 times faster on whole single-page and double-page images of the RIMES 2009, READ 2016 and MAURDOR datasets. Source code and trained model weights are available at https://github.com/FactoDeepLearning/FasterDAN.

Stochastic gradient descent with gradient estimator for categorical features

Sep 08, 2022

Abstract:Categorical data are present in key areas such as health or supply chain, and these data require specific treatment. In order to apply recent machine learning models to such data, encoding is needed. For building interpretable models, one-hot encoding is still a very good solution, but such encoding creates sparse data. Gradient estimators are not suited to sparse data: the gradient is mainly considered to be zero when in fact it does not always exist, so a novel gradient estimator is introduced. We show what this estimator minimizes in theory and show its efficiency on different datasets with multiple model architectures. This new estimator performs better than common estimators under similar settings. A real-world retail dataset is also released after anonymization. Overall, the aim of this paper is to thoroughly consider categorical data and adapt models and optimizers to these key features.

Confidence Estimation for Object Detection in Document Images

Aug 29, 2022

Abstract:Deep neural networks are becoming increasingly powerful and large and require ever more labelled data to be trained. However, since annotating data is time-consuming, it is now necessary to develop systems that show good performance while learning on a limited amount of data. These data must be correctly chosen to obtain models that are still efficient. For this, the systems must be able to determine which data should be annotated to achieve the best results. In this paper, we propose four estimators to estimate the confidence of object detection predictions. The first two are based on Monte Carlo dropout, the third one on descriptive statistics and the last one on the detector posterior probabilities. In the active learning framework, the first three estimators show a significant improvement in performance for the detection of document physical pages and text lines compared to a random selection of images. We also show that the proposed estimator based on descriptive statistics can replace MC dropout, reducing the computational cost without compromising performance.
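
As a rough illustration of the general idea behind Monte Carlo dropout confidence (not the estimators proposed in the paper, whose exact definitions the abstract does not give), the sketch below runs several stochastic forward passes and summarizes the spread of the scores. The names `mc_dropout_confidence` and `toy_predict` are hypothetical, and the "detector" is a toy function standing in for a real network with dropout kept active at inference time.

```python
import random
import statistics


def toy_predict(features, rng, drop_rate=0.5):
    """Hypothetical stand-in for a detector score with dropout left on.

    Each feature is dropped with probability `drop_rate`; kept features are
    rescaled (inverted dropout) so the expected score is unchanged.
    """
    kept = [v / (1.0 - drop_rate) for v in features if rng.random() >= drop_rate]
    return sum(kept) / len(features)


def mc_dropout_confidence(stochastic_predict, features, n_passes=20, seed=0):
    """Return (mean score, variance) over `n_passes` stochastic passes.

    A low variance suggests a stable, confident prediction; a high variance
    flags a sample that may be worth annotating in an active learning loop.
    """
    rng = random.Random(seed)
    scores = [stochastic_predict(features, rng) for _ in range(n_passes)]
    return statistics.mean(scores), statistics.pvariance(scores)


if __name__ == "__main__":
    mean, var = mc_dropout_confidence(toy_predict, [0.9, 0.8, 0.7, 0.95])
    print(f"mean score = {mean:.3f}, variance = {var:.4f}")
```

In an active learning setting, images would typically be ranked by such an uncertainty value and the most uncertain ones sent for annotation; the ranking rule itself is an assumption here, not something stated in the abstract.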

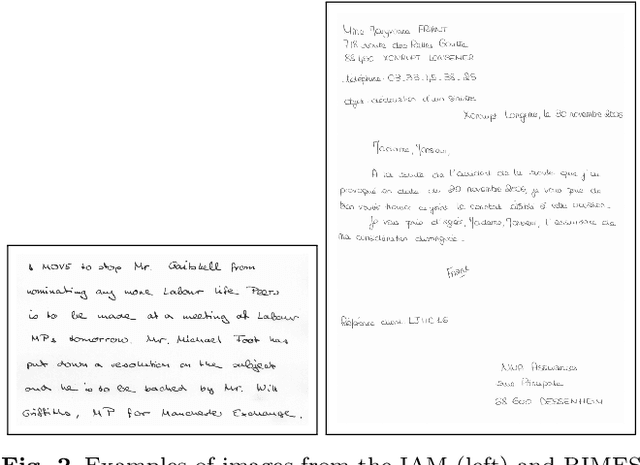

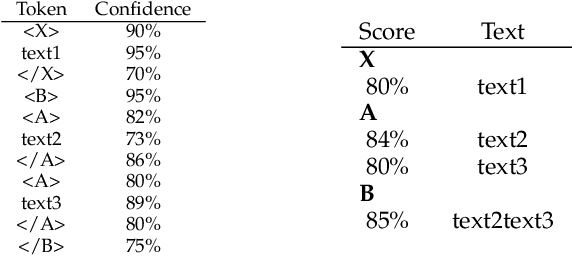

DAN: a Segmentation-free Document Attention Network for Handwritten Document Recognition

Apr 07, 2022

Abstract:Unconstrained handwritten text recognition is a challenging computer vision task. It is traditionally handled by a two-step approach combining line segmentation followed by text line recognition. For the first time, we propose an end-to-end segmentation-free architecture for the task of handwritten document recognition: the Document Attention Network. In addition to text recognition, the model is trained to label text parts using begin and end tags in an XML-like fashion. This model is made up of an FCN encoder for feature extraction and a stack of transformer decoder layers for a recurrent token-by-token prediction process. It takes whole text documents as input and sequentially outputs characters, as well as logical layout tokens. Contrary to the existing segmentation-based approaches, the model is trained without using any segmentation label. We achieve competitive results on the READ 2016 dataset at page level, as well as double-page level, with a CER of 3.53% and 3.69%, respectively. We also provide results for the RIMES 2009 dataset at page level, reaching a CER of 4.54%. We provide all source code and pre-trained model weights at https://github.com/FactoDeepLearning/DAN.
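
For readers unfamiliar with the metric, the character error rate (CER) quoted above is commonly computed as the Levenshtein edit distance between the predicted and reference transcriptions, divided by the reference length. The sketch below shows that common definition; the function names are illustrative, and the paper's exact evaluation protocol (for example, normalization or handling of layout tokens) is not specified in the abstract.

```python
def levenshtein(reference: str, hypothesis: str) -> int:
    """Minimum number of character insertions, deletions and substitutions
    needed to turn `hypothesis` into `reference` (row-by-row dynamic program)."""
    previous = list(range(len(hypothesis) + 1))
    for i, ref_char in enumerate(reference, start=1):
        current = [i]
        for j, hyp_char in enumerate(hypothesis, start=1):
            substitution_cost = 0 if ref_char == hyp_char else 1
            current.append(min(
                previous[j] + 1,                      # deletion
                current[j - 1] + 1,                   # insertion
                previous[j - 1] + substitution_cost,  # match or substitution
            ))
        previous = current
    return previous[-1]


def cer(reference: str, hypothesis: str) -> float:
    """Character error rate: edit distance normalized by reference length."""
    if not reference:
        raise ValueError("reference transcription must not be empty")
    return levenshtein(reference, hypothesis) / len(reference)


if __name__ == "__main__":
    # One substituted character out of four gives a CER of 25%.
    print(f"{cer('abcd', 'abed'):.2%}")
```

Under this definition, a CER of 3.53% corresponds to roughly 3 to 4 character edits per 100 reference characters.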

Robust Text Line Detection in Historical Documents: Learning and Evaluation Methods

Mar 23, 2022

Abstract:Text line segmentation is one of the key steps in historical document understanding. It is challenging due to the variety of fonts, contents, writing styles and the quality of documents that have degraded through the years. In this paper, we address the limitations that currently prevent people from building line segmentation models with a high generalization capacity. We present a study conducted using three state-of-the-art systems (Doc-UFCN, dhSegment and ARU-Net) and show that it is possible to build generic models trained on a wide variety of historical document datasets that can correctly segment diverse unseen pages. This paper also highlights the importance of the annotations used during training: each existing dataset is annotated differently. We present a unification of the annotations and show its positive impact on the final text recognition results. To this end, we present a complete evaluation strategy using standard pixel-level metrics and object-level ones, and introducing goal-oriented metrics.