Pierrick Tranouez

LITIS

Classifying the Unknown: In-Context Learning for Open-Vocabulary Text and Symbol Recognition

Apr 09, 2025

Abstract: We introduce Rosetta, a multimodal model that uses Multimodal In-Context Learning (MICL) to classify sequences of novel script patterns in documents from minimal examples, thus eliminating the need for explicit retraining. To enhance contextual learning, we designed a dataset generation process that ensures varying degrees of contextual informativeness, improving the model's ability to exploit context across different scenarios. A key strength of our method is the use of a Context-Aware Tokenizer (CAT), which enables open-vocabulary classification. This allows the model to classify text and symbol patterns across an unlimited range of classes, extending its classification capabilities beyond the scope of its training alphabet of patterns. As a result, it unlocks applications such as the recognition of new alphabets and languages. Experiments on synthetic datasets demonstrate the potential of Rosetta to successfully classify Out-Of-Distribution visual patterns and diverse sets of alphabets and scripts, including but not limited to Chinese, Greek, Russian, French, Spanish, and Japanese.

DANIEL: A fast Document Attention Network for Information Extraction and Labelling of handwritten documents

Jul 12, 2024

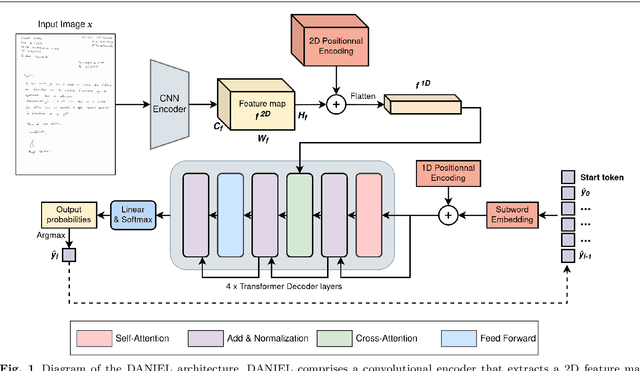

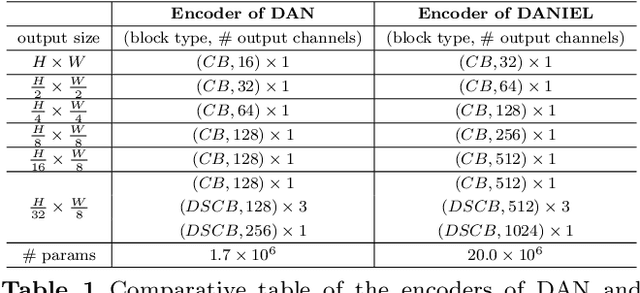

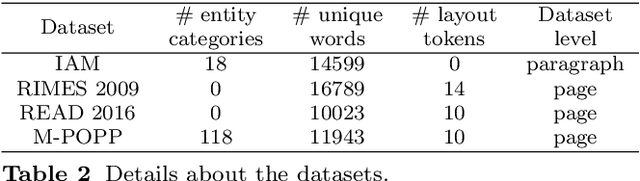

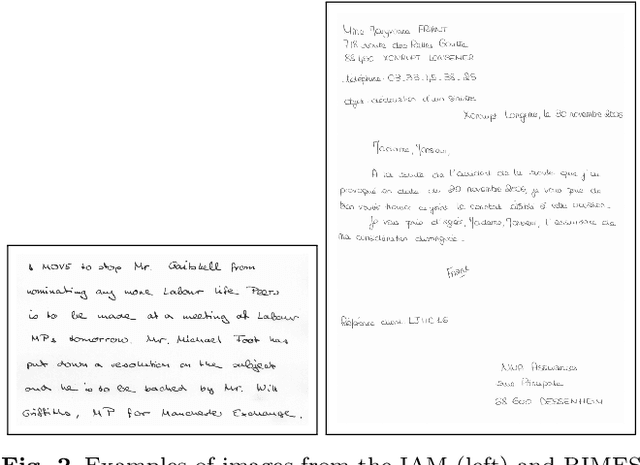

Abstract: Information extraction from handwritten documents traditionally involves three distinct steps: Document Layout Analysis, Handwritten Text Recognition, and Named Entity Recognition. Recent approaches have attempted to integrate these steps into a single process using fully end-to-end architectures. Despite this, these integrated approaches have not yet matched the performance of language models applied to information extraction in plain text. In this paper, we introduce DANIEL (Document Attention Network for Information Extraction and Labelling), a fully end-to-end architecture integrating a language model and designed for comprehensive handwritten document understanding. DANIEL performs layout recognition, handwriting recognition, and named entity recognition on full-page documents. Moreover, it can simultaneously learn across multiple languages, layouts, and tasks. For named entity recognition, the ontology to be applied can be specified via the input prompt. The architecture employs a convolutional encoder capable of processing images of any size without resizing, paired with an autoregressive decoder based on a transformer language model. DANIEL achieves competitive results on four datasets, including a new state-of-the-art performance on RIMES 2009 and M-POPP for Handwritten Text Recognition, and on IAM NER for Named Entity Recognition. Furthermore, DANIEL is much faster than existing approaches. We provide the source code and the weights of the trained models at https://github.com/Shulk97/daniel.

End-to-end information extraction in handwritten documents: Understanding Paris marriage records from 1880 to 1940

Apr 30, 2024

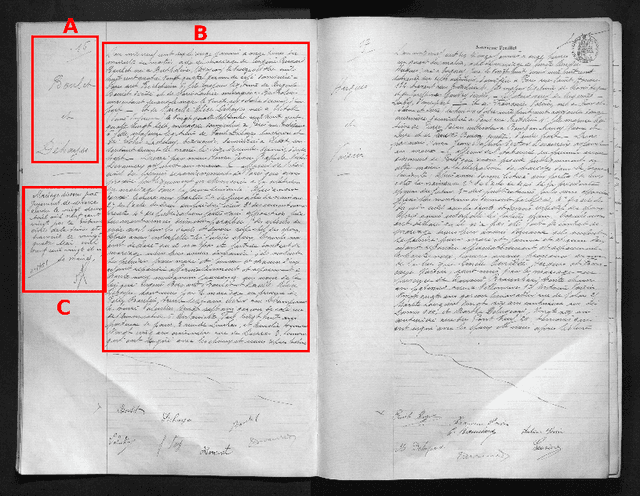

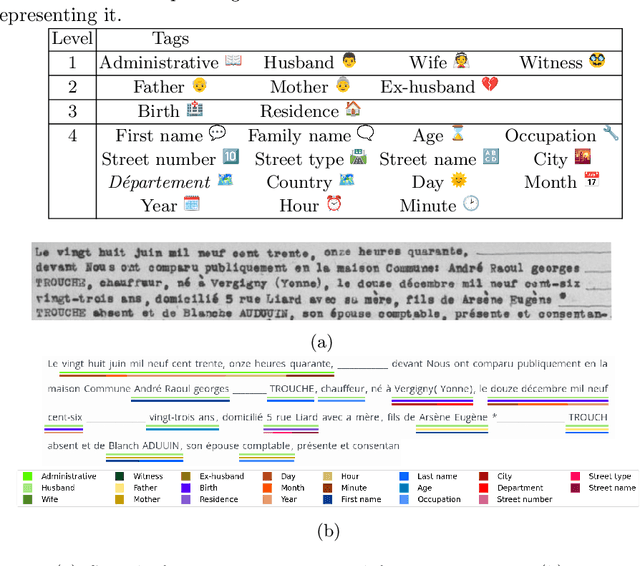

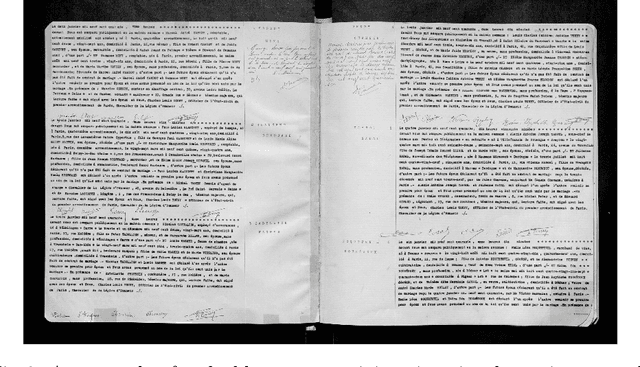

Abstract: The EXO-POPP project aims to establish a comprehensive database comprising 300,000 marriage records from Paris and its suburbs, spanning the years 1880 to 1940, which are preserved in over 130,000 scans of double pages. Each marriage record may encompass up to 118 distinct types of information that require extraction from plain text. In this paper, we introduce the M-POPP dataset, a subset of the M-POPP database with annotations for full-page text recognition and information extraction in both handwritten and printed documents, and which is now publicly available. We present a fully end-to-end architecture adapted from the DAN, designed to perform both handwritten text recognition and information extraction directly from page images without the need for explicit segmentation. We showcase the information extraction capabilities of this architecture by achieving a new state of the art for full-page Information Extraction on Esposalles, and we use this architecture as a baseline for the M-POPP dataset. We also assess and compare how different encoding strategies for named entities in the text affect the performance of jointly recognizing handwritten text and extracting information from full pages.

Logical segmentation for article extraction in digitized old newspapers

Oct 03, 2012

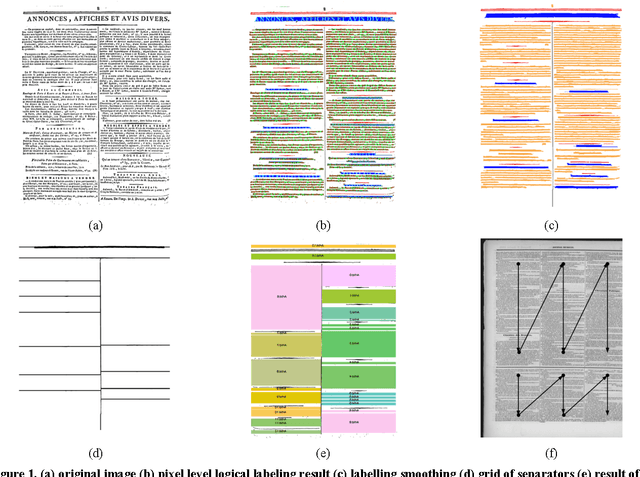

Abstract: Newspapers are documents made of news items and informative articles. They are not meant to be read iteratively: the reader can pick items in any order he fancies. Ignoring this structural property, most digitized newspaper archives only offer access to their content by issue or, at best, by page. We have built a digitization workflow that automatically extracts newspaper articles from images, which allows indexing and retrieval of information at the article level. Our back-end system extracts the logical structure of the page to produce the informative units: the articles. Each image is labelled at the pixel level through a machine-learning-based method, then the logical structure of the page is built up from there by detecting structuring entities such as horizontal and vertical separators, titles and text lines. This logical structure is stored in a METS wrapper associated with the ALTO file produced by the system, which includes the OCRed text. Our front-end system provides web-based high-definition visualisation of images, textual indexing and retrieval facilities, and searching and reading at the article level. Article transcriptions can be collaboratively corrected, which in turn allows for better indexing. We are currently testing our system on the archives of the Journal de Rouen, one of France's oldest local newspapers. These 250 years of publication amount to 300 000 pages of very variable image quality and layout complexity. Test year 1808 can be consulted at plair.univ-rouen.fr.

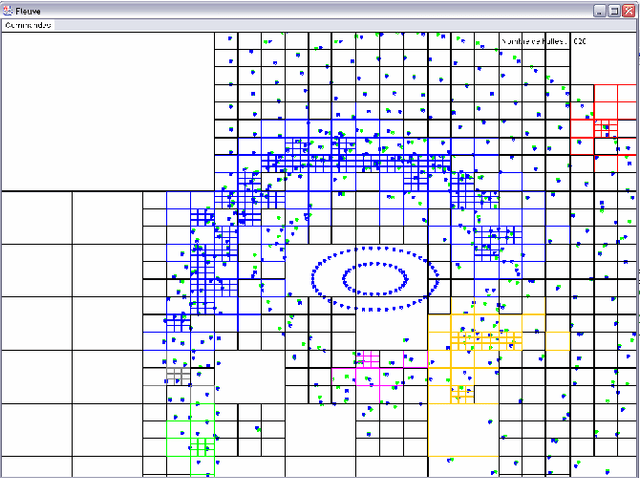

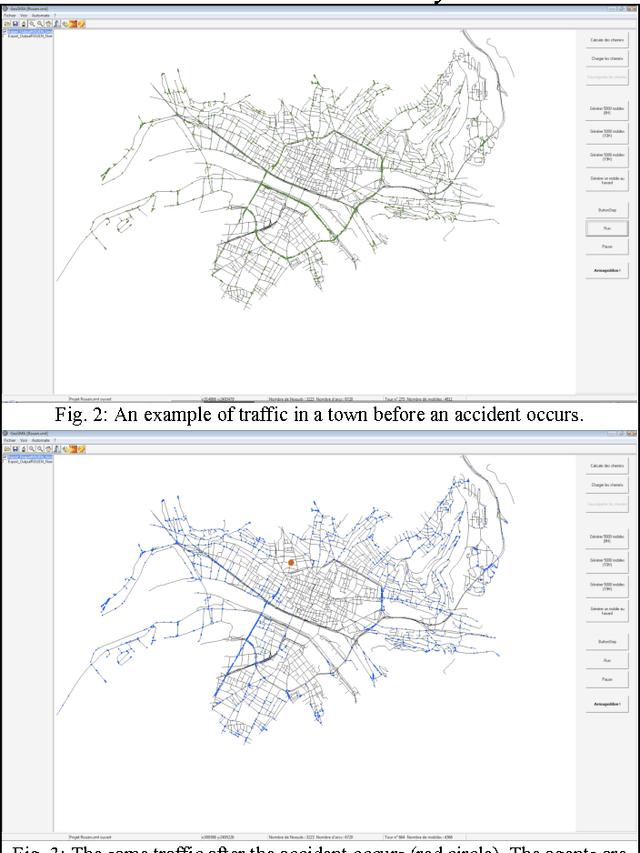

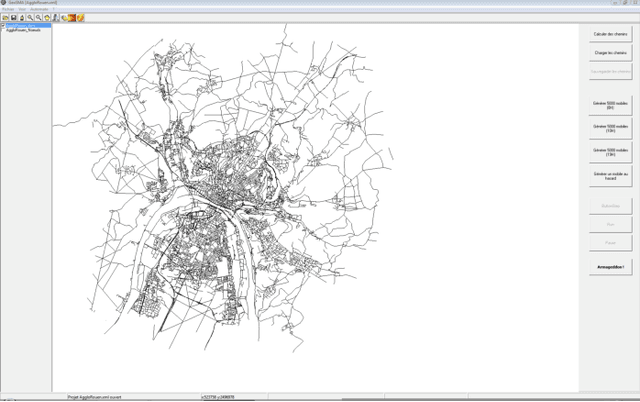

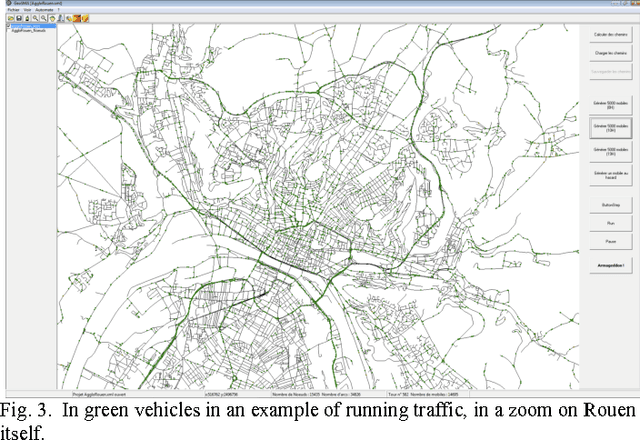

A multiagent urban traffic simulation

Jan 26, 2012

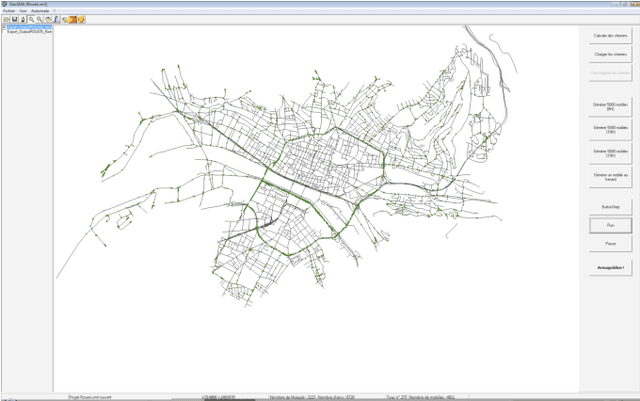

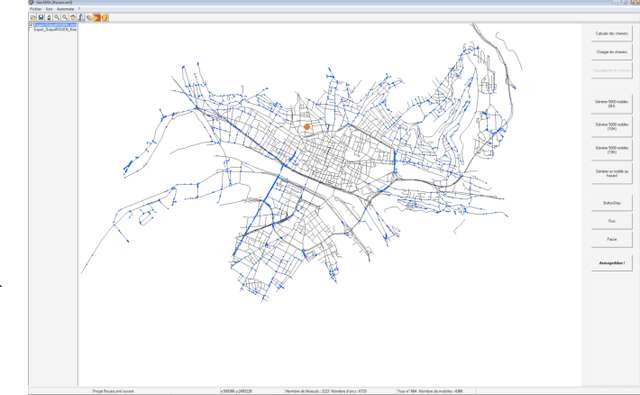

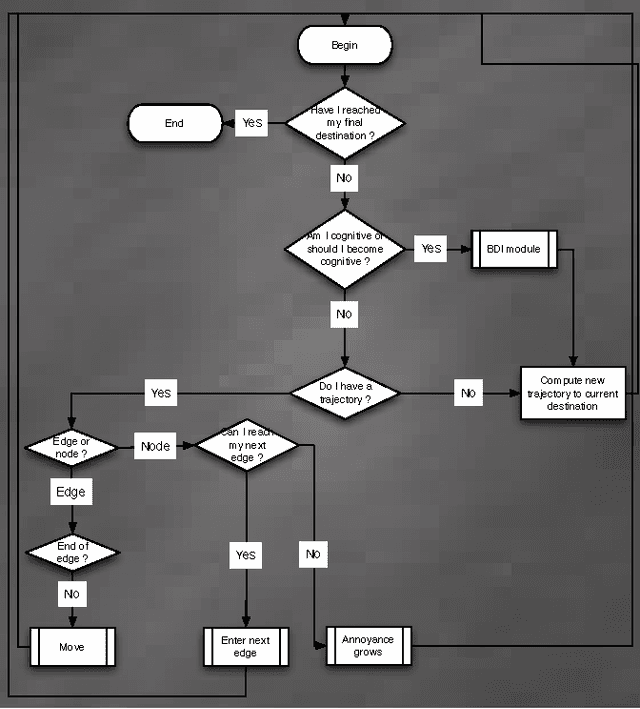

Abstract: We built a multiagent simulation of urban traffic to model both ordinary traffic and emergency or crisis mode traffic. This simulation first builds a modeled road network based on detailed geographical information. On this network, the simulation creates two populations of agents: the Transporters and the Mobiles. Transporters embody the roads themselves; they are utilitarian and meant to handle the low-level realism of the simulation. Mobile agents embody the vehicles that circulate on the network. They have one or several destinations they try to reach, initially using their beliefs about the structure of the network (length of the edges, speed limits, number of lanes, etc.). Nonetheless, when confronted with a dynamic, emergence-prone environment (other vehicles, unexpectedly closed ways or lanes, traffic jams, etc.), the otherwise reactive agent will activate more cognitive modules to adapt its beliefs, desires and intentions. It may change its destination(s), change the tactics used to reach the destination (favoring less used roads, following other agents, using general headings), etc. We describe our current validation of our model and the next planned improvements, both in validation and in functionalities.

* arXiv admin note: significant text overlap with arXiv:0909.1021 and arXiv:0910.1026

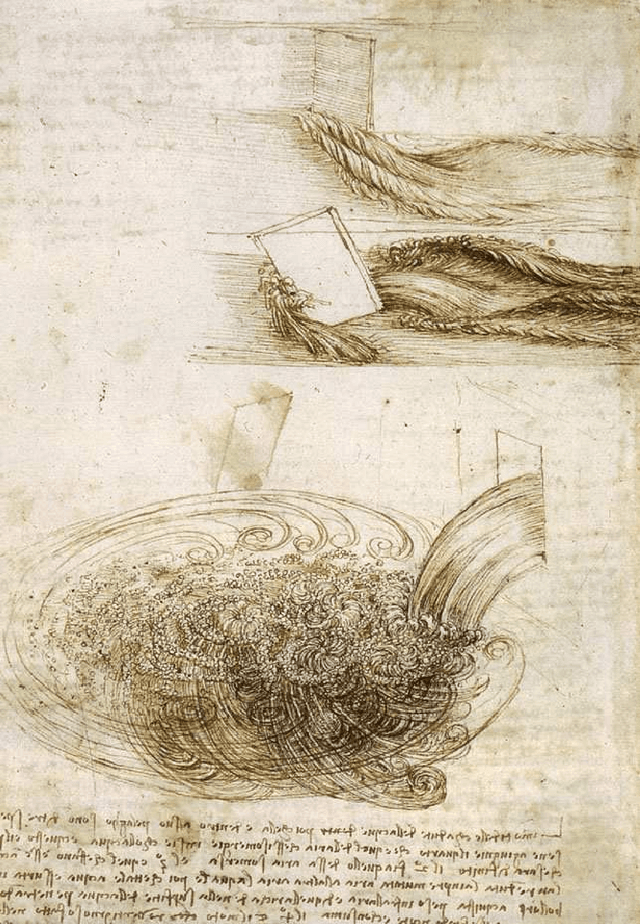

Different goals in multiscale simulations and how to reach them

Nov 09, 2009

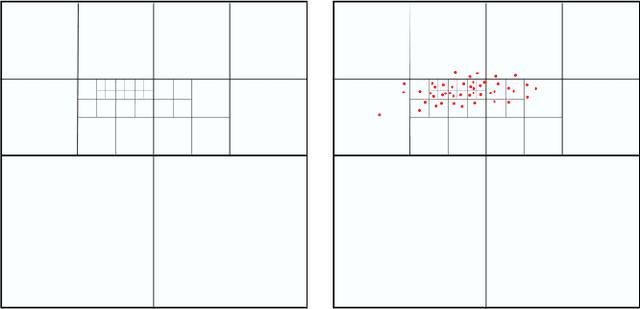

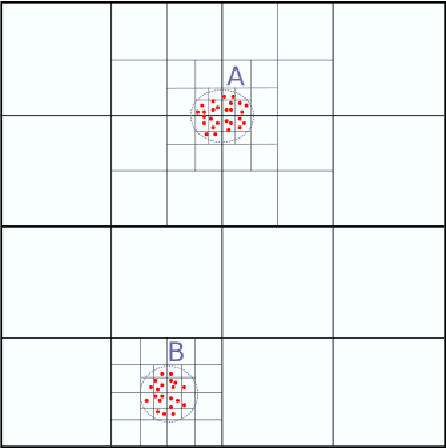

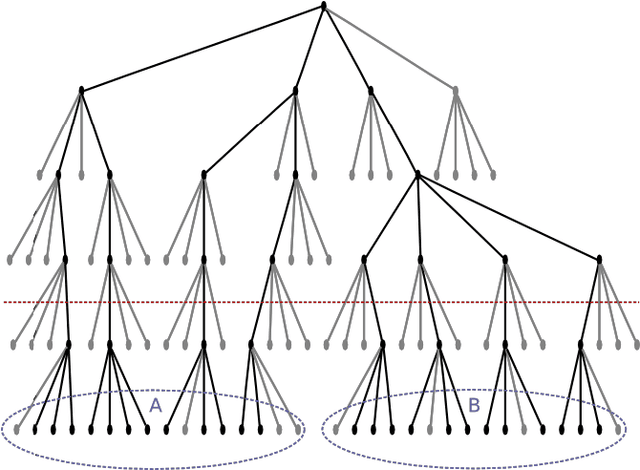

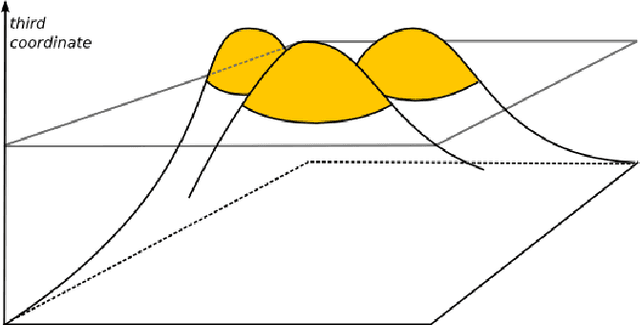

Abstract: In this paper we sum up our work on multiscale programs, mainly simulations. We first describe what multiscaling is about and how it helps, for example, to perceive a signal against background noise in a flow of data, either for direct perception by a user or for further use by another program. We then give three examples of multiscale techniques we have used in the past: maintaining a summary, using an environmental marker that introduces a history into the data, and finally using knowledge of the behavior of the different scales to handle them at the same time.

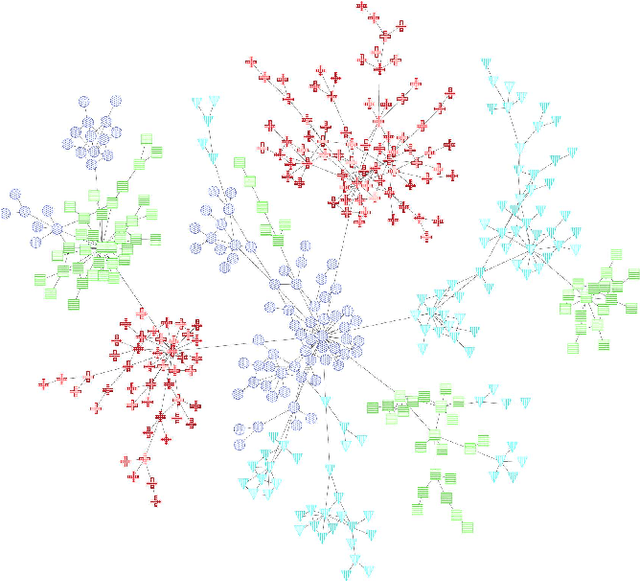

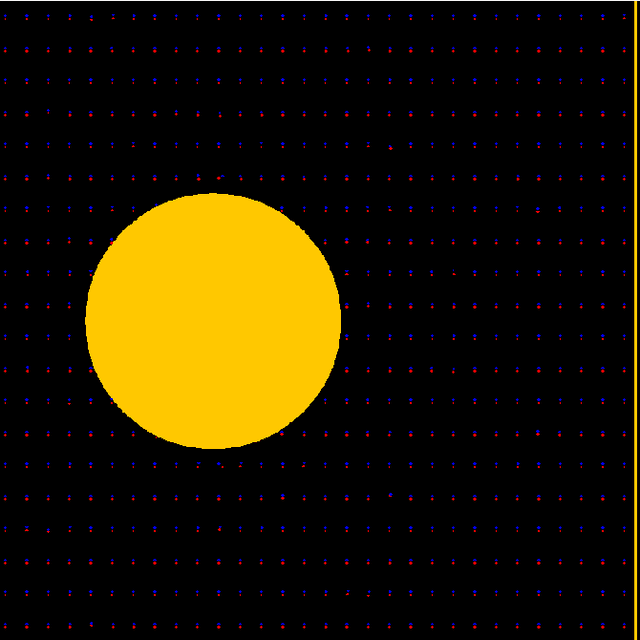

Building upon Fast Multipole Methods to Detect and Model Organizations

Oct 08, 2009

Abstract: Many models in natural and social sciences are comprised of sets of interacting entities whose intensity of interaction decreases with distance. This often leads to structures of interest in these models composed of dense packs of entities. Fast Multipole Methods (FMM) are a family of methods developed to help with the calculation of a number of computable models such as those described above. We propose a method that builds upon FMM to detect and model the dense structures of these systems.

A multiagent urban traffic simulation. Part II: dealing with the extraordinary

Oct 06, 2009

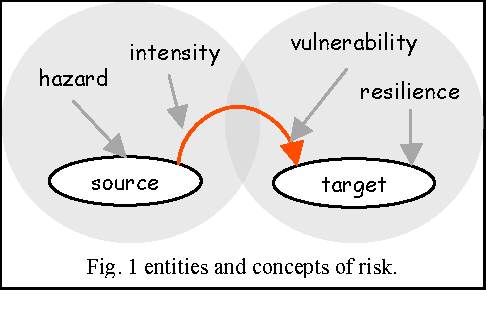

Abstract: In Probabilistic Risk Management (PRM), risk is characterized by two quantities: the magnitude (or severity) of the adverse consequences that can potentially result from the given activity or action, and the likelihood of occurrence of those adverse consequences. But a risk seldom exists in isolation: chains of consequences must be examined, as the outcome of one risk can increase the likelihood of other risks. Systemic theory must complement classic PRM. Indeed, these chains are composed of many different elements, all of which may have a critical importance at many different levels. Furthermore, when urban catastrophes are envisioned, space and time constraints are key determinants of the workings and dynamics of these chains of catastrophes: models must include a correct spatial topology of the studied risk. Finally, the literature insists on the influence small events can have on risk at a larger scale: urban risk management models belong to self-organized criticality theory. We chose multiagent systems to incorporate this property in our model: the behavior of a single agent can transform the dynamics of large groups of agents.

A multiagent urban traffic simulation Part I: dealing with the ordinary

Sep 05, 2009

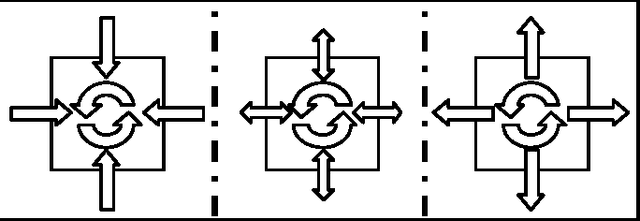

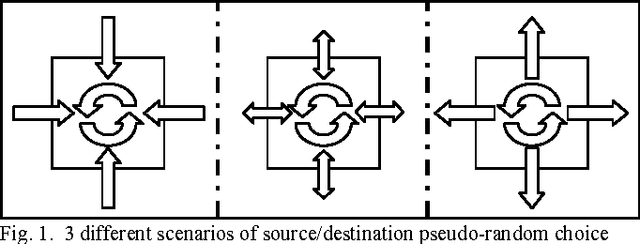

Abstract: We describe in this article a multiagent urban traffic simulation, as we believe individual-based modeling is necessary to encompass the complex influence the actions of an individual vehicle can have on the overall flow of vehicles. We first describe how we build a graph description of the network from purely geometric data, ESRI shapefiles. We then explain how we add traffic-related data to this graph. We then present the model of the vehicle agents: origin and destination, driving behavior, multiple lanes, crossroads, and interactions with the other vehicles in day-to-day, "ordinary" traffic. We conclude with the presentation of the resulting simulation of this model on the Rouen agglomeration.
