Clément Chatelain

LITIS

See Without Decoding: Motion-Vector-Based Tracking in Compressed Video

Jan 29, 2026

Abstract:We propose a lightweight compressed-domain tracking model that operates directly on video streams, without requiring full RGB video decoding. Using motion vectors and transform coefficients from the compressed data, our deep model propagates object bounding boxes across frames, achieving a computational speed-up of up to 3.7× with only a slight 4% drop in mAP@0.5 relative to the RGB baseline on the MOTS15/17/20 datasets. These results highlight the efficiency of codec-domain motion modeling for real-time analytics in large monitoring systems.
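As a rough illustration of what propagating a bounding box from motion vectors can mean, the sketch below shifts a box by the median motion vector of the blocks whose centres fall inside it. This is a naive, non-learned baseline and not the deep model described in the abstract. The function name `propagate_box`, the 16-pixel block size, the dictionary layout of the motion field, and the convention that each vector gives the displacement from the previous frame to the current one are all assumptions made for the example.

```python
from statistics import median

def propagate_box(box, motion, block=16):
    """Shift a box (x0, y0, x1, y1) by the median motion vector inside it.

    `motion` maps a block index (bx, by) to a displacement (dx, dy) in pixels.
    Hypothetical layout: real codecs expose motion vectors differently and
    may use the opposite sign convention.
    """
    x0, y0, x1, y1 = box
    dxs, dys = [], []
    for (bx, by), (dx, dy) in motion.items():
        # Centre of the block in pixel coordinates.
        cx = bx * block + block / 2
        cy = by * block + block / 2
        if x0 <= cx <= x1 and y0 <= cy <= y1:
            dxs.append(dx)
            dys.append(dy)
    if not dxs:
        # No motion information inside the box: keep it where it was.
        return box
    mx, my = median(dxs), median(dys)
    return (x0 + mx, y0 + my, x1 + mx, y1 + my)

# Toy motion field: three blocks inside the box move right and up.
motion = {
    (0, 0): (0, 0),
    (1, 1): (4, -2),
    (2, 1): (4, -2),
    (1, 2): (5, -2),
    (5, 5): (0, 0),
}
new_box = propagate_box((16, 16, 48, 48), motion)
```

The median is used rather than the mean so that a few outlier vectors (for example, background blocks at the box border) have less influence on the shift.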

Temporal receptive field in dynamic graph learning: A comprehensive analysis

Jul 19, 2024

Abstract:Dynamic link prediction is a critical task in the analysis of evolving networks, with applications ranging from recommender systems to economic exchanges. However, the concept of the temporal receptive field, which refers to the temporal context that models use for making predictions, has been largely overlooked and insufficiently analyzed in existing research. In this study, we present a comprehensive analysis of the temporal receptive field in dynamic graph learning. By examining multiple datasets and models, we formalize the role of the temporal receptive field and highlight its crucial influence on predictive accuracy. Our results demonstrate that an appropriately chosen temporal receptive field can significantly enhance model performance, while for some models, overly large windows may introduce noise and reduce accuracy. We conduct extensive benchmarking to validate our findings, ensuring that all experiments are fully reproducible. Code is available at https://github.com/ykrmm/BenchmarkTW.
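To make the notion of a temporal window concrete, here is a minimal sketch that restricts a list of timestamped edges to those falling inside a window before the query time. It is an illustration under simple assumptions, not the formalization used in the paper: the `Event` tuple layout, the function name `events_in_window`, and the half-open interval are choices made for the example.

```python
from typing import List, Tuple

# Hypothetical event layout: (source node, destination node, timestamp).
Event = Tuple[int, int, float]

def events_in_window(events: List[Event], t_query: float, window: float) -> List[Event]:
    """Return the events with a timestamp in [t_query - window, t_query)."""
    return [e for e in events if t_query - window <= e[2] < t_query]

events = [(0, 1, 1.0), (1, 2, 4.0), (0, 2, 7.5), (2, 3, 9.0)]

# A small window keeps only recent interactions; a large one keeps everything.
small = events_in_window(events, t_query=10.0, window=3.0)
large = events_in_window(events, t_query=10.0, window=100.0)
```

A model fed with `small` sees only the two most recent interactions, while one fed with `large` sees the whole history, which is the trade-off between recency and context that the abstract discusses.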

Dynamic Graph Representation Learning with Neural Networks: A Survey

Apr 12, 2023

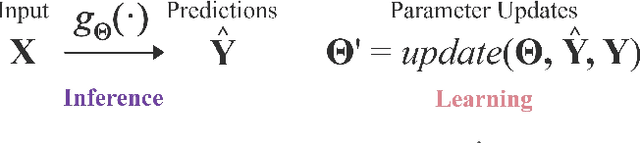

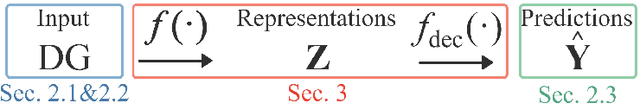

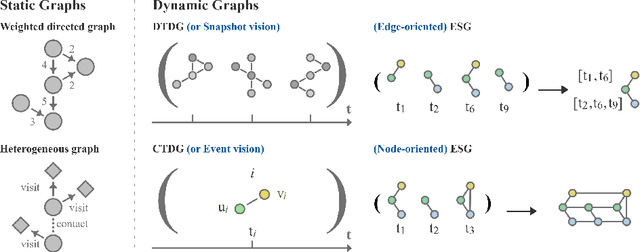

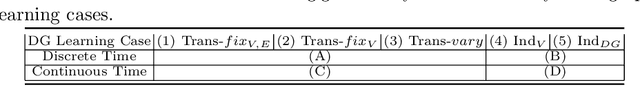

Abstract:In recent years, Dynamic Graph (DG) representations have been increasingly used for modeling dynamic systems due to their ability to integrate both topological and temporal information in a compact representation. Dynamic graphs make it possible to efficiently handle applications such as social network prediction, recommender systems, traffic forecasting or electroencephalography analysis, which cannot be addressed using standard numeric representations. As a direct consequence of the emergence of dynamic graph representations, dynamic graph learning has emerged as a new machine learning problem, combining challenges from both sequential/temporal data processing and static graph learning. In this research area, the Dynamic Graph Neural Network (DGNN) has become the state-of-the-art approach, and a plethora of models have been proposed in recent years. This paper aims to provide a review of problems and models related to dynamic graph learning. The various dynamic graph supervised learning settings are analysed and discussed. We identify the similarities and differences between existing models with respect to the way time information is modeled. Finally, general guidelines are provided for a DGNN designer faced with a dynamic graph learning problem.

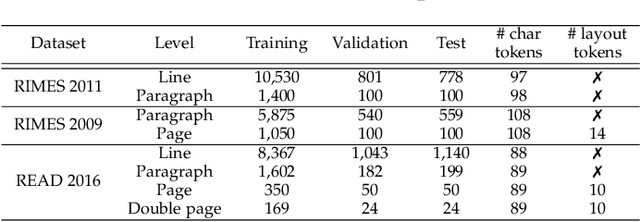

Faster DAN: Multi-target Queries with Document Positional Encoding for End-to-end Handwritten Document Recognition

Jan 25, 2023

Abstract:Recent advances in handwritten text recognition have made it possible to recognize whole documents in an end-to-end way: the Document Attention Network (DAN) recognizes the characters one after the other through an attention-based prediction process until reaching the end of the document. However, this autoregressive process leads to inference that cannot benefit from any parallelization optimization. In this paper, we propose Faster DAN, a two-step strategy to speed up the recognition process at prediction time: the model predicts the first character of each text line in the document, and then completes all the text lines in parallel through multi-target queries and a specific document positional encoding scheme. Faster DAN reaches competitive results compared to standard DAN, while being at least 4 times faster on whole single-page and double-page images of the RIMES 2009, READ 2016 and MAURDOR datasets. Source code and trained model weights are available at https://github.com/FactoDeepLearning/FasterDAN.

DAN: a Segmentation-free Document Attention Network for Handwritten Document Recognition

Apr 07, 2022

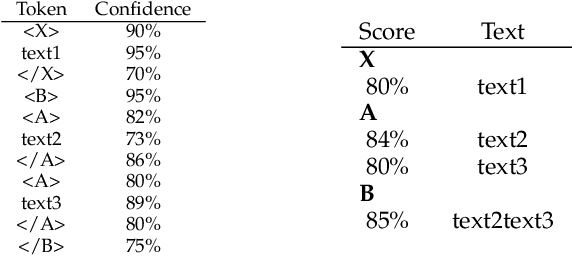

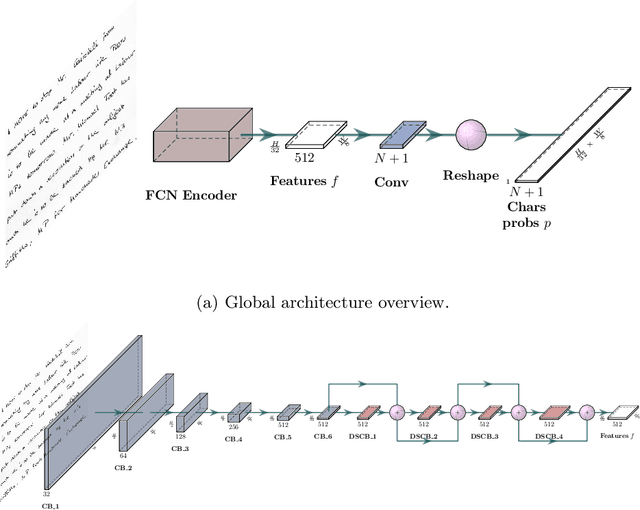

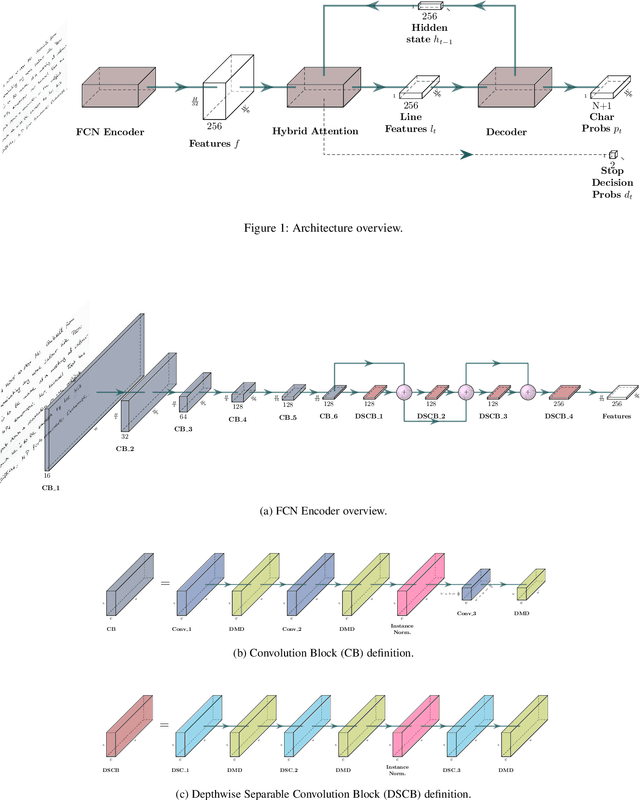

Abstract:Unconstrained handwritten text recognition is a challenging computer vision task. It is traditionally handled by a two-step approach combining line segmentation followed by text line recognition. For the first time, we propose an end-to-end segmentation-free architecture for the task of handwritten document recognition: the Document Attention Network. In addition to text recognition, the model is trained to label text parts using begin and end tags in an XML-like fashion. This model is made up of an FCN encoder for feature extraction and a stack of transformer decoder layers for a recurrent token-by-token prediction process. It takes whole text documents as input and sequentially outputs characters, as well as logical layout tokens. Contrary to the existing segmentation-based approaches, the model is trained without using any segmentation label. We achieve competitive results on the READ 2016 dataset at page level and double-page level, with CERs of 3.53% and 3.69%, respectively. We also provide results for the RIMES 2009 dataset at page level, reaching a CER of 4.54%. We provide all source code and pre-trained model weights at https://github.com/FactoDeepLearning/DAN.
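For readers unfamiliar with the metric, the CER (character error rate) reported above is commonly computed as the Levenshtein edit distance between the predicted and reference transcriptions, divided by the number of reference characters. The sketch below follows that common definition; normalizing by the total reference length over the whole set is an assumption, and the evaluation code in the linked repository may differ in its details.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: minimum number of character insertions,
    deletions and substitutions needed to turn `hyp` into `ref`."""
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, 1):
        curr = [i]
        for j, h in enumerate(hyp, 1):
            curr.append(min(
                prev[j] + 1,             # deletion
                curr[j - 1] + 1,         # insertion
                prev[j - 1] + (r != h),  # substitution (free if characters match)
            ))
        prev = curr
    return prev[-1]

def cer(refs, hyps) -> float:
    """Total edit distance divided by total reference length (assumed convention)."""
    total_edits = sum(edit_distance(r, h) for r, h in zip(refs, hyps))
    total_chars = sum(len(r) for r in refs)
    return total_edits / total_chars

# One substituted character out of eleven reference characters.
score = cer(["hello world"], ["hallo world"])
```

Under this definition, a CER of 3.53% corresponds to roughly 3.5 character edits per 100 reference characters.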

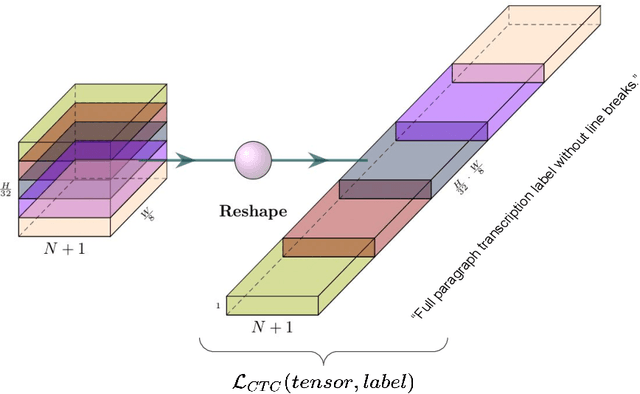

SPAN: a Simple Predict & Align Network for Handwritten Paragraph Recognition

Feb 17, 2021

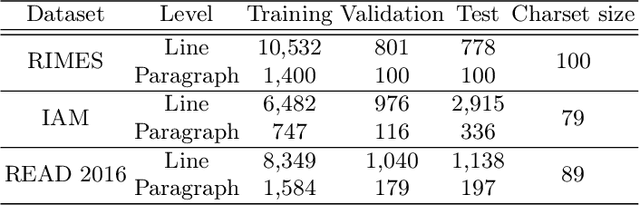

Abstract:Unconstrained handwriting recognition is an essential task in document analysis. It is usually carried out in two steps. First, the document is segmented into text lines. Second, an Optical Character Recognition model is applied to these line images. We propose the Simple Predict & Align Network: an end-to-end recurrence-free Fully Convolutional Network performing OCR at paragraph level without any prior segmentation stage. The framework is as simple as the one used for the recognition of isolated lines and we achieve competitive results on three popular datasets: RIMES, IAM and READ 2016. The proposed model does not require any dataset adaptation; it can be trained from scratch, without segmentation labels, and it does not require line breaks in the transcription labels. Our code and trained model weights are available at https://github.com/FactoDeepLearning/SPAN.

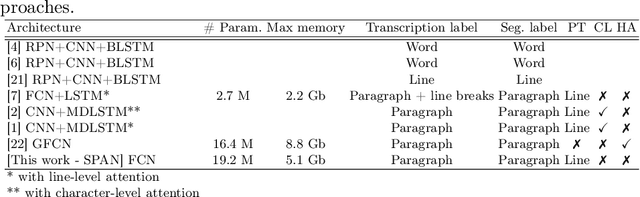

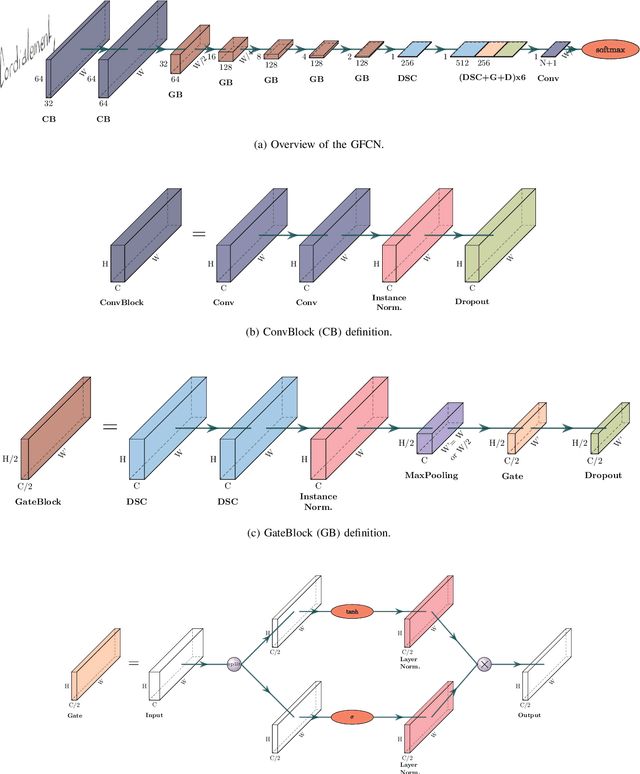

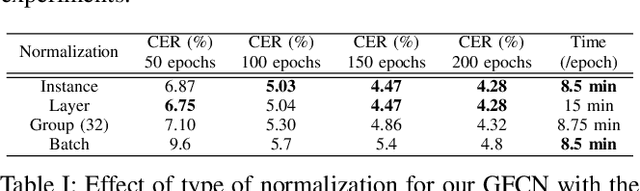

Recurrence-free unconstrained handwritten text recognition using gated fully convolutional network

Dec 09, 2020

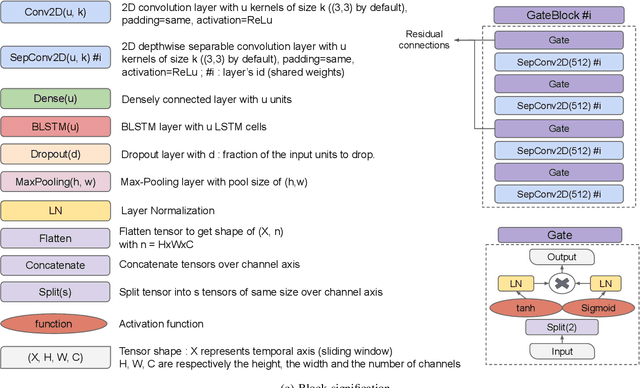

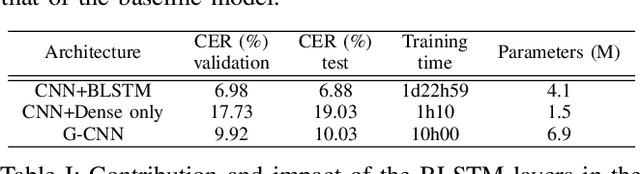

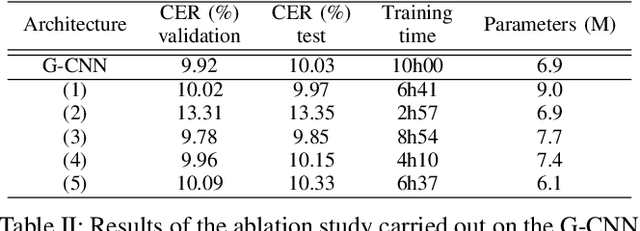

Abstract:Unconstrained handwritten text recognition is a major step in most document analysis tasks. This task is generally handled by deep recurrent neural networks, and more specifically with Long Short-Term Memory cells. The main drawbacks of these components are the large number of parameters involved and their sequential execution during training and prediction. One alternative to LSTM cells is to compensate for the loss of long-term memory with heavy use of convolutional layers, whose operations can be executed in parallel and which involve fewer parameters. In this paper we present a Gated Fully Convolutional Network architecture that is a recurrence-free alternative to the well-known CNN+LSTM architectures. Our model is trained with the CTC loss and shows competitive results on both the RIMES and IAM datasets. We release all code to enable reproduction of our experiments: https://github.com/FactoDeepLearning/LinePytorchOCR.

Have convolutions already made recurrence obsolete for unconstrained handwritten text recognition ?

Dec 09, 2020

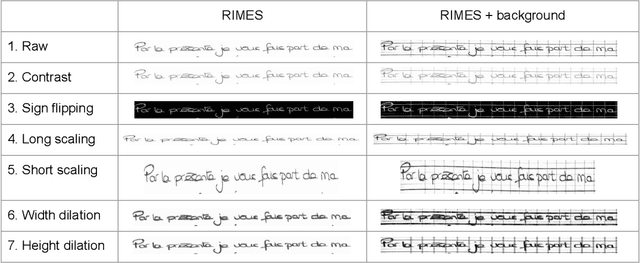

Abstract:Unconstrained handwritten text recognition remains an important challenge for deep neural networks. In recent years, recurrent networks, and more specifically Long Short-Term Memory networks, have achieved state-of-the-art performance in this field. Nevertheless, they have a large number of trainable parameters, and training recurrent neural networks does not support parallelism. This directly affects the training time of such architectures and, in turn, the time required to explore various architectures. Recently, recurrence-free architectures such as Fully Convolutional Networks with gated mechanisms have been proposed as one possible alternative achieving competitive results. In this paper, we explore convolutional architectures and compare them to a CNN+BLSTM baseline. We propose an experimental study of different architectures on an offline handwriting recognition task using the RIMES dataset, and a modified version of it in which the images are augmented with notebook backgrounds made of printed grids.

End-to-end Handwritten Paragraph Text Recognition Using a Vertical Attention Network

Dec 07, 2020

Abstract:Unconstrained handwritten text recognition remains challenging for computer vision systems. Paragraph text recognition is traditionally achieved by two models: the first one for line segmentation and the second one for text line recognition. We propose a unified end-to-end model using hybrid attention to tackle this task. We achieve state-of-the-art character error rate at line and paragraph levels on three popular datasets: 1.90% for RIMES, 4.32% for IAM and 3.63% for READ 2016. The proposed model can be trained from scratch, without using any segmentation label, contrary to the standard approach. Our code and trained model weights are available at https://github.com/FactoDeepLearning/VerticalAttentionOCR.

Fast object detection in compressed JPEG Images

Apr 16, 2019

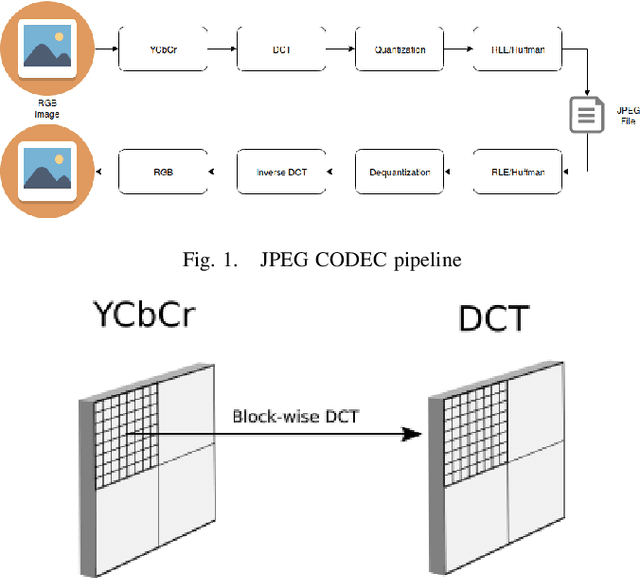

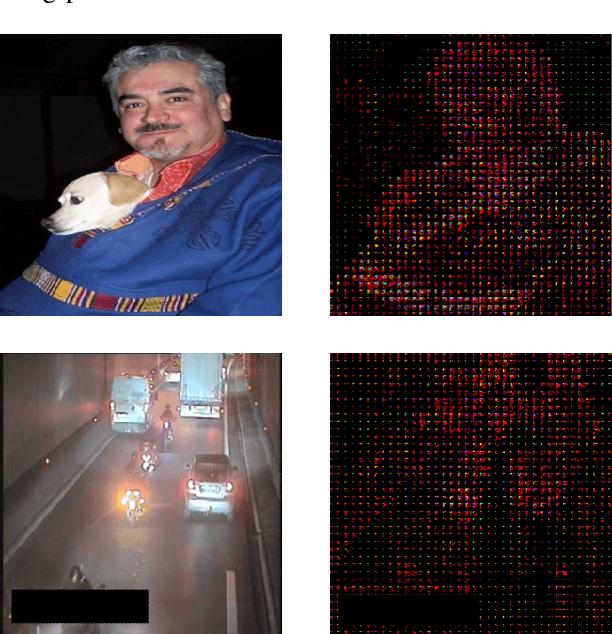

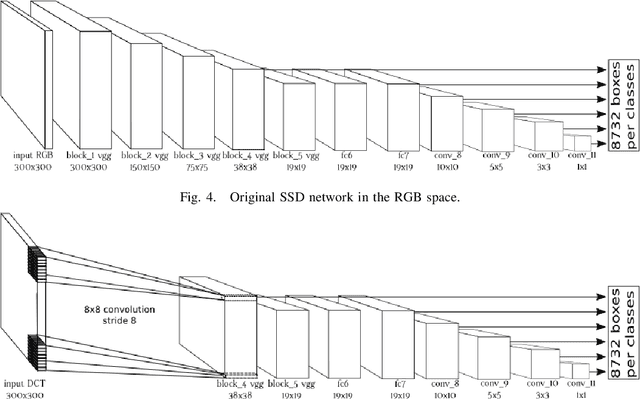

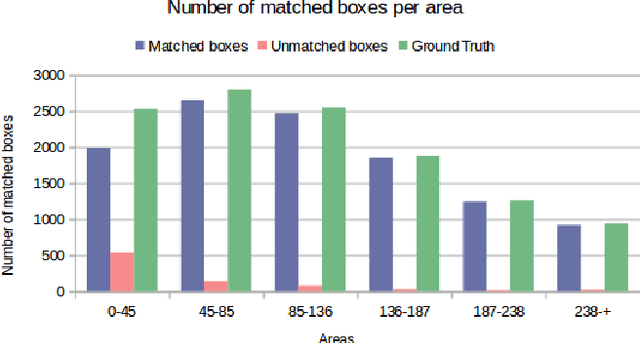

Abstract:Object detection in still images has drawn a lot of attention over the past few years, and with the advent of Deep Learning, impressive performance has been achieved, with numerous industrial applications. Most of these deep learning models rely on RGB images to localize and identify objects in the image. However, in some application scenarios, images are compressed either for storage savings or fast transmission. Therefore, a time-consuming image decompression step is compulsory in order to apply the aforementioned deep models. To alleviate this drawback, we propose a fast deep architecture for object detection in JPEG images, one of the most widespread compression formats. We train a neural network to detect objects based on the blockwise DCT (discrete cosine transform) coefficients produced by the JPEG compression algorithm. We modify the well-known Single Shot multibox Detector (SSD) by replacing its first layers with one convolutional layer dedicated to processing the DCT inputs. Experimental evaluations on PASCAL VOC and an industrial dataset comprising images of road traffic surveillance show that the model is about $2\times$ faster than regular SSD with promising detection performance. To the best of our knowledge, this paper is the first to address detection in compressed JPEG images.
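As background on the input representation, the sketch below computes the 8×8 two-dimensional DCT-II that JPEG applies to each block of pixel values. It is a plain-Python illustration of the transform only: the level shift by 128, quantization and entropy coding that JPEG also performs are left out, and the function name `dct2_block` is chosen for the example. It does not reproduce how the paper's network reads coefficients from a JPEG file.

```python
import math

N = 8  # JPEG operates on 8x8 blocks

def dct2_block(block):
    """2D DCT-II of an 8x8 block, with the normalization used by JPEG."""
    def c(k):
        # Scaling factor for the zero-frequency term.
        return 1 / math.sqrt(2) if k == 0 else 1.0

    out = [[0.0] * N for _ in range(N)]
    for u in range(N):
        for v in range(N):
            s = 0.0
            for x in range(N):
                for y in range(N):
                    s += (block[x][y]
                          * math.cos((2 * x + 1) * u * math.pi / (2 * N))
                          * math.cos((2 * y + 1) * v * math.pi / (2 * N)))
            # The factor 1/4 is 2/N for N = 8.
            out[u][v] = 0.25 * c(u) * c(v) * s
    return out

# A flat block has all its energy in the DC coefficient out[0][0].
flat = [[10.0] * N for _ in range(N)]
coeffs = dct2_block(flat)
```

For a constant block of value 10, the DC coefficient is 8 × 10 = 80 and every other coefficient is zero up to floating-point error, which shows how smooth image regions become very sparse in the DCT domain.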
