Yang Lyu

A Bi-directional Adaptive Framework for Agile UAV Landing

Jan 06, 2026

Abstract:Autonomous landing on mobile platforms is crucial for extending quadcopter operational flexibility, yet conventional methods are often too inefficient for highly dynamic scenarios. The core limitation lies in the prevalent "track-then-descend" paradigm, which treats the platform as a passive target and forces the quadcopter to perform complex, sequential maneuvers. This paper challenges that paradigm by introducing a bi-directional cooperative landing framework that redefines the roles of the vehicle and the platform. The essential innovation is transforming the problem from a single-agent tracking challenge into a coupled system optimization. Our key insight is that the mobile platform is not merely a target, but an active agent in the landing process. It proactively tilts its surface to create an optimal, stable terminal attitude for the approaching quadcopter. This active cooperation fundamentally breaks the sequential model by parallelizing the alignment and descent phases. Concurrently, the quadcopter's planning pipeline focuses on generating a time-optimal and dynamically feasible trajectory that minimizes energy consumption. This bi-directional coordination allows the system to execute the recovery in an agile manner, characterized by aggressive trajectory tracking and rapid state synchronization within transient windows. The framework's effectiveness, validated in dynamic scenarios, significantly improves the efficiency, precision, and robustness of autonomous quadrotor recovery in complex and time-constrained missions.

Probing the Critical Point (CritPt) of AI Reasoning: a Frontier Physics Research Benchmark

Oct 01, 2025

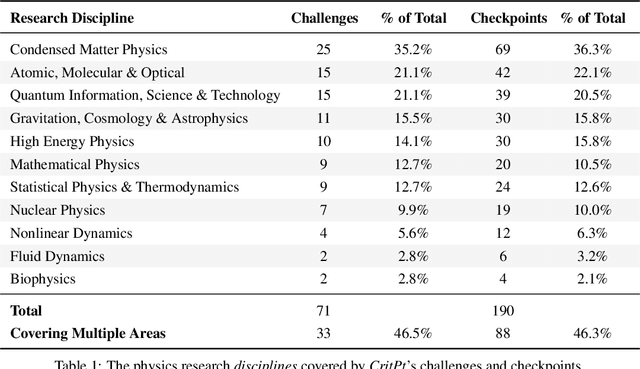

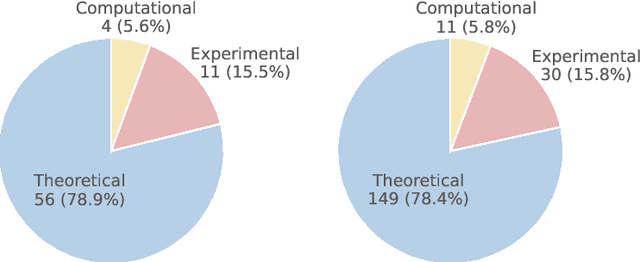

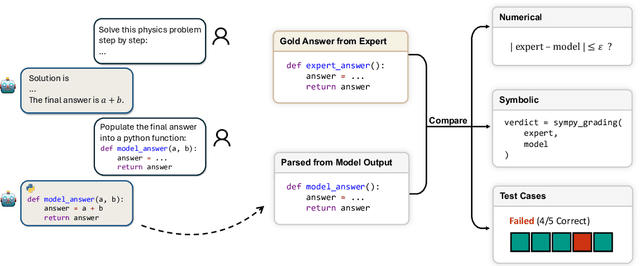

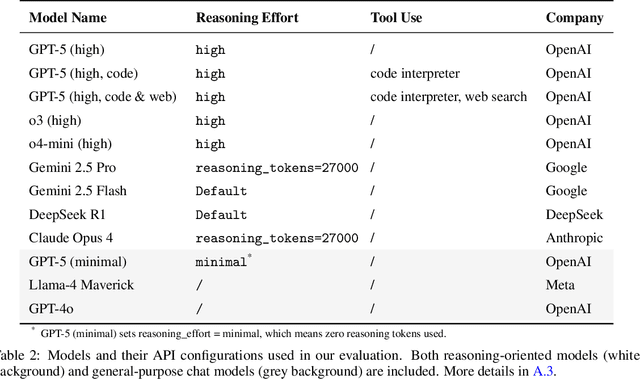

Abstract:While large language models (LLMs) with reasoning capabilities are progressing rapidly on high-school math competitions and coding, can they reason effectively through complex, open-ended challenges found in frontier physics research? And crucially, what kinds of reasoning tasks do physicists want LLMs to assist with? To address these questions, we present CritPt (Complex Research using Integrated Thinking - Physics Test, pronounced "critical point"), the first benchmark designed to test LLMs on unpublished, research-level reasoning tasks that broadly cover modern physics research areas, including condensed matter, quantum physics, atomic, molecular & optical physics, astrophysics, high energy physics, mathematical physics, statistical physics, nuclear physics, nonlinear dynamics, fluid dynamics and biophysics. CritPt consists of 71 composite research challenges designed to simulate full-scale research projects at the entry level, which are also decomposed into 190 simpler checkpoint tasks for more fine-grained insights. All problems are newly created by 50+ active physics researchers based on their own research. Every problem is hand-curated to admit a guess-resistant and machine-verifiable answer and is evaluated by an automated grading pipeline heavily customized for advanced physics-specific output formats. We find that while current state-of-the-art LLMs show early promise on isolated checkpoints, they remain far from being able to reliably solve full research-scale challenges: the best average accuracy among base models is only 4.0%, achieved by GPT-5 (high), moderately rising to around 10% when equipped with coding tools. Through the realistic yet standardized evaluation offered by CritPt, we highlight a large disconnect between current model capabilities and realistic physics research demands, offering a foundation to guide the development of scientifically grounded AI tools.

COLI: A Hierarchical Efficient Compressor for Large Images

Jul 15, 2025

Abstract:The escalating adoption of high-resolution, large-field-of-view imagery amplifies the need for efficient compression methodologies. Conventional techniques frequently fail to preserve critical image details, while data-driven approaches exhibit limited generalizability. Implicit Neural Representations (INRs) present a promising alternative by learning continuous mappings from spatial coordinates to pixel intensities for individual images, thereby storing network weights rather than raw pixels and avoiding the generalization problem. However, INR-based compression of large images faces challenges including slow compression speed and suboptimal compression ratios. To address these limitations, we introduce COLI (Compressor for Large Images), a novel framework leveraging Neural Representations for Videos (NeRV). First, recognizing that INR-based compression constitutes a training process, we accelerate its convergence through a pretraining-finetuning paradigm, mixed-precision training, and reformulation of the sequential loss into a parallelizable objective. Second, capitalizing on INRs' transformation of image storage constraints into weight storage, we implement Hyper-Compression, a novel post-training technique to substantially enhance compression ratios while maintaining minimal output distortion. Evaluations across two medical imaging datasets demonstrate that COLI consistently achieves competitive or superior PSNR and SSIM metrics at significantly reduced bits per pixel (bpp), while accelerating NeRV training by up to 4 times.

Resolving Memorization in Empirical Diffusion Model for Manifold Data in High-Dimensional Spaces

May 05, 2025

Abstract:Diffusion models are a popular computational tool for generating new data samples. They utilize a forward diffusion process that adds noise to the data distribution and then use a reverse process to remove the noise and produce samples from the data distribution. However, when the empirical data distribution consists of $n$ data points, using the empirical diffusion model will necessarily produce one of the existing data points. This is often referred to as the memorization effect, which is usually resolved by sophisticated machine learning procedures in the current literature. This work shows that the memorization problem can be resolved by a simple inertia update step at the end of the empirical diffusion model simulation. Our inertial diffusion model requires only the empirical diffusion model score function and does not require any further training. We show that the inertial diffusion model's sample distribution is an $O\left(n^{-\frac{2}{d+4}}\right)$ Wasserstein-1 approximation of a data distribution lying on a $C^2$ manifold of dimension $d$. Since this estimate is significantly smaller than the Wasserstein-1 distance between the population and empirical distributions, it rigorously shows that the inertial diffusion model produces new data samples. Remarkably, this upper bound is completely free of the ambient space dimension, since there is no training involved. Our analysis utilizes the fact that the inertial diffusion model samples are approximately distributed as the Gaussian kernel density estimator on the manifold. This reveals an interesting connection between diffusion models and manifold learning.
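The memorization effect described in this abstract can be illustrated with a small sketch. It assumes, purely for illustration, a variance-exploding forward process in which the noised empirical distribution at noise level `sigma` is an equal-weight mixture of Gaussians centred on the data points; the abstract does not specify the forward process, and the function name `empirical_score` is hypothetical. The paper's inertia update step is not reproduced here.

```python
import math

def empirical_score(x, data, sigma):
    """Score of (1/n) * sum_i N(x_i, sigma^2 I) at point x (assumed noise model).

    Returns (score, posterior_mean, weights), where posterior_mean is the
    denoised estimate x + sigma^2 * score.
    """
    # Log-weights of each data point, up to a shared constant.
    logw = [-sum((a - b) ** 2 for a, b in zip(x, xi)) / (2 * sigma ** 2)
            for xi in data]
    m = max(logw)  # subtract the max for numerical stability
    w = [math.exp(l - m) for l in logw]
    z = sum(w)
    w = [wi / z for wi in w]
    dim = len(x)
    mean = [sum(wi * xi[k] for wi, xi in zip(w, data)) for k in range(dim)]
    score = [(mean[k] - x[k]) / sigma ** 2 for k in range(dim)]
    return score, mean, w

data = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
# At small noise the denoised estimate collapses onto the nearest data point.
_, mean_small, _ = empirical_score((0.9, 0.1), data, sigma=0.05)
# At large noise it is close to the average of all data points.
_, mean_large, _ = empirical_score((0.9, 0.1), data, sigma=10.0)
```

As `sigma` shrinks, the weights concentrate on the nearest training point, so following this score to the end of the reverse process lands on an existing sample, which is the behaviour the abstract calls memorization.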

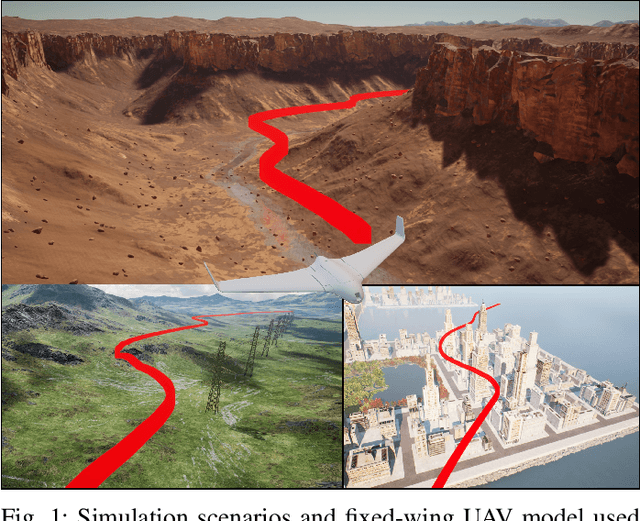

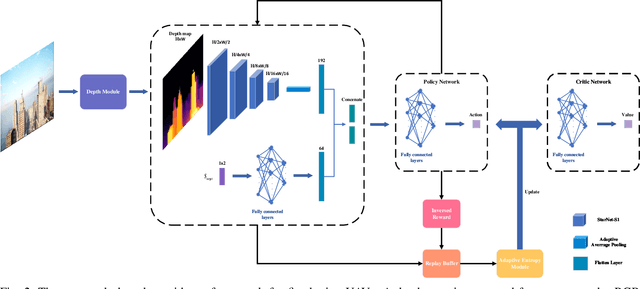

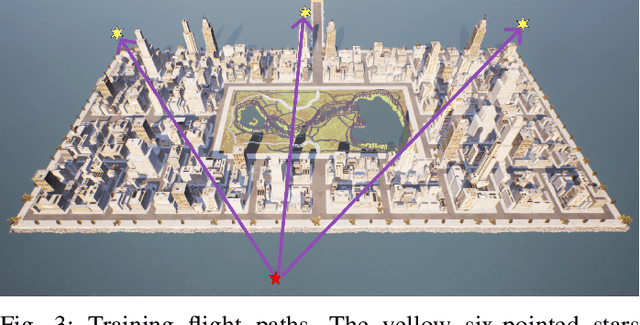

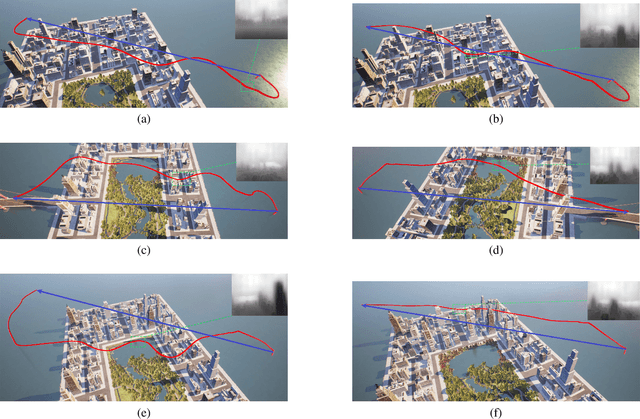

Monocular Obstacle Avoidance Based on Inverse PPO for Fixed-wing UAVs

Nov 27, 2024

Abstract:Fixed-wing Unmanned Aerial Vehicles (UAVs) are one of the most commonly used platforms for the burgeoning Low-altitude Economy (LAE) and Urban Air Mobility (UAM), due to their long endurance and high-speed capabilities. Classical obstacle avoidance systems, which rely on prior maps or sophisticated sensors, face limitations in unknown low-altitude environments and small UAV platforms. In response, this paper proposes a lightweight deep reinforcement learning (DRL) based UAV collision avoidance system that enables a fixed-wing UAV to avoid unknown obstacles at cruise speed over 30 m/s, with only onboard visual sensors. The proposed system employs a single-frame image depth inference module with a streamlined network architecture to ensure real-time obstacle detection, optimized for edge computing devices. After that, a reinforcement learning controller with a novel reward function is designed to balance the target approach and flight trajectory smoothness, satisfying the specific dynamic constraints and stability requirements of a fixed-wing UAV platform. An adaptive entropy adjustment mechanism is introduced to mitigate the exploration-exploitation trade-off inherent in DRL, improving training convergence and obstacle avoidance success rates. Extensive software-in-the-loop and hardware-in-the-loop experiments demonstrate that the proposed framework outperforms other methods in obstacle avoidance efficiency and flight trajectory smoothness and confirm the feasibility of implementing the algorithm on edge devices. The source code is publicly available at https://github.com/ch9397/FixedWing-MonoPPO.

Light-LOAM: A Lightweight LiDAR Odometry and Mapping based on Graph-Matching

Oct 06, 2023

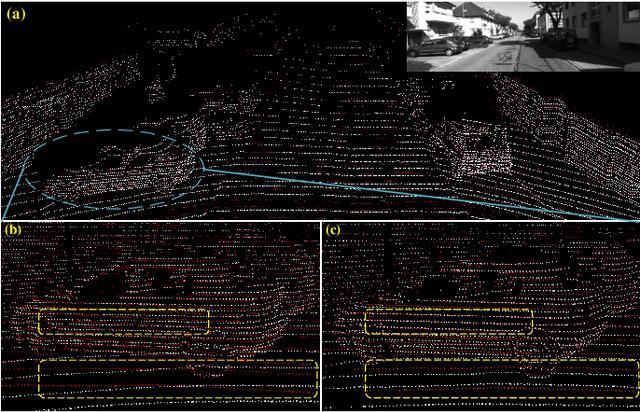

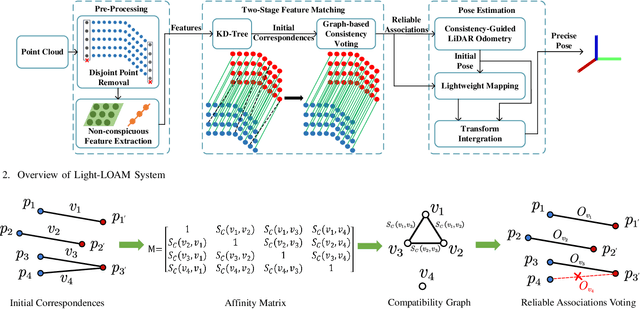

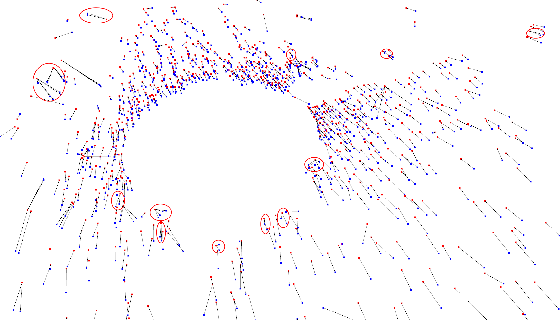

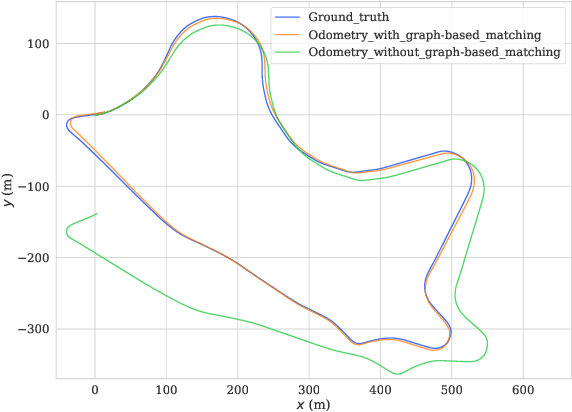

Abstract:Simultaneous Localization and Mapping (SLAM) plays an important role in robot autonomy. Reliability and efficiency are the two most valued features for applying SLAM in robot applications. In this paper, we consider achieving a reliable LiDAR-based SLAM function on computation-limited platforms, such as quadrotor UAVs, based on graph-based point cloud association. First, contrary to most works selecting salient features for point cloud registration, we propose a non-conspicuous feature selection strategy for reliability and robustness purposes. Then a two-stage correspondence selection method is used to register the point cloud, which includes a KD-tree-based coarse matching followed by a graph-based matching method that uses geometric consistency to vote out incorrect correspondences. Additionally, we propose an odometry approach where the weight optimizations are guided by vote results from the aforementioned geometric consistency graph. In this way, the optimization of LiDAR odometry rapidly converges and yields a fairly accurate transformation, allowing the back-end module to finish the mapping task efficiently. Finally, we evaluate our proposed framework on the KITTI odometry dataset and real-world environments. Experiments show that our SLAM system achieves a comparable or higher level of accuracy with more balanced computation efficiency compared with the mainstream LiDAR-based SLAM solutions.
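The idea of voting out incorrect correspondences by geometric consistency can be sketched as follows. This is a generic pairwise-distance consistency check, not the Light-LOAM implementation: it assumes that a rigid motion preserves distances between points, so two correspondences support each other when their source-side and target-side distances agree. The function names and the `tol` and `min_ratio` parameters are hypothetical.

```python
import math

def consistency_votes(src, dst, tol=0.05):
    """Count, for each correspondence (src[i], dst[i]), how many other
    correspondences are distance-consistent with it."""
    n = len(src)
    votes = [0] * n
    for i in range(n):
        for j in range(i + 1, n):
            d_src = math.dist(src[i], src[j])
            d_dst = math.dist(dst[i], dst[j])
            # A rigid transform keeps pairwise distances unchanged.
            if abs(d_src - d_dst) < tol:
                votes[i] += 1
                votes[j] += 1
    return votes

def filter_correspondences(src, dst, tol=0.05, min_ratio=0.5):
    """Keep indices supported by at least min_ratio of the other matches."""
    votes = consistency_votes(src, dst, tol)
    need = min_ratio * (len(src) - 1)
    return [i for i, v in enumerate(votes) if v >= need]

# Four points translated by (2, 0, 0), plus one wrong match.
src = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 1, 1)]
dst = [(2, 0, 0), (3, 0, 0), (2, 1, 0), (2, 0, 1), (10, 10, 10)]
inliers = filter_correspondences(src, dst)
```

The vote counts could also serve as per-correspondence weights in a subsequent pose optimization, in the spirit of the vote-guided weighting the abstract mentions; how the paper maps votes to weights is not stated here.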

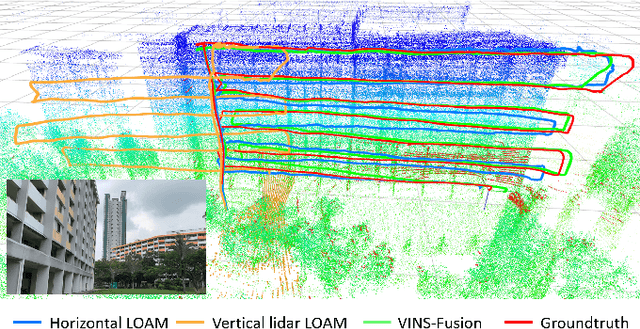

NTU VIRAL: A Visual-Inertial-Ranging-Lidar Dataset, From an Aerial Vehicle Viewpoint

Feb 01, 2022

Abstract:In recent years, autonomous robots have become ubiquitous in research and daily life. Among many factors, public datasets play an important role in the progress of this field, as they waive the tall order of initial investment in hardware and manpower. However, for research on autonomous aerial systems, there appears to be a relative lack of public datasets on par with those used for autonomous driving and ground robots. Thus, to fill in this gap, we conduct a data collection exercise on an aerial platform equipped with an extensive and unique set of sensors: two 3D lidars, two hardware-synchronized global-shutter cameras, multiple Inertial Measurement Units (IMUs), and especially, multiple Ultra-wideband (UWB) ranging units. The comprehensive sensor suite resembles that of an autonomous driving car, but features distinct and challenging characteristics of aerial operations. We record multiple datasets in several challenging indoor and outdoor conditions. Calibration results and ground truth from a high-accuracy laser tracker are also included in each package. All resources can be accessed via our webpage https://ntu-aris.github.io/ntu_viral_dataset.

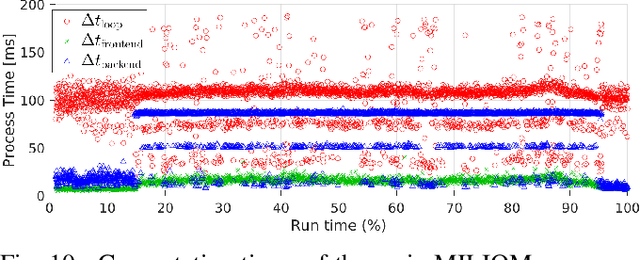

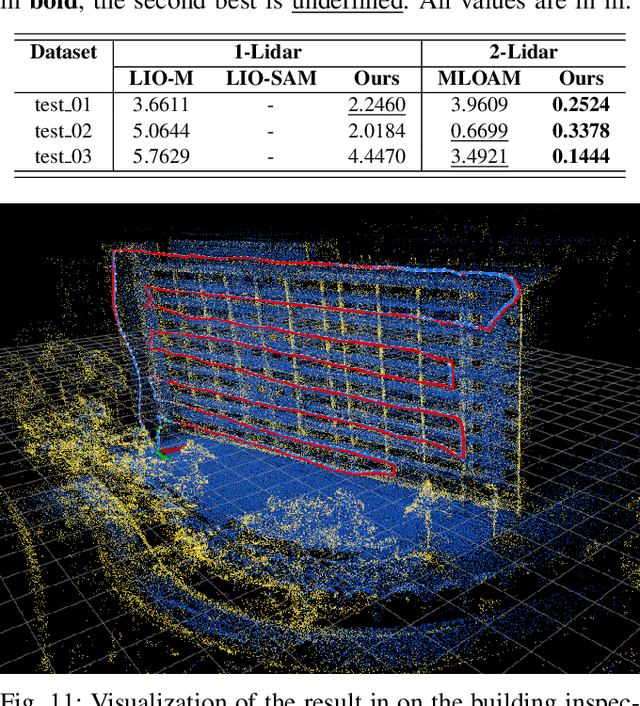

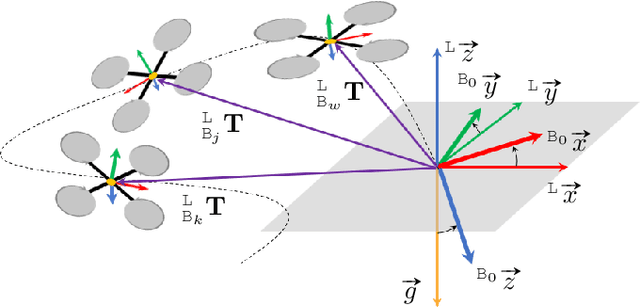

MILIOM: Tightly Coupled Multi-Input Lidar-Inertia Odometry and Mapping

Apr 24, 2021

Abstract:In this paper we investigate a tightly coupled Lidar-Inertia Odometry and Mapping (LIOM) scheme, with the capability to incorporate multiple lidars with complementary field of view (FOV). In essence, we devise a time-synchronized scheme to combine extracted features from separate lidars into a single pointcloud, which is then used to construct a local map and compute the feature-map matching (FMM) coefficients. These coefficients, along with the IMU preintegration observations, are then used to construct a factor graph that will be optimized to produce an estimate of the sliding window trajectory. We also propose a key frame-based map management strategy to marginalize certain poses and pointclouds in the sliding window to grow a global map, which is used to assemble the local map in the later stage. The use of multiple lidars with complementary FOV and the global map ensures that our estimate has low drift and can sustain good localization in situations where single lidar use gives poor results, or even fails to work. Multi-thread computation implementations are also adopted to fractionally cut down the computation time and ensure real-time performance. We demonstrate the efficacy of our system via a series of experiments on public datasets collected from an aerial vehicle.

Vision Based Autonomous UAV Plane Estimation And Following for Building Inspection

Feb 02, 2021

Abstract:Unmanned Aerial Vehicles (UAVs) have already demonstrated their potential in many civilian applications, and façade inspection is among the most promising ones. In this paper, we focus on enabling the autonomous perception and control of a small UAV for a façade inspection task. Specifically, we consider the perception as a planar object pose estimation problem by simplifying the building structure as a concatenation of planes, and the control as an optimal reference tracking control problem. First, a vision based adaptive observer is proposed which can realize stable plane pose estimation under very mild observation conditions. Second, a model predictive controller is designed to achieve stable tracking and smooth transition in a multi-plane scenario, while the persistent excitation (PE) condition of the observer and the maneuver constraints of the UAV are satisfied. The proposed autonomous plane pose estimation and plane tracking methods are tested in both simulation and practical building façade inspection scenarios, which demonstrate their effectiveness and practicability.

SPINS: Structure Priors aided Inertial Navigation System

Dec 28, 2020

Abstract:Although Simultaneous Localization and Mapping (SLAM) has been an active research topic for decades, current state-of-the-art methods still suffer from instability or inaccuracy in many civilian environments, due to feature insufficiency or inherent estimation drift. To resolve these issues, we propose a navigation system combining SLAM and prior-map-based localization. Specifically, we consider additional integration of line and plane features, which are ubiquitous and more structurally salient in civilian environments, into the SLAM to ensure feature sufficiency and localization robustness. More importantly, we incorporate general prior map information into the SLAM to restrain its drift and improve the accuracy. To avoid rigorous association between prior information and local observations, we parameterize the prior knowledge as low dimensional structural priors defined as relative distances/angles between different geometric primitives. The localization is formulated as a graph-based optimization problem that contains sliding-window-based variables and factors, including IMU, heterogeneous features, and structure priors. We also derive the analytical expressions of Jacobians of different factors to avoid the automatic differentiation overhead. To further alleviate the computation burden of incorporating structural prior factors, a selection mechanism is adopted based on the so-called information gain to incorporate only the most effective structure priors in the graph optimization. Finally, the proposed framework is extensively tested on synthetic data, public datasets, and, more importantly, on the real UAV flight data obtained from a building inspection task. The results show that the proposed scheme can effectively improve the accuracy and robustness of localization for autonomous robots in civilian applications.
