Monocular Obstacle Avoidance Based on Inverse PPO for Fixed-wing UAVs

Paper and Code

Nov 27, 2024

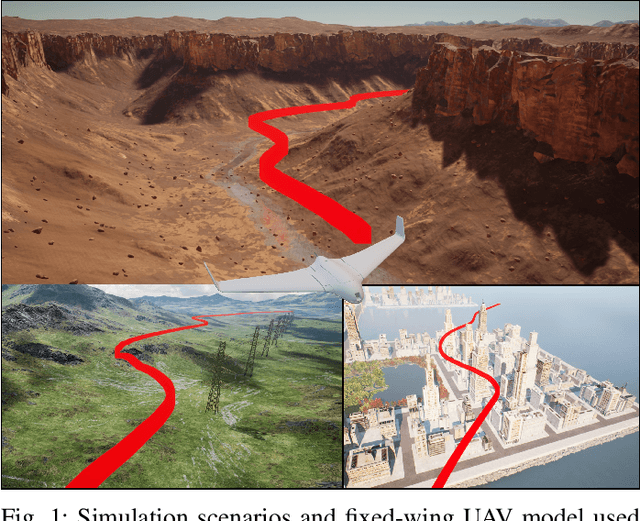

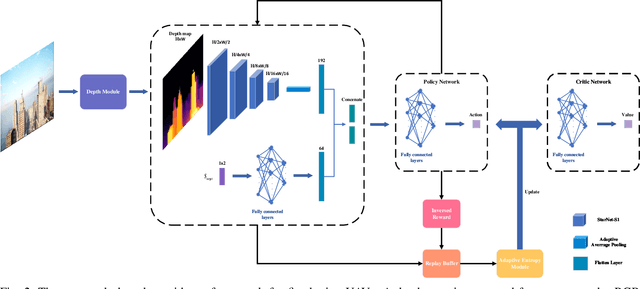

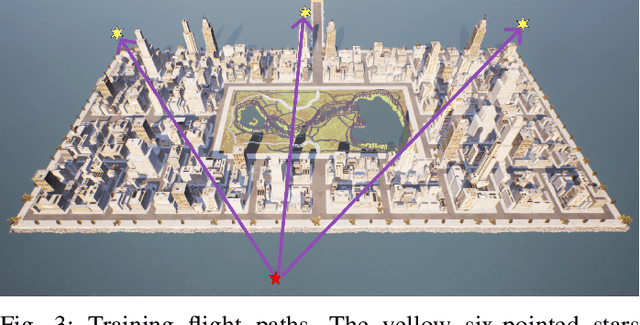

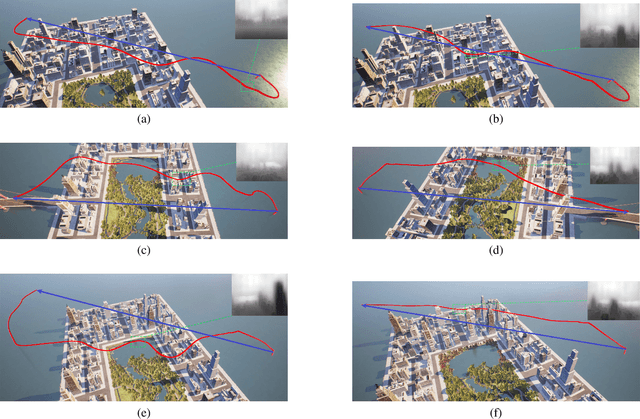

Fixed-wing Unmanned Aerial Vehicles (UAVs) are one of the most commonly used platforms for the burgeoning Low-altitude Economy (LAE) and Urban Air Mobility (UAM), due to their long endurance and high-speed capabilities. Classical obstacle avoidance systems, which rely on prior maps or sophisticated sensors, face limitations in unknown low-altitude environments and small UAV platforms. In response, this paper proposes a lightweight deep reinforcement learning (DRL) based UAV collision avoidance system that enables a fixed-wing UAV to avoid unknown obstacles at cruise speed over 30m/s, with only onboard visual sensors. The proposed system employs a single-frame image depth inference module with a streamlined network architecture to ensure real-time obstacle detection, optimized for edge computing devices. After that, a reinforcement learning controller with a novel reward function is designed to balance the target approach and flight trajectory smoothness, satisfying the specific dynamic constraints and stability requirements of a fixed-wing UAV platform. An adaptive entropy adjustment mechanism is introduced to mitigate the exploration-exploitation trade-off inherent in DRL, improving training convergence and obstacle avoidance success rates. Extensive software-in-the-loop and hardware-in-the-loop experiments demonstrate that the proposed framework outperforms other methods in obstacle avoidance efficiency and flight trajectory smoothness and confirm the feasibility of implementing the algorithm on edge devices. The source code is publicly available at \url{https://github.com/ch9397/FixedWing-MonoPPO}.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge