Yadollah Yaghoobzadeh

FFE-Hallu: Hallucinations in Fixed Figurative Expressions: Benchmark of Idioms and Proverbs in the Persian Language

Jan 27, 2026

Abstract:Figurative language, particularly fixed figurative expressions (FFEs) such as idioms and proverbs, poses persistent challenges for large language models (LLMs). Unlike literal phrases, FFEs are culturally grounded, largely non-compositional, and conventionally fixed, making them especially vulnerable to figurative hallucination. We define figurative hallucination as the generation or endorsement of expressions that sound idiomatic and plausible but do not exist as authentic figurative expressions in the target language. We introduce FFEHallu, the first comprehensive benchmark for evaluating figurative hallucination in LLMs, with a focus on Persian, a linguistically rich yet underrepresented language. FFEHallu consists of 600 carefully curated instances spanning three complementary tasks: (i) FFE generation from meaning, (ii) detection of fabricated FFEs across four controlled construction categories, and (iii) FFE-to-FFE translation from English to Persian. Evaluating six state-of-the-art multilingual LLMs, we find systematic weaknesses in figurative competence and cultural grounding. While models such as GPT-4.1 demonstrate relatively strong performance in rejecting fabricated FFEs and retrieving authentic ones, most models struggle to reliably distinguish real expressions from high-quality fabrications and frequently hallucinate during cross-lingual translation. These findings reveal substantial gaps in current LLMs' handling of figurative language and underscore the need for targeted benchmarks to assess and mitigate figurative hallucination.

Layer-wise Positional Bias in Short-Context Language Modeling

Jan 07, 2026

Abstract:Language models often show a preference for using information from specific positions in the input regardless of semantic relevance. While positional bias has been studied in various contexts, from attention sinks to task performance degradation in long-context settings, prior work has not established how these biases evolve across individual layers and input positions, or how they vary independent of task complexity. We introduce an attribution-based framework to analyze positional effects in short-context language modeling. Using layer conductance with a sliding-window approach, we quantify how each layer distributes importance across input positions, yielding layer-wise positional importance profiles. We find that these profiles are architecture-specific, stable across inputs, and invariant to lexical scrambling. Characterizing these profiles, we find prominent recency bias that increases with depth and subtle primacy bias that diminishes through model depth. Beyond positional structure, we also show that early layers preferentially weight content words over function words across all positions, while later layers lose this word-type differentiation.

A Dual-Axis Taxonomy of Knowledge Editing for LLMs: From Mechanisms to Functions

Aug 12, 2025

Abstract:Large language models (LLMs) acquire vast knowledge from large text corpora, but this information can become outdated or inaccurate. Since retraining is computationally expensive, knowledge editing offers an efficient alternative -- modifying internal knowledge without full retraining. These methods aim to update facts precisely while preserving the model's overall capabilities. While existing surveys focus on the mechanism of editing (e.g., parameter changes vs. external memory), they often overlook the function of the knowledge being edited. This survey introduces a novel, complementary function-based taxonomy to provide a more holistic view. We examine how different mechanisms apply to various knowledge types -- factual, temporal, conceptual, commonsense, and social -- highlighting how editing effectiveness depends on the nature of the target knowledge. By organizing our review along these two axes, we map the current landscape, outline the strengths and limitations of existing methods, define the problem formally, survey evaluation tasks and datasets, and conclude with open challenges and future directions.

GenKnowSub: Improving Modularity and Reusability of LLMs through General Knowledge Subtraction

May 16, 2025

Abstract:Large language models often struggle with zero-shot generalization, and several modular approaches have been proposed to address this challenge. Yet, we hypothesize that a key limitation remains: the entanglement of general knowledge and task-specific adaptations. To overcome this, we propose a modular framework that disentangles these components by constructing a library of task-specific LoRA modules alongside a general-domain LoRA. By subtracting this general knowledge component from each task-specific module, we obtain residual modules that focus more exclusively on task-relevant information, a method we call general knowledge subtraction (GenKnowSub). Leveraging the refined task-specific modules and the Arrow routing algorithm (Ostapenko et al., 2024), we dynamically select and combine modules for new inputs without additional training. Our experiments, with the Phi-3 model and standard Arrow as baselines, reveal that using general knowledge LoRAs derived from diverse languages, including English, French, and German, yields consistent performance gains in both monolingual and cross-lingual settings across a wide set of benchmarks. Further experiments on Phi-2 demonstrate how GenKnowSub generalizes to weaker LLMs. The complete code and data are available at https://github.com/saharsamr/Modular-LLM.
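The subtraction step described in this abstract can be illustrated with a toy sketch. It assumes each LoRA module is a low-rank pair (B, A) whose implied weight update is the product B·A, and that the general-domain update is subtracted from the task-specific update in that dense weight space. The abstract does not say in which space the subtraction is performed, so that choice, along with the helper names `matmul`, `lora_delta`, and `subtract_general` and all the numbers, is hypothetical and not taken from the authors' code.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Multiply an (n x k) matrix by a (k x m) matrix using plain lists."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_delta(B: Matrix, A: Matrix) -> Matrix:
    """Dense weight update implied by a LoRA pair: delta_W = B @ A."""
    return matmul(B, A)


def subtract_general(task_delta: Matrix, general_delta: Matrix) -> Matrix:
    """Element-wise residual: task-specific update minus general-domain update.

    Assumption: subtraction happens on the dense update, not on A and B separately.
    """
    return [
        [t - g for t, g in zip(task_row, general_row)]
        for task_row, general_row in zip(task_delta, general_delta)
    ]


# Toy rank-1 adapters for a 2x2 layer (illustrative values only).
task_B, task_A = [[1.0], [2.0]], [[3.0, 4.0]]
general_B, general_A = [[1.0], [0.0]], [[1.0, 1.0]]

residual = subtract_general(
    lora_delta(task_B, task_A),
    lora_delta(general_B, general_A),
)
print(residual)
```

In this sketch the residual is a dense matrix and is generally no longer low-rank; how the residual modules are actually stored and routed is not specified in the abstract.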

Explanations of Deep Language Models Explain Language Representations in the Brain

Feb 21, 2025

Abstract:Recent advances in artificial intelligence have given rise to large language models (LLMs) that not only achieve human-like performance but also share computational principles with the brain's language processing mechanisms. While previous research has primarily focused on aligning LLMs' internal representations with neural activity, we introduce a novel approach that leverages explainable AI (XAI) methods to forge deeper connections between the two domains. Using attribution methods, we quantified how preceding words contribute to an LLM's next-word predictions and employed these explanations to predict fMRI recordings from participants listening to the same narratives. Our findings demonstrate that attribution methods robustly predict brain activity across the language network, surpassing traditional internal representations in early language areas. This alignment is hierarchical: early-layer explanations correspond to the initial stages of language processing in the brain, while later layers align with more advanced stages. Moreover, the layers more influential on LLM next-word prediction (those with higher attribution scores) exhibited stronger alignment with neural activity. This work establishes a bidirectional bridge between AI and neuroscience. First, we demonstrate that attribution methods offer a powerful lens for investigating the neural mechanisms of language comprehension, revealing how meaning emerges from preceding context. Second, we propose using brain alignment as a metric to evaluate the validity of attribution methods, providing a framework for assessing their biological plausibility.

PerCul: A Story-Driven Cultural Evaluation of LLMs in Persian

Feb 11, 2025

Abstract:Large language models predominantly reflect Western cultures, largely due to the dominance of English-centric training data. This imbalance presents a significant challenge, as LLMs are increasingly used across diverse contexts without adequate evaluation of their cultural competence in non-English languages, including Persian. To address this gap, we introduce PerCul, a carefully constructed dataset designed to assess the sensitivity of LLMs toward Persian culture. PerCul features story-based, multiple-choice questions that capture culturally nuanced scenarios. Unlike existing benchmarks, PerCul is curated with input from native Persian annotators to ensure authenticity and to prevent the use of translation as a shortcut. We evaluate several state-of-the-art multilingual and Persian-specific LLMs, establishing a foundation for future research in cross-cultural NLP evaluation. Our experiments demonstrate an 11.3% gap between the best closed-source model and the layperson baseline, while the gap increases to 21.3% with the best open-weight model. The dataset is available at https://huggingface.co/datasets/teias-ai/percul

Extending LLMs to New Languages: A Case Study of Llama and Persian Adaptation

Dec 17, 2024

Abstract:Large language models (LLMs) have made great progress in classification and text generation tasks. However, they are mainly trained on English data and often struggle with low-resource languages. In this study, we explore adding a new language, i.e., Persian, to Llama (a model with a limited understanding of Persian) using parameter-efficient fine-tuning. We employ a multi-stage approach involving pretraining on monolingual Persian data, aligning representations through bilingual pretraining and instruction datasets, and instruction-tuning with task-specific datasets. We evaluate the model's performance at each stage on generation and classification tasks. Our findings suggest that incorporating the Persian language, through bilingual data alignment, can enhance classification accuracy for Persian tasks, with no adverse impact and sometimes even improvements on English tasks. Additionally, the results highlight the model's initial strength as a critical factor when working with limited training data, with cross-lingual alignment offering minimal benefits for the low-resource language. Knowledge transfer from English to Persian has a marginal effect, primarily benefiting simple classification tasks.

A Comparative Study of LLMs, NMT Models, and Their Combination in Persian-English Idiom Translation

Dec 13, 2024

Abstract:Large language models (LLMs) have shown superior capabilities in translating figurative language compared to neural machine translation (NMT) systems. However, the impact of different prompting methods and LLM-NMT combinations on idiom translation has yet to be thoroughly investigated. This paper introduces two parallel datasets of sentences containing idiomatic expressions for Persian→English and English→Persian translations, with Persian idioms sampled from our PersianIdioms resource, a collection of 2,200 idioms and their meanings. Using these datasets, we evaluate various open- and closed-source LLMs, NMT models, and their combinations. Translation quality is assessed through idiom translation accuracy and fluency. We also find that automatic evaluation methods like LLM-as-a-judge, BLEU and BERTScore are effective for comparing different aspects of model performance. Our experiments reveal that Claude-3.5-Sonnet delivers outstanding results in both translation directions. For English→Persian, combining weaker LLMs with Google Translate improves results, while Persian→English translations benefit from single prompts for simpler models and complex prompts for advanced ones.

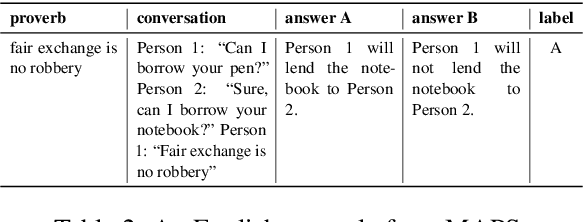

Comparative Study of Multilingual Idioms and Similes in Large Language Models

Oct 21, 2024

Abstract:This study addresses the gap in the literature concerning the comparative performance of LLMs in interpreting different types of figurative language across multiple languages. By evaluating LLMs using two multilingual datasets on simile and idiom interpretation, we explore the effectiveness of various prompt engineering strategies, including chain-of-thought, few-shot, and English translation prompts. We also extend these datasets to Persian by building two new evaluation sets. Our comprehensive assessment involves both closed-source models (GPT-3.5, GPT-4o mini, Gemini 1.5) and open-source models (Llama 3.1, Qwen2), highlighting significant differences in performance across languages and figurative types. Our findings reveal that while prompt engineering methods are generally effective, their success varies by figurative type, language, and model. We also observe that open-source models struggle particularly with similes in low-resource languages. Additionally, idiom interpretation is nearing saturation for many languages, necessitating more challenging evaluations.

Counterfactuals As a Means for Evaluating Faithfulness of Attribution Methods in Autoregressive Language Models

Aug 21, 2024

Abstract:Despite the widespread adoption of autoregressive language models, explainability evaluation research has predominantly focused on span infilling and masked language models (MLMs). Evaluating the faithfulness of an explanation method -- how accurately the method explains the inner workings and decision-making of the model -- is challenging because it is hard to separate the model from its explanation. Most faithfulness evaluation techniques corrupt or remove some input tokens considered important according to a particular attribution (feature importance) method and observe the change in the model's output. This approach creates out-of-distribution inputs for causal language models (CLMs) due to their training objective of next-token prediction. In this study, we propose a technique that leverages counterfactual generation to evaluate the faithfulness of attribution methods for autoregressive language modeling scenarios. Our technique creates fluent, in-distribution counterfactuals that make the evaluation protocol more reliable. Code is available at https://github.com/Sepehr-Kamahi/faith