Y V S Harish

DeepMPCVS: Deep Model Predictive Control for Visual Servoing

May 03, 2021

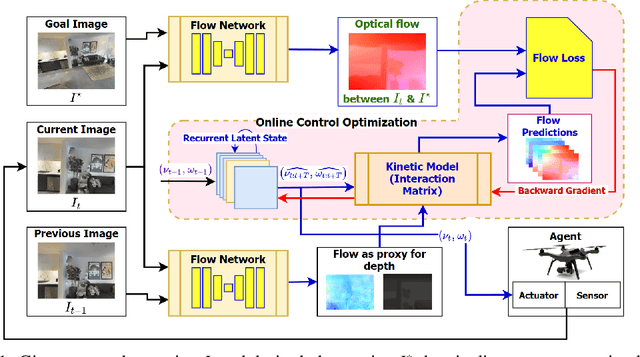

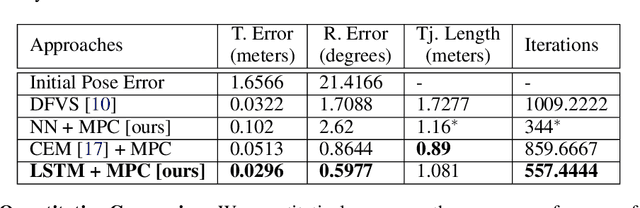

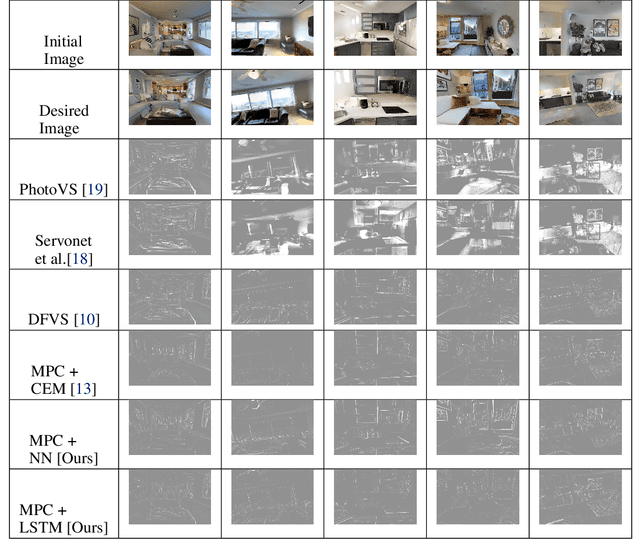

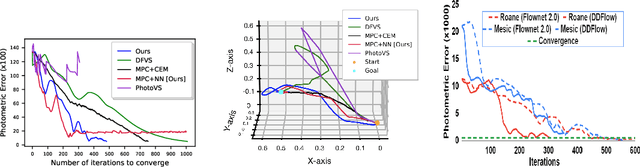

Abstract:The simplicity of the visual servoing approach makes it an attractive option for tasks dealing with vision-based control of robots in many real-world applications. However, attaining precise alignment for unseen environments pose a challenge to existing visual servoing approaches. While classical approaches assume a perfect world, the recent data-driven approaches face issues when generalizing to novel environments. In this paper, we aim to combine the best of both worlds. We present a deep model predictive visual servoing framework that can achieve precise alignment with optimal trajectories and can generalize to novel environments. Our framework consists of a deep network for optical flow predictions, which are used along with a predictive model to forecast future optical flow. For generating an optimal set of velocities we present a control network that can be trained on the fly without any supervision. Through extensive simulations on photo-realistic indoor settings of the popular Habitat framework, we show significant performance gain due to the proposed formulation vis-a-vis recent state-of-the-art methods. Specifically, we show a faster convergence and an improved performance in trajectory length over recent approaches.

* Accepted at 4th Annual Conference on Robot Learning, CoRL 2020, Cambridge, MA, USA, November 16 - November 18, 2020

DFVS: Deep Flow Guided Scene Agnostic Image Based Visual Servoing

Mar 08, 2020

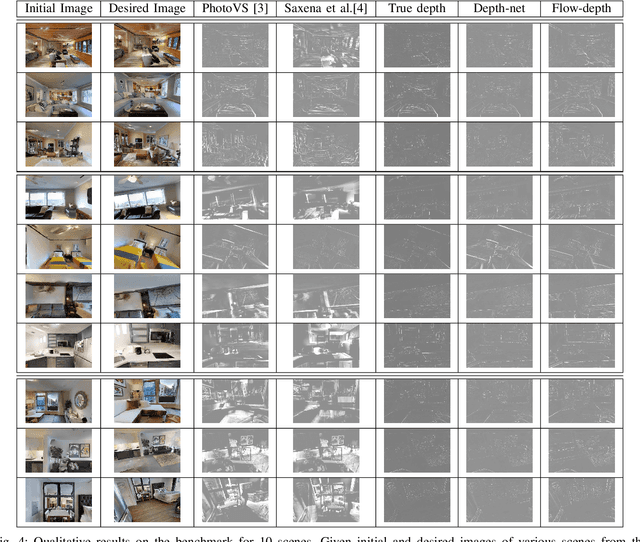

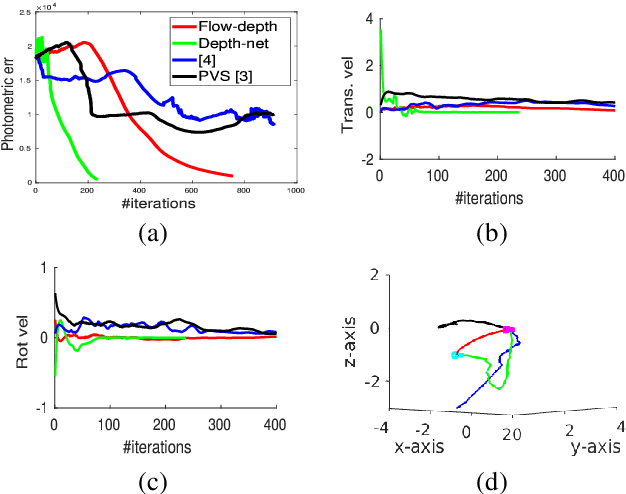

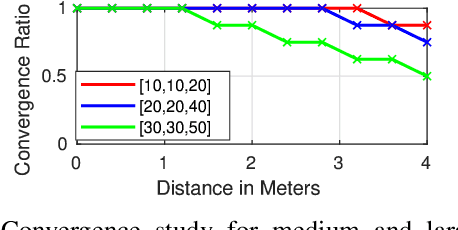

Abstract:Existing deep learning based visual servoing approaches regress the relative camera pose between a pair of images. Therefore, they require a huge amount of training data and sometimes fine-tuning for adaptation to a novel scene. Furthermore, current approaches do not consider underlying geometry of the scene and rely on direct estimation of camera pose. Thus, inaccuracies in prediction of the camera pose, especially for distant goals, lead to a degradation in the servoing performance. In this paper, we propose a two-fold solution: (i) We consider optical flow as our visual features, which are predicted using a deep neural network. (ii) These flow features are then systematically integrated with depth estimates provided by another neural network using interaction matrix. We further present an extensive benchmark in a photo-realistic 3D simulation across diverse scenes to study the convergence and generalisation of visual servoing approaches. We show convergence for over 3m and 40 degrees while maintaining precise positioning of under 2cm and 1 degree on our challenging benchmark where the existing approaches that are unable to converge for majority of scenarios for over 1.5m and 20 degrees. Furthermore, we also evaluate our approach for a real scenario on an aerial robot. Our approach generalizes to novel scenarios producing precise and robust servoing performance for 6 degrees of freedom positioning tasks with even large camera transformations without any retraining or fine-tuning.

Object Parsing in Sequences Using CoordConv Gated Recurrent Networks

Oct 02, 2019

Abstract:We present a monocular object parsing framework for consistent keypoint localization by capturing temporal correlation on sequential data. In this paper, we propose a novel recurrent network based architecture to model long-range dependencies between intermediate features which are highly useful in tasks like keypoint localization and tracking. We leverage the expressiveness of the popular stacked hourglass architecture and augment it by adopting memory units between intermediate layers of the network with weights shared across stages for video frames. We observe that this weight sharing scheme not only enables us to frame hourglass architecture as a recurrent network but also prove to be highly effective in producing increasingly refined estimates for sequential tasks. Furthermore, we propose a new memory cell, we call CoordConvGRU which learns to selectively preserve spatio-temporal correlation and showcase our results on the keypoint localization task. The experiments show that our approach is able to model the motion dynamics between the frames and significantly outperforms the baseline hourglass network. Even though our network is trained on a synthetically rendered dataset, we observe that with minimal fine tuning on 300 real images we are able to achieve performance at par with various state-of-the-art methods trained with the same level of supervisory inputs. By using a simpler architecture than other methods enables us to run it in real time on a standard GPU which is desirable for such applications. Finally, we make our architectures and 524 annotated sequences of cars from KITTI dataset publicly available.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge