Xuanxuan Zhang

Dynamic Initialization for LiDAR-inertial SLAM

Apr 02, 2025

Abstract:The accuracy of the initial state, including initial velocity, gravity direction, and IMU biases, is critical for the initialization of LiDAR-inertial SLAM systems. Inaccurate initial values can reduce initialization speed or lead to failure. When the system faces urgent tasks, robust and fast initialization is required while the robot is moving, such as during the swift assessment of rescue environments after natural disasters, bomb disposal, and restarting LiDAR-inertial SLAM in rescue missions. However, existing initialization methods usually require the platform to remain stationary, which is ineffective when the robot is in motion. To address this issue, this paper introduces a robust and fast dynamic initialization method for LiDAR-inertial systems (D-LI-Init). This method iteratively aligns LiDAR-based odometry with IMU measurements to achieve system initialization. To enhance the reliability of the LiDAR odometry module, the LiDAR and gyroscope are tightly integrated within the ESIKF framework. The gyroscope compensates for rotational distortion in the point cloud. Translational distortion compensation occurs during the iterative update phase, resulting in the output of LiDAR-gyroscope odometry. The proposed method can initialize the system whether the robot is moving or stationary. Experiments on public datasets and real-world environments demonstrate that the D-LI-Init algorithm can effectively serve various platforms, including vehicles, handheld devices, and UAVs. D-LI-Init completes dynamic initialization regardless of specific motion patterns. To benefit the research community, we have open-sourced our code and test datasets on GitHub.
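
The gyroscope-based rotational compensation mentioned in the abstract can be illustrated with a minimal sketch. It is not the D-LI-Init implementation: it assumes a constant body-frame angular velocity over one scan, ignores translation entirely, and the names `rotvec_to_matrix` and `deskew_rotation` are hypothetical. Each point is re-expressed in the sensor frame at the end of the scan.

```python
import numpy as np

def rotvec_to_matrix(rotvec):
    """Rodrigues' formula: rotation vector (axis * angle, in rad) to a 3x3 matrix."""
    angle = np.linalg.norm(rotvec)
    if angle < 1e-12:
        return np.eye(3)
    k = rotvec / angle
    K = np.array([[0.0, -k[2], k[1]],
                  [k[2], 0.0, -k[0]],
                  [-k[1], k[0], 0.0]])
    return np.eye(3) + np.sin(angle) * K + (1.0 - np.cos(angle)) * (K @ K)

def deskew_rotation(points, stamps, omega, t_end):
    """Express every point in the sensor frame at time t_end.

    Assumptions (illustrative only): omega is a constant body-frame
    angular velocity in rad/s, and the sensor does not translate.
    """
    points = np.asarray(points, dtype=float)
    omega = np.asarray(omega, dtype=float)
    out = np.empty_like(points)
    for i, (p, t) in enumerate(zip(points, stamps)):
        # The sensor rotates by Exp(omega * dt) between t and t_end, so a
        # static point appears rotated by the inverse in the end frame.
        out[i] = rotvec_to_matrix(-omega * (t_end - t)) @ p
    return out
```

For example, with the sensor yawing at 90 degrees per second, a point seen at `(1, 0, 0)` one second before the scan ends maps to `(0, -1, 0)` in the end-of-scan frame, while a point measured at the end of the scan is left unchanged.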

Selective Kalman Filter: When and How to Fuse Multi-Sensor Information to Overcome Degeneracy in SLAM

Dec 23, 2024

Abstract:Research trends in SLAM systems are now focusing more on multi-sensor fusion to handle challenging and degenerate environments. However, most existing multi-sensor fusion SLAM methods use all of the data from a range of sensors, a strategy we refer to as the all-in method. This method, while merging the benefits of different sensors, also brings in their weaknesses, lowering robustness and accuracy and leading to high computational demands. To address this, we propose a new fusion approach, the Selective Kalman Filter, to carefully choose and fuse information from multiple sensors (using LiDAR and visual observations as examples in this paper). For deciding when to fuse data, we implement degeneracy detection in LiDAR SLAM, incorporating visual measurements only when LiDAR SLAM exhibits degeneracy. Regarding degeneracy detection, we propose an elegant yet straightforward approach to determine the degeneracy of LiDAR SLAM and to identify the specific degenerate direction. This method fully considers the coupled relationship between rotational and translational constraints. In terms of how to fuse data, we use visual measurements only to update the specific degenerate states. As a result, our proposed method improves upon the all-in method by greatly enhancing real-time performance, because less visual data is processed, and it introduces fewer errors from visual measurements. Experiments demonstrate that our method for degeneracy detection and fusion, in addressing degeneracy issues, exhibits higher precision and robustness compared to other state-of-the-art methods, and offers enhanced real-time performance relative to the all-in method. The code is openly available.
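
The "when" and "how" of selective fusion can be sketched in a few lines. This is not the paper's detector: it swaps in the classic eigenvalue-threshold test on a translation-only information matrix built from point-to-plane normals, and it ignores the rotation-translation coupling that the abstract says the proposed method accounts for. The function names and the threshold value are hypothetical.

```python
import numpy as np

def degenerate_directions(normals, threshold):
    """Return the weakly constrained translation directions as a 3 x k matrix.

    Assumption: each LiDAR point-to-plane residual constrains translation
    along its plane normal, so H = N^T N acts as the information matrix.
    """
    normals = np.asarray(normals, dtype=float)
    H = normals.T @ normals
    eigvals, eigvecs = np.linalg.eigh(H)  # eigenvalues in ascending order
    return eigvecs[:, eigvals < threshold]

def selective_update(lidar_update, visual_update, degenerate):
    """Use the visual update only along degenerate directions."""
    P = degenerate @ degenerate.T  # projector onto the degenerate subspace
    lidar_update = np.asarray(lidar_update, dtype=float)
    visual_update = np.asarray(visual_update, dtype=float)
    return (np.eye(3) - P) @ lidar_update + P @ visual_update
```

In a corridor whose planes have normals only along y and z, the x axis is flagged as degenerate, so only the x component of the visual update is used. When no direction falls below the threshold, the projector is zero and the visual update is ignored.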

A Real-time Degeneracy Sensing and Compensation Method for Enhanced LiDAR SLAM

Dec 10, 2024

Abstract:LiDAR is widely used in Simultaneous Localization and Mapping (SLAM) and autonomous driving. LiDAR odometry is of great importance in multi-sensor fusion. However, in some unstructured environments, point cloud registration cannot constrain the LiDAR poses because geometric features are sparse, which degrades multi-sensor fusion accuracy. To address this problem, we propose a novel real-time approach to sense and compensate for the degeneracy of LiDAR. First, this paper introduces a degeneracy factor with a clear meaning, which measures the degeneracy of LiDAR. Then, the Density-Based Spatial Clustering of Applications with Noise (DBSCAN) clustering method adaptively perceives the degeneracy with better environmental generalization. Finally, the degeneracy perception results are used to fuse LiDAR and IMU, thus effectively resisting degeneracy effects. Experiments on our dataset show the method's high accuracy and robustness and validate our algorithm's adaptability to different environments and LiDAR scanning modalities.

AC-LIO: Towards Asymptotic and Consistent Convergence in LiDAR-Inertial Odometry

Dec 08, 2024

Abstract:Existing LiDAR-Inertial Odometry (LIO) frameworks typically utilize prior state trajectories derived from IMU integration to compensate for the motion distortion within LiDAR frames, and demonstrate outstanding accuracy and stability in regular low-speed and smooth scenes. However, in high-speed or intense motion scenarios, the residual distortion may increase due to the limited accuracy and frequency of the IMU. This degrades the consistency between the LiDAR frame and the geometric environment it represents, causing pointcloud registration to fall into local optima and consequently increasing the drift in long-time and large-scale localization. To address the issue, we propose a novel asymptotically and consistently converging LIO framework called AC-LIO. First, during the iterative state estimation, we propagate the update term backwards along the prior state chain, and asymptotically compensate the residual distortion before the next iteration. Second, considering the weak correlation between the initial error and the motion distortion of the current frame, we propose a convergence criterion based on pointcloud constraints to control the back propagation. Guiding the asymptotic distortion compensation with this convergence criterion promotes the consistent convergence of pointcloud registration and increases the accuracy and robustness of LIO. Experiments show that our AC-LIO framework, compared to other state-of-the-art frameworks, effectively promotes consistent convergence in state estimation and further improves the accuracy of long-time and large-scale localization and mapping.

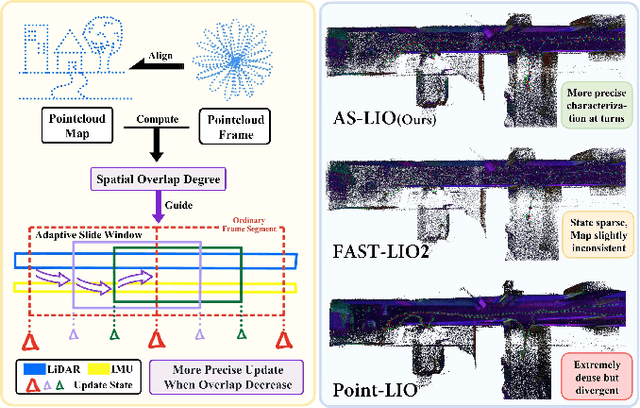

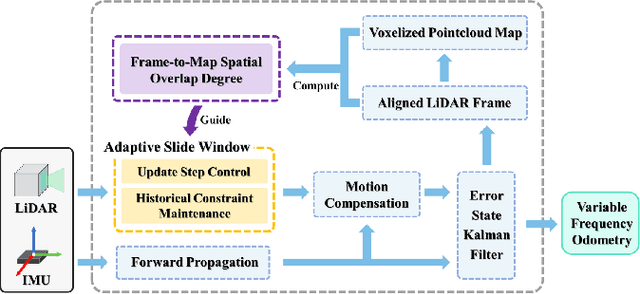

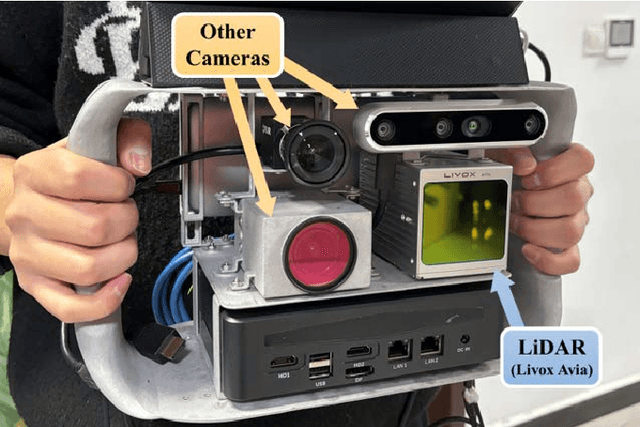

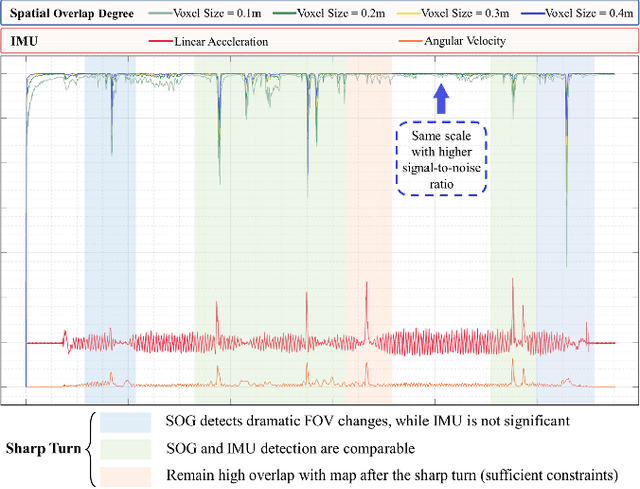

AS-LIO: Spatial Overlap Guided Adaptive Sliding Window LiDAR-Inertial Odometry for Aggressive FOV Variation

Aug 21, 2024

Abstract:LiDAR-Inertial Odometry (LIO) demonstrates outstanding accuracy and stability in general low-speed and smooth motion scenarios. However, in high-speed and intense motion scenarios, such as sharp turns, two primary challenges arise: first, due to the limitations of IMU frequency, the error in estimating significantly non-linear motion states escalates; second, drastic changes in the Field of View (FOV) may diminish the spatial overlap between the LiDAR frame and the pointcloud map (or between frames), leading to insufficient data association and constraint degradation. To address these issues, we propose a novel Adaptive Sliding window LIO framework (AS-LIO) guided by the Spatial Overlap Degree (SOD). Initially, we assess the SOD between the LiDAR frames and the registered map, directly evaluating the adverse impact of the current FOV variation on pointcloud alignment. Subsequently, we design an adaptive sliding window to manage the continuous LiDAR stream and control state updates, dynamically adjusting the update step according to the SOD. This strategy enables our odometry to adaptively adopt a higher update frequency to precisely characterize the trajectory during aggressive FOV variation, thus effectively reducing the non-linear error in positioning. Meanwhile, the historical constraints within the sliding window reinforce the frame-to-map data association, ensuring the robustness of state estimation. Experiments show that our AS-LIO framework can quickly perceive and respond to challenging FOV changes, outperforming other state-of-the-art LIO frameworks in terms of accuracy and robustness.
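
As a rough illustration of overlap-guided step control, the sketch below makes several assumptions that the abstract does not state: SOD is taken to be the fraction of scan points that fall into voxels already occupied by the map, and the update step is a linear function of that fraction. The function names, the voxel size, and `min_fraction` are all hypothetical, and the scan is assumed to be already expressed in the map frame.

```python
import numpy as np

def voxel_index(points, voxel_size):
    """Integer voxel coordinates of each 3D point."""
    pts = np.asarray(points, dtype=float).reshape(-1, 3)
    return np.floor(pts / voxel_size).astype(np.int64)

def spatial_overlap_degree(scan, map_points, voxel_size=0.5):
    """Assumed SOD: share of scan points landing in occupied map voxels."""
    occupied = {tuple(v) for v in voxel_index(map_points, voxel_size)}
    scan_voxels = voxel_index(scan, voxel_size)
    if len(scan_voxels) == 0:
        return 0.0
    hits = sum(tuple(v) in occupied for v in scan_voxels)
    return hits / len(scan_voxels)

def points_per_update(sod, n_points, min_fraction=0.25):
    """Lower overlap gives a smaller step, so states are updated more often.

    The linear mapping is an illustrative choice, not the paper's rule.
    """
    fraction = min_fraction + (1.0 - min_fraction) * sod
    return max(1, int(round(fraction * n_points)))
```

With full overlap the whole scan is consumed in one update. With an SOD of 0.5 and the default `min_fraction`, a 1000-point scan would be processed in chunks of 625 points.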
