Xiya Cao

Towards personalized, precise and survey-free environment recognition: AI-enhanced sensor fusion without pre-deployment

Sep 16, 2025

Abstract:Accurate and personalized environment recognition is essential for seamless indoor positioning and optimized connectivity, yet traditional fingerprinting requires costly site surveys and lacks user-level adaptation. We present a survey-free, on-device sensor-fusion framework that builds a personalized, lightweight multi-source fingerprint (FP) database from pedestrian dead reckoning (PDR), WiFi/cellular, GNSS, and interaction time tags. Matching is performed by an AI-enhanced dynamic time warping module (AIDTW) that aligns noisy, asynchronous sequences. To turn perception into continually improving actions, a cloud-edge online Reinforcement Learning from Human Feedback (RLHF) loop aggregates desensitized summaries and human feedback in the cloud to optimize a policy via proximal policy optimization (PPO), and periodically distills updates to devices. Across indoor/outdoor scenarios, our system reduces network-transition latency (measured by time-to-switch, TTS) by 32-65% in daily environments compared with conventional baselines, without site-specific pre-deployment.
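
For readers unfamiliar with the alignment step, the following is a minimal sketch of classic dynamic time warping on two 1-D sequences. It is not the AIDTW module, whose learned components the abstract does not describe; the function name `dtw_distance` and the absolute-difference cost are assumptions made for illustration.

```python
from math import inf


def dtw_distance(a, b):
    """Classic DTW cost between two 1-D sequences (illustrative, not AIDTW).

    Assumption: the local cost is the absolute difference between samples.
    """
    n, m = len(a), len(b)
    # cost[i][j] holds the minimal cumulative cost of aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            # Extend the cheapest of: step in a, step in b, or step in both.
            cost[i][j] = local + min(
                cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1]
            )
    return cost[n][m]
```

Because one sample may be matched to several samples of the other sequence, a signal and a time-stretched copy of it, such as `[1, 2, 3]` and `[1, 2, 2, 3]`, align at zero cost. This is the property that makes DTW a natural starting point for asynchronous sensor streams.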

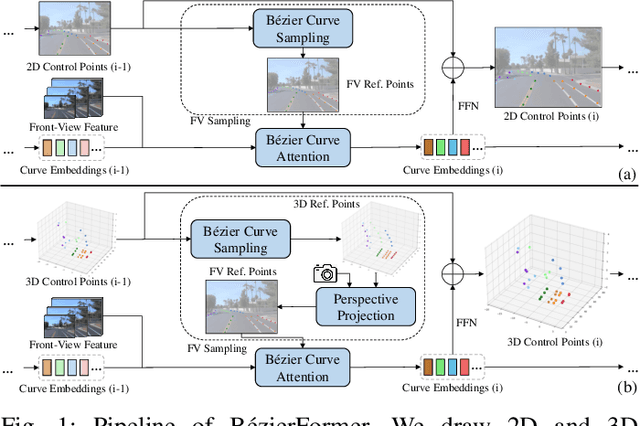

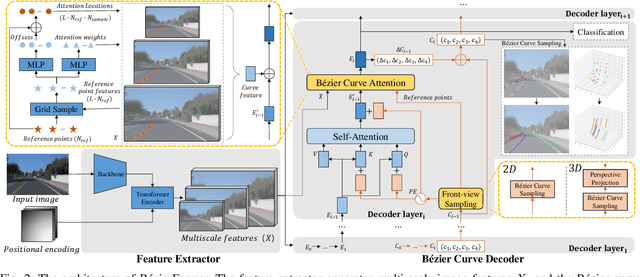

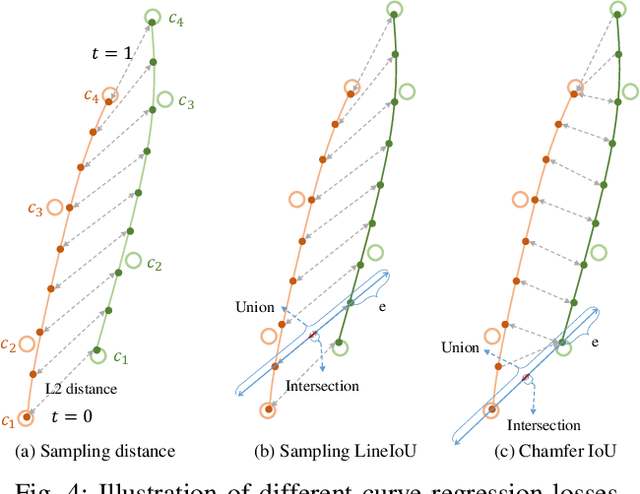

BezierFormer: A Unified Architecture for 2D and 3D Lane Detection

Apr 25, 2024

Abstract:Lane detection has made significant progress in recent years, but there is no unified architecture for its two sub-tasks: 2D lane detection and 3D lane detection. To fill this gap, we introduce BézierFormer, a unified 2D and 3D lane detection architecture based on Bézier curve lane representation. BézierFormer formulates queries as Bézier control points and incorporates a novel Bézier curve attention mechanism. This attention mechanism enables comprehensive and accurate feature extraction for slender lane curves by sampling and fusing multiple reference points on each curve. In addition, we propose a novel Chamfer IoU-based loss that is better suited to regressing Bézier control points. The state-of-the-art performance of BézierFormer on widely used 2D and 3D lane detection benchmarks verifies its effectiveness and suggests that further exploration is worthwhile.
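
To make the curve representation concrete, here is a minimal sketch of how reference points can be sampled from a set of Bézier control points, together with a plain symmetric Chamfer distance between two sampled curves. The helper names are hypothetical, and the Chamfer distance shown is the standard point-set version, not the Chamfer IoU-based loss from the paper, which the abstract does not define.

```python
from math import comb, hypot


def bezier_point(ctrl, t):
    """Evaluate a 2D Bezier curve at t in [0, 1] via the Bernstein basis."""
    n = len(ctrl) - 1
    x = y = 0.0
    for i, (px, py) in enumerate(ctrl):
        weight = comb(n, i) * (1 - t) ** (n - i) * t ** i
        x += weight * px
        y += weight * py
    return (x, y)


def sample_curve(ctrl, k):
    """Sample k >= 2 evenly spaced reference points (in t) along the curve."""
    return [bezier_point(ctrl, i / (k - 1)) for i in range(k)]


def chamfer_distance(p, q):
    """Symmetric Chamfer distance: mean nearest-neighbour distance, both ways."""
    def one_way(src, dst):
        return sum(
            min(hypot(sx - dx, sy - dy) for dx, dy in dst) for sx, sy in src
        ) / len(src)
    return one_way(p, q) + one_way(q, p)
```

For example, `sample_curve([(0, 0), (1, 2), (2, 0)], 5)` returns five points on a quadratic curve that starts at `(0, 0)` and ends at `(2, 0)`. Comparing sampled points instead of raw control points reflects the fact that different control points can produce similar-looking curves.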

A survey on deep learning approaches for data integration in autonomous driving system

Jun 17, 2023

Abstract:The perception module of self-driving vehicles relies on a multi-sensor system to understand its environment. Recent advancements in deep learning have led to the rapid development of approaches that integrate multi-sensory measurements to enhance perception capabilities. This paper surveys the latest deep learning integration techniques applied to the perception module in autonomous driving systems, categorizing integration approaches based on "what, how, and when to integrate." A new taxonomy of integration is proposed, based on three dimensions: multi-view, multi-modality, and multi-frame. The integration operations and their pros and cons are summarized, providing new insights into the properties of an "ideal" data integration approach that can alleviate the limitations of existing methods. After reviewing hundreds of relevant papers, this survey concludes with a discussion of the key features of an optimal data integration approach.

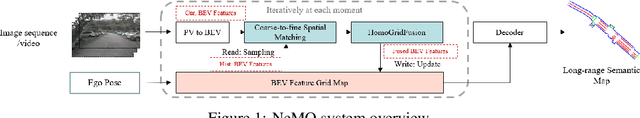

NeMO: Neural Map Growing System for Spatiotemporal Fusion in Bird's-Eye-View and BDD-Map Benchmark

Jun 07, 2023

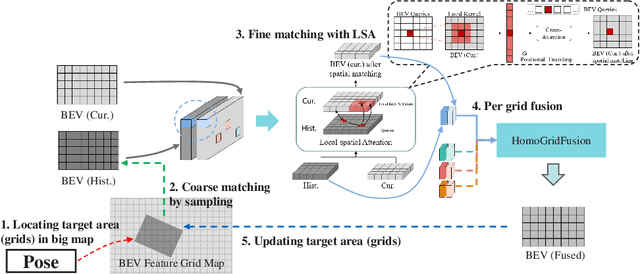

Abstract:Vision-centric Bird's-Eye View (BEV) representation is essential for autonomous driving systems (ADS). Multi-frame temporal fusion, which leverages historical information, has been demonstrated to provide more comprehensive perception results. While most research focuses on ego-centric maps of fixed settings, long-range local map generation remains less explored. This work outlines a new paradigm, named NeMO, for generating local maps using a readable and writable big map, a learning-based fusion module, and an interaction mechanism between the two. Assuming that the feature distribution of all BEV grids follows an identical pattern, we adopt a shared-weight neural network for all grids to update the big map. This paradigm supports the fusion of longer time series and the generation of long-range BEV local maps. Furthermore, we release BDD-Map, a BDD100K-based dataset incorporating map element annotations, including lane lines, boundaries, and pedestrian crossings. Experiments on the NuScenes and BDD-Map datasets demonstrate that NeMO outperforms state-of-the-art map segmentation methods. We also provide a new scene-level BEV map evaluation setting along with the corresponding baseline for a more comprehensive comparison.

RIO: Rotation-equivariance supervised learning of robust inertial odometry

Nov 23, 2021

Abstract:This paper introduces rotation-equivariance as a self-supervisor to train inertial odometry models. We demonstrate that the self-supervised scheme provides a powerful supervisory signal during training as well as at inference. It reduces the reliance on massive amounts of labeled data for training a robust model and makes it possible to update the model using various unlabeled data. Further, we propose adaptive Test-Time Training (TTT) based on uncertainty estimations to enhance the generalizability of the inertial odometry to various unseen data. We show in experiments that the Rotation-equivariance-supervised Inertial Odometry (RIO) trained with 30% of the data achieves performance on par with a model trained on the whole database. Adaptive TTT improves model performance in all cases and yields improvements of more than 25% under several scenarios.
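
The idea of using rotation-equivariance as a label-free signal can be sketched as follows: rotating the input window and then running the model should give the same result as running the model and then rotating its output. The code below is an illustration under stated assumptions, not the RIO training objective. It assumes planar (yaw) rotations, 2D samples, and a Euclidean error, and the two toy models are hypothetical stand-ins for a learned network.

```python
from math import cos, sin, hypot


def rotate(v, theta):
    """Rotate a 2D vector counter-clockwise by theta radians."""
    x, y = v
    return (cos(theta) * x - sin(theta) * y, sin(theta) * x + cos(theta) * y)


def equivariance_error(model, window, theta):
    """Distance between model(rotated input) and rotated model(input).

    Needs no ground-truth labels, so it can be computed on unlabeled data.
    """
    out_of_rotated = model([rotate(v, theta) for v in window])
    rotated_out = rotate(model(window), theta)
    return hypot(
        out_of_rotated[0] - rotated_out[0], out_of_rotated[1] - rotated_out[1]
    )


def mean_model(window):
    """Toy equivariant model: the mean of the window (linear in the input)."""
    n = len(window)
    return (sum(x for x, _ in window) / n, sum(y for _, y in window) / n)


def biased_model(window):
    """Toy non-equivariant model: forces the x component to be non-negative."""
    mx, my = mean_model(window)
    return (abs(mx), my)
```

On a window such as `[(1.0, 0.0), (0.0, 2.0), (-3.0, 1.0)]`, `mean_model` gives an error near zero for any angle, while `biased_model` gives a clearly non-zero error at most angles. A non-zero error of this kind is what a self-supervised loss would penalize.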
