Xinyu Mu

OmniRAG-Agent: Agentic Omnimodal Reasoning for Low-Resource Long Audio-Video Question Answering

Feb 03, 2026

Abstract: Long-horizon omnimodal question answering answers questions by reasoning over text, images, audio, and video. Despite recent progress on OmniLLMs, low-resource long audio-video QA still suffers from costly dense encoding, weak fine-grained retrieval, limited proactive planning, and no clear end-to-end optimization. To address these issues, we propose OmniRAG-Agent, an agentic omnimodal QA method for budgeted long audio-video reasoning. It builds an image-audio retrieval-augmented generation module that lets an OmniLLM fetch short, relevant frames and audio snippets from external banks. Moreover, it uses an agent loop that plans, calls tools across turns, and merges retrieved evidence to answer complex queries. Furthermore, we apply group relative policy optimization to jointly improve tool use and answer quality over time. Experiments on OmniVideoBench, WorldSense, and Daily-Omni show that OmniRAG-Agent consistently outperforms prior methods under low-resource settings and achieves strong results, with ablations validating each component.
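The abstract mentions group relative policy optimization without giving details. As a rough illustration only, the core idea of that family of methods is to score each sampled response against the other responses drawn for the same query. The sketch below assumes the commonly used mean/standard-deviation normalization; the function name `group_relative_advantages`, the `eps` constant, and the example rewards are hypothetical and are not taken from the paper.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each reward against its own sampling group.

    Assumption: advantage_i = (r_i - mean(r)) / (std(r) + eps),
    the usual group-relative form; the paper's exact variant may differ.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # population standard deviation of the group
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical rewards for four rollouts answering the same question.
rewards = [1.0, 0.0, 0.5, 1.0]
advantages = group_relative_advantages(rewards)
```

Rollouts that beat the group average receive a positive advantage and the rest a negative one, so no separate value network is needed to provide a baseline.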

KBQA-o1: Agentic Knowledge Base Question Answering with Monte Carlo Tree Search

Jan 31, 2025

Abstract: Knowledge Base Question Answering (KBQA) aims to answer natural language questions with a large-scale structured knowledge base (KB). Despite advancements with large language models (LLMs), KBQA still faces challenges in weak KB awareness, imbalance between effectiveness and efficiency, and high reliance on annotated data. To address these challenges, we propose KBQA-o1, a novel agentic KBQA method with Monte Carlo Tree Search (MCTS). It introduces a ReAct-based agent process for stepwise logical form generation with KB environment exploration. Moreover, it employs MCTS, a heuristic search method driven by policy and reward models, to balance agentic exploration's performance and search space. With heuristic exploration, KBQA-o1 generates high-quality annotations for further improvement by incremental fine-tuning. Experimental results show that KBQA-o1 outperforms previous low-resource KBQA methods with limited annotated data, boosting the Llama-3.1-8B model's GrailQA F1 score to 78.5%, compared to 48.5% for the previous state-of-the-art method with GPT-3.5-turbo.
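For readers unfamiliar with MCTS, the selection step can be sketched with the textbook UCT rule, which trades off a child's average value against how rarely it has been visited. This is a generic illustration, not KBQA-o1's actual scoring: the abstract says the search is driven by policy and reward models, whose form is not given here. The names `uct_score` and `select_child`, the dictionary layout, and the constant `c` are all hypothetical.

```python
import math


def uct_score(total_value, visits, parent_visits, c=1.4):
    """Textbook UCT: mean value plus an exploration bonus.

    Assumption: plain UCT; a policy-guided variant would add a prior term.
    """
    if visits == 0:
        return math.inf  # always try unvisited children first
    exploit = total_value / visits
    explore = c * math.sqrt(math.log(parent_visits) / visits)
    return exploit + explore


def select_child(children, parent_visits, c=1.4):
    """Pick the child with the highest UCT score."""
    return max(
        children,
        key=lambda ch: uct_score(ch["value"], ch["visits"], parent_visits, c),
    )


# Hypothetical candidate next steps of a logical form under one parent node.
children = [
    {"action": "extend_relation", "value": 3.0, "visits": 5},
    {"action": "add_constraint", "value": 1.0, "visits": 1},
    {"action": "finish", "value": 0.0, "visits": 0},
]
chosen = select_child(children, parent_visits=6)
```

With `c=0` the rule becomes purely greedy on average value; larger `c` widens the search, which is the performance versus search-space trade-off the abstract refers to.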

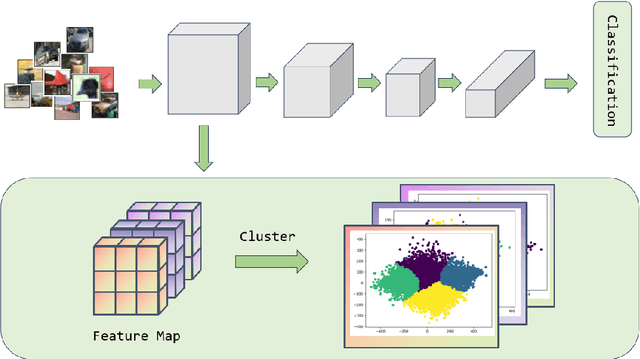

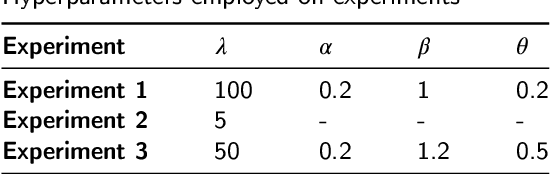

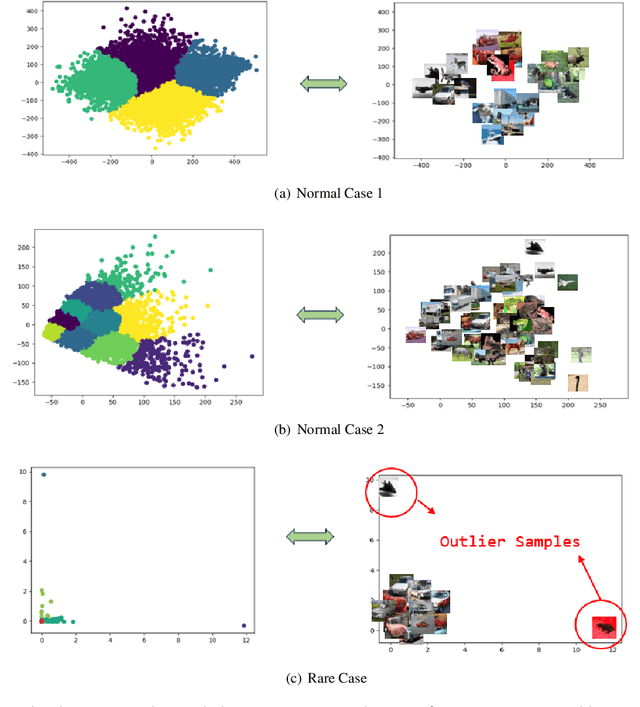

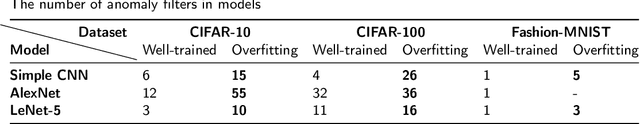

Explaining Model Overfitting in CNNs via GMM Clustering

Dec 12, 2024

Abstract: Convolutional Neural Networks (CNNs) have demonstrated remarkable prowess in the field of computer vision. However, their opaque decision-making processes pose significant challenges for practical applications. In this study, we provide quantitative metrics for assessing CNN filters by clustering the feature maps corresponding to individual filters in the model via a Gaussian Mixture Model (GMM). By analyzing the clustering results, we screen out some anomaly filters associated with outlier samples. We further analyze the relationship between the anomaly filters and model overfitting, proposing three hypotheses. This method is universally applicable across diverse CNN architectures without modifications, as evidenced by its successful application to models like AlexNet and LeNet-5. We present three meticulously designed experiments that support our hypotheses from the perspectives of model behavior, dataset characteristics, and filter impacts. Through this work, we offer a novel perspective for evaluating CNN performance and gain new insights into the operational behavior of model overfitting.
