Xinqi Li

Groupwise Registration with Physics-Informed Test-Time Adaptation on Multi-parametric Cardiac MRI

Oct 29, 2025

Abstract: Multiparametric mapping MRI has become a viable tool for myocardial tissue characterization. However, misalignment between multiparametric maps makes pixel-wise analysis challenging. To address this challenge, we developed a generalizable physics-informed deep-learning model that uses test-time adaptation to enable groupwise image registration across contrast-weighted images acquired from multiple physical models (e.g., a T1 mapping model and a T2 mapping model). The physics-informed adaptation uses synthetic images generated from a specific physics model as the registration reference, which allows transductive learning across various tissue contrasts. We validated the model in healthy volunteers with various MRI sequences, demonstrating improved multi-modal registration across a wide range of image contrast variability.
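The idea of using physics-model synthetic images as a registration reference can be illustrated with a minimal sketch. The abstract does not specify the signal models or the network, so everything here is an assumption made for illustration: a mono-exponential T2 decay, S(TE) = S0 · exp(−TE / T2), fitted per pixel by log-linear least squares, and the hypothetical function name `synthesize_t2_reference`. The registration network and the test-time adaptation loop are not shown.

```python
import numpy as np

def synthesize_t2_reference(images, echo_times):
    """Fit S(TE) = S0 * exp(-R2 * TE) per pixel and return model-synthesized images.

    images: array of shape (n_te, H, W), one image per echo time (assumed layout).
    echo_times: sequence of n_te echo times, in the same unit as 1 / R2.
    Returns (synthetic images with the same shape as `images`, R2 map of shape (H, W)).
    """
    images = np.asarray(images, dtype=float)
    te = np.asarray(echo_times, dtype=float)
    n_te = images.shape[0]

    # Log-linearize the model: ln S = ln S0 - R2 * TE (clip to avoid log(0)).
    log_signal = np.log(np.clip(images, 1e-6, None)).reshape(n_te, -1)
    design = np.stack([np.ones_like(te), -te], axis=1)  # columns: [1, -TE]
    coef, *_ = np.linalg.lstsq(design, log_signal, rcond=None)

    s0 = np.exp(coef[0])  # fitted S0 per pixel
    r2 = coef[1]          # fitted R2 = 1 / T2 per pixel

    # Synthetic images share the contrast of each acquired image but follow
    # the fitted model exactly, so they can serve as per-image references.
    synthetic = s0[None, :] * np.exp(-te[:, None] * r2[None, :])
    return synthetic.reshape(images.shape), r2.reshape(images.shape[1:])
```

In a setup like the one the abstract describes, each acquired image would presumably be registered toward its synthetic counterpart, and the fit would be repeated as alignment improves; that loop is an interpretation, not something the abstract states.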

Contrast-Agnostic Groupwise Registration by Robust PCA for Quantitative Cardiac MRI

Nov 03, 2023

Abstract: Quantitative cardiac magnetic resonance imaging (MRI) is an increasingly important diagnostic tool for cardiovascular diseases, and co-registration of all baseline images within the quantitative MRI sequence is essential for the accuracy and precision of quantitative maps. However, co-registering all baseline images from a quantitative cardiac MRI sequence remains a nontrivial task because of the simultaneous changes in intensity and contrast, in combination with cardiac and respiratory motion. To address this challenge, we propose a novel motion correction framework based on robust principal component analysis (rPCA) that decomposes quantitative cardiac MRI into low-rank and sparse components, and we integrate a groupwise CNN-based registration backbone within the rPCA framework. The low-rank component of rPCA corresponds to the quantitative mapping (i.e., limited degrees of freedom in variation), while the sparse component corresponds to the residual motion, making it easier to formulate and solve the groupwise registration problem. We evaluated our proposed method on cardiac T1 mapping by the modified Look-Locker inversion recovery (MOLLI) sequence, both before and after administration of the gadolinium contrast agent. Our experiments showed that our method effectively improved registration performance over baseline methods that do not use rPCA, and reduced quantitative mapping error in both in-domain (pre-contrast MOLLI) and out-of-domain (post-contrast MOLLI) inference. The proposed rPCA framework is generic and can be integrated with other registration backbones.
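The low-rank plus sparse decomposition at the core of rPCA can be sketched as follows. This is a generic principal component pursuit solver (alternating singular-value thresholding and soft thresholding with a fixed penalty), not the authors' implementation; the function names, the default parameters, and the assumed data layout (one vectorized baseline image per column) are illustrative, and the CNN registration backbone is not included.

```python
import numpy as np

def soft_threshold(x, tau):
    """Element-wise shrinkage toward zero by tau."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)

def svd_threshold(x, tau):
    """Shrink the singular values of x by tau (proximal step for the nuclear norm)."""
    u, s, vt = np.linalg.svd(x, full_matrices=False)
    return (u * soft_threshold(s, tau)) @ vt

def robust_pca(m, lam=None, mu=None, n_iter=500, tol=1e-7):
    """Split m into low_rank + sparse by principal component pursuit.

    m: 2-D array, e.g. (n_pixels, n_baseline_images) in the assumed layout.
    lam, mu: sparsity weight and penalty; the defaults are common heuristics.
    """
    m = np.asarray(m, dtype=float)
    if lam is None:
        lam = 1.0 / np.sqrt(max(m.shape))
    if mu is None:
        mu = m.size / (4.0 * np.abs(m).sum())

    low_rank = np.zeros_like(m)
    sparse = np.zeros_like(m)
    dual = np.zeros_like(m)
    norm_m = np.linalg.norm(m)

    for _ in range(n_iter):
        # Update each component with the other held fixed.
        low_rank = svd_threshold(m - sparse + dual / mu, 1.0 / mu)
        sparse = soft_threshold(m - low_rank + dual / mu, lam / mu)
        # Dual ascent on the constraint m = low_rank + sparse.
        residual = m - low_rank - sparse
        dual = dual + mu * residual
        if np.linalg.norm(residual) <= tol * norm_m:
            break
    return low_rank, sparse
```

Under the abstract's interpretation, the low-rank part would capture the smooth signal evolution across baseline images and the sparse part the residual motion; how the decomposition is coupled to the registration network is not described in the abstract.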

OneFlow: Redesign the Distributed Deep Learning Framework from Scratch

Oct 29, 2021

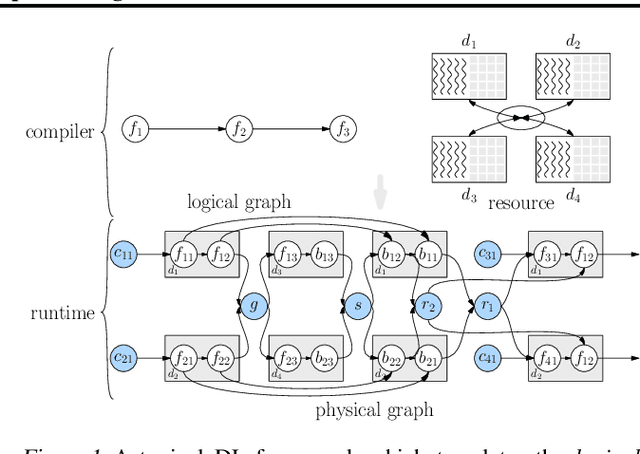

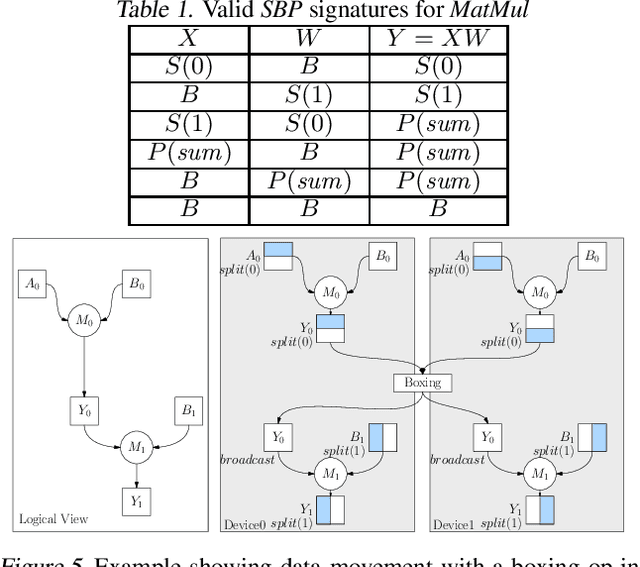

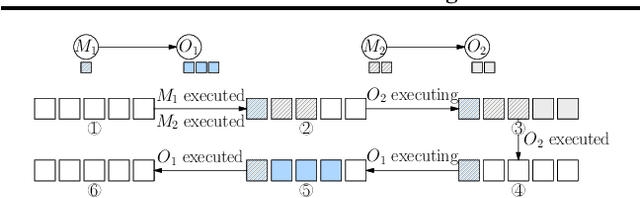

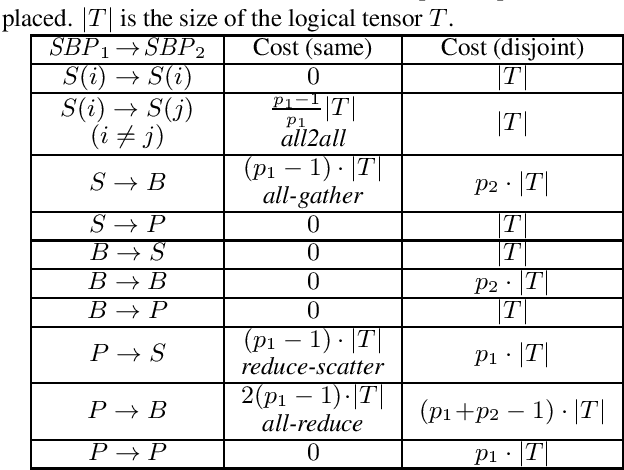

Abstract: Deep learning frameworks such as TensorFlow and PyTorch provide a productive interface for expressing and training a deep neural network (DNN) model on a single device or using data parallelism. Still, they may not be flexible or efficient enough in training emerging large models on distributed devices, which require more sophisticated parallelism beyond data parallelism. Plugins or wrappers have been developed to strengthen these frameworks for model or pipeline parallelism, but they complicate the usage and implementation of distributed deep learning. Aiming at a simple, neat redesign of distributed deep learning frameworks for various parallelism paradigms, we present OneFlow, a novel distributed training framework based on an SBP (split, broadcast and partial-value) abstraction and the actor model. SBP enables much easier programming of data parallelism and model parallelism than existing frameworks, and the actor model provides a succinct runtime mechanism to manage the complex dependencies imposed by resource constraints, data movement and computation in distributed deep learning. We demonstrate the general applicability and efficiency of OneFlow for training various large DNN models with case studies and extensive experiments. The results show that OneFlow outperforms many well-known customized libraries built on top of the state-of-the-art frameworks. The code of OneFlow is available at: https://github.com/Oneflow-Inc/oneflow.
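The three SBP placements named in the abstract can be illustrated on a single matrix multiplication. The sketch below simulates two devices with plain NumPy arrays and does not use the OneFlow API; the variable names and the two-device setup are assumptions chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
x = rng.standard_normal((4, 6))   # activations: (batch, features)
w = rng.standard_normal((6, 8))   # weights: (features, outputs)
expected = x @ w                  # single-device reference result
n_devices = 2

# Data parallelism: x is split along axis 0, w is broadcast to every device.
# Each device holds a row block of the output, so the output is split(0).
y_data_parallel = np.concatenate(
    [x_part @ w for x_part in np.split(x, n_devices, axis=0)], axis=0
)

# Model parallelism: x is broadcast, w is split along axis 1.
# Each device holds a column block of the output, so the output is split(1).
y_model_parallel = np.concatenate(
    [x @ w_part for w_part in np.split(w, n_devices, axis=1)], axis=1
)

# Partial value: x is split along axis 1 and w along axis 0.
# Each device holds a full-shaped partial result; summing them gives the output.
y_partial_sum = sum(
    x_part @ w_part
    for x_part, w_part in zip(
        np.split(x, n_devices, axis=1), np.split(w, n_devices, axis=0)
    )
)
```

All three placements produce the same logical tensor as `expected`; they differ only in how the operands and the result are laid out across devices, which is the distinction an SBP-style annotation is meant to capture.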

Zero-Shot Fine-Grained Classification by Deep Feature Learning with Semantics

Jul 04, 2017

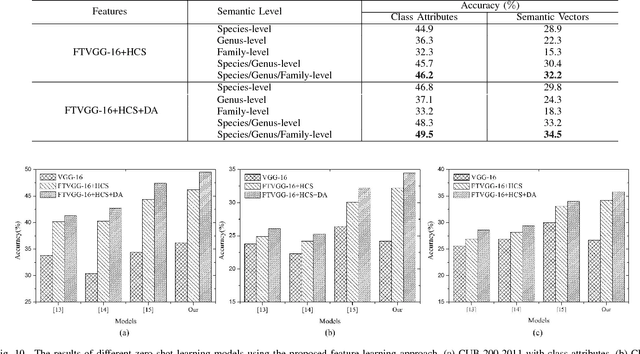

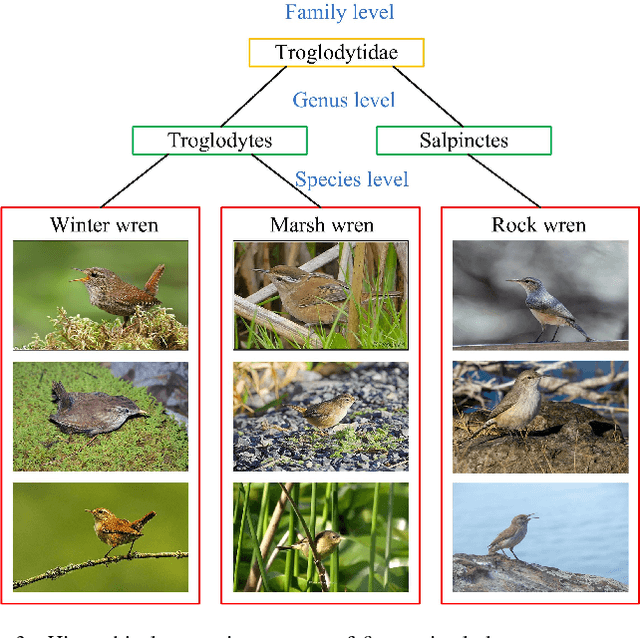

Abstract: Fine-grained image classification, which aims to distinguish images with subtle distinctions, is a challenging task due to two main issues: lack of sufficient training data for every class and difficulty in learning discriminative features for representation. In this paper, to address the two issues, we propose a two-phase framework for recognizing images from unseen fine-grained classes, i.e., zero-shot fine-grained classification. In the first phase, feature learning, we fine-tune deep convolutional neural networks using the hierarchical semantic structure among fine-grained classes to extract discriminative deep visual features. Meanwhile, a domain adaptation structure is introduced into the deep convolutional neural networks to avoid domain shift from training data to test data. In the second phase, label inference, a semantic directed graph is constructed over attributes of fine-grained classes. Based on this graph, we develop a label propagation algorithm to infer the labels of images in the unseen classes. Experimental results on two benchmark datasets demonstrate that our model outperforms the state-of-the-art zero-shot learning models. In addition, the features obtained by our feature learning model also yield significant gains when they are used by other zero-shot learning models, which shows the flexibility of our model in zero-shot fine-grained classification.
