Xinliang Wu

Self-Attention Empowered Graph Convolutional Network for Structure Learning and Node Embedding

Mar 06, 2024

Abstract:In representation learning on graph-structured data, many popular graph neural networks (GNNs) fail to capture long-range dependencies, leading to performance degradation. Furthermore, this weakness is magnified when the graph in question is characterized by heterophily (low homophily). To solve this issue, this paper proposes a novel graph learning framework called the graph convolutional network with self-attention (GCN-SA). The proposed scheme exhibits an exceptional generalization capability in node-level representation learning. The proposed GCN-SA contains two enhancements, corresponding to edges and node features. For edges, we utilize a self-attention mechanism to design a stable and effective graph-structure-learning module that can capture the internal correlation between any pair of nodes. This graph-structure-learning module can identify reliable neighbors for each node from the entire graph. For node features, we modify the transformer block so that it enables the GCN to fuse valuable information from the entire graph. These two enhancements work in distinct ways to help our GCN-SA capture long-range dependencies, enabling it to perform representation learning on graphs with varying levels of homophily. The experimental results on benchmark datasets demonstrate the effectiveness of the proposed GCN-SA. Compared to other outstanding GNN counterparts, the proposed GCN-SA is competitive.
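The idea of picking neighbors for each node from the entire graph by pairwise attention scores can be illustrated with a minimal sketch. This is not the GCN-SA module itself: it assumes raw node features serve as both queries and keys (no learned projections), and the top-`k` selection rule and the function name `attention_neighbors` are hypothetical choices made only for illustration.

```python
import math

def attention_neighbors(features, k):
    """Pick the k highest-scoring nodes for each node (hypothetical helper).

    Scores are scaled dot products of raw feature vectors; a real
    self-attention module would apply learned query/key projections first.
    """
    n = len(features)
    d = len(features[0])
    neighbors = []
    for i in range(n):
        scores = []
        for j in range(n):
            if j == i:
                continue  # a node is not its own neighbor in this sketch
            dot = sum(a * b for a, b in zip(features[i], features[j]))
            scores.append((dot / math.sqrt(d), j))
        # Highest score first; ties broken by node index for determinism.
        scores.sort(key=lambda s: (-s[0], s[1]))
        neighbors.append([j for _, j in scores[:k]])
    return neighbors

# Toy features: nodes 0 and 1 are similar, as are nodes 2 and 3.
features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
neighbors = attention_neighbors(features, k=1)
print(neighbors)
```

Because every pair of nodes is scored, a selected neighbor can be arbitrarily far away in the original graph, which is the property the abstract relies on for long-range dependencies.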

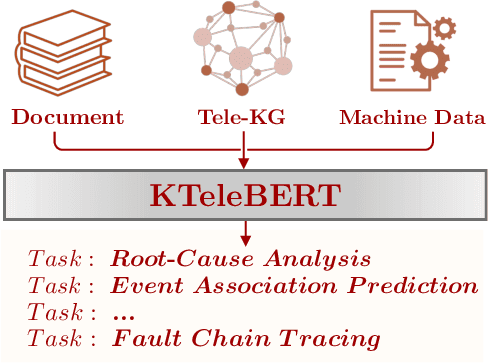

Tele-Knowledge Pre-training for Fault Analysis

Oct 20, 2022

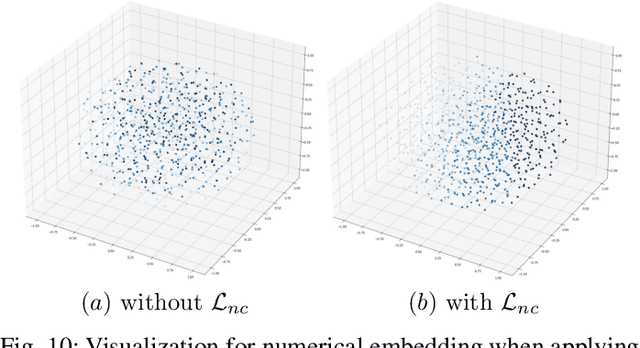

Abstract:In this work, we share our experience on tele-knowledge pre-training for fault analysis. Fault analysis is a vital task for tele-application, which should be timely and properly handled. Fault analysis is also a complex task that has many sub-tasks. Solving each task requires diverse tele-knowledge. Machine log data and product documents contain part of the tele-knowledge. We create a Tele-KG to organize other tele-knowledge from experts uniformly. With these valuable tele-knowledge data, in this work, we propose a tele-domain pre-training model TeleBERT and its knowledge-enhanced version KTeleBERT, which includes effective prompt hints, adaptive numerical data encoding, and two knowledge injection paradigms. We train our model in two stages: pre-training TeleBERT on 20 million telecommunication corpora and re-training TeleBERT on 1 million causal and machine corpora to obtain KTeleBERT. Then, we apply our models to three tasks of fault analysis: root-cause analysis, event association prediction, and fault chain tracing. The results show that with KTeleBERT, the performance of task models has been boosted, demonstrating the effectiveness of pre-trained KTeleBERT as a model containing diverse tele-knowledge.

SLGCN: Structure Learning Graph Convolutional Networks for Graphs under Heterophily

May 28, 2021

Abstract:The performance of GNNs for representation learning on graph-structured data is generally limited by the fact that existing GNNs rely on one assumption, i.e., that the original graph structure is reliable. However, since real-world graphs are inevitably noisy or incomplete, this assumption is often unrealistic. In this paper, we propose structure learning graph convolutional networks (SLGCNs) to alleviate the issue from two aspects, and the proposed approach is applied to node classification. Specifically, the first aspect is node features: we design an efficient-spectral-clustering with anchors (ESC-ANCH) approach to efficiently aggregate feature representations from all similar nodes, no matter how far away they are. The second aspect is edges: our approach generates a re-connected adjacency matrix according to the similarities between nodes, optimized for the downstream prediction task, to make up for the shortcomings of the original adjacency matrix, which usually provides misleading information for the aggregation step of GCN in graphs with a low level of homophily. Both the re-connected adjacency matrix and the original adjacency matrix are applied to SLGCNs to aggregate feature representations from nearby nodes. Thus, SLGCNs can be applied to graphs with various levels of homophily. Experimental results on a wide range of benchmark datasets illustrate that the proposed SLGCNs outperform the state-of-the-art GNN counterparts.
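The abstract refers to a graph's "level of homophily" without defining it. One common way to quantify it is the edge homophily ratio, the fraction of edges whose endpoints share a label. The sketch below assumes that measure; the abstract does not state which definition the paper uses, and the function name `edge_homophily` is hypothetical.

```python
def edge_homophily(edges, labels):
    """Fraction of edges whose two endpoints carry the same label.

    Assumed definition (edge homophily ratio); returns 0.0 for an
    edgeless graph to avoid division by zero.
    """
    if not edges:
        return 0.0
    same = sum(1 for u, v in edges if labels[u] == labels[v])
    return same / len(edges)

# Toy graph: two classes, four edges, two of them within a class.
labels = [0, 0, 1, 1]
edges = [(0, 1), (0, 2), (1, 3), (2, 3)]
ratio = edge_homophily(edges, labels)
print(ratio)  # 0.5
```

A ratio near 1 means neighbors mostly share labels, while a ratio near 0 indicates heterophily, the setting where aggregating over the original adjacency matrix is most likely to mislead.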

R-GSN: The Relation-based Graph Similar Network for Heterogeneous Graph

Mar 14, 2021

Abstract:Heterogeneous graphs are a kind of data structure that is widespread in real life. Research on graph neural networks for heterogeneous graphs has become increasingly popular. Existing heterogeneous graph neural network algorithms follow two main approaches: one is based on meta-paths and the other is not. The meta-path-based approach often requires a lot of manual preprocessing and is difficult to extend to large-scale graphs. In this paper, we propose a general heterogeneous message passing paradigm and design R-GSN, which does not need meta-paths and improves substantially on the baseline R-GCN. Experiments show that our R-GSN algorithm achieves state-of-the-art performance on the ogbn-mag large-scale heterogeneous graph dataset.
